This repository collects research papers for the survey Vision Language Models in Autonomous Driving: A Survey and Outlook. It is maintained by TUM-AIR and will be continuously updated to track the latest work in the community.

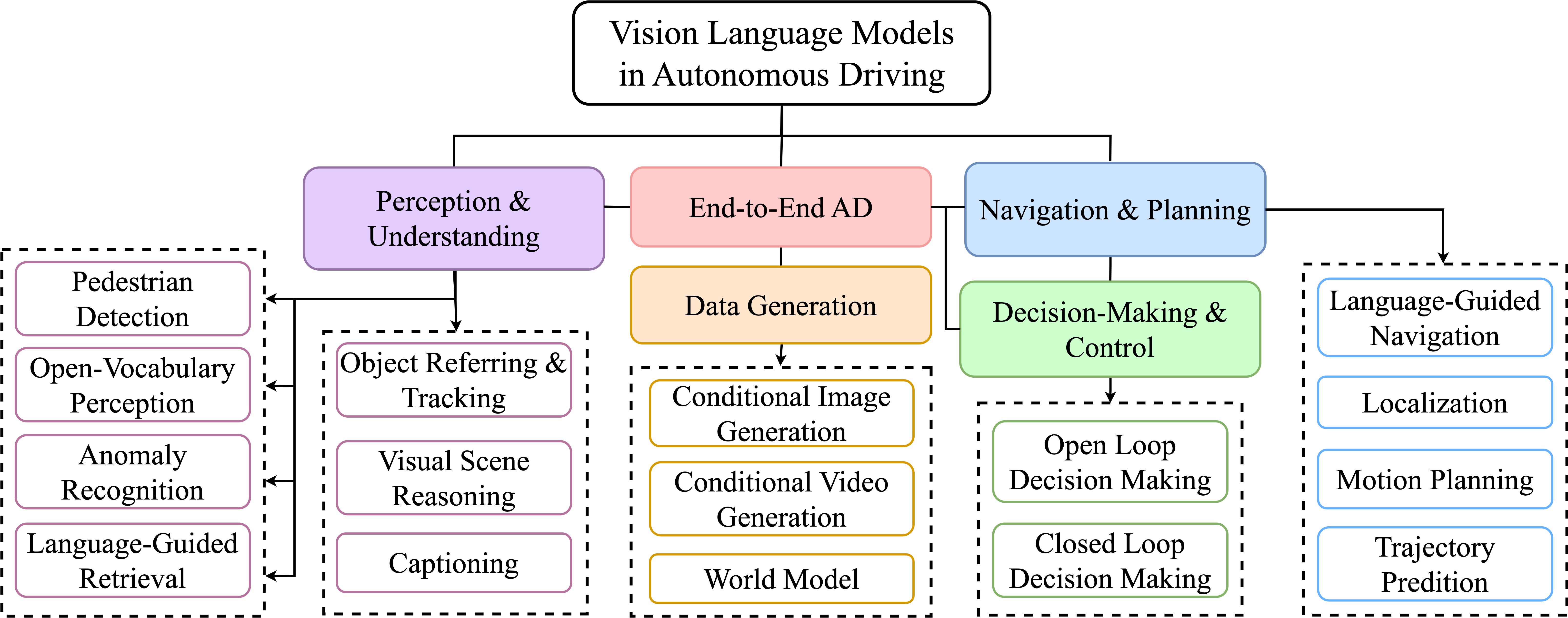

Keywords: Vision Language Model, Large Language Model, Autonomous Driving, Intelligent Vehicle, Conditional Data Generation, Decision Making, Language-guided Navigation, End-to-End Autonomous Driving

- [17.May.2024] Our paper has been accepted by IEEE Transactions on Intelligent Vehicles.

- [22.Oct.2023] ArXiv Version: Vision Language Models in Autonomous Driving and Intelligent Transportation Systems

Please visit Vision Language Models in Autonomous Driving: A Survey and Outlook for more details and comprehensive information. If you find our paper and repo helpful, please consider citing it as follows:

@ARTICLE{10531702,

author={Zhou, Xingcheng and Liu, Mingyu and Yurtsever, Ekim and Zagar, Bare Luka and Zimmer, Walter and Cao, Hu and Knoll, Alois C.},

journal={IEEE Transactions on Intelligent Vehicles},

title={Vision Language Models in Autonomous Driving: A Survey and Outlook},

year={2024},

pages={1-20},

keywords={Autonomous vehicles;Task analysis;Planning;Data models;Surveys;Computational modeling;Visualization;Vision Language Model;Large Language Model;Autonomous Driving;Intelligent Vehicle;Conditional Data Generation;Decision Making;Language-guided Navigation;End-to-End Autonomous Driving},

doi={10.1109/TIV.2024.3402136}}

The application of Vision-Language Models (VLMs) in Autonomous Driving (AD) and Intelligent Transportation Systems (ITS) has attracted widespread attention due to their outstanding performance and their ability to leverage Large Language Models (LLMs). By integrating language data, vehicles and transportation systems can understand real-world environments more deeply, improving driving safety and efficiency.

| Method | Year | Task | Code Link |

|---|---|---|---|

| Talk to the vehicle: Language conditioned autonomous navigation of self driving car | 2019 | Language-Guided Navigation | |

| Ground then Navigate: Language-guided Navigation in Dynamic Scenes | 2022 | Language-Guided Navigation | |

| ALT-Pilot: Autonomous navigation with Language augmented Topometric maps | 2023 | Vision-Language Localization, Language-Guided Navigation | Page |

| GPT-Driver: Learning to Drive with GPT | 2023 | Motion Planning | Github |

| Can you text what is happening? Integrating pre-trained language encoders into trajectory prediction models for autonomous driving | 2023 | Trajectory Prediction | |

| DriveVLM: The Convergence of Autonomous Driving and Large Vision-Language Models | 2024 | Trajectory Prediction, Motion Planning | Github |

| Text-to-Drive: Diverse Driving Behavior Synthesis via Large Language Models | 2024 | Trajectory Prediction, Motion Planning | Github |

| Method | Year | Task | Code Link |

|---|---|---|---|

| Advisable Learning for Self-driving Vehicles by Internalizing Observation-to-Action Rules | 2020 | Open-loop Decision-Making | |

| LanguageMPC: Large Language Models as Decision Makers for Autonomous Driving | 2023 | Open-loop Decision-Making | |

| Receive, Reason, and React: Drive as You Say with Large Language Models in Autonomous Vehicles | 2023 | Open-loop Decision-Making, Motion Planning | |

| Driving with LLMs: Fusing Object-Level Vector Modality for Explainable Autonomous Driving | 2023 | Open-loop Control, Visual Spatial Reasoning | Github |

| DiLu: A Knowledge-Driven Approach to Autonomous Driving with Large Language Models | 2023 | Closed-loop Decision-Making | |

| SurrealDriver: Designing Generative Driver Agent Simulation Framework in Urban Contexts based on Large Language Model | 2023 | Closed-loop Decision-Making | |

| Drive Like a Human: Rethinking Autonomous Driving with Large Language Models | 2024 | Closed-loop Decision-Making | Github |

| Method | Year | Task | Code Link |

|---|---|---|---|

| DriveGPT4: Interpretable End-to-end Autonomous Driving via Large Language Model | 2023 | Open-loop Control, Visual Question Answering | |

| ADAPT: Action-aware Driving Caption Transformer | 2023 | Open-loop Decision-Making, Visual Spatial Reasoning | Github |

| DriveMLM: Aligning Multi-Modal Large Language Models with Behavioral Planning States for Autonomous Driving | 2023 | Closed-loop Control | Github |

| VLP: Vision Language Planning for Autonomous Driving | 2023 | Open-loop Control, 3D Object Detection and Tracking | |

| CoVLA: Comprehensive Vision-Language-Action Dataset for Autonomous Driving | 2024 | Open-loop Control, Visual Spatial Reasoning | |

| Hint-AD: Holistically Aligned Interpretability in End-to-End Autonomous Driving | 2024 | Open-loop Control, Visual Spatial Reasoning | |

| MiniDrive: More Efficient Vision-Language Models with Multi-Level 2D Features as Text Tokens for Autonomous Driving | 2024 | Open-loop Control, Visual Spatial Reasoning | |

| Method | Year | Task | Code Link |

|---|---|---|---|

| DriveGAN: Towards a Controllable High-Quality Neural Simulation | 2021 | Conditional Video Generation | Page |

| GAIA-1: A Generative World Model for Autonomous Driving | 2023 | Conditional Video Generation | Page |

| DriveDreamer: Towards Real-world-driven World Models for Autonomous Driving | 2023 | Conditional Video Generation | Github |

| DrivingDiffusion: Layout-Guided multi-view driving scene video generation with latent diffusion model | 2023 | Conditional Multi-view Video Generation | Github |

| BEVControl: Accurately Controlling Street-view Elements with Multi-perspective Consistency via BEV Sketch Layout | 2023 | Conditional Image Generation | |

| DriveDreamer-2: LLM-Enhanced World Models for Diverse Driving Video Generation | 2023 | Conditional Video Generation | Github |

| DriveGenVLM: Real-world Video Generation for Vision Language Model-based Autonomous Driving | 2024 | Conditional Video Generation | |

| Method | Year | Task | Code Link |

|---|---|---|---|

| A Multi-granularity Retrieval System for Natural Language-based Vehicle Retrieval | 2022 | Language-Guided Vehicle Retrieval | Page |

| Tracked-Vehicle Retrieval by Natural Language Descriptions With Multi-Contextual Adaptive Knowledge | 2023 | Language-Guided Vehicle Retrieval | Page |

| A Unified Multi-modal Structure for Retrieving Tracked Vehicles through Natural Language Descriptions | 2023 | Language-Guided Vehicle Retrieval | Page |

| Traffic-Domain Video Question Answering with Automatic Captioning | 2023 | Image Captioning, Visual Question Answering | |

| Causality-aware Visual Scene Discovery for Cross-Modal Question Reasoning | 2023 | Visual Question Answering | |

| Tem-adapter: Adapting Image-Text Pretraining for Video Question Answer | 2023 | Visual Question Answering | Github |

| Delving into CLIP latent space for Video Anomaly Recognition | 2023 | Video Anomaly Recognition | Github |

| Method | Year | Task | Code Link |

|---|---|---|---|

| LLM Powered Sim-to-real Transfer for Traffic Signal Control | 2023 | Traffic Signal Control | |

| Dataset | Year | Task | Data Link |

|---|---|---|---|

| Pedestrian Detection: A Benchmark | 2009 | 2D OD | Link |

| Vision meets robotics: The KITTI dataset | 2012 | 2D/3D OD, SS, OT | Link |

| The Cityscapes Dataset for Semantic Urban Scene Understanding | 2016 | 2D/3D OD, SS | Link |

| CityPersons: A diverse dataset for pedestrian detection | 2017 | 2D OD | Link |

| SemanticKITTI: A Dataset for Semantic Scene Understanding of LiDAR Sequences | 2019 | 3D SS | Link |

| CityFlow: A city-scale benchmark for multi-target multi-camera vehicle tracking and re-identification | 2019 | OT, ReID | Link |

| nuScenes: A multimodal dataset for autonomous driving | 2020 | 2D/3D OD, 2D/3D SS, OT, MP | Link |

| BDD100K: A Diverse Driving Dataset for Heterogeneous Multitask Learning | 2020 | 2D OD, 2D SS, OT | Link |

| Scalability in Perception for Autonomous Driving: Waymo Open Dataset | 2020 | 2D/3D OD, 2D/3D SS, OT | Link |

| Dataset | Year | Task | Data Link |

|---|---|---|---|

| Future Frame Prediction for Anomaly Detection – A New Baseline | 2018 | Anomaly Detection | Link |

| Real-world Anomaly Detection in Surveillance Videos | 2018 | Anomaly Detection | Link |

| SUTD-TrafficQA: A question answering benchmark and an efficient network for video reasoning over traffic events | 2021 | Visual Question Answering | Link |

| AerialVLN: Vision-and-Language Navigation for UAVs | 2023 | Vision-Language Navigation | Link |

This repository is released under the Apache 2.0 license.