English | 简体中文

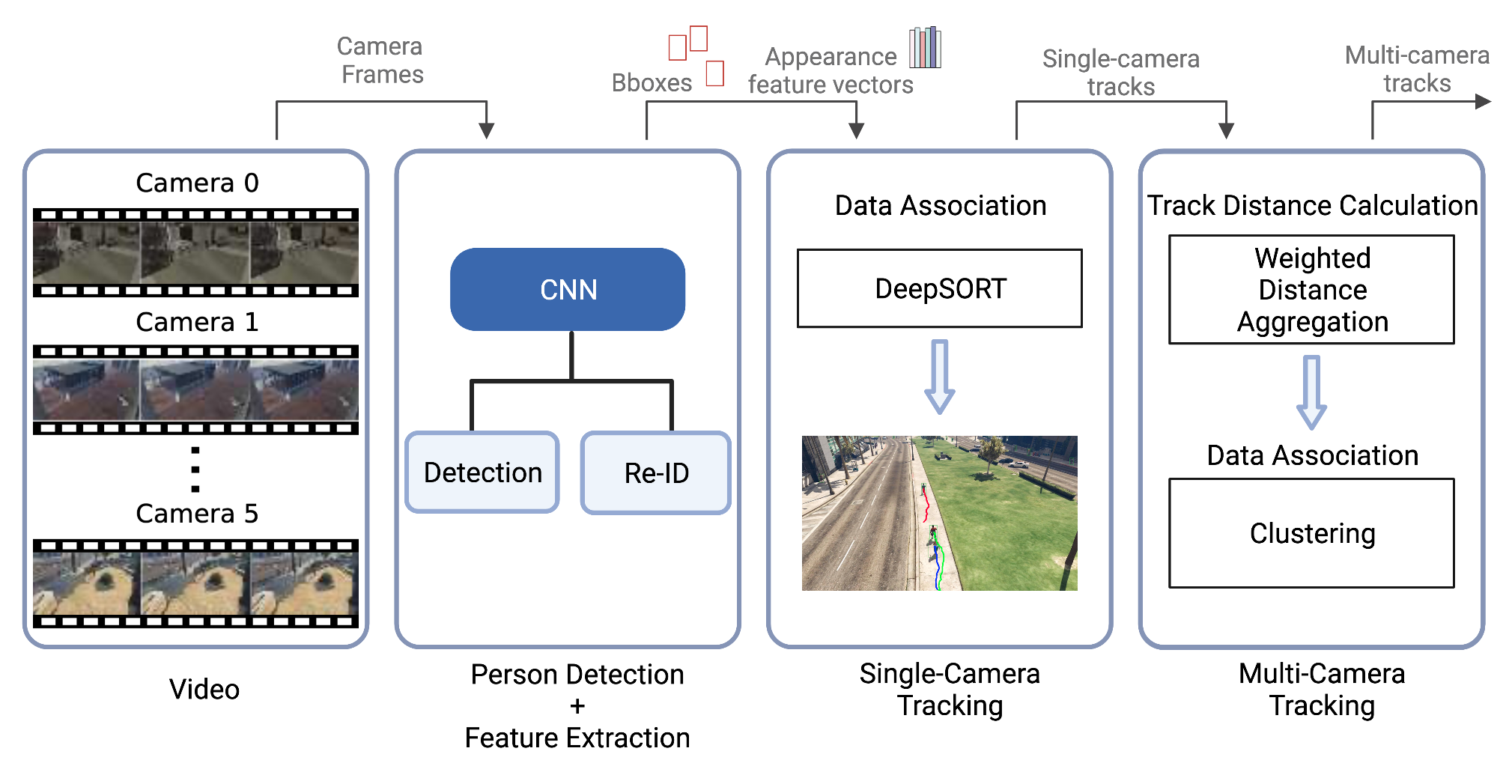

This project demonstrates the design and implementation of a Multi-Target Multi-Camera Tracking (MTMCT) solution.

Results compared with FairMOT and wda_tracker, with all methods trained and tested on a 6x2-minute MTA dataset:

| Method | Single-Camera MOTA | Single-Camera IDF1 | Single-Camera IDs | Single-Camera MT | Single-Camera ML | Multi-Camera MOTA | Multi-Camera IDF1 | Multi-Camera IDs | Multi-Camera MT | Multi-Camera ML |
|---|---|---|---|---|---|---|---|---|---|---|
| WDA | 58.2 | 37.3 | 534.2 | 16.8% | 17.2% | 46.6 | 19.8 | 563.8 | 6.5% | 7.0% |
| FairMOT | 64.1 | 48.0 | 588.2 | 34.7% | 7.8% | N/A | N/A | N/A | N/A | N/A |
| Ours | 70.8 | 47.8 | 470.2 | 40.5% | 5.6% | 65.6 | 31.5 | 494.5 | 31.2% | 1.1% |
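For readers unfamiliar with the column names, the sketch below shows how MOTA and IDF1 are commonly defined in the tracking literature (CLEAR MOT and identity metrics). It is an illustration only, not the evaluation code used to produce the table; the function names and the input counts are made up for the example.

```python
def mota(false_negatives, false_positives, id_switches, num_gt):
    """Multiple Object Tracking Accuracy: 1 - (FN + FP + IDSW) / GT.

    num_gt is the total number of ground-truth boxes over all frames.
    """
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    errors = false_negatives + false_positives + id_switches
    return 1.0 - errors / num_gt


def idf1(idtp, idfp, idfn):
    """ID F1 score: 2 * IDTP / (2 * IDTP + IDFP + IDFN)."""
    denominator = 2 * idtp + idfp + idfn
    if denominator == 0:
        raise ValueError("at least one count must be non-zero")
    return 2 * idtp / denominator


# Hypothetical counts, chosen only to make the arithmetic easy to follow.
example_mota = mota(false_negatives=10, false_positives=5, id_switches=5, num_gt=100)
example_idf1 = idf1(idtp=40, idfp=10, idfn=10)
```

In the same literature, IDs counts identity switches, and MT and ML are the shares of ground-truth trajectories that are mostly tracked and mostly lost. Higher is better for MOTA, IDF1 and MT; lower is better for IDs and ML.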

Demo on Multi Camera Track Auto (MTA) dataset

Demo GIFs can be seen here

Full-length demo videos can be found at: https://youtu.be/lS9YvbrhOdo

conda create -n mtmct python=3.7.7 -y

conda activate mtmct

pip install -r requirements.txt

Install dependencies for FairMOT:

cd trackers/fair

conda install pytorch==1.7.0 torchvision==0.8.0 cudatoolkit=10.2 -c pytorch

pip install cython

pip install -r requirements.txt

cd DCNv2

./make.sh

conda install -c conda-forge ffmpeg

Go to https://github.com/schuar-iosb/mta-dataset to download the MTA data. Alternatively, use another dataset that follows the same format.
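Before moving on, it can help to confirm that the tools installed above are visible from the active conda environment. The following is a minimal sketch that uses only the Python standard library. `check_environment` is a hypothetical helper, not part of this repository, and it only reports what it finds rather than validating versions.

```python
import importlib.util
import shutil
import sys


def check_environment(tools=("ffmpeg",), modules=("torch", "torchvision")):
    """Report which command-line tools and Python modules can be found.

    Returns a dict with the interpreter version, the resolved path of each
    tool (or None if it is not on PATH), and a bool per module.
    """
    report = {"python": "{}.{}.{}".format(*sys.version_info[:3])}
    for tool in tools:
        # shutil.which returns None when the executable is not on PATH.
        report[tool] = shutil.which(tool)
    for module in modules:
        # find_spec locates a top-level module without importing it.
        report[module] = importlib.util.find_spec(module) is not None
    return report


if __name__ == "__main__":
    for name, status in check_environment().items():
        print(name, "->", status)
```

A `None` or `False` entry suggests that the corresponding install step did not take effect in the current environment.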

To customize a run, edit the config files under tracker_configs and clustering_configs. Create a work_dirs directory, and see FairMOT and wda_tracker for more instructions.

For example, in configs/tracker_configs/fair_high_30e, set data -> source -> base_folder to your dataset location.
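The `data -> source -> base_folder` notation reads as a chain of nested keys. The sketch below assumes the config can be represented as a nested Python dict; the actual file format and its other keys are not shown in this README, so `example_config`, `set_nested`, and the `/datasets/MTA` path are all hypothetical.

```python
from copy import deepcopy


def set_nested(config, keys, value):
    """Return a copy of config with the value at the nested key path replaced."""
    updated = deepcopy(config)
    node = updated
    for key in keys[:-1]:
        # Walk down one level, creating intermediate dicts if they are missing.
        node = node.setdefault(key, {})
    node[keys[-1]] = value
    return updated


# Hypothetical config fragment containing only the keys named in the text.
example_config = {"data": {"source": {"base_folder": "/path/to/dataset"}}}

custom_config = set_nested(
    example_config, ["data", "source", "base_folder"], "/datasets/MTA"
)
```

Working on a copy leaves the original mapping untouched, which makes it easier to keep one base config and derive per-run variants from it.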

Run single-camera and multi-camera tracking with one script:

sh start.sh fair_high_30e

Modify the config files under tracker_configs and clustering_configs for customization. More instructions can be found at FairMOT and wda_tracker.

A large part of the code is borrowed from FairMOT and wda_tracker. The dataset used is MTA.

Ruizhe Zhang is the author of this repository and the corresponding report. The copyright belongs to the Wireless System Research Group (WiSeR), McMaster University.