DeepLabv3 and DeepLabv3+ with pretrained models for Pascal VOC, Cityscapes, and ADE20K

Specify the model architecture with '--model ARCH_NAME' and set the output stride using '--output_stride OUTPUT_STRIDE'.

| DeepLabV3 | DeepLabV3+ |
|---|---|
| deeplabv3_resnet50 | deeplabv3plus_resnet50 |
| deeplabv3_resnet101 | deeplabv3plus_resnet101 |
| deeplabv3_mobilenet | deeplabv3plus_mobilenet |
| deeplabv3_hrnetv2_48 | deeplabv3plus_hrnetv2_48 |
| deeplabv3_hrnetv2_32 | deeplabv3plus_hrnetv2_32 |
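As a rough guide to what '--output_stride' controls, the sketch below estimates the spatial size of the backbone's final feature map for a given input size. It assumes every stride-2 stage is padded so that a side of length n becomes ceil(n / 2); the helper name `feature_map_size` is illustrative and not part of this repo.

```python
import math


def feature_map_size(input_size: int, output_stride: int) -> int:
    """Estimate the side length of the final feature map.

    Assumption: each stride-2 stage maps a side of length n to ceil(n / 2),
    so the overall reduction is ceil(input_size / output_stride).
    """
    return math.ceil(input_size / output_stride)


if __name__ == "__main__":
    for size in (513, 768):
        for stride in (8, 16):
            side = feature_map_size(size, stride)
            print(f"input {size}x{size}, output stride {stride}: {side}x{side}")
```

A smaller output stride keeps a denser feature map, which generally costs more memory and compute.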

All pretrained models: Dropbox, Tencent Weiyun

Note: The HRNet backbone was contributed by @timothylimyl. A pre-trained backbone is available on Google Drive.

Load a pretrained checkpoint and visualize the segmentation output:

```python
import torch
from PIL import Image

# Assumes `model`, `images`, `val_dst` (the validation dataset) and CKPT_PATH are already defined.
model.load_state_dict(torch.load(CKPT_PATH)['model_state'])
outputs = model(images)
preds = outputs.max(1)[1].detach().cpu().numpy()  # per-pixel class indices, (N, H, W)
colorized_preds = val_dst.decode_target(preds).astype('uint8')  # To RGB images, (N, H, W, 3), ranged 0~255, numpy array
# Do whatever you like here with the colorized segmentation maps
colorized_preds = Image.fromarray(colorized_preds[0])  # to PIL Image
```

Note: pre-trained models in this repo do not use Separable Conv.

Atrous Separable Convolution is supported in this repo. We provide a simple tool, network.convert_to_separable_conv, to convert nn.Conv2d to AtrousSeparableConvolution. Please run main.py with '--separable_conv' if it is required. See 'main.py' and 'network/_deeplab.py' for more details.
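To give a sense of why a separable convolution is cheaper, the following sketch counts weights for a standard k x k convolution versus a depthwise convolution followed by a 1x1 pointwise convolution, which is the usual structure of a separable convolution. The function names and the 256-channel example are illustrative and not taken from this repo. Biases are ignored, and the dilation (atrous) rate does not change either count.

```python
def conv_params(in_ch: int, out_ch: int, kernel_size: int) -> int:
    """Weights in a standard k x k convolution (bias ignored)."""
    return kernel_size * kernel_size * in_ch * out_ch


def separable_conv_params(in_ch: int, out_ch: int, kernel_size: int) -> int:
    """Weights in a depthwise k x k conv followed by a 1x1 pointwise conv."""
    depthwise = kernel_size * kernel_size * in_ch  # one k x k filter per input channel
    pointwise = in_ch * out_ch                     # 1x1 conv that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    standard = conv_params(256, 256, 3)
    separable = separable_conv_params(256, 256, 3)
    print(standard)   # 589824
    print(separable)  # 67840
```

For this example the separable version needs roughly 8.7 times fewer weights.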

Single image:

```bash
python predict.py --input datasets/data/cityscapes/leftImg8bit/train/bremen/bremen_000000_000019_leftImg8bit.png --dataset cityscapes --model deeplabv3plus_mobilenet --ckpt checkpoints/best_deeplabv3plus_mobilenet_cityscapes_os16.pth --save_val_results_to test_results
```

Image folder:

```bash
python predict.py --input datasets/data/cityscapes/leftImg8bit/train/bremen --dataset cityscapes --model deeplabv3plus_mobilenet --ckpt checkpoints/best_deeplabv3plus_mobilenet_cityscapes_os16.pth --save_val_results_to test_results
```

Training: 513x513 random crop

Validation: 513x513 center crop

| Model | Batch Size | FLOPs | train/val OS | mIoU | Dropbox | Tencent Weiyun |
|---|---|---|---|---|---|---|
| DeepLabV3-MobileNet | 16 | 6.0G | 16/16 | 0.701 | Download | Download |
| DeepLabV3-ResNet50 | 16 | 51.4G | 16/16 | 0.769 | Download | Download |
| DeepLabV3-ResNet101 | 16 | 72.1G | 16/16 | 0.773 | Download | Download |
| DeepLabV3Plus-MobileNet | 16 | 17.0G | 16/16 | 0.711 | Download | Download |
| DeepLabV3Plus-ResNet50 | 16 | 62.7G | 16/16 | 0.772 | Download | Download |
| DeepLabV3Plus-ResNet101 | 16 | 83.4G | 16/16 | 0.783 | Download | Download |

Training: 768x768 random crop

Validation: 1024x2048

| Model | Batch Size | FLOPs | train/val OS | mIoU | Dropbox | Tencent Weiyun |
|---|---|---|---|---|---|---|
| DeepLabV3Plus-MobileNet | 16 | 135G | 16/16 | 0.721 | Download | Download |
| DeepLabV3Plus-ResNet101 | 16 | N/A | 16/16 | 0.762 | Download | Coming Soon |

Install the dependencies:

```bash
pip install -r requirements.txt
```

You can run main.py with the "--download" option to download and extract the Pascal VOC dataset. The default path is './datasets/data':

```
/datasets
    /data
        /VOCdevkit
            /VOC2012
                /SegmentationClass
                /JPEGImages
                ...
            ...
        /VOCtrainval_11-May-2012.tar
        ...
```

See chapter 4 of [2]

The original dataset contains 1464 (train), 1449 (val), and 1456 (test) pixel-level annotated images. We augment the dataset by the extra annotations provided by [76], resulting in 10582 (trainaug) training images. The performance is measured in terms of pixel intersection-over-union averaged across the 21 classes (mIOU).
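To make the mIoU metric concrete, here is a minimal NumPy sketch that accumulates a confusion matrix and averages the per-class intersection-over-union. It is an illustration under stated assumptions, not necessarily how this repo computes its metrics: the function names are hypothetical, labels outside `[0, num_classes)` (for example an ignore index of 255) are skipped, and classes that appear in neither the ground truth nor the prediction are left out of the average.

```python
import numpy as np


def confusion_matrix(label_true, label_pred, num_classes):
    """Rows are ground-truth classes, columns are predicted classes."""
    label_true = np.asarray(label_true, dtype=np.int64).ravel()
    label_pred = np.asarray(label_pred, dtype=np.int64).ravel()
    # Assumption: labels outside [0, num_classes) mark pixels to ignore.
    valid = (label_true >= 0) & (label_true < num_classes)
    index = num_classes * label_true[valid] + label_pred[valid]
    counts = np.bincount(index, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)


def mean_iou(hist):
    """Average IoU over the classes that occur in ground truth or prediction."""
    intersection = np.diag(hist)
    union = hist.sum(axis=1) + hist.sum(axis=0) - intersection
    with np.errstate(divide="ignore", invalid="ignore"):
        iou = intersection / union  # NaN for classes with an empty union
    return float(np.nanmean(iou))


if __name__ == "__main__":
    truth = [[0, 0, 1], [1, 2, 255]]  # 255 is treated as "ignore"
    pred = [[0, 1, 1], [1, 2, 2]]
    hist = confusion_matrix(truth, pred, num_classes=3)
    print(mean_iou(hist))
```

In the toy example the per-class IoUs are 1/2, 2/3, and 1, so the mean is 13/18.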

./datasets/data/train_aug.txt lists the file names of the 10582 trainaug images (val images are excluded). Please download their labels from Dropbox or Tencent Weiyun. Those labels come from DrSleep's repo.

Extract trainaug labels (SegmentationClassAug) to the VOC2012 directory.

```
/datasets
    /data
        /VOCdevkit
            /VOC2012
                /SegmentationClass
                /SegmentationClassAug  # <= the trainaug labels
                /JPEGImages
                ...
            ...
        /VOCtrainval_11-May-2012.tar
        ...
```
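Before starting a long training run, it can help to confirm that the directory layout above is in place. The following is a small sketch using only the standard library; the helper `missing_voc_dirs` is hypothetical and not part of this repo, and it checks only the three directories named in the layout.

```python
from pathlib import Path

# Directories expected under VOCdevkit/VOC2012, taken from the layout above.
EXPECTED_DIRS = ["SegmentationClass", "SegmentationClassAug", "JPEGImages"]


def missing_voc_dirs(data_root):
    """Return the expected VOC2012 sub-directories that do not exist."""
    voc_root = Path(data_root) / "VOCdevkit" / "VOC2012"
    return [name for name in EXPECTED_DIRS if not (voc_root / name).is_dir()]


if __name__ == "__main__":
    missing = missing_voc_dirs("./datasets/data")
    if missing:
        print("Missing directories:", ", ".join(missing))
    else:
        print("VOC2012 layout looks complete.")
```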

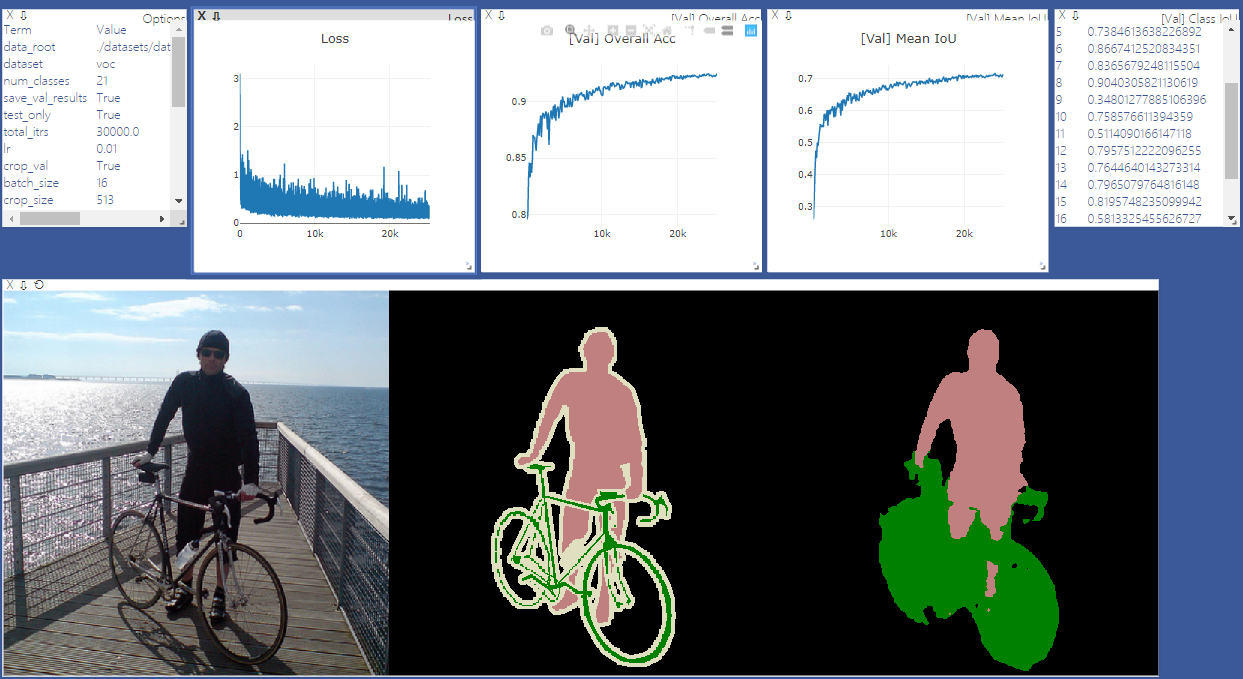

Start the visdom server for visualization. Please remove '--enable_vis' if visualization is not needed.

```bash
# Run visdom server on port 28333
visdom -port 28333
```

Run main.py with "--year 2012_aug" to train your model on Pascal VOC2012 Aug. You can also parallelize training across 4 GPUs with '--gpu_id 0,1,2,3'.

Note: There is no SyncBN in this repo, so training with multiple GPUs and a small batch size may degrade performance. See PyTorch-Encoding for more details about SyncBN.
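The reasoning behind this note can be shown with a little arithmetic. Assuming data-parallel training that splits each batch across the listed GPUs (as torch.nn.DataParallel does), every BatchNorm layer without SyncBN computes its statistics on only its own share of the batch. The helper below is a hypothetical illustration, not code from this repo.

```python
import math


def per_gpu_batch(batch_size: int, gpu_id: str) -> int:
    """Largest number of samples a single GPU sees per step.

    Assumption: the batch is split as evenly as possible across the GPUs
    listed in a comma-separated string such as "0,1,2,3".
    """
    gpus = [g for g in gpu_id.split(",") if g.strip()]
    return math.ceil(batch_size / len(gpus))


if __name__ == "__main__":
    print(per_gpu_batch(16, "0"))        # 16
    print(per_gpu_batch(16, "0,1,2,3"))  # 4
```

With '--batch_size 16' on 4 GPUs, each BatchNorm layer normalizes over 4 images instead of 16, which makes its statistics noisier.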

```bash
python main.py --model deeplabv3plus_mobilenet --enable_vis --vis_port 28333 --gpu_id 0 --year 2012_aug --crop_val --lr 0.01 --crop_size 513 --batch_size 16 --output_stride 16
```

Run main.py with '--continue_training' to restore the state_dict of the optimizer and scheduler from YOUR_CKPT.

```bash
python main.py ... --ckpt YOUR_CKPT --continue_training
```

Results will be saved at ./results.

```bash
python main.py --model deeplabv3plus_mobilenet --enable_vis --vis_port 28333 --gpu_id 0 --year 2012_aug --crop_val --lr 0.01 --crop_size 513 --batch_size 16 --output_stride 16 --ckpt checkpoints/best_deeplabv3plus_mobilenet_voc_os16.pth --test_only --save_val_results
```

For Cityscapes, extract the dataset to './datasets/data/cityscapes':

```
/datasets
    /data
        /cityscapes
            /gtFine
            /leftImg8bit
```

Train on Cityscapes:

```bash
python main.py --model deeplabv3plus_mobilenet --dataset cityscapes --enable_vis --vis_port 28333 --gpu_id 0 --lr 0.1 --crop_size 768 --batch_size 16 --output_stride 16 --data_root ./datasets/data/cityscapes
```

Download ADE20K:

```bash
cd deeplabv3_pytorch-ade20k
chmod +x download_ADE20K.sh
./download_ADE20K.sh
```

```
/datasets
    /data
        /ade20k
            /ADEChallengeData2016
                /annotations
                    /training
                    /validation
                /images
                    /training
                    /validation
                /objectInfo150.txt
                /sceneCategories.txt
```

Train on ADE20K:

```bash
cd deeplabv3_pytorch-ade20k
python main.py --dataset ade20k --gpu 0 --lr 0.05
```

Predict on a video:

```bash
cd deeplabv3_pytorch-ade20k
python predict.py --input ~/deeplabv3/deeplabv3_pytorch-ade20k/input/syscon2.mov --input_type video --dataset ade20k --model deeplabv3plus_mobilenet --ckpt checkpoints/best_deeplabv3plus_mobilenet_ade20k_os16.pth --save_val_results_to test_results/220728_syscon
```

Predict on a single image:

```bash
cd deeplabv3_pytorch-ade20k
python predict.py --input /home/jaewan/deeplabv3/DeepLabV3Plus-Pytorch/input/syscon.jpg --dataset ade20k --model deeplabv3plus_mobilenet --ckpt checkpoints/best_deeplabv3plus_mobilenet_ade20k_os16.pth --save_val_results_to test_results/220728_syscon
```
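The predict.py commands in this README pass either a single file or a folder to '--input'. As a rough illustration of how such an argument could be expanded into a list of image files, here is a standard-library sketch. It is not the repo's implementation: the helper name `collect_inputs` and the set of accepted extensions are assumptions.

```python
from pathlib import Path

# Assumption: which file extensions count as images.
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg"}


def collect_inputs(input_path):
    """Return image files for a folder, the file itself for a file, else []."""
    path = Path(input_path)
    if path.is_dir():
        # Search the folder recursively and keep only image files.
        return sorted(
            p for p in path.rglob("*")
            if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS
        )
    if path.is_file():
        return [path]
    return []


if __name__ == "__main__":
    for image_path in collect_inputs("datasets/data/cityscapes/leftImg8bit/train/bremen"):
        print(image_path)
```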

## Reference

[1] [Rethinking Atrous Convolution for Semantic Image Segmentation](https://arxiv.org/abs/1706.05587)

[2] [Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation](https://arxiv.org/abs/1802.02611)