Michaël Gharbi (mgharbi@adobe.com), Tzu-Mao Li, Miika Aittala, Jaakko Lehtinen, Frédo Durand

Check out our project page.

The quickest way to get started is to run the code from a Docker image. Proceed as follows:

- Download and install Docker on your machine.

- Allow docker to be executed without sudo:

  - Add your username to the docker group:

        sudo usermod -aG docker ${USER}

  - To apply the new group membership, log out of the server and back in, or type the following:

        su - ${USER}

  - Confirm that your user is now added to the docker group by typing:

        id -nG

- To enable GPU acceleration in your Docker instance, install the NVIDIA Container Toolkit: https://github.com/NVIDIA/nvidia-docker. We provide a shortcut to install it:

      make nvidia_docker

- Once these prerequisites are installed, you can build a pre-configured Docker image and run it:

      make docker_build
      make docker_run

  If all goes well, this will launch a shell on the Docker instance, and you should not have to worry about configuring the Linux or Python environment.

  Alternatively, you can build a CPU-only version of the Docker image:

      make docker_build_cpu
      make docker_run_cpu

- (Optional) From within the running Docker instance, run the package's tests:

      make test

- Again within the Docker instance, try a few demo commands, e.g. run a pretrained denoiser on a test input:

      make demo/denoise

  This should download the pretrained models to $(DATA)/pretrained_models and some demo scenes to $(DATA)/demo/scenes, and render some noisy sample data to $(OUTPUT)/demo/test_samples. After that, our model is run to produce a denoised output: $(OUTPUT)/demo/ours_4spp.exr (linear radiance) and $(OUTPUT)/demo/ours_4spp.png (clamped 8-bit rendering).

  Inside the Docker container, $(OUTPUT) maps to /sbmc_app/output by default. Outside the container, this is mapped to the output subfolder of this repository, so that both data and output persist across runs.

  See below, or have a look at the Makefile, for more demo/* commands you can try.
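To confirm that the denoising demo produced the files described above, a small check along the following lines may help. This is a minimal sketch and not part of the repository: the helper name missing_demo_outputs is hypothetical, and the script assumes it is run on the host from the repository root, where the container's $(OUTPUT) is mapped to the output subfolder.

```python
from pathlib import Path

# File names taken from the description of `make demo/denoise` above.
EXPECTED_OUTPUTS = ("demo/ours_4spp.exr", "demo/ours_4spp.png")


def missing_demo_outputs(output_dir):
    """Return the expected demo files that are absent from output_dir."""
    root = Path(output_dir)
    return [name for name in EXPECTED_OUTPUTS if not (root / name).is_file()]


if __name__ == "__main__":
    # Assumption: run from the repository root on the host.
    missing = missing_demo_outputs("output")
    if missing:
        print("Missing demo outputs:", ", ".join(missing))
    else:
        print("All expected demo outputs are present.")
```

If you run the check inside the container instead, pass /sbmc_app/output (the default mapping mentioned above) as the directory.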

If you just intend to install our library, you can run:

HALIDE_DISTRIB_DIR=<path/to/Halide> python setup.py install

from the root of this repo. In any case, the Docker file in dockerfiles should help you configure your runtime environment.

We build on the following dependencies:

- Halide: our splatting kernel operator is implemented in Halide (https://halide-lang.org/). The setup.py script looks for the path to the Halide distribution root under the environment variable HALIDE_DISTRIB_DIR. If this variable is not defined, the script will ask whether to download Halide locally.

- Torch-Tools: we use the ttools library (https://github.com/mgharbi/ttools) for PyTorch helpers and for our training and evaluation scripts. It should be installed automatically when running python setup.py install.
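Before running the install, it can be convenient to check whether HALIDE_DISTRIB_DIR is set and points to an existing directory. The sketch below is illustrative only: the function name halide_distrib_dir is hypothetical, and this is not the logic used by setup.py, which may validate the path differently.

```python
import os
from pathlib import Path


def halide_distrib_dir(environ=None):
    """Return HALIDE_DISTRIB_DIR as a Path if it is set and is a directory, else None."""
    environ = os.environ if environ is None else environ
    value = environ.get("HALIDE_DISTRIB_DIR", "")
    if not value:
        return None
    path = Path(value).expanduser()
    return path if path.is_dir() else None


if __name__ == "__main__":
    found = halide_distrib_dir()
    if found is None:
        print("HALIDE_DISTRIB_DIR is unset or does not point to a directory.")
    else:
        print(f"Halide distribution found at {found}")
```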

We provide a patch to PBRTv2's commit #e6f6334f3c26ca29eba2b27af4e60fec9fdc7a8d (https://github.com/mmp/pbrt-v2) in pbrt_patches/sbmc_pbrt.diff. This patch contains our modifications to the renderer to save individual samples to disk.

To render samples as .bin files from a .pbrt scene description, use the scripts/render_samples.py script. This script assumes the PBRT scene file contains only the scene description. It will create the appropriate header description for the camera, sampler, path-tracer, etc. For an example, try:

make demo/render_samples

In the manuscript we described a scene generation procedure that used the SunCG dataset. Because of legal issues that were later discovered with this dataset, we decided to no longer support this source of training scenes.

You can still use our custom outdoor random scene generator, scripts/generate_training_data.py, to generate training data. For an example, run:

make demo/generate_scenes

We provide a helper script to inspect the content of .bin sample files, scripts/visualize_dataset.py. For instance, to visualize the training data generated in the previous section, run:

make demo/visualizeTo run a pre-trained model, use scripts/denoise.py. The command below runs

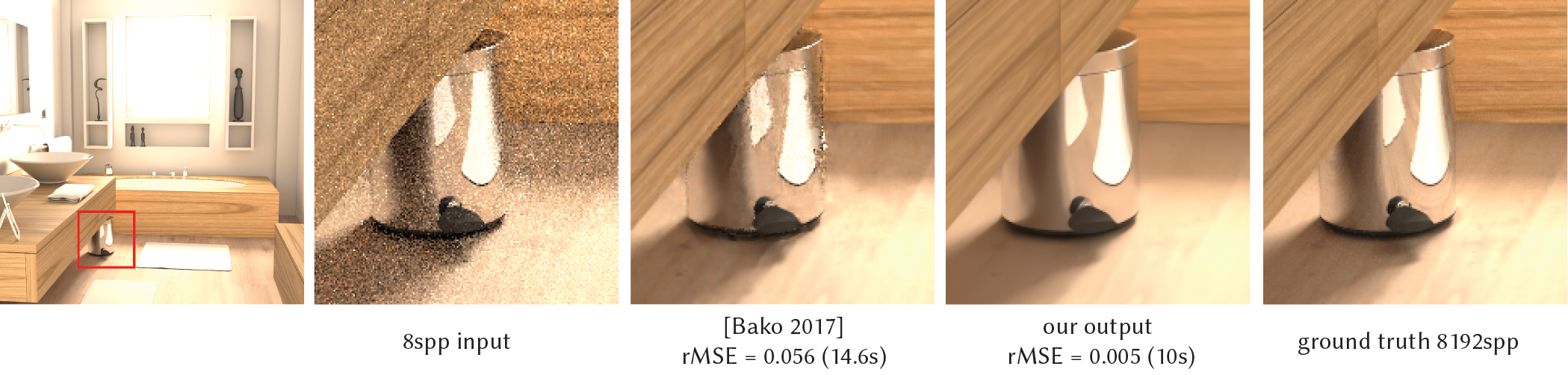

our model and that of [Bako2017] on a test image:

make demo/denoise

In the Dockerfile, we set up the code from several previous works to facilitate comparison. We provide our modifications to the original codebases as patch files in pbrt_patches/. The changes are mostly simple modifications to the C++ code so that it compiles with gcc.

The comparisons include:

- [Sen2011] "On Filtering the Noise from the Random Parameters in Monte Carlo Rendering"

- [Rousselle2012] "Adaptive Rendering with Non-Local Means Filtering"

- [Kalantari2015] "A Machine Learning Approach for Filtering Monte Carlo Noise"

- [Bitterli2016] "Nonlinearly Weighted First-order Regression for Denoising Monte Carlo Renderings"

- [Bako2017] "Kernel-Predicting Convolutional Networks for Denoising Monte Carlo Renderings"

To run the comparisons:

make demo/render_reference

make demo/comparisons

To train your own model, you can use the script scripts/train.py. For instance, to train our model:

make demo/train

Or to train that of Bako et al.:

make demo/train_kpcn

These scripts will also launch a Visdom server so that you can monitor the training. To view the plots, navigate to http://localhost:2001 in your web browser.
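If the plots do not load, one quick diagnostic is to test whether anything is accepting TCP connections on the Visdom port. The following is a minimal sketch using only the Python standard library; the function name port_is_open is hypothetical, and it assumes port 2001 is reachable from wherever the script is run (for instance, that the container exposes it to the host).

```python
import socket


def port_is_open(host="localhost", port=2001, timeout=1.0):
    """Return True if a TCP connection to (host, port) succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    if port_is_open():
        print("Something is listening on localhost:2001.")
    else:
        print("Nothing is reachable on localhost:2001; is training running?")
```

A successful connection only shows that some server is listening on that port; it does not confirm that the server is Visdom.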

The script scripts/compute_metrics.py can be used to evaluate a set of .exr renderings numerically. It will print out the averages and save the result to .csv files.
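The kind of summary described above (per-image scores, their average, and a .csv export) can be sketched as follows. This is an illustration only and not the code of scripts/compute_metrics.py: the choice of RMSE as the metric, the CSV layout, the function names, and the scene names are all assumptions, and the toy pixel lists stand in for real .exr data.

```python
import csv
import io
import math


def rmse(image, reference):
    """Root-mean-square error between two equally sized flat pixel sequences."""
    if len(image) != len(reference) or not image:
        raise ValueError("inputs must be non-empty and of equal length")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(image, reference)) / len(image))


def summarize(scores):
    """Given {scene_name: score}, return (average, CSV text with one row per scene)."""
    average = sum(scores.values()) / len(scores)
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["scene", "rmse"])
    for scene, score in sorted(scores.items()):
        writer.writerow([scene, f"{score:.4f}"])
    writer.writerow(["average", f"{average:.4f}"])
    return average, buffer.getvalue()


if __name__ == "__main__":
    # Toy data: hypothetical scene names and pixel values, for illustration only.
    reference = [0.0, 0.5, 1.0, 0.25]
    scores = {
        "scene_a": rmse([0.0, 0.5, 1.0, 0.25], reference),
        "scene_b": rmse([0.1, 0.4, 0.9, 0.35], reference),
    }
    average, text = summarize(scores)
    print(f"average rmse: {average:.4f}")
    print(text, end="")
```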

For example, you can download the renderings we produced for our paper evaluation and compute the metrics by running:

make demo/eval

We provide the pre-rendered .exr results used in our SIGGRAPH submission on demand. To download them, run the command below. Please note that this data is rather large (54 GB).

make precomputed_renderings

You can download the .pbrt scenes we used for evaluation by running:

make test_scenes

This will only download the scene descriptions and assets. The images (or samples) themselves still need to be rendered from this data, using the scripts/render_exr.py and scripts/render_samples.py scripts respectively.

Some sample data used throughout the demo commands can be downloaded using:

make demo_data

Download our pretrained models with the following command:

make pretrained_models
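Since the precomputed renderings are quoted at 54 GB, it may be worth checking free disk space before starting that download. Below is a minimal sketch using the standard library; the function name has_free_space is hypothetical, the directory to check is an assumption (pass whichever location the data is actually written to), and the quoted size is treated as GiB, which is slightly conservative.

```python
import shutil

GIB = 1024 ** 3


def has_free_space(path, required_gib):
    """Return True if the filesystem containing path has at least required_gib GiB free."""
    free_bytes = shutil.disk_usage(path).free
    return free_bytes >= required_gib * GIB


if __name__ == "__main__":
    # 54 is the size quoted above for the precomputed renderings.
    if has_free_space(".", 54):
        print("Enough free space for `make precomputed_renderings`.")
    else:
        print("Less than 54 GiB free; consider freeing space before downloading.")
```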