This is the PyTorch implementation of our paper:

GFNet: Geometric Flow Network for 3D Point Cloud Semantic Segmentation

Accepted by TMLR, 2022

Haibo Qiu, Baosheng Yu and Dacheng Tao

Abstract

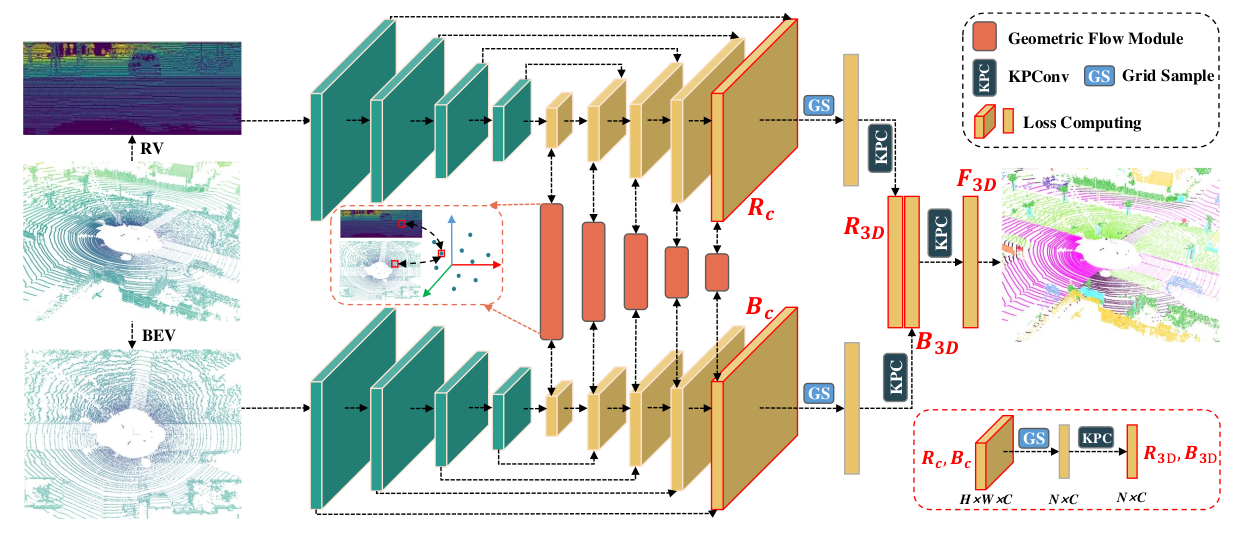

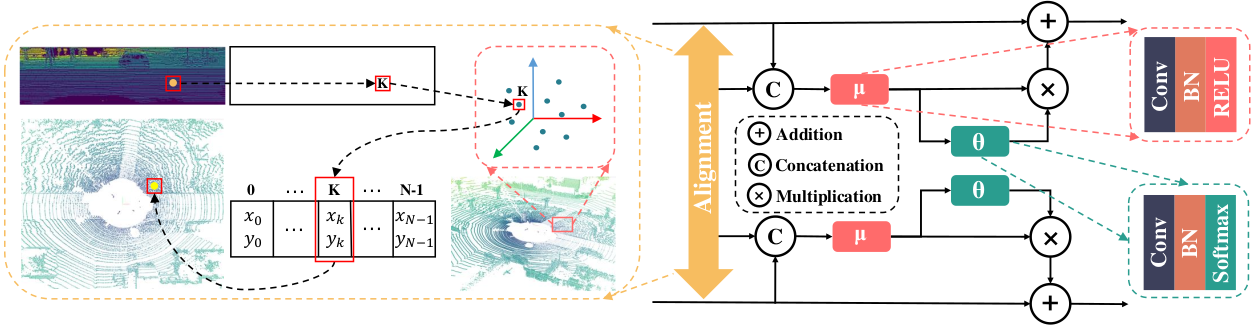

Point cloud semantic segmentation from projected views, such as range-view (RV) and bird's-eye-view (BEV), has been intensively investigated. Different views capture different information about point clouds and thus are complementary to each other. However, recent projection-based methods for point cloud semantic segmentation usually utilize a vanilla late fusion strategy for the predictions of different views, failing to explore the complementary information from a geometric perspective during representation learning. In this paper, we introduce a geometric flow network (GFNet) to explore the geometric correspondence between different views in an align-before-fuse manner. Specifically, we devise a novel geometric flow module (GFM) to bidirectionally align and propagate the complementary information across different views according to geometric relationships under the end-to-end learning scheme. We perform extensive experiments on two widely used benchmark datasets, SemanticKITTI and nuScenes, to demonstrate the effectiveness of our GFNet for projection-based point cloud semantic segmentation. Concretely, GFNet not only significantly boosts the performance of each individual view but also achieves state-of-the-art results over all existing projection-based models.
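To make the idea of a geometric correspondence between views concrete, the sketch below projects each 3D point into a range-view pixel and a BEV cell, then records which BEV cell each occupied RV pixel maps to. It is an illustration under stated assumptions, not the GFM implementation from this repo: the function names are hypothetical, the 64 x 2048 range image and the 3 / -25 degree vertical field of view are typical values for a 64-beam sensor, and the polar 480 x 360 BEV grid with a 50 m radius is assumed.

```python
import math


def range_view_pixel(x, y, z, height=64, width=2048,
                     fov_up_deg=3.0, fov_down_deg=-25.0):
    """Spherical projection of one point to a (row, col) range-image pixel.

    Image size and field of view are assumed values, not read from the configs.
    """
    depth = math.sqrt(x * x + y * y + z * z)
    yaw = math.atan2(y, x)
    pitch = math.asin(z / max(depth, 1e-8))
    fov_up = math.radians(fov_up_deg)
    fov_down = math.radians(fov_down_deg)
    col = 0.5 * (1.0 - yaw / math.pi) * width
    row = (1.0 - (pitch - fov_down) / (fov_up - fov_down)) * height
    row = min(max(int(math.floor(row)), 0), height - 1)
    col = min(max(int(math.floor(col)), 0), width - 1)
    return row, col


def bev_cell(x, y, radial_bins=480, angular_bins=360, max_radius=50.0):
    """Polar BEV quantization of one point to a (radius bin, angle bin) cell.

    Grid size and maximum radius are assumed values.
    """
    rho = math.hypot(x, y)
    phi = math.atan2(y, x)
    r_bin = int(math.floor(rho / max_radius * radial_bins))
    a_bin = int(math.floor((phi + math.pi) / (2.0 * math.pi) * angular_bins))
    r_bin = min(max(r_bin, 0), radial_bins - 1)
    a_bin = min(max(a_bin, 0), angular_bins - 1)
    return r_bin, a_bin


def rv_to_bev_correspondence(points):
    """Map each occupied RV pixel to the BEV cell of a point that lands in it.

    Simplification: if several points share an RV pixel, the last one wins.
    """
    mapping = {}
    for x, y, z in points:
        mapping[range_view_pixel(x, y, z)] = bev_cell(x, y)
    return mapping
```

Because both projections are computed from the same 3D points, the mapping can be built once per scan and used to look up, for any RV pixel, the BEV location that holds complementary features (and the reverse mapping can be built the same way).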

(A gif of segmentation results on SemanticKITTI by GFNet)

- Clone this repo:

git clone https://github.com/haibo-qiu/GFNet.git

- Create a conda env with:

conda env create -f environment.yml

Note that we also provide the Dockerfile for an alternative setup method.

- Download point cloud data from SemanticKITTI and nuScenes.

- For SemanticKITTI, directly unzip all data into `dataset/SemanticKITTI`.

- For nuScenes, first unzip data to `dataset/nuScenes/full` and then use the following cmd to generate pkl files for both training and testing:

python dataset/utils_nuscenes/preprocess_nuScenes.py

- Final data folder structure will look like:

      dataset
      ├── SemanticKITTI
      │   └── sequences
      │       ├── 00
      │       ├── ...
      │       └── 21
      └── nuScenes
          ├── full
          │   ├── lidarseg
          │   ├── samples
          │   ├── v1.0-{mini, test, trainval}
          │   └── ...
          ├── nuscenes_train.pkl
          ├── nuscenes_val.pkl
          ├── nuscenes_trainval.pkl
          └── nuscenes_test.pkl

- Please refer to `configs/semantic-kitti.yaml` and `configs/nuscenes.yaml` for dataset specific properties.

- Download the pretrained resnet model to `pretrained/resnet34-333f7ec4.pth`.

- The hyperparams for training are included in `configs/resnet_semantickitti.yaml` and `configs/resnet_nuscenes.yaml`. After modifying corresponding settings to satisfy your purpose, the network can be trained in an end-to-end manner by:

  - `./scripts/start.sh` on SemanticKITTI.

  - `./scripts/start_nuscenes.sh` on nuScenes.
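Before launching the training scripts, it can help to confirm that the data layout and the pretrained weights described above are in place. The following is a minimal sketch of such a check; the `find_missing` helper and the `EXPECTED_PATHS` table are hypothetical conveniences and not part of this repo, and the paths are copied from the steps above.

```python
from pathlib import Path

# Paths copied from the setup steps; this table is a hypothetical helper,
# not something the repository ships.
EXPECTED_PATHS = {
    "semantickitti": ["dataset/SemanticKITTI/sequences"],
    "nuscenes": [
        "dataset/nuScenes/full",
        "dataset/nuScenes/nuscenes_train.pkl",
        "dataset/nuScenes/nuscenes_val.pkl",
        "dataset/nuScenes/nuscenes_trainval.pkl",
        "dataset/nuScenes/nuscenes_test.pkl",
    ],
    "weights": ["pretrained/resnet34-333f7ec4.pth"],
}


def find_missing(root=".", groups=("semantickitti", "nuscenes", "weights")):
    """Return the expected paths (relative to root) that do not exist."""
    root = Path(root)
    missing = []
    for group in groups:
        for rel in EXPECTED_PATHS[group]:
            if not (root / rel).exists():
                missing.append(rel)
    return missing
```

Calling `find_missing()` from the repository root returns an empty list when everything is in place; pass `groups=("semantickitti", "weights")` to skip the nuScenes entries if only one dataset is used.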

- Download gfnet_63.0_semantickitti.pth.tar into `pretrained/`.

- Evaluate on the SemanticKITTI validation set by:

./scripts/infer.sh

Alternatively, you can use the official semantic-kitti api for evaluation.

- To reproduce the results we submitted to the test server:

  - download gfnet_submit_semantickitti.pth.tar into `pretrained/`.

  - uncomment and run the second cmd in `./scripts/infer.sh`.

  - zip `path_to_results_folder/sequences` for submission.
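The zipping step can also be done from Python. The sketch below archives the `sequences` folder while keeping `sequences/...` as the path prefix inside the archive. The `zip_sequences` name and the `submission.zip` default are assumptions made for illustration, and the exact archive layout required by the test server should be checked against the benchmark's own submission instructions.

```python
import zipfile
from pathlib import Path


def zip_sequences(results_folder, zip_path="submission.zip"):
    """Zip <results_folder>/sequences, keeping 'sequences/...' as archive paths.

    Hypothetical helper; the output file name is an assumption.
    """
    results_folder = Path(results_folder)
    seq_dir = results_folder / "sequences"
    if not seq_dir.is_dir():
        raise FileNotFoundError(f"{seq_dir} does not exist")
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(seq_dir.rglob("*")):
            if path.is_file():
                # Store paths relative to the results folder, with forward slashes.
                zf.write(path, path.relative_to(results_folder).as_posix())
    return Path(zip_path)
```

Here `results_folder` stands for the `path_to_results_folder` placeholder used above.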

- Download gfnet_76.8_nuscenes.pth.tar into `pretrained/`.

- Evaluate on the nuScenes validation set by:

./scripts/infer_nuscenes.sh

- To reproduce the results we submitted to the test server:

  - download gfnet_submit_nuscenes.pth.tar into `pretrained/`.

  - uncomment and run the second cmd in `./scripts/infer_nuscenes.sh`.

  - check the valid format of predictions by:

    ./dataset/utils_nuscenes/check.sh

    where `result_path` needs to be modified correspondingly.

  - submit the `dataset/nuScenes/preds.zip` to the test server.
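Both benchmarks score semantic segmentation with mean intersection-over-union (mIoU). For a quick local comparison of predicted and ground-truth label arrays, the metric can be sketched as below. This is not the evaluation code used by the scripts above or by the test servers; the function names and the `ignore_index` convention are assumptions made for illustration.

```python
def confusion_matrix(pred, target, num_classes, ignore_index=None):
    """Count (target, pred) label pairs; rows are ground truth, columns predictions."""
    conf = [[0] * num_classes for _ in range(num_classes)]
    for p, t in zip(pred, target):
        if t == ignore_index:
            continue  # skip unlabeled points
        conf[t][p] += 1
    return conf


def mean_iou(conf):
    """Average IoU = TP / (TP + FP + FN) over classes that appear at least once."""
    n = len(conf)
    ious = []
    for c in range(n):
        tp = conf[c][c]
        fn = sum(conf[c]) - tp
        fp = sum(conf[r][c] for r in range(n)) - tp
        denom = tp + fp + fn
        if denom > 0:
            ious.append(tp / denom)
    return sum(ious) / len(ious) if ious else 0.0
```

Classes that occur in neither the predictions nor the labels are left out of the average here; official tools may treat them differently, so their numbers take precedence.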

This repo is built on lidar-bonnetal, PolarSeg and kprnet. Thanks to the contributors of these repos!

If you use our code or results in your research, please consider citing with:

    @article{qiu2022gfnet,
      title={{GFN}et: Geometric Flow Network for 3D Point Cloud Semantic Segmentation},
      author={Haibo Qiu and Baosheng Yu and Dacheng Tao},
      journal={Transactions on Machine Learning Research},
      year={2022},
      url={https://openreview.net/forum?id=LSAAlS7Yts},
    }