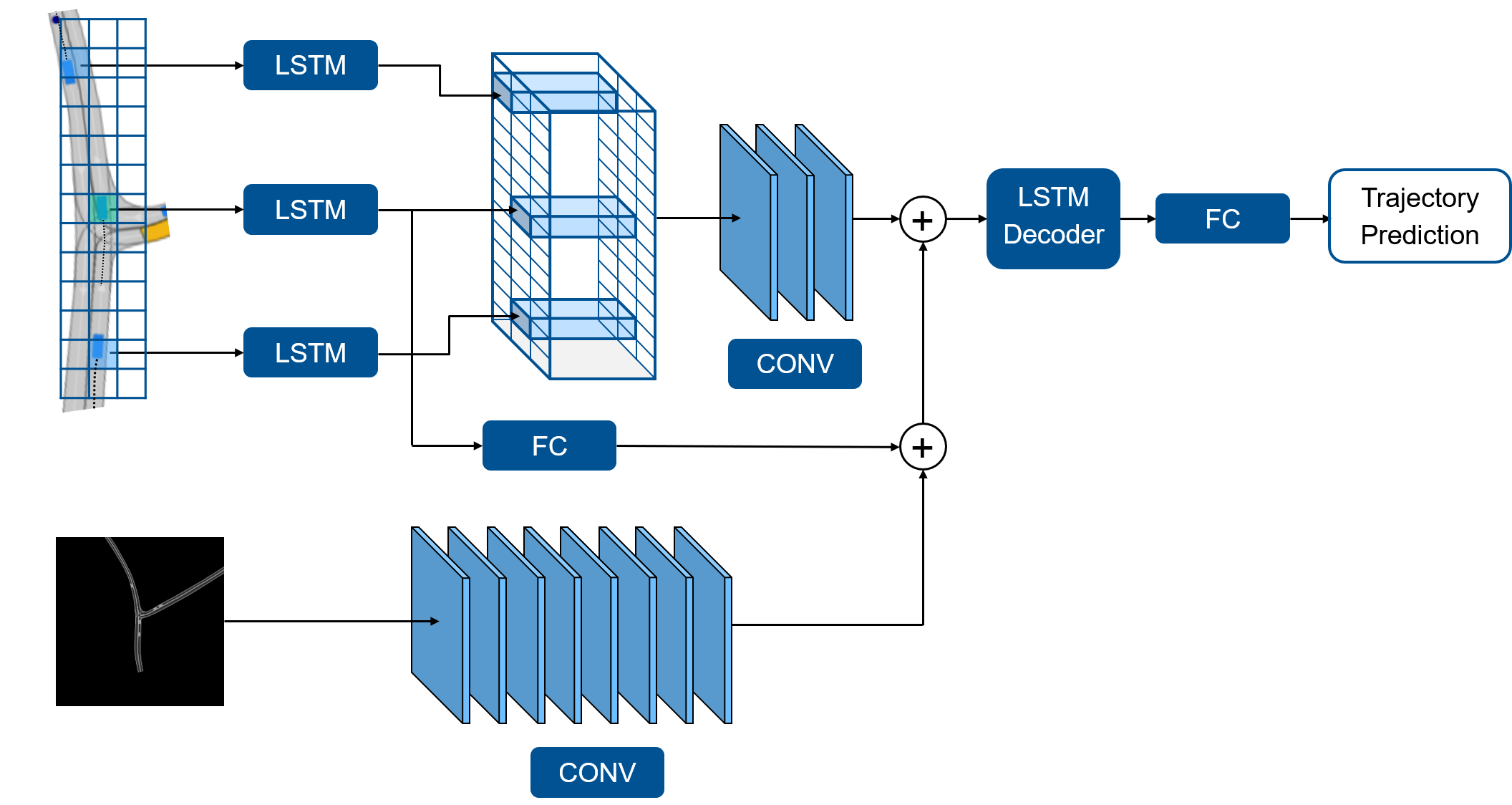

This repository provides an encoder-decoder neural network for vehicle trajectory prediction with uncertainties. It builds on the work of Convolutional Social Pooling, has been adapted to CommonRoad, and has been extended with scene understanding and online learning.

- Linux Ubuntu (tested on versions 16.04, 18.04 and 20.04)

- Python >=3.6

Clone repository:

`git clone https://github.com/TUMFTM/Wale-Net.git`

Install requirements:

`pip install -r requirements.txt`

- After installation, import the prediction class from `mod_prediction`, e.g. with `from mod_prediction import WaleNet`. Available classes for prediction are:
  - `Prediction` for ground truth predictions; uncertainties are zero.
  - `WaleNet` for probability-based LSTM prediction.

- Initialize the class with a CommonRoad scenario with `predictor = WaleNet(<CommonRoad Scenario object>)`. Optionally provide a dictionary of `online_args` for the prediction with different models or for online learning.

- Call `predictor.step(time_step, obstacle_id_list)` in a loop, where `time_step` is the current time step of a CommonRoad scenario object and `obstacle_id_list` is a list of the IDs of all dynamic obstacles that should be predicted. It outputs a dictionary `prediction_result` that maps each `<obstacle_id>` to a dictionary with two entries:
  - `pos_list`: `np.ndarray` of shape n x 2 with the x,y positions of the predicted trajectory in m
  - `cov_list`: `np.ndarray` of shape n x 2 x 2 with the 2D covariance matrices for the uncertainties

- Optionally call `predictor.get_positions()` or `predictor.get_covariance()` to get a list of x,y positions or covariances of all predicted vehicles.
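The steps above can be sketched as follows. This is a minimal illustration, not the repository's code: `StubPredictor` is a hypothetical stand-in that only mimics the documented `step(time_step, obstacle_id_list)` interface and output format, so the snippet runs without a CommonRoad scenario or a trained model. The obstacle IDs, the horizon length and the helper `position_std` are likewise assumptions made for the example.

```python
import numpy as np


class StubPredictor:
    """Hypothetical stand-in that mimics the documented step() output format."""

    def __init__(self, horizon=5):
        self.horizon = horizon  # number of predicted points n (assumed value)

    def step(self, time_step, obstacle_id_list):
        result = {}
        for obstacle_id in obstacle_id_list:
            # Straight-line dummy trajectory: n x 2 array of x,y positions in m
            xs = time_step + np.arange(1, self.horizon + 1, dtype=float)
            pos_list = np.stack([xs, np.zeros(self.horizon)], axis=1)
            # Dummy covariances that grow along the horizon: n x 2 x 2
            scales = np.arange(1, self.horizon + 1, dtype=float)
            cov_list = scales[:, None, None] * np.eye(2)
            result[obstacle_id] = {"pos_list": pos_list, "cov_list": cov_list}
        return result


def position_std(cov_list):
    """Per-step standard deviation in x and y (sqrt of each covariance diagonal)."""
    return np.sqrt(np.diagonal(cov_list, axis1=1, axis2=2))


predictor = StubPredictor()  # real usage: WaleNet(<CommonRoad Scenario object>)
obstacle_id_list = [42, 43]  # made-up obstacle IDs

for time_step in range(3):
    prediction_result = predictor.step(time_step, obstacle_id_list)
    for obstacle_id, pred in prediction_result.items():
        std = position_std(pred["cov_list"])
        # Print the last predicted position and its x,y standard deviation
        print(obstacle_id, pred["pos_list"][-1], std[-1])
```

Reading the standard deviation off the covariance diagonal ignores the x,y correlation; for a full uncertainty ellipse, an eigendecomposition of each 2 x 2 matrix would be needed.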

To get a stand-alone prediction of a CommonRoad scenario, call `mod_prediction/main.py` and provide a CommonRoad scenario:

`python mod_prediction/main.py --scenario <path/to/scenario>`

- Create your desired configuration for the prediction network and training. Start by making a copy of `default.json`.

- Make sure your dataset is available, either downloaded or self-created (see Data), or use the `--debug` argument.

- Execute `python train.py`. This will train a model on the dataset specified in the config. The result will be saved in `trained_models` and the logs in `tb_logs`.
  - Add the argument `--config <path to your config>` to use your config. By default, `default.json` is used.
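The configuration and training steps could be scripted roughly as below. This is a sketch under assumptions: the helper names `create_config` and `train_command` are hypothetical, and so are the `configs/` paths and the `epochs` key shown in the comments; check the repository's `default.json` for the actual location and keys. Only the `--config` and `--debug` arguments are taken from the text above.

```python
import json
from pathlib import Path


def create_config(default_path, new_path, overrides=None):
    """Copy the default config to new_path, applying optional top-level overrides."""
    config = json.loads(Path(default_path).read_text())
    config.update(overrides or {})
    Path(new_path).write_text(json.dumps(config, indent=4))
    return config


def train_command(config_path=None, debug=False):
    """Assemble the documented train.py call as an argument list."""
    cmd = ["python", "train.py"]
    if config_path is not None:
        cmd += ["--config", str(config_path)]
    if debug:
        cmd.append("--debug")
    return cmd


# Example (paths and the "epochs" key are hypothetical):
# create_config("configs/default.json", "configs/my_config.json", {"epochs": 10})
# import subprocess
# subprocess.run(train_command("configs/my_config.json"), check=True)
```

Building the command as a list rather than a single string avoids shell quoting problems when a config path contains spaces.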

| File | Description |
|---|---|
| main.py | Deploy the prediction network on a CommonRoad scenario. |
| train.py | Train the prediction network. |
| evaluate.py | Evaluate a trained prediction model on the test set. |
| evaluate_online_learning.py | Ordered evaluation of an online configuration on all the scenarios. |

- The full dataset for training can be downloaded here. To start a training, unpack the folders `cr_dataset` and `sc_img_cr` into the `/data/` directory and follow the steps above.

- Alternatively, a new dataset can be generated with the `tools/commonroad_dataset.py` script. CommonRoad scenes can be downloaded here.
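Before starting a training, it can help to confirm that the dataset was unpacked where expected. The check below is a small sketch, not part of the repository: the function name `missing_dataset_folders` is hypothetical, and the `data` path relative to the repository root is an assumption. The two folder names come from the step above.

```python
from pathlib import Path

EXPECTED_FOLDERS = ("cr_dataset", "sc_img_cr")  # folder names from the step above


def missing_dataset_folders(data_dir):
    """Return the expected dataset folders that are not present in data_dir."""
    data_dir = Path(data_dir)
    return [name for name in EXPECTED_FOLDERS if not (data_dir / name).is_dir()]


if __name__ == "__main__":
    # "data" relative to the repository root is an assumed location
    missing = missing_dataset_folders("data")
    if missing:
        print("Missing dataset folders:", ", ".join(missing))
    else:
        print("Dataset folders found.")
```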

Below is an exemplary visualization of the prediction on a scenario that was not part of the training data.

Predicting a single vehicle takes around 10 ms on an NVIDIA V100 GPU and 23 ms on an average laptop CPU.
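To compare these figures with your own hardware, a simple wall-clock measurement is one option. The sketch below is an assumption about how such a measurement could be done; it does not reproduce how the numbers above were obtained. `dummy_predict` is a hypothetical placeholder that you would replace with a call predicting a single vehicle.

```python
import statistics
import time


def median_latency_ms(predict_fn, repetitions=50, warmup=5):
    """Median wall-clock duration of predict_fn() in milliseconds."""
    for _ in range(warmup):
        predict_fn()  # warm-up calls are not timed
    durations = []
    for _ in range(repetitions):
        start = time.perf_counter()
        predict_fn()
        durations.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(durations)


def dummy_predict():
    """Hypothetical placeholder for a single-vehicle prediction call."""
    time.sleep(0.002)


print(f"median latency: {median_latency_ms(dummy_predict, repetitions=10):.1f} ms")
```

The median is used instead of the mean so that occasional slow calls do not dominate the result. Note that GPU work may run asynchronously, so timing a GPU model this way generally requires synchronizing the device before reading the clock.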

- Maximilian Geisslinger, Phillip Karle, Johannes Betz and Markus Lienkamp, "Watch-and-Learn-Net: Self-supervised Online Learning for Vehicle Trajectory Prediction." 2021 IEEE International Conference on Systems, Man and Cybernetics.

- Nachiket Deo and Mohan M. Trivedi, "Convolutional Social Pooling for Vehicle Trajectory Prediction." CVPRW, 2018.