PyTorch implementation of various neural network interpretability methods, and of how they can be used to interpret uncertainty-aware models.

The main implementation is in the `nn_interpretability` package. Every method comes with an accompanying Jupyter Notebook that demonstrates how to use the package in practice; some methods are showcased together in one notebook for easier comparison. Alongside the interpretability functionality, the repository contains the models we trained and additional utilities for loading data and visualizing both the data and the results of the interpretability methods. We have also implemented uncertainty techniques to observe how interpretability methods behave in stochastic settings.

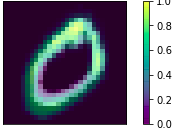

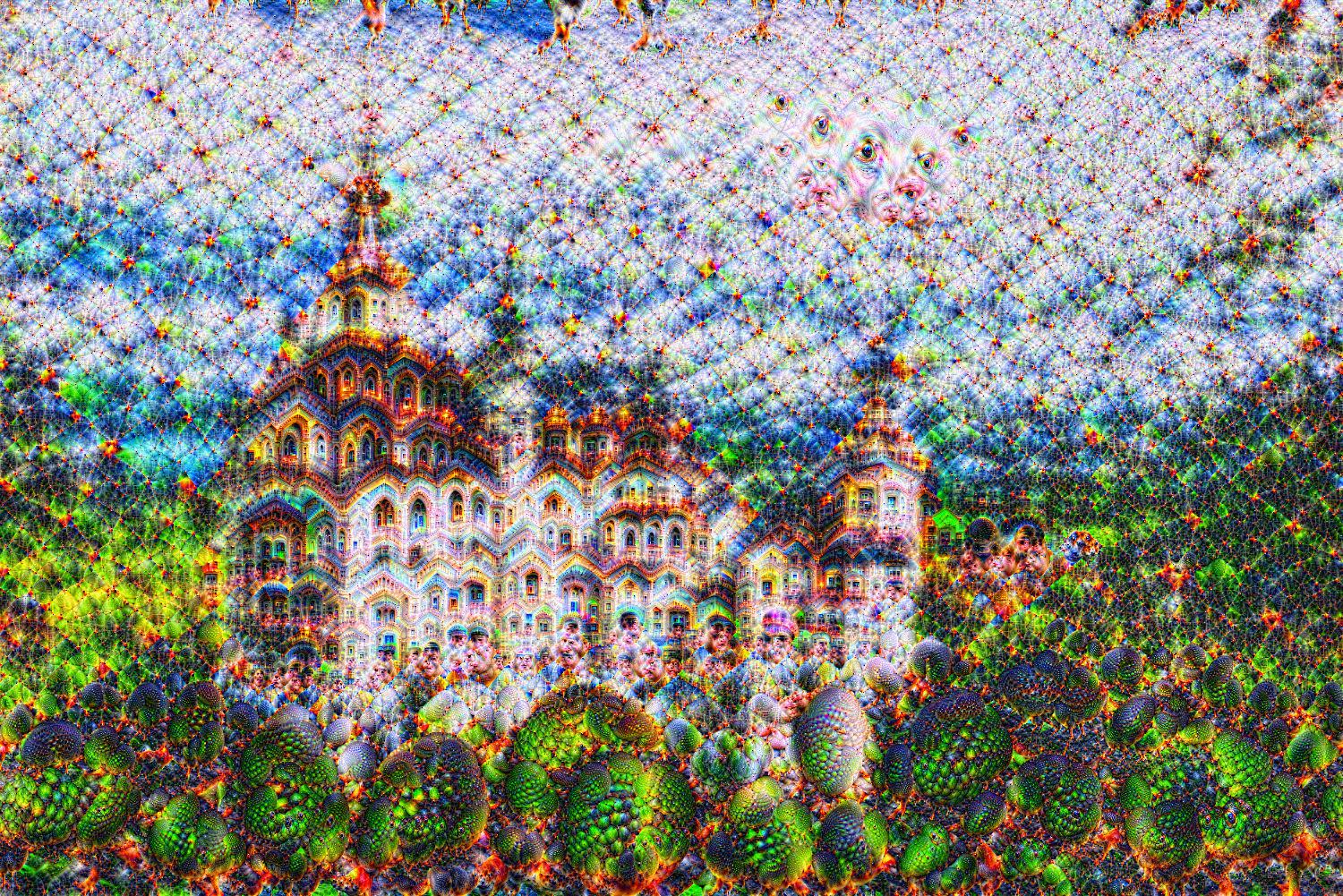

Panel titles: Original Image | VGG19 Layer:25 | VGG19 Layer:25 Filter: 150

The main deliverable of this repository is the `nn_interpretability` package, which contains every NN interpretability method we implemented as part of the course. It can be installed and used as a library in any project. To install it, clone this repository and run the following command from the repository root:

`pip install -e .`

After that, the package can be used anywhere by importing it:

`import nn_interpretability as nni`

Example usage of each interpretability method can be found in the corresponding Jupyter Notebook, as outlined below. We also prepared a general demonstration of the package in a separate Jupyter Notebook.

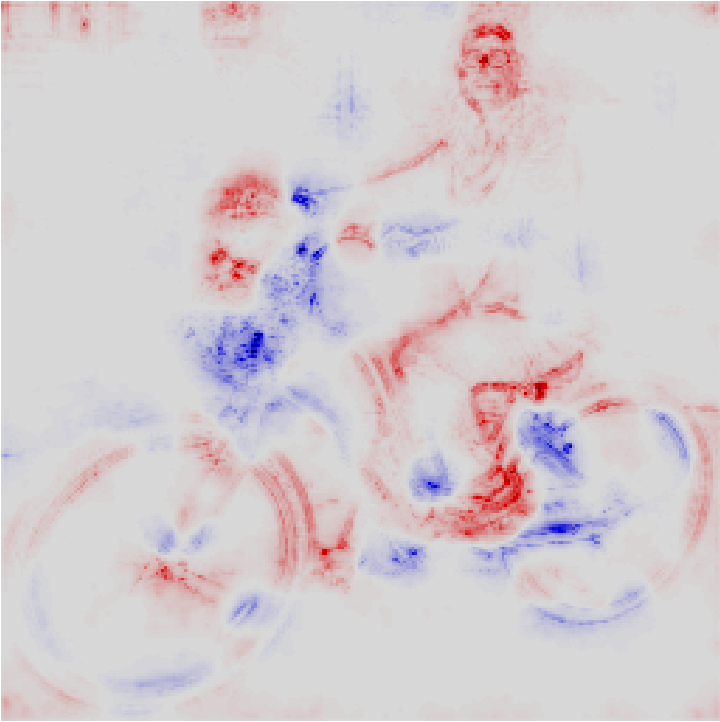

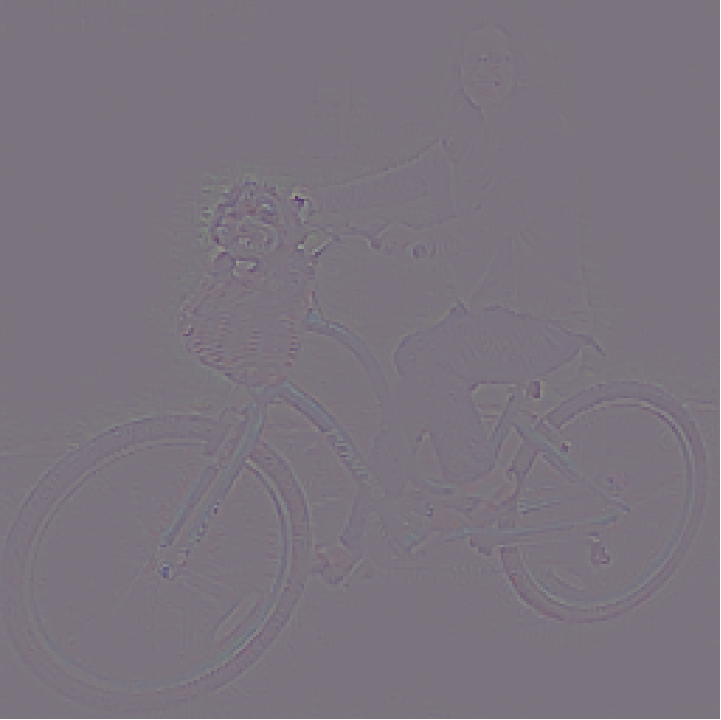

Panel titles: Image classified as tandem bicycle by pretrained VGG16 | LRP Composite | Guided Backpropagation | DeepLIFT RevealCancel

Note: The package assumes that the layers of a model are grouped inside containers (e.g. `features` and `classifier`). This follows the structure of the pretrained models from the model zoo. You can use `torch.nn.Sequential` or `torch.nn.ModuleList` to achieve this in your own model.
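A minimal sketch of a custom model that follows this layout is shown below. The class name `SmallConvNet`, the layer sizes, and the 28x28 single-channel input are hypothetical choices for illustration; only the container names `features` and `classifier` come from the note above.

```python
import torch
from torch import nn


class SmallConvNet(nn.Module):
    """Hypothetical toy model laid out like the pretrained VGG models."""

    def __init__(self, num_classes: int = 10) -> None:
        super().__init__()
        # Convolutional layers, grouped in a container named `features`.
        self.features = nn.Sequential(
            nn.Conv2d(1, 8, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2),
            nn.Conv2d(8, 16, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2),
        )
        # Fully connected layers, grouped in a container named `classifier`.
        # Two 2x2 poolings reduce a 28x28 input to 7x7 with 16 channels.
        self.classifier = nn.Sequential(
            nn.Linear(16 * 7 * 7, 64),
            nn.ReLU(),
            nn.Linear(64, num_classes),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = self.features(x)
        x = torch.flatten(x, start_dim=1)
        return self.classifier(x)


model = SmallConvNet()
logits = model(torch.randn(2, 1, 28, 28))
print(logits.shape)  # torch.Size([2, 10])

# Every layer is reachable by index through its container.
print(model.features[0])
print(model.classifier[-1])
```

Grouping layers this way lets code that walks a model layer by layer iterate over `model.features` and `model.classifier` instead of inspecting the body of `forward`.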

- Activation Maximization

- DeepDream [2][13]

- Saliency Map [4]

- DeConvNet

- Occlusion Sensitivity [5]

- Backpropagation

    - Vanilla Backpropagation [4]

    - Guided Backpropagation [6]

    - Integrated Gradients [7]

    - SmoothGrad [9]

- Taylor Decomposition

- LRP

    - LRP-0 [8]

    - LRP-epsilon [8]

    - LRP-gamma [8]

    - LRP-ab [1][8]

- DeepLIFT

    - DeepLIFT Rescale [12]

    - DeepLIFT Linear [12]

    - DeepLIFT RevealCancel [12]

- CAM

- Monte Carlo Dropout

- Evidential Deep Learning

- Uncertain DeepLIFT
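To give a rough idea of how an interpretability method and an uncertainty technique from the list above can be combined, the sketch below computes a gradient-based saliency map [4] under Monte Carlo Dropout [14]. It uses plain PyTorch and is not the API of `nn_interpretability`; the function name `mc_dropout_saliency`, the toy model, and the sample count are assumptions made for illustration.

```python
import torch
from torch import nn


def mc_dropout_saliency(model, x, target, n_samples=20):
    """Mean and std of absolute input gradients over stochastic forward passes.

    Assumes `x` holds a single example (batch size 1) and that the model
    contains dropout layers but no BatchNorm layers, since train mode is
    used to keep dropout active.
    """
    was_training = model.training
    model.train()  # keep nn.Dropout stochastic at inference time
    maps = []
    for _ in range(n_samples):
        inp = x.clone().detach().requires_grad_(True)
        score = model(inp)[0, target]  # class score for the target class
        (grad,) = torch.autograd.grad(score, inp)
        maps.append(grad.abs())  # saliency: magnitude of the input gradient
    model.train(was_training)  # restore the original mode
    maps = torch.stack(maps)
    return maps.mean(dim=0), maps.std(dim=0)


torch.manual_seed(0)
# Hypothetical toy classifier with one dropout layer.
model = nn.Sequential(
    nn.Flatten(),
    nn.Linear(28 * 28, 64),
    nn.ReLU(),
    nn.Dropout(p=0.5),
    nn.Linear(64, 10),
)
x = torch.randn(1, 1, 28, 28)
mean_map, std_map = mc_dropout_saliency(model, x, target=3)
print(mean_map.shape, std_map.shape)
```

The mean map plays the role of the usual saliency map, while the per-pixel standard deviation indicates how much the explanation changes between dropout samples.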

[1] Grégoire Montavon, Wojciech Samek, and Klaus-Robert Müller. Methods for interpreting and understanding deep neural networks. Digital Signal Processing, 73:1–15, Feb 2018.

[2] Chris Olah, Alexander Mordvintsev, and Ludwig Schubert. Feature visualization. Distill, 2017.

[3] Chris Olah et al. The Building Blocks of Interpretability. Distill, 2017.

[4] Karen Simonyan, Andrea Vedaldi, and Andrew Zisserman. Deep inside convolutional networks: Visualising image classification models and saliency maps, 2013.

[5] Matthew D Zeiler and Rob Fergus. Visualizing and understanding convolutional networks, 2013.

[6] Jost Springenberg, Alexey Dosovitskiy, Thomas Brox, and Martin Riedmiller. Striving for simplicity: The all convolutional net. Dec 2014.

[7] Mukund Sundararajan, Ankur Taly, and Qiqi Yan. Axiomatic attribution for deep networks. CoRR, abs/1703.01365, 2017.

[8] Grégoire Montavon, Alexander Binder, Sebastian Lapuschkin, Wojciech Samek, and Klaus-Robert Müller. Layer-Wise Relevance Propagation: An Overview, pages 193–209. Sep 2019.

[9] Daniel Smilkov, Nikhil Thorat, Been Kim, Fernanda B. Viégas, and Martin Wattenberg. SmoothGrad: removing noise by adding noise. CoRR, abs/1706.03825, 2017.

[10] B. Zhou, A. Khosla, A. Lapedriza, A. Oliva, and A. Torralba. Learning Deep Features for Discriminative Localization. CVPR, 2016.

[11] Ramprasaath R. Selvaraju, Abhishek Das, Ramakrishna Vedantam, Michael Cogswell, Devi Parikh, and Dhruv Batra. Grad-CAM: Why did you say that? Visual explanations from deep networks via gradient-based localization. CoRR, abs/1610.02391, 2016.

[12] Avanti Shrikumar, Peyton Greenside, and Anshul Kundaje. Learning important features through propagating activation differences, 2017.

[13] Alexander Mordvintsev, Christopher Olah, and Mike Tyka. Inceptionism: Going deeper into neural networks, 2015.

[14] Yarin Gal and Zoubin Ghahramani. Dropout as a Bayesian approximation: Representing model uncertainty in deep learning, 2015.

[15] Murat Sensoy, Lance Kaplan, and Melih Kandemir. Evidential deep learning to quantify classification uncertainty, 2018.

[16] Chuan Guo, Geoff Pleiss, Yu Sun, and Kilian Q. Weinberger. On calibration of modern neural networks, 2017.