This repository contains the essential code for the paper Deep Anomaly Detection with Outlier Exposure (ICLR 2019).

Requires Python 3+ and PyTorch 0.4.1+.

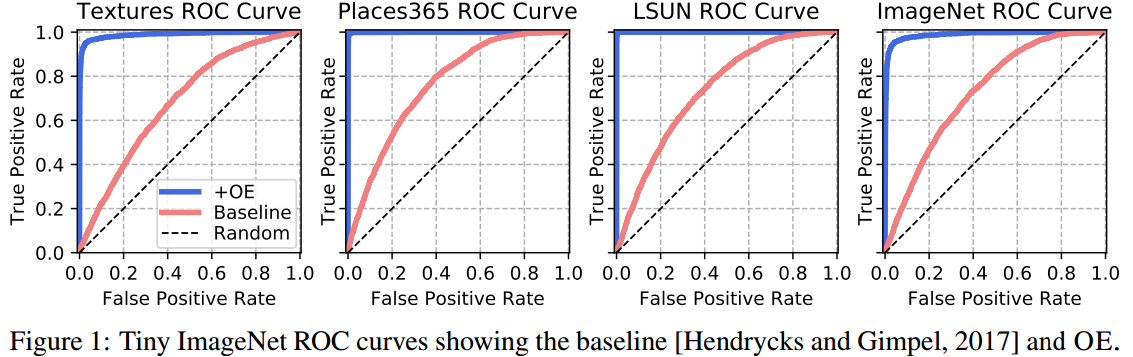

Outlier Exposure (OE) is a method for improving anomaly detection performance in deep learning models. Using an out-of-distribution (OOD) dataset, we fine-tune a classifier so that the model learns heuristics to distinguish between anomalies and in-distribution samples. Crucially, these heuristics generalize to new distributions. Unlike ODIN, OE does not require a model per OOD dataset and does not require tuning on "validation" examples from the OOD dataset in order to work. This repository contains a subset of the calibration and multiclass classification experiments. Please consult the paper for the full results and method descriptions.
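To make the fine-tuning objective concrete, here is a minimal, framework-free sketch of an OE-style loss for multiclass classification: the usual cross-entropy on in-distribution examples plus a weighted term that pushes the softmax output on outlier examples toward the uniform distribution. It uses plain Python for illustration only and is not the training code in this repository. The function names and the default weight `lam=0.5` are assumptions made for this sketch; consult the paper for the exact objective and hyperparameters used in each experiment.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax for one list of logits."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - lse for z in logits]


def cross_entropy(logits, label):
    """Standard cross-entropy for one in-distribution example."""
    return -log_softmax(logits)[label]


def uniform_cross_entropy(logits):
    """Cross-entropy from the uniform distribution to the softmax output.

    Minimized (value log K for K classes) when all logits are equal.
    """
    log_probs = log_softmax(logits)
    return -sum(log_probs) / len(log_probs)


def oe_loss(in_logits, in_labels, out_logits, lam=0.5):
    """In-distribution cross-entropy plus a weighted outlier term.

    `lam` is a hypothetical default chosen for this sketch.
    """
    in_term = sum(
        cross_entropy(z, y) for z, y in zip(in_logits, in_labels)
    ) / len(in_logits)
    out_term = sum(uniform_cross_entropy(z) for z in out_logits) / len(out_logits)
    return in_term + lam * out_term


def max_softmax_score(logits):
    """Maximum softmax probability; lower values suggest an anomaly."""
    return math.exp(max(log_softmax(logits)))
```

In this sketch, a model fine-tuned with `oe_loss` should assign a low `max_softmax_score` to anomalous inputs, so thresholding that score is one simple way to flag them.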

This repository contains code for the NLP experiments and for the multiclass and calibration experiments on SVHN, CIFAR-10, CIFAR-100, and Tiny ImageNet.

80 Million Tiny Images is available here (mirror link).

If you do not want to use 80 Million Tiny Images, we have prepared a cleaned ("debiased") subset of it with 300K images. From this subset we removed images that belong to CIFAR classes, images that belong to Places or LSUN classes, and images with divisive metadata.

300K Random Images is available here.

If you find this useful in your research, please consider citing:

    @article{hendrycks2019oe,
      title={Deep Anomaly Detection with Outlier Exposure},
      author={Hendrycks, Dan and Mazeika, Mantas and Dietterich, Thomas},
      journal={Proceedings of the International Conference on Learning Representations},
      year={2019}
    }

These experiments make use of numerous outlier datasets. Links for the less common datasets are as follows: 80 Million Tiny Images (mirror link), Icons-50, Textures, Chars74K, and Places365.