This is the official repository for the ICLR 2024 paper "Towards Seamless Adaptation of Pre-trained Models for Visual Place Recognition".

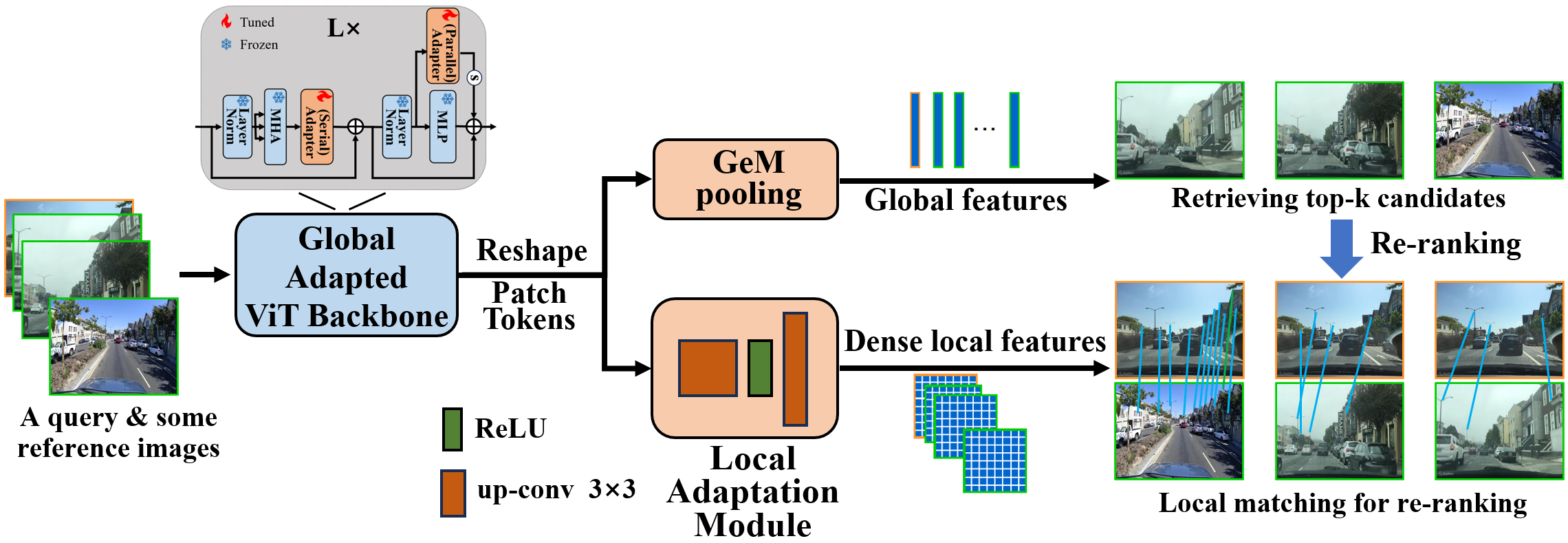

This paper presents a novel method, named SelaVPR, to realize Seamless adaptation of pre-trained foundation models for the (two-stage) VPR task. By adding a few tunable lightweight adapters to the frozen pre-trained model, we achieve an efficient hybrid global-local adaptation that produces both global features for retrieving candidate places and dense local features for re-ranking. The SelaVPR feature representation focuses on discriminative landmarks, which closes the gap between the pre-training and VPR tasks and fully unleashes the capability of pre-trained models for VPR. SelaVPR can match the local features directly, without spatial verification, which makes re-ranking much faster.

The global adaptation is achieved by adding adapters after the multi-head attention layer and in parallel to the MLP layer in each transformer block (see adapter1 and adapter2 in /backbone/dinov2/block.py).
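The placement described above can be illustrated with a minimal PyTorch sketch. This is not the code in /backbone/dinov2/block.py: the class names `Adapter` and `AdaptedBlock`, the bottleneck width, the `scale` factor, the skip-connection choices, and the use of `nn.MultiheadAttention` in place of the DINOv2 attention module are all assumptions made for illustration.

```python
import torch
import torch.nn as nn


class Adapter(nn.Module):
    """Bottleneck adapter: down-project, non-linearity, up-project."""

    def __init__(self, dim: int, bottleneck: int, skip_connect: bool = True):
        super().__init__()
        self.down = nn.Linear(dim, bottleneck)
        self.act = nn.ReLU()
        self.up = nn.Linear(bottleneck, dim)
        self.skip_connect = skip_connect

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        out = self.up(self.act(self.down(x)))
        return x + out if self.skip_connect else out


class AdaptedBlock(nn.Module):
    """Transformer block with one serial and one parallel adapter (hypothetical)."""

    def __init__(self, dim: int = 64, heads: int = 4, bottleneck: int = 16,
                 scale: float = 0.5):
        super().__init__()
        self.norm1 = nn.LayerNorm(dim)
        self.attn = nn.MultiheadAttention(dim, heads, batch_first=True)
        self.norm2 = nn.LayerNorm(dim)
        self.mlp = nn.Sequential(
            nn.Linear(dim, 4 * dim), nn.GELU(), nn.Linear(4 * dim, dim)
        )
        # adapter1 sits after the attention layer, adapter2 runs in parallel to the MLP.
        self.adapter1 = Adapter(dim, bottleneck, skip_connect=True)
        self.adapter2 = Adapter(dim, bottleneck, skip_connect=False)
        self.scale = scale  # assumed weighting of the parallel branch
        # Freeze the "pre-trained" parts so that only the adapters are tunable.
        for module in (self.norm1, self.attn, self.norm2, self.mlp):
            for param in module.parameters():
                param.requires_grad = False

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        h = self.norm1(x)
        attn_out, _ = self.attn(h, h, h, need_weights=False)
        x = x + self.adapter1(attn_out)                       # serial adapter
        h = self.norm2(x)
        x = x + self.mlp(h) + self.scale * self.adapter2(h)   # parallel adapter
        return x


block = AdaptedBlock()
tokens = torch.randn(2, 5, 64)  # (batch, tokens, dim)
print(block(tokens).shape)
```

Only the parameters of `adapter1` and `adapter2` keep `requires_grad=True`, which mirrors the idea of tuning a few lightweight modules on top of a frozen backbone.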

The local adaptation is implemented by adding up-convolutional layers after the entire ViT backbone to upsample the feature map and get dense local features (see LocalAdapt in network.py).
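To get a feel for how much the up-convolutional layers enlarge the feature map, the arithmetic can be sketched as follows. The output-size formula is the one documented for PyTorch's `ConvTranspose2d`; the 224x224 input size and the two layers with kernel 3, stride 2, and padding 1 are assumptions for illustration and are not taken from network.py.

```python
def patch_grid(image_size: int, patch_size: int = 14) -> int:
    """Side length of the ViT patch grid (ViT-L/14 uses 14x14 patches)."""
    if image_size % patch_size:
        raise ValueError("image_size must be a multiple of patch_size")
    return image_size // patch_size


def conv_transpose_out_size(size: int, kernel_size: int, stride: int,
                            padding: int = 0, output_padding: int = 0,
                            dilation: int = 1) -> int:
    """Output side length of a transposed convolution (ConvTranspose2d formula)."""
    return ((size - 1) * stride - 2 * padding
            + dilation * (kernel_size - 1) + output_padding + 1)


def upsampled_size(size: int, layers) -> int:
    """Apply a sequence of (kernel_size, stride, padding) up-conv layers."""
    for kernel_size, stride, padding in layers:
        size = conv_transpose_out_size(size, kernel_size, stride, padding)
    return size


grid = patch_grid(224)                                    # assumed input resolution
dense = upsampled_size(grid, [(3, 2, 1), (3, 2, 1)])      # assumed layer settings
print(grid, dense)  # 16 61
```

Under these assumptions a 16x16 patch grid becomes a 61x61 map of dense local features.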

This repo follows the Visual Geo-localization Benchmark. Refer to its VPR-datasets-downloader to prepare the datasets.

The dataset should be organized in a directory tree as follows:

├── datasets_vg
    └── datasets
        └── pitts30k
            └── images
                ├── train
                │   ├── database
                │   └── queries
                ├── val
                │   ├── database
                │   └── queries
                └── test
                    ├── database
                    └── queries
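Before launching a long training run, it can help to confirm that the folders match the tree above. The following is a small standard-library sketch; the helper name `missing_dirs` is hypothetical and is not part of this repo.

```python
from pathlib import Path

SPLITS = ("train", "val", "test")
SUBSETS = ("database", "queries")


def missing_dirs(datasets_folder, dataset_name):
    """Return the expected split/subset folders that do not exist."""
    images = Path(datasets_folder) / dataset_name / "images"
    expected = [images / split / subset for split in SPLITS for subset in SUBSETS]
    return [path for path in expected if not path.is_dir()]
```

For example, `missing_dirs("/path/to/your/datasets_vg/datasets", "pitts30k")` returns an empty list when all six folders are in place.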

Before training, you should download the pre-trained foundation model DINOv2 (ViT-L/14) HERE.

Finetuning on MSLS

python3 train.py --datasets_folder=/path/to/your/datasets_vg/datasets --dataset_name=msls --queries_per_epoch=30000 --foundation_model_path=/path/to/pre-trained/dinov2_vitl14_pretrain.pth

Further finetuning on Pitts30k

python3 train.py --datasets_folder=/path/to/your/datasets_vg/datasets --dataset_name=pitts30k --queries_per_epoch=5000 --resume=/path/to/finetuned/msls/model/SelaVPR_msls.pth

Set rerank_num=100 to reproduce the results in the paper, or set rerank_num=20 to achieve close results with only 1/5 of the re-ranking runtime (0.018s per query).

python3 eval.py --datasets_folder=/path/to/your/datasets_vg/datasets --dataset_name=pitts30k --resume=/path/to/finetuned/pitts30k/model/SelaVPR_pitts30k.pth --rerank_num=100
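The role of rerank_num in a two-stage pipeline can be sketched in plain Python: global features rank the whole database, and only the top rerank_num candidates are re-ordered by local feature matching. This is a simplified illustration rather than the code in eval.py; the function names, the use of Euclidean distance, and scoring candidates by the number of mutual nearest-neighbor matches are assumptions.

```python
import math


def nearest(descriptor, others):
    """Index of the descriptor in `others` closest to `descriptor`."""
    return min(range(len(others)), key=lambda j: math.dist(descriptor, others[j]))


def mutual_matches(query_local, cand_local):
    """Count local features that are each other's nearest neighbor."""
    q2c = [nearest(d, cand_local) for d in query_local]
    c2q = [nearest(d, query_local) for d in cand_local]
    return sum(1 for i, j in enumerate(q2c) if c2q[j] == i)


def retrieve_and_rerank(query_global, query_local, db_global, db_local, rerank_num):
    """Rank the database by global distance, then re-rank the top candidates."""
    ranked = sorted(range(len(db_global)),
                    key=lambda i: math.dist(query_global, db_global[i]))
    head, tail = ranked[:rerank_num], ranked[rerank_num:]
    # More mutual matches means a better candidate; ties keep the global order.
    head.sort(key=lambda i: mutual_matches(query_local, db_local[i]), reverse=True)
    return head + tail
```

Because only the first rerank_num candidates are passed through local matching, the re-ranking cost grows roughly linearly with rerank_num, which is consistent with the runtime trade-off described above.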

The model finetuned on MSLS (for diverse scenes).

| DOWNLOAD | MSLS-val R@1 | MSLS-val R@5 | MSLS-val R@10 | Nordland-test R@1 | Nordland-test R@5 | Nordland-test R@10 | St. Lucia R@1 | St. Lucia R@5 | St. Lucia R@10 |
|---|---|---|---|---|---|---|---|---|---|
| LINK | 90.8 | 96.4 | 97.2 | 85.2 | 95.5 | 98.5 | 99.8 | 100.0 | 100.0 |
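The R@N columns report Recall@N, commonly defined as the percentage of queries for which at least one of the top N retrieved database images is a correct match. A minimal sketch of that metric, assuming ranked predictions and sets of correct database indices are already available (the function name `recall_at_n` and its inputs are hypothetical, not this repo's API):

```python
def recall_at_n(predictions, positives, n_values=(1, 5, 10)):
    """Percentage of queries with a correct match among the top-N results.

    predictions: one ranked list of database indices per query.
    positives:   one set of correct database indices per query.
    """
    hits = {n: 0 for n in n_values}
    for ranked, correct in zip(predictions, positives):
        for n in n_values:
            if any(index in correct for index in ranked[:n]):
                hits[n] += 1
    return {n: 100.0 * hits[n] / len(predictions) for n in n_values}


predictions = [[3, 1, 2], [0, 4, 5], [7, 8, 9], [2, 6, 0]]
positives = [{3}, {5}, {1}, {6}]
print(recall_at_n(predictions, positives, n_values=(1, 2, 3)))
# {1: 25.0, 2: 50.0, 3: 75.0}
```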

The model further finetuned on Pitts30k (only for urban scenes).

| DOWNLOAD | Tokyo24/7 R@1 | Tokyo24/7 R@5 | Tokyo24/7 R@10 | Pitts30k R@1 | Pitts30k R@5 | Pitts30k R@10 | Pitts250k R@1 | Pitts250k R@5 | Pitts250k R@10 |
|---|---|---|---|---|---|---|---|---|---|
| LINK | 94.0 | 96.8 | 97.5 | 92.8 | 96.8 | 97.7 | 95.7 | 98.8 | 99.2 |

Parts of this repo are inspired by the following repositories:

Visual Geo-localization Benchmark

If you find this repo useful for your research, please consider citing the paper

@inproceedings{selavpr,
  title={Towards Seamless Adaptation of Pre-trained Models for Visual Place Recognition},
  author={Lu, Feng and Zhang, Lijun and Lan, Xiangyuan and Dong, Shuting and Wang, Yaowei and Yuan, Chun},
  booktitle={The Twelfth International Conference on Learning Representations},
  year={2024}
}