MIT-licensed implementation of the Structured Volume Sampling technique, along with a simple framework for comparing it with other techniques.

Teaser video here.

Draft slides describing the latest approach are available here.

Shadertoy volume rendering demo: Mt3GWs

Shadertoy sampling diagram: ll3GWs

Contacts: Huw Bowles (@hdb1, huw dot bowles at gmail dot com), Daniel Zimmermann (daniel dot zimmermann at studiogobo dot com), Beibei Wang (bebei dot wang at gmail dot com)

Retweet to support this work: https://twitter.com/hdb1/status/769615284672028672

Analysis and thread by Mirko Salm: https://twitter.com/Mirko_Salm/status/1372365191481032705

Impressive application of this technique by Felix Westin (https://twitter.com/FewesW), in particular https://twitter.com/FewesW/status/1364935000790102019

Alternative implementation: https://github.com/gokselgoktas/structured-volume-sampling

Volume rendering in real-time applications is expensive, so sample counts are typically low. When the camera is inside the volume, the volume samples typically move with the camera, which can cause severe aliasing. This repository provides a new, fast, and simple algorithm that eliminates this aliasing in the camera-in-volume case.
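The aliasing problem can be illustrated with a small CPU-side sketch. It assumes, as a simplification, that the fix amounts to anchoring samples to planes fixed in world space instead of to distances measured from the camera; it uses a single family of parallel planes and is not the repository's shader code. The function names `camera_relative_samples` and `plane_aligned_samples` and their parameters are hypothetical.

```python
import math

def camera_relative_samples(origin, direction, step, count):
    # Samples at fixed distances t = (i + 1) * step from the camera. When the
    # camera moves, every sample point moves with it, so a static volume is
    # resampled at different world positions each frame (a source of aliasing).
    return [tuple(o + d * (i + 1) * step for o, d in zip(origin, direction))
            for i in range(count)]

def plane_aligned_samples(origin, direction, normal, spacing, count):
    # Samples where the ray crosses the fixed world-space planes
    # dot(p, normal) = k * spacing, for consecutive integers k ahead of the camera.
    dn = sum(d * n for d, n in zip(direction, normal))
    if abs(dn) < 1e-8:
        raise ValueError("ray is parallel to the sample planes")
    if dn < 0.0:
        # Flip the plane normal so that the plane index k increases along the ray.
        normal = tuple(-n for n in normal)
        dn = -dn
    on = sum(o * n for o, n in zip(origin, normal))
    k0 = math.floor(on / spacing) + 1  # first plane strictly in front of the camera
    samples = []
    for i in range(count):
        t = ((k0 + i) * spacing - on) / dn  # ray parameter at plane k0 + i
        samples.append(tuple(o + d * t for o, d in zip(origin, direction)))
    return samples
```

Moving the camera from z = 0 to z = 0.3 along +z shifts every camera-relative sample by 0.3, while the plane-aligned samples stay on the planes z = 1, 2, 3, 4. A single plane family breaks down for rays nearly parallel to the planes, which is one reason a practical scheme needs several plane orientations.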

This repository started as the source code for the talk A Novel Sampling Algorithm for Fast and Stable Real-Time Volume Rendering, given in the Advances in Real-Time Rendering in Games course at SIGGRAPH 2015 [1]. The full presentation (PPT) is available for download from the course page here. While it is useful reading, the latest implementation takes a new approach that supersedes most of the techniques introduced in the talk.

The latest approach, Structured Volume Sampling, works differently. See the algorithm notes below.

This is implemented as a Unity 5 project (last run on 5.6) and should "just work" without any setup steps. It should also be easy to port to other Unity versions.

The current test scenes are in the Scenes/ folder. Several volume sampling schemes are implemented for comparison and can be selected via the on-screen GUI.

All volume sampling methods and volumetric scenes are implemented in VolumeRender.shader as shader features. This is a quick and dirty way to associate shader code with Unity scenes while supporting different volume sampling approaches, without much shader code duplication.


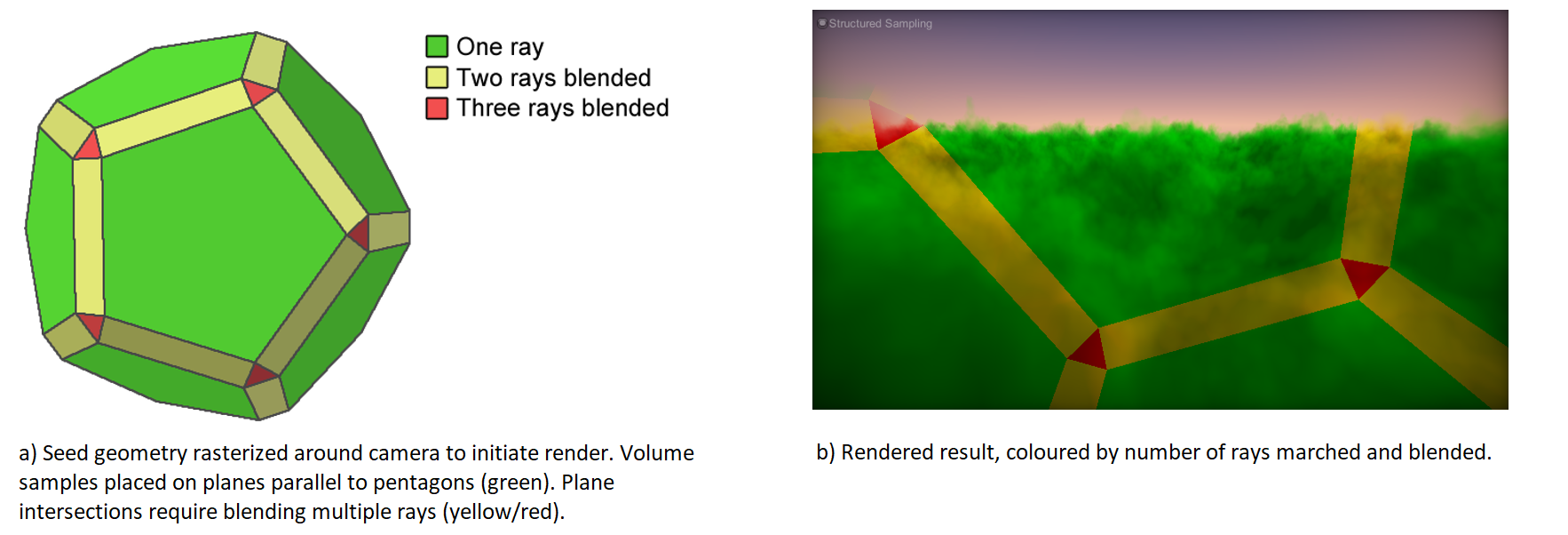

For a better understanding of the algorithm, it may help to enable the DEBUG_BEVEL define and experiment with the Bevel amount on the Platonic Solid Blend script. A GPU trace capture in Unity can also help visualize the dodecahedron.
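The role of the dodecahedron and the bevel can be sketched as follows, under loudly stated assumptions: each of the 12 dodecahedron face normals is taken as a candidate sample-plane orientation, and a ray blends between the faces it points through near edges and vertices. The linear bevel falloff in `blend_weights` is hypothetical and is not the formula used by the Platonic Solid Blend script; `dodecahedron_face_normals`, `blend_weights` and `bevel` are illustrative names.

```python
import math

PHI = (1.0 + math.sqrt(5.0)) / 2.0  # golden ratio

def dodecahedron_face_normals():
    # The 12 face normals of a regular dodecahedron point at the vertices of its
    # dual icosahedron: the cyclic permutations of (0, +-1, +-PHI), normalized.
    normals = []
    for s1 in (-1.0, 1.0):
        for s2 in (-1.0, 1.0):
            for v in ((0.0, s1, s2 * PHI), (s1, s2 * PHI, 0.0), (s2 * PHI, 0.0, s1)):
                length = math.sqrt(sum(c * c for c in v))
                normals.append(tuple(c / length for c in v))
    return normals

def blend_weights(direction, bevel=0.1):
    # Hypothetical bevelled blend (not the repository's formula): the face the
    # direction points through gets full weight, faces whose alignment is within
    # `bevel` of the best one fade in linearly, and weights are normalized to sum to 1.
    if bevel <= 0.0:
        raise ValueError("bevel must be positive")
    length = math.sqrt(sum(c * c for c in direction))
    d = [c / length for c in direction]
    dots = [sum(a * b for a, b in zip(d, n)) for n in dodecahedron_face_normals()]
    best = max(dots)
    raw = [min(1.0, max(0.0, (x - (best - bevel)) / bevel)) for x in dots]
    total = sum(raw)
    return {i: w / total for i, w in enumerate(raw) if w > 0.0}
```

A direction along a face normal gets a single face with weight 1, while a direction through a dodecahedron vertex such as (1, 1, 1), where three faces meet, splits its weight evenly between those three faces. A larger bevel widens the blend regions around edges and vertices.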

We hope to publish a full description of this technique soon. Stay tuned!

- The adaptive sampling method published here should be compatible with the new approach and could be reinstated.

- If enough of the code that samples the volume and computes the lighting is scalar (operating on floats/ints rather than vectors), it may make sense to vectorize the loop to compute 4 samples simultaneously.
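The vectorization idea can be sketched as a restructured ray-march loop. Python lists stand in for the 4-wide registers a shader would use, and `density`, `march_scalar` and `march_batched` are hypothetical names, not functions from VolumeRender.shader. The assumption is that evaluating the volume at one sample does not depend on the other samples, so four of them can be computed together, while the front-to-back blend stays a short sequential loop.

```python
import math

def density(t):
    # Hypothetical scalar density along the ray, standing in for the scene's volume.
    return 0.5 + 0.5 * math.sin(3.0 * t)

def march_scalar(ts, step):
    # Front-to-back compositing, one sample per iteration. Returns the accumulated
    # value (equal to opacity for unit emission) and the final transmittance.
    accum, transmittance = 0.0, 1.0
    for t in ts:
        alpha = 1.0 - math.exp(-density(t) * step)
        accum += transmittance * alpha
        transmittance *= 1.0 - alpha
    return accum, transmittance

def march_batched(ts, step, width=4):
    # Same arithmetic, restructured so the independent per-sample work (density
    # and alpha) is done `width` samples at a time, as a shader could do with a
    # float4. Only the cheap compositing stays sequential.
    accum, transmittance = 0.0, 1.0
    for start in range(0, len(ts), width):
        batch = ts[start:start + width]  # the last batch may be shorter
        alphas = [1.0 - math.exp(-density(t) * step) for t in batch]  # vectorizable part
        for alpha in alphas:  # sequential part: front-to-back blend
            accum += transmittance * alpha
            transmittance *= 1.0 - alpha
    return accum, transmittance
```

Both functions return identical values because the per-sample arithmetic and its order are unchanged; only the grouping differs. Whether the batched form pays off in a real shader depends on how much of the sampling and lighting code is scalar.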

[1] Bowles H. and Zimmermann D., A Novel Sampling Algorithm for Fast and Stable Real-Time Volume Rendering, Advances in Real-Time Rendering in Games course, SIGGRAPH 2015. Course page.