An Easy, Fast and Memory-Efficient System for DiT Training and Inference

- [2024/06] 🔥Propose Pyramid Attention Broadcast (PAB)[blog][doc], the first approach to achieve real-time DiT-based video generation, delivering negligible quality loss without requiring any training.

- [2024/06] Support Open-Sora-Plan and Latte.

- [2024/03] Propose Dynamic Sequence Parallel (DSP)[paper][doc], which achieves a 3x speedup for training and a 2x speedup for inference in Open-Sora compared with state-of-the-art sequence parallelism.

- [2024/03] Support Open-Sora: Democratizing Efficient Video Production for All.

- [2024/02] Release OpenDiT: An Easy, Fast and Memory-Efficient System for DiT Training and Inference.

OpenDiT is an open-source project that provides a high-performance implementation of Diffusion Transformer (DiT) powered by Colossal-AI, specifically designed to enhance the efficiency of training and inference for DiT applications, including text-to-video generation and text-to-image generation.

OpenDiT will continue to integrate more open-source DiT models and techniques. Stay tuned for upcoming enhancements and additional features!

Prerequisites:

- Python >= 3.10

- PyTorch >= 1.13 (we recommend a version above 2.0)

- CUDA >= 11.6
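The prerequisites above can be checked from Python before installing. The following is a minimal sketch, not part of OpenDiT: the helper names `parse_version`, `meets_minimum` and `check_environment` are hypothetical, and the CUDA value it reports is the version PyTorch was built against (`torch.version.cuda`), which may differ from the toolkit installed on the system.

```python
import re
import sys


def parse_version(text):
    """Turn a version string such as '2.1.0+cu118' into a tuple of ints, e.g. (2, 1, 0)."""
    # Drop local build suffixes such as '+cu118' before splitting on dots
    core = text.split("+")[0]
    parts = []
    for piece in core.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(found, required):
    """Return True if the version string `found` is at least `required`."""
    return parse_version(found) >= parse_version(required)


def check_environment():
    """Collect (name, found, required, ok) rows for the prerequisites listed above."""
    rows = []
    python_version = ".".join(str(n) for n in sys.version_info[:3])
    rows.append(("Python", python_version, "3.10", meets_minimum(python_version, "3.10")))
    try:
        import torch
    except ImportError:
        rows.append(("PyTorch", "not installed", "1.13", False))
        rows.append(("CUDA", "unknown", "11.6", False))
        return rows
    torch_version = str(torch.__version__)
    rows.append(("PyTorch", torch_version, "1.13", meets_minimum(torch_version, "1.13")))
    # CUDA version PyTorch was built with; None for CPU-only builds
    cuda = torch.version.cuda
    cuda_ok = bool(cuda) and meets_minimum(cuda, "11.6")
    rows.append(("CUDA", cuda or "not available", "11.6", cuda_ok))
    return rows


if __name__ == "__main__":
    for name, found, required, ok in check_environment():
        status = "OK" if ok else "NOT SATISFIED"
        print(f"{name}: found {found}, need >= {required}: {status}")
```

Running the script prints one line per prerequisite, so a missing or outdated component is visible before the install steps are attempted.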

We strongly recommend using Anaconda to create a new environment (Python >= 3.10) to run our examples:

conda create -n opendit python=3.10 -y

conda activate opendit

Install ColossalAI:

pip install colossalai==0.3.7

Install OpenDiT:

git clone https://github.com/NUS-HPC-AI-Lab/OpenDiT

cd OpenDiT

pip install -e .

OpenDiT supports many diffusion models with our various acceleration techniques, enabling these models to run faster and consume less memory.

You can find all available models and their supported acceleration techniques in the following table. Click Doc to see how to use them.

| Model | Train | Infer | Acceleration: DSP | Acceleration: PAB | Usage |
|---|---|---|---|---|---|
| Open-Sora [source] | 🟡 | ✅ | ✅ | ✅ | Doc |
| Open-Sora-Plan [source] | ❌ | ✅ | ✅ | ✅ | Doc |
| Latte [source] | ❌ | ✅ | ✅ | ✅ | Doc |
| DiT [source] | ✅ | ✅ | ❌ | ❌ | Doc |

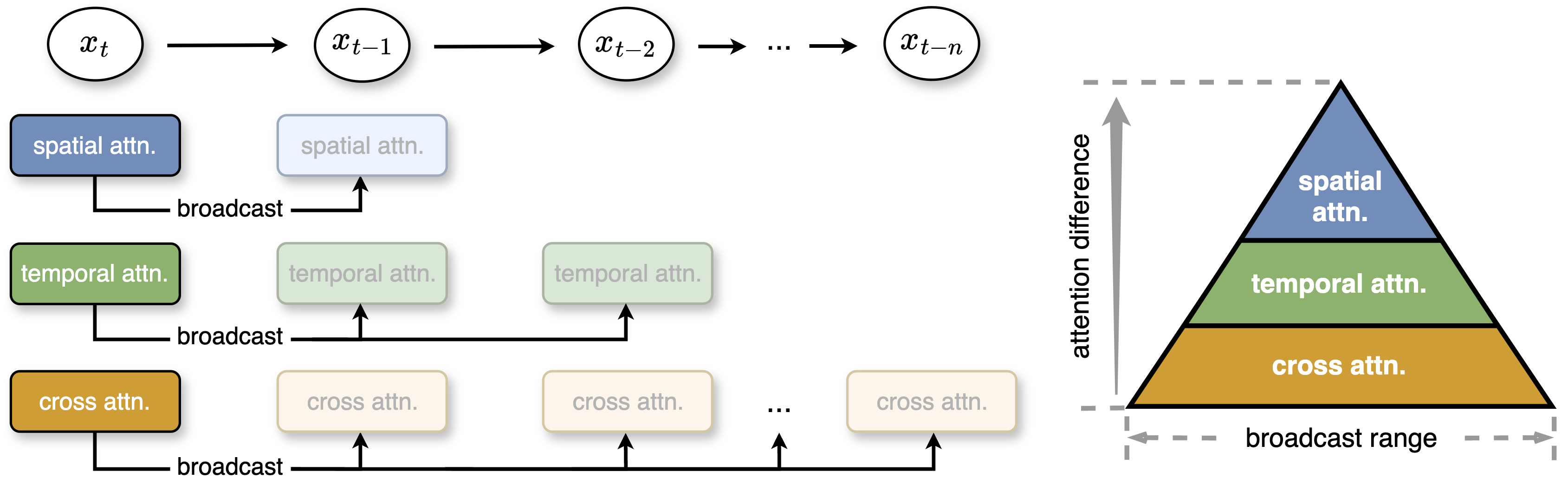

Real-Time Video Generation with Pyramid Attention Broadcast

Authors: Xuanlei Zhao¹*, Xiaolong Jin²*, Kai Wang¹*, and Yang You¹ (* indicates equal contribution)

¹National University of Singapore, ²Purdue University

PAB is the first approach to achieve real-time DiT-based video generation, delivering lossless quality without requiring any training. By mitigating redundant attention computation, PAB achieves up to 21.6 FPS with 10.6x acceleration, without sacrificing quality across popular DiT-based video generation models including Open-Sora, Latte and Open-Sora-Plan.

See its details here.
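To give an intuition for how redundant attention computation can be reduced, here is a minimal sketch of reusing ("broadcasting") a cached attention output across neighbouring diffusion steps. It is an illustration under stated assumptions, not OpenDiT's implementation or API: the class `BroadcastAttention`, the stand-in `toy_attention`, and the per-type broadcast ranges are all hypothetical and chosen only to make the idea concrete.

```python
class BroadcastAttention:
    """Wrap an attention function and reuse its output for `broadcast_range` consecutive steps."""

    def __init__(self, attention_fn, broadcast_range):
        if broadcast_range < 1:
            raise ValueError("broadcast_range must be at least 1")
        self.attention_fn = attention_fn
        self.broadcast_range = broadcast_range
        self.cached_output = None
        self.compute_count = 0

    def __call__(self, step, hidden_states):
        # Recompute at the first step of each window; otherwise reuse the cached output
        if self.cached_output is None or step % self.broadcast_range == 0:
            self.cached_output = self.attention_fn(hidden_states)
            self.compute_count += 1
        return self.cached_output


def toy_attention(hidden_states):
    # Stand-in for an expensive attention call (hypothetical, for illustration only)
    return [2.0 * x for x in hidden_states]


# Hypothetical broadcast ranges: a different reuse window per attention type
layers = {
    "spatial": BroadcastAttention(toy_attention, broadcast_range=2),
    "temporal": BroadcastAttention(toy_attention, broadcast_range=4),
    "cross": BroadcastAttention(toy_attention, broadcast_range=6),
}

num_steps = 12
for step in range(num_steps):
    hidden_states = [float(step), 1.0]
    for layer in layers.values():
        layer(step, hidden_states)

for name, layer in layers.items():
    print(f"{name}: {layer.compute_count} attention calls over {num_steps} steps")
```

In this toy run, a larger broadcast range means fewer attention calls over the same number of steps. Whether a cached output can be reused without visible quality loss depends on how similar attention outputs are between steps, which this sketch does not model.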

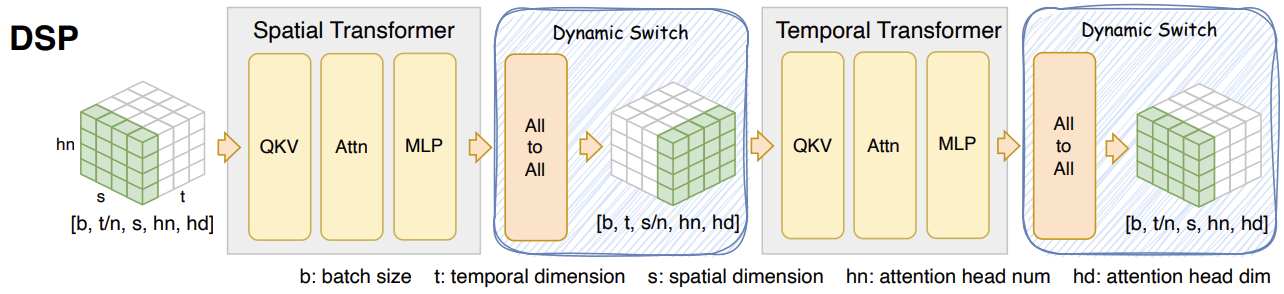

DSP is a novel, elegant and highly efficient sequence parallelism method for Open-Sora, Latte and other multi-dimensional transformer architectures.

It achieves a 3x speedup for training and a 2x speedup for inference in Open-Sora compared with state-of-the-art sequence parallelism (DeepSpeed Ulysses). For a 10s (80 frames) 512x512 video, the inference latency of Open-Sora is:

| Method | 1xH800 | 8xH800 (DS Ulysses) | 8xH800 (DSP) |
|---|---|---|---|
| Latency (s) | 106 | 45 | 22 |
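The speedup figures follow directly from the latencies in the table. The short calculation below uses only those three numbers; the variable and function names are illustrative.

```python
# Inference latency in seconds for a 10s (80 frames) 512x512 video, taken from the table above
latency_s = {
    "1xH800": 106,
    "8xH800 (DS Ulysses)": 45,
    "8xH800 (DSP)": 22,
}


def speedup(baseline, candidate):
    """Ratio of baseline latency to candidate latency (higher means the candidate is faster)."""
    return latency_s[baseline] / latency_s[candidate]


dsp_vs_ulysses = speedup("8xH800 (DS Ulysses)", "8xH800 (DSP)")
dsp_vs_single = speedup("1xH800", "8xH800 (DSP)")
ulysses_vs_single = speedup("1xH800", "8xH800 (DS Ulysses)")

print(f"DSP vs DS Ulysses on 8xH800: {dsp_vs_ulysses:.2f}x")   # 2.05x
print(f"DSP on 8xH800 vs 1xH800: {dsp_vs_single:.2f}x")        # 4.82x
print(f"DS Ulysses on 8xH800 vs 1xH800: {ulysses_vs_single:.2f}x")  # 2.36x
```

The first ratio, about 2.05x, corresponds to the 2x inference speedup over DeepSpeed Ulysses quoted above.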

See its details here.

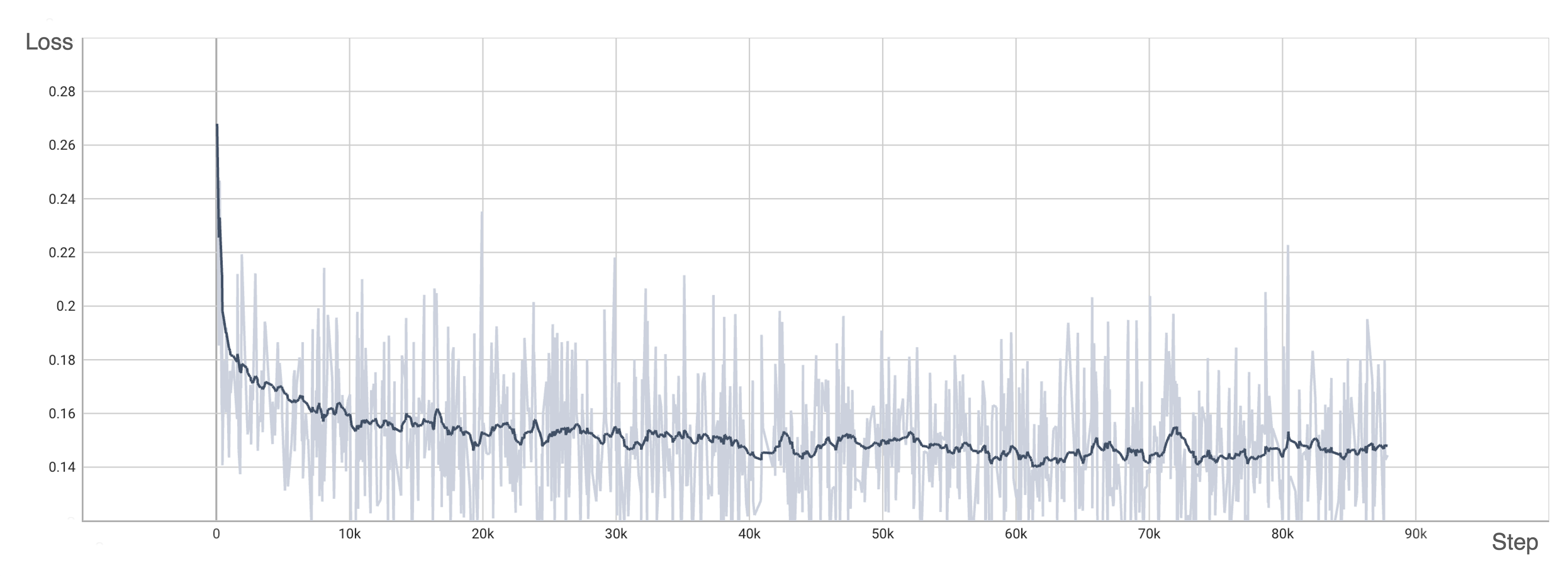

To verify accuracy, we trained DiT with OpenDiT using the original method. The model was trained from scratch on ImageNet for 80k steps on 8xA100. Here are some results generated by our trained DiT:

Our loss also aligns with the results listed in the paper:

To reproduce our results, you can follow our instructions.

Thanks to Xuanlei Zhao, Zhongkai Zhao, Ziming Liu, Haotian Zhou, Qianli Ma, Yang You, Xiaolong Jin, and Kai Wang for their contributions. We also extend our gratitude to Zangwei Zheng, Shenggan Cheng, Fuzhao Xue, Shizun Wang, Yuchao Gu, Shenggui Li, and Haofan Wang for their invaluable advice.

This codebase borrows from:

- Open-Sora: Democratizing Efficient Video Production for All.

- DiT: Scalable Diffusion Models with Transformers.

- PixArt: An open-source DiT-based text-to-image model.

- Latte: An attempt to efficiently train DiT for video.

If you encounter problems using OpenDiT or have a feature request, feel free to create an issue! We also welcome pull requests from the community.

@misc{zhao2024opendit,
  author = {Xuanlei Zhao and Zhongkai Zhao and Ziming Liu and Haotian Zhou and Qianli Ma and Yang You},
  title = {OpenDiT: An Easy, Fast and Memory-Efficient System for DiT Training and Inference},
  year = {2024},
  publisher = {GitHub},
  journal = {GitHub repository},
  howpublished = {\url{https://github.com/NUS-HPC-AI-Lab/OpenDiT}},
}

@misc{zhao2024dsp,
  title={DSP: Dynamic Sequence Parallelism for Multi-Dimensional Transformers},
  author={Xuanlei Zhao and Shenggan Cheng and Zangwei Zheng and Zheming Yang and Ziming Liu and Yang You},
  year={2024},
  eprint={2403.10266},
  archivePrefix={arXiv},
  primaryClass={cs.DC}
}