Jetbot_OD or Jetbot Object Detection

For this repo to work, please:

- Download jetbot_diff_drive to your catkin_ws/src folder

- Change into the jetbot_diff_drive folder

- Execute the command git checkout cd8f47e in the terminal

- Now download this repository inside catkin_ws/src

- Move to catkin_ws and run catkin_make

- You can now continue with this repository and it will work
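The steps above can be sketched as a small shell function. This is only a sketch under assumptions: the jetbot_diff_drive URL is the one used in the clone command further down this README, the second argument (the URL of this repository) is a placeholder you must supply yourself, and the helper names `run` and `setup_jetbot_od` are illustrative. Setting `DRY_RUN=1` prints the commands instead of executing them.

```shell
# Run a command, or only print it when DRY_RUN=1 (illustrative helper).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Usage: setup_jetbot_od <workspace> <url-of-this-repository>
# The second argument is a placeholder; this sketch does not assume the URL.
setup_jetbot_od() {
  ws="$1"
  od_url="$2"
  if [ -z "$ws" ] || [ -z "$od_url" ]; then
    echo "usage: setup_jetbot_od <workspace> <url-of-this-repository>" >&2
    return 1
  fi
  run mkdir -p "$ws/src" || return 1
  # Download jetbot_diff_drive and pin it to the commit named in the steps above.
  run git -C "$ws/src" clone https://github.com/issaiass/jetbot_diff_drive || return 1
  run git -C "$ws/src/jetbot_diff_drive" checkout cd8f47e || return 1
  # Download this repository next to it, then build the whole workspace.
  run git -C "$ws/src" clone "$od_url" || return 1
  run catkin_make -C "$ws"
}
```

For example, `DRY_RUN=1 setup_jetbot_od ~/catkin_ws <url-of-this-repository>` prints the five commands so you can review them before running them for real.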

Brief Review

This project is an implementation of YOLOv3 object detection using OpenCV, the ROS cv_bridge and nodelets to perform simple inference.

The YOLO implementation is based on code from Satya Mallick's GitHub repository.

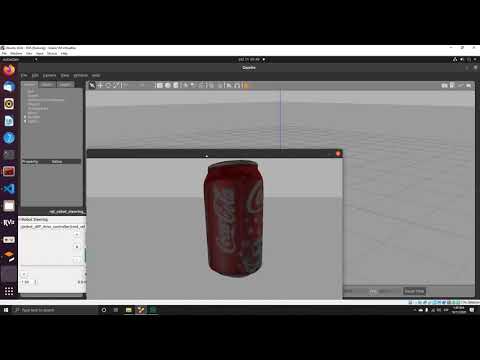

Below an example image of the outcome.

The project tree:

The application works as follows once jetbot_diff_drive has been loaded and this package has been loaded after it:

- After you load the nodelet you have to wait a few seconds

- An image of the coke_can will pop up

- Next you have to rotate the robot to see the soccer ball using the controls

- Wait a few seconds to see the inference.

- You can also use dynamic reconfigure to play with the inferences
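For the dynamic reconfigure step, the parameters can usually be inspected with the standard ROS tools. A minimal sketch, assuming a ROS 1 environment is sourced and the simulation and nodelet are already running; the node and parameter names depend on the launch file and are not assumed here, and `show_reconfigure_nodes` is an illustrative helper name.

```shell
# List the nodes that expose dynamic_reconfigure parameters (illustrative helper).
show_reconfigure_nodes() {
  if ! command -v rosrun >/dev/null 2>&1; then
    echo "rosrun not found: source your ROS setup.bash first" >&2
    return 1
  fi
  # dynparam is the command-line tool shipped with the dynamic_reconfigure package.
  rosrun dynamic_reconfigure dynparam list
}
```

To change values interactively, `rosrun rqt_reconfigure rqt_reconfigure` opens a GUI for the same parameters, provided rqt_reconfigure is installed.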

Using Jetbot Object Detection Package

- You need to have the jetbot_diff_drive package

- Also, there are other prerequisites like:

- cv_bridge

- image_transport

- nodelet

- roscpp

- std_msgs

- dynamic_reconfigure

- For YOLO to run inference, download the weights file into the jetbot_od/object_detection/yolo folder:

wget https://pjreddie.com/media/files/yolov3.weights

- The yolo.cfg and coco.names files are supplied pre-configured in that folder; you do not need to download them.
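A quick check that the model files are in place before launching may save a failed start. A minimal sketch, assuming the three file names mentioned above (`yolov3.weights` from the wget URL, plus `yolo.cfg` and `coco.names`); `check_yolo_files` is an illustrative helper, not part of the package, and it only tests that each file exists and is non-empty, not that the download is intact.

```shell
# Report which of the three YOLO files are missing or empty in a directory.
# Usage: check_yolo_files <path-to>/jetbot_od/object_detection/yolo
check_yolo_files() {
  dir="$1"
  missing=0
  for f in yolov3.weights yolo.cfg coco.names; do
    # -s is true only when the file exists and has a size greater than zero.
    if [ ! -s "$dir/$f" ]; then
      echo "missing or empty: $dir/$f" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

The function returns 0 when all three files are present, so it can guard a launch: `check_yolo_files ~/catkin_ws/src/jetbot_od/object_detection/yolo && roslaunch jetbot_od yolo.launch` (the path shown assumes the workspace layout used in this README).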

Next:

- Create a ROS workspace and compile it while it is still empty:

cd ~

mkdir -p catkin_ws/src

cd catkin_ws

catkin_make

- Open the .bashrc with nano:

nano ~/.bashrc

- Insert this line at the end of the ~/.bashrc file for sourcing your workspace:

source ~/catkin_ws/devel/setup.bash
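If you prefer not to edit the file by hand, the same line can be appended from the terminal. A minimal sketch that avoids adding the line twice; `append_once` is an illustrative helper name.

```shell
# Append a line to a file only if that exact line is not already present.
# Usage: append_once <line> <file>
append_once() {
  line="$1"
  file="$2"
  # -x matches whole lines, -F treats the text literally, -q suppresses output.
  if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$file"
  fi
}
```

For this README that would be `append_once 'source ~/catkin_ws/devel/setup.bash' ~/.bashrc`, followed by opening a new terminal so the change takes effect.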

- Clone this repo in the ~/catkin_ws/src folder by typing:

cd ~/catkin_ws/src

git clone https://github.com/issaiass/jetbot_diff_drive

rm -rf README.md

rm -rf imgs

- Go to the root folder ~/catkin_ws and build the workspace by running catkin_make to ensure the application compiles.

- Finally, launch the application by running these commands in order, each in its own terminal:

roslaunch jetbot_diff_drive jetbot_rviz.launch

roslaunch jetbot_od yolo.launch

- You should see roscore and all configurations loading successfully.

- When everything has finished loading, you should see gazebo and rviz open, with the jetbot displaying a coke can in rviz, and also a soccer ball model that is supplied in the jetbot_diff_drive package.

- Next, with the controller or the gazebo toolbar, rotate the jetbot until it has the soccer ball in front.

- Wait until the neural network detects the ball. This can take some time (2-4 seconds), depending on the speed of your computer; the result also depends on the simulator illumination.

Video Explanation

I will try my best to make an explanatory video of the application, as in this YouTube video.

Issues

- None, but note that the implementation runs only on the CPU; you will have to adapt the code to add GPU support.

Future Work

Planning to add to this project:

- OpenVINO for inference, to speed it up.

Contributing

Your contributions are always welcome! Please feel free to fork and modify the content, but remember to open a pull request when you are done.