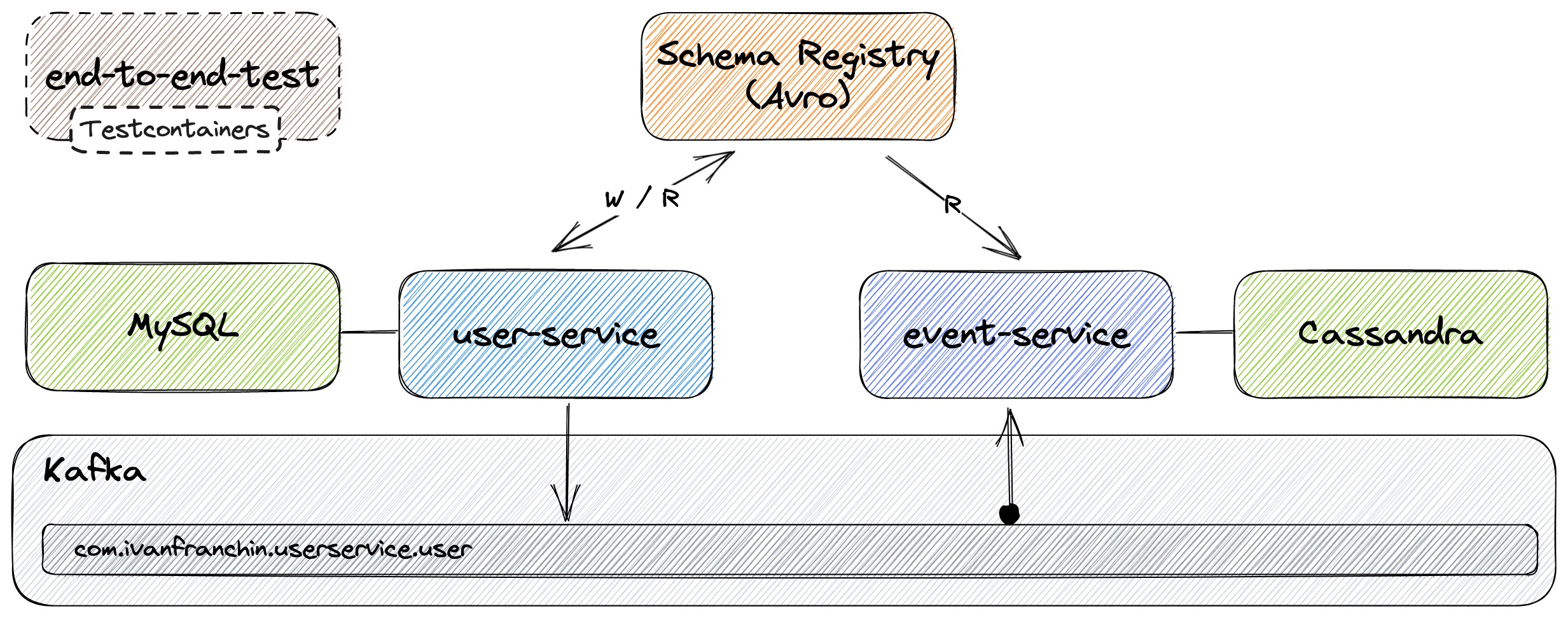

The goal of this project is to create a Spring Boot application that handles users using Event Sourcing. So, besides the traditional create/update/delete operations, whenever a user is created, updated, or deleted, an event describing the change is sent to Kafka. Furthermore, we will implement another Spring Boot application that listens to those events and saves them in Cassandra. Finally, we will use Testcontainers for end-to-end testing.

Note: The `kubernetes-minikube-environment` repository shows how to deploy this project in `Kubernetes` (`Minikube`).

On ivangfr.github.io, I have compiled my Proof-of-Concepts (PoCs) and articles. You can easily search for the technology you are interested in by using the filter. Who knows, perhaps I have already implemented a PoC or written an article about what you are looking for.

- [Medium] Implementing a Kafka Producer and Consumer using Spring Cloud Stream

- [Medium] Implementing Unit Tests for a Kafka Producer and Consumer that uses Spring Cloud Stream

- [Medium] Implementing End-to-End testing for a Kafka Producer and Consumer that uses Spring Cloud Stream

- [Medium] Configuring Distributed Tracing with Zipkin in a Kafka Producer and Consumer that uses Spring Cloud Stream

- [Medium] Using Cloudevents in a Kafka Producer and Consumer that uses Spring Cloud Stream

- **user-service**

  `Spring Boot` Web Java application responsible for handling users. The user information is stored in `MySQL`. Once a user is created, updated, or deleted, an event is sent to `Kafka`.

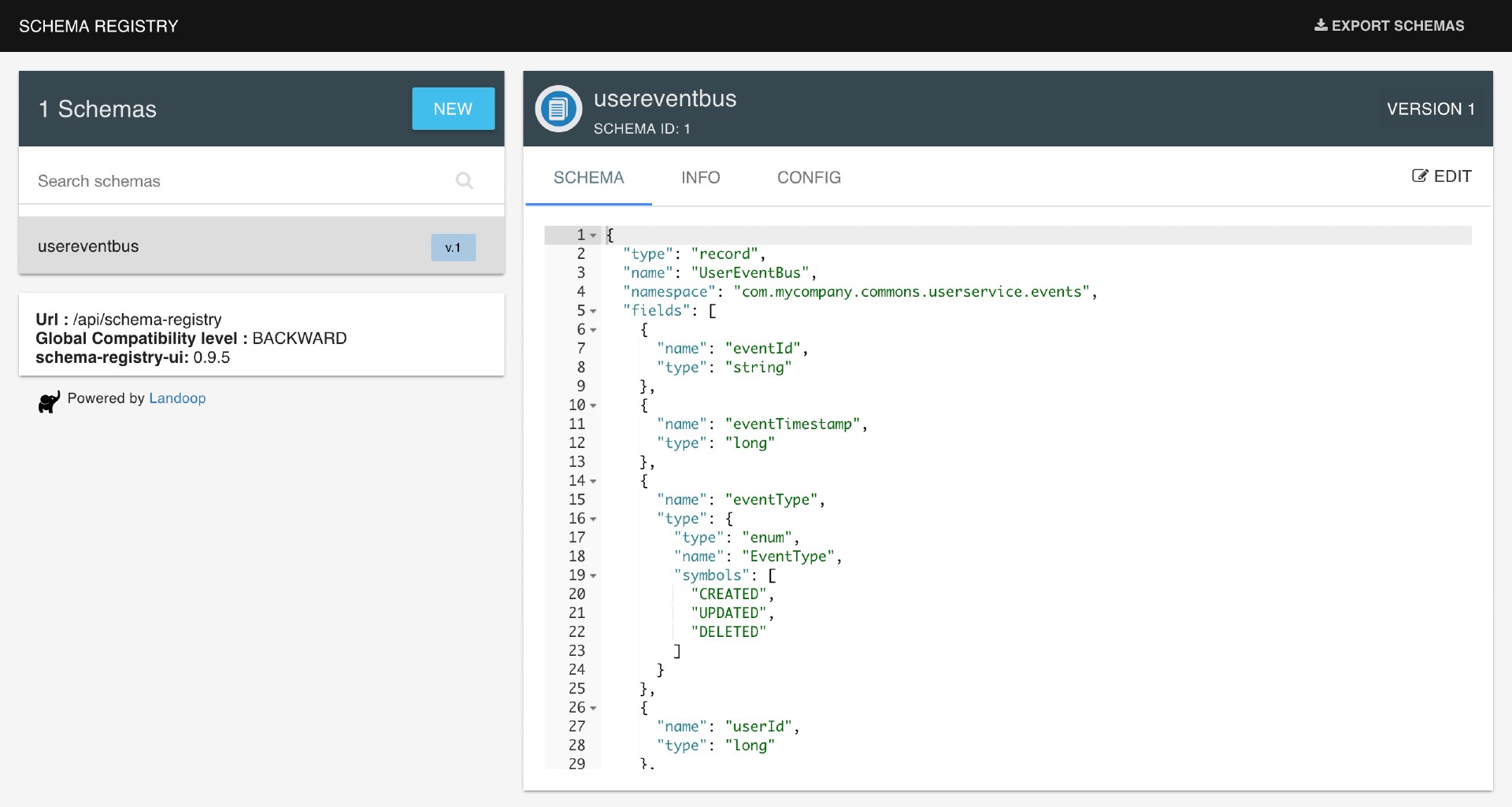

  - **Serialization format**

    `user-service` can use `JSON` or `Avro` to serialize data to the `binary` format used by `Kafka`. If we choose `Avro`, both services benefit from the `Schema Registry` that runs as a Docker container. The serialization format is selected by the value of the environment variable `SPRING_PROFILES_ACTIVE`.

    | Configuration | Format |
    |---|---|
    | `SPRING_PROFILES_ACTIVE=default` | `JSON` |
    | `SPRING_PROFILES_ACTIVE=avro` | `Avro` |

- **event-service**

  `Spring Boot` Web Java application responsible for listening to events from `Kafka` and saving them in `Cassandra`.

  - **Deserialization**

    Unlike `user-service`, `event-service` has no specific Spring profile to select the deserialization format. `Spring Cloud Stream` provides a stack of `MessageConverters` that handle the conversion of many content-types, including `application/json`. Besides, as `event-service` has a `SchemaRegistryClient` bean registered, `Spring Cloud Stream` auto-configures an Apache Avro message converter for schema management.

    In order to handle different content-types, `Spring Cloud Stream` has a "content-type negotiation and transformation" strategy (see the `Spring Cloud Stream` documentation). The order of precedence is: first, the content-type present in the message header; second, the content-type defined in the binding; and finally, `application/json` (the default).

    The producer (in this case, `user-service`) always sets the content-type in the message header. The content-type can be `application/json` or `application/*+avro`, depending on the `SPRING_PROFILES_ACTIVE` value with which `user-service` is started.
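
The precedence described above (message header, then binding, then the `application/json` default) can be illustrated with a small plain-Java sketch. This is not the `Spring Cloud Stream` implementation, which works through its message converters; the class `ContentTypeResolver` and the method `resolve` are hypothetical names, and the header key `contentType` is an assumption made for the illustration.

```java
import java.util.Map;
import java.util.Optional;

// Hypothetical helper that only models the precedence order, not the framework itself.
public class ContentTypeResolver {

    // Fallback used when neither the header nor the binding defines a content-type.
    public static final String DEFAULT_CONTENT_TYPE = "application/json";

    // Assumed header key for this sketch.
    public static final String CONTENT_TYPE_HEADER = "contentType";

    public static String resolve(Map<String, String> headers, String bindingContentType) {
        return Optional.ofNullable(headers.get(CONTENT_TYPE_HEADER)) // 1. message header
                .or(() -> Optional.ofNullable(bindingContentType))   // 2. binding configuration
                .orElse(DEFAULT_CONTENT_TYPE);                       // 3. default
    }

    public static void main(String[] args) {
        // Header wins over the binding.
        System.out.println(resolve(Map.of(CONTENT_TYPE_HEADER, "application/*+avro"), "application/json"));
        // No header: the binding value is used.
        System.out.println(resolve(Map.of(), "application/*+avro"));
        // Neither is set: the default applies.
        System.out.println(resolve(Map.of(), null));
    }
}
```

Because `user-service` always sets the header, the first branch is the one that applies to the messages consumed by `event-service` in this project.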

  - **Java classes from Avro Schema**

    Run the following command in the `spring-cloud-stream-event-sourcing-testcontainers` root folder. It re-generates the Java classes from the Avro schema present at `event-service/src/main/resources/avro`.

        ./mvnw compile --projects event-service

- **end-to-end-test**

  `Spring Boot` Web Java application used to perform end-to-end tests on `user-service` and `event-service`. It uses `Testcontainers`, which automatically starts the `Zookeeper`, `Kafka`, `MySQL`, `Cassandra`, `user-service` and `event-service` Docker containers before the tests begin and shuts them down when the tests finish.

- In a terminal, inside the `spring-cloud-stream-event-sourcing-testcontainers` root folder, run:

      docker compose up -d

- Wait for the Docker containers to be up and running. To check their status, run:

      docker compose ps

- **user-service**

  - In a terminal, make sure you are inside the `spring-cloud-stream-event-sourcing-testcontainers` root folder;

  - To run the application, you can pick between `JSON` or `Avro`:

    - Using `JSON`

          ./mvnw clean spring-boot:run --projects user-service

    - Using `Avro`

          ./mvnw clean spring-boot:run --projects user-service -Dspring-boot.run.profiles=avro

- **event-service**

  - In a new terminal, make sure you are inside the `spring-cloud-stream-event-sourcing-testcontainers` root folder;

  - Run the following command:

        ./mvnw clean spring-boot:run --projects event-service

- In a terminal, make sure you are inside the `spring-cloud-stream-event-sourcing-testcontainers` root folder;

- Run the following script to build the Docker images:

  - JVM

        ./docker-build.sh

  - Native (not implemented yet)

        ./docker-build.sh native

- **user-service**

  | Environment Variable | Description |
  |---|---|
  | `MYSQL_HOST` | Specify host of the `MySQL` database to use (default `localhost`) |
  | `MYSQL_PORT` | Specify port of the `MySQL` database to use (default `3306`) |
  | `KAFKA_HOST` | Specify host of the `Kafka` message broker to use (default `localhost`) |
  | `KAFKA_PORT` | Specify port of the `Kafka` message broker to use (default `29092`) |
  | `SCHEMA_REGISTRY_HOST` | Specify host of the `Schema Registry` to use (default `localhost`) |
  | `SCHEMA_REGISTRY_PORT` | Specify port of the `Schema Registry` to use (default `8081`) |
  | `ZIPKIN_HOST` | Specify host of the `Zipkin` distributed tracing system to use (default `localhost`) |
  | `ZIPKIN_PORT` | Specify port of the `Zipkin` distributed tracing system to use (default `9411`) |

- **event-service**

  | Environment Variable | Description |
  |---|---|
  | `CASSANDRA_HOST` | Specify host of the `Cassandra` database to use (default `localhost`) |
  | `CASSANDRA_PORT` | Specify port of the `Cassandra` database to use (default `9042`) |
  | `KAFKA_HOST` | Specify host of the `Kafka` message broker to use (default `localhost`) |
  | `KAFKA_PORT` | Specify port of the `Kafka` message broker to use (default `29092`) |
  | `SCHEMA_REGISTRY_HOST` | Specify host of the `Schema Registry` to use (default `localhost`) |
  | `SCHEMA_REGISTRY_PORT` | Specify port of the `Schema Registry` to use (default `8081`) |
  | `ZIPKIN_HOST` | Specify host of the `Zipkin` distributed tracing system to use (default `localhost`) |
  | `ZIPKIN_PORT` | Specify port of the `Zipkin` distributed tracing system to use (default `9411`) |
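
To make the "variable with a default" behavior of the tables above concrete, here is a minimal plain-Java sketch. In a Spring Boot application this is normally expressed with property placeholders such as `${KAFKA_HOST:localhost}`; how this project wires it is not shown here, so the class `EnvDefaults` and its methods are hypothetical and only mirror the documented defaults for `KAFKA_HOST` and `KAFKA_PORT`.

```java
import java.util.Map;

// Hypothetical helper; it only mirrors the defaults listed in the tables above.
public class EnvDefaults {

    /** Returns the variable's value from env, or the fallback if it is unset or blank. */
    public static String valueOrDefault(Map<String, String> env, String name, String fallback) {
        String value = env.get(name);
        return (value == null || value.isBlank()) ? fallback : value;
    }

    /** Builds the Kafka broker address from KAFKA_HOST and KAFKA_PORT. */
    public static String kafkaBootstrapServers(Map<String, String> env) {
        return valueOrDefault(env, "KAFKA_HOST", "localhost")
                + ":" + valueOrDefault(env, "KAFKA_PORT", "29092");
    }

    public static void main(String[] args) {
        // Uses the real process environment; prints the defaults when nothing is exported.
        System.out.println(kafkaBootstrapServers(System.getenv()));
    }
}
```

Passing the environment as a `Map` keeps the lookup easy to exercise with different values without changing the real process environment.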

- In a terminal, make sure you are inside the `spring-cloud-stream-event-sourcing-testcontainers` root folder;

- To run the applications' Docker containers, you can pick between `JSON` or `Avro`:

  - Using `JSON`

        ./start-apps.sh

  - Using `Avro`

        ./start-apps.sh avro

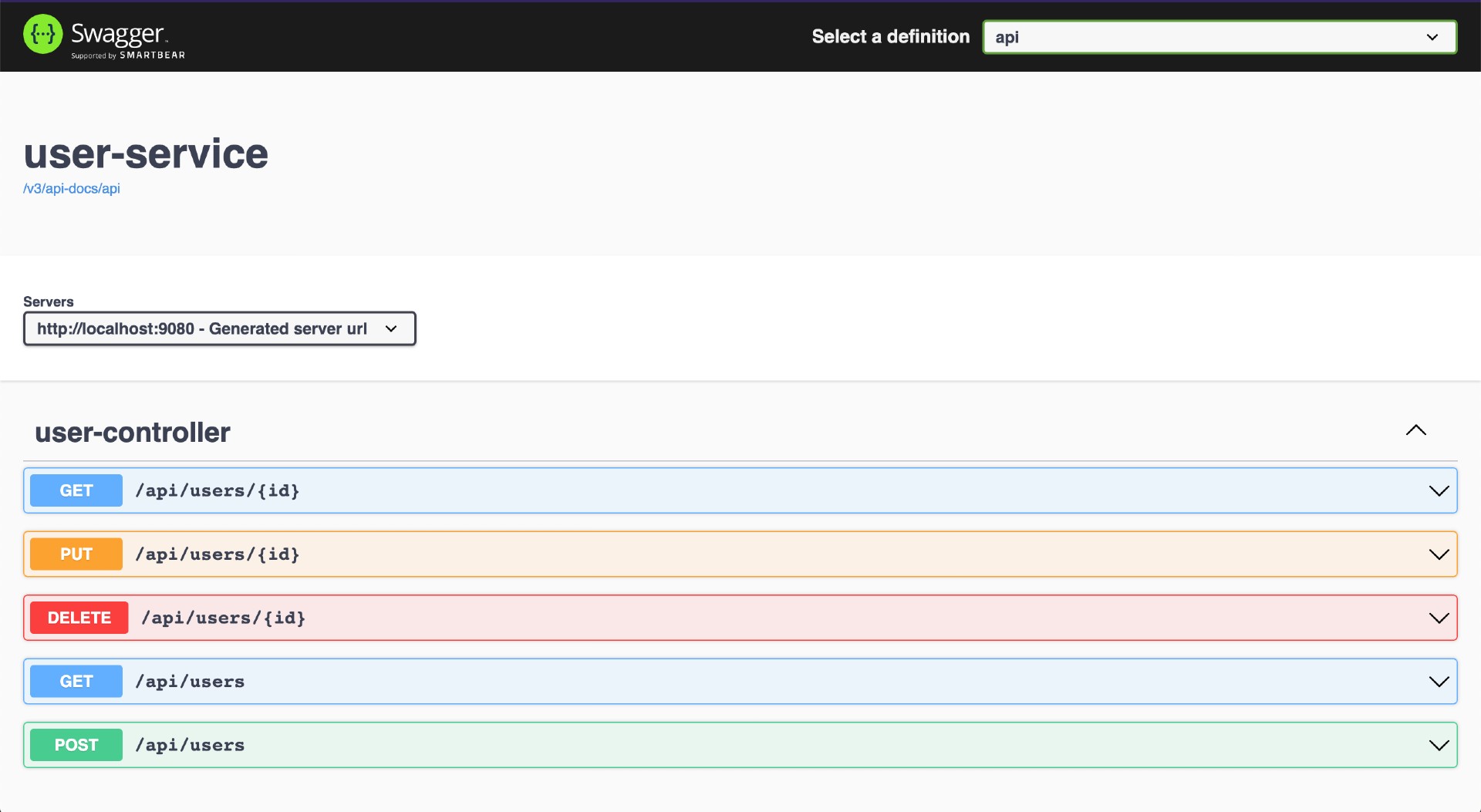

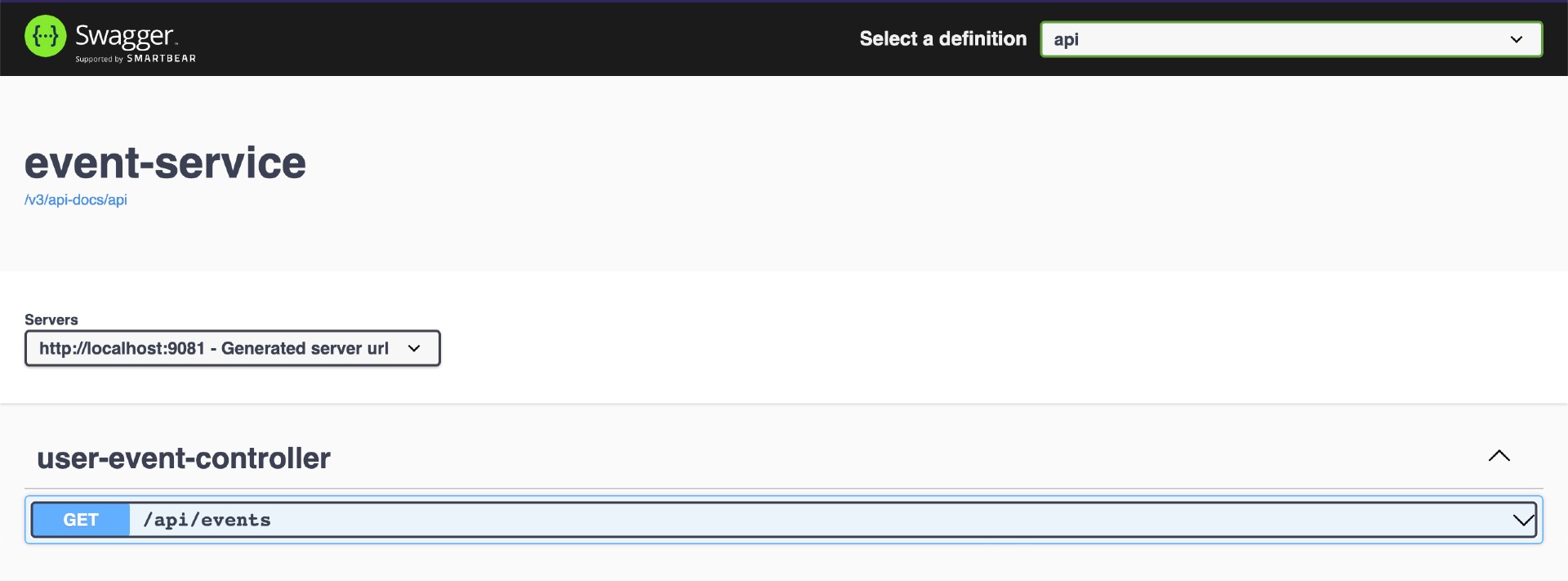

| Application | URL |
|---|---|
| user-service | http://localhost:9080/swagger-ui.html |
| event-service | http://localhost:9081/swagger-ui.html |

- Create a user:

      curl -i -X POST localhost:9080/api/users \
        -H "Content-Type: application/json" \
        -d '{"email":"ivan.franchin@test.com","fullName":"Ivan Franchin","active":true}'

- Check whether the event related to the user creation was received by `event-service`:

      curl -i "localhost:9081/api/events?userId=1"

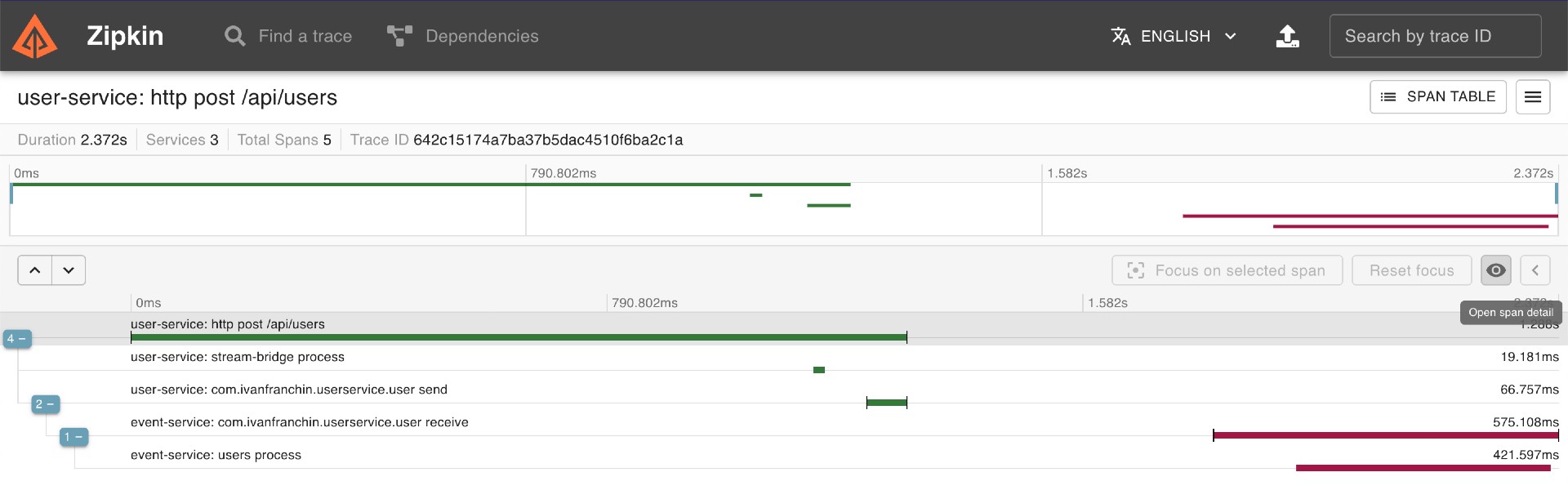

- You can check the traces in `Zipkin` at http://localhost:9411.

- Access `user-service` and create new users and/or update/delete existing ones. Then, access the `event-service` Swagger website to validate whether the events were sent correctly.
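
The two `curl` calls in the steps above can also be issued from Java with the JDK's built-in `java.net.http` client. The sketch below is only an illustration: the class `UserApiRequests` and its methods are hypothetical names, the endpoints and payload are the ones used in the `curl` commands, and `userId=1` assumes the created user received the id `1`.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Hypothetical client-side helper mirroring the curl commands above.
public class UserApiRequests {

    /** Builds the POST request that creates a user in user-service. */
    public static HttpRequest createUser(String baseUrl, String userJson) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/api/users"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(userJson))
                .build();
    }

    /** Builds the GET request that lists the events of a user in event-service. */
    public static HttpRequest eventsOfUser(String baseUrl, long userId) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/api/events?userId=" + userId))
                .GET()
                .build();
    }

    public static void main(String[] args) throws Exception {
        HttpClient client = HttpClient.newHttpClient();
        String json = "{\"email\":\"ivan.franchin@test.com\",\"fullName\":\"Ivan Franchin\",\"active\":true}";

        // Requires user-service (port 9080) and event-service (port 9081) to be running.
        HttpResponse<String> created = client.send(
                createUser("http://localhost:9080", json), HttpResponse.BodyHandlers.ofString());
        System.out.println(created.statusCode() + " " + created.body());

        HttpResponse<String> events = client.send(
                eventsOfUser("http://localhost:9081", 1L), HttpResponse.BodyHandlers.ofString());
        System.out.println(events.statusCode() + " " + events.body());
    }
}
```

Since the event travels through `Kafka` asynchronously, the second request may return before `event-service` has stored the event; repeating it after a short wait is a reasonable approach.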

- **MySQL**

      docker exec -it -e MYSQL_PWD=secret mysql mysql -uroot --database userdb
      SELECT * FROM users;

  Type `exit` to leave the `MySQL Monitor`.

- **Cassandra**

      docker exec -it cassandra cqlsh
      USE ivanfranchin;
      SELECT * FROM user_events;

  Type `exit` to leave the `CQL shell`.

- **Zipkin**

  `Zipkin` can be accessed at http://localhost:9411

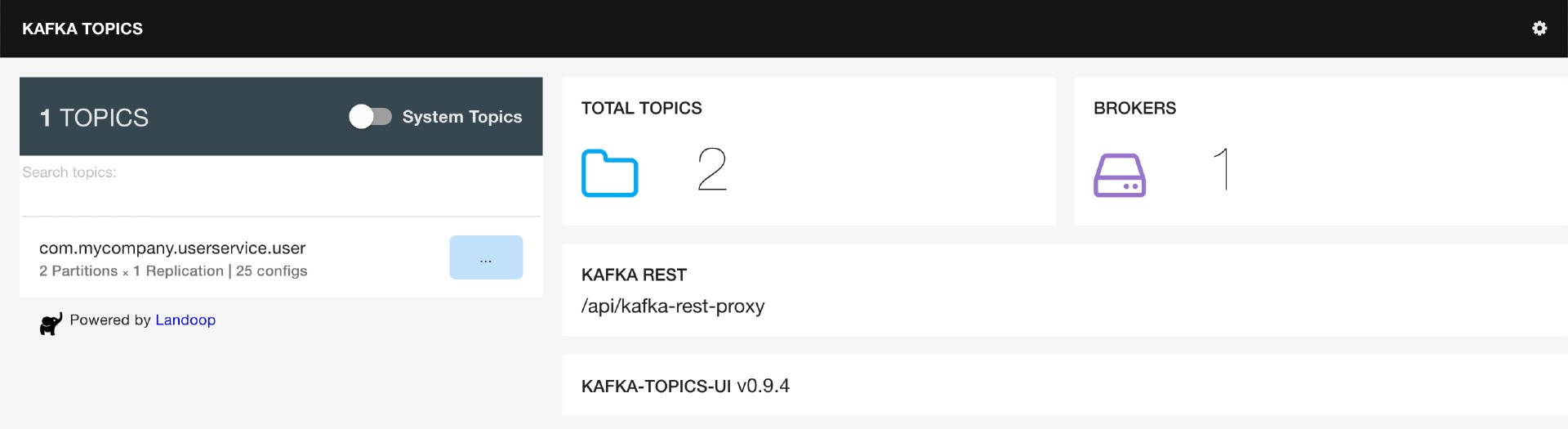

- **Kafka Topics UI**

  `Kafka Topics UI` can be accessed at http://localhost:8085

- **Schema Registry UI**

  `Schema Registry UI` can be accessed at http://localhost:8001

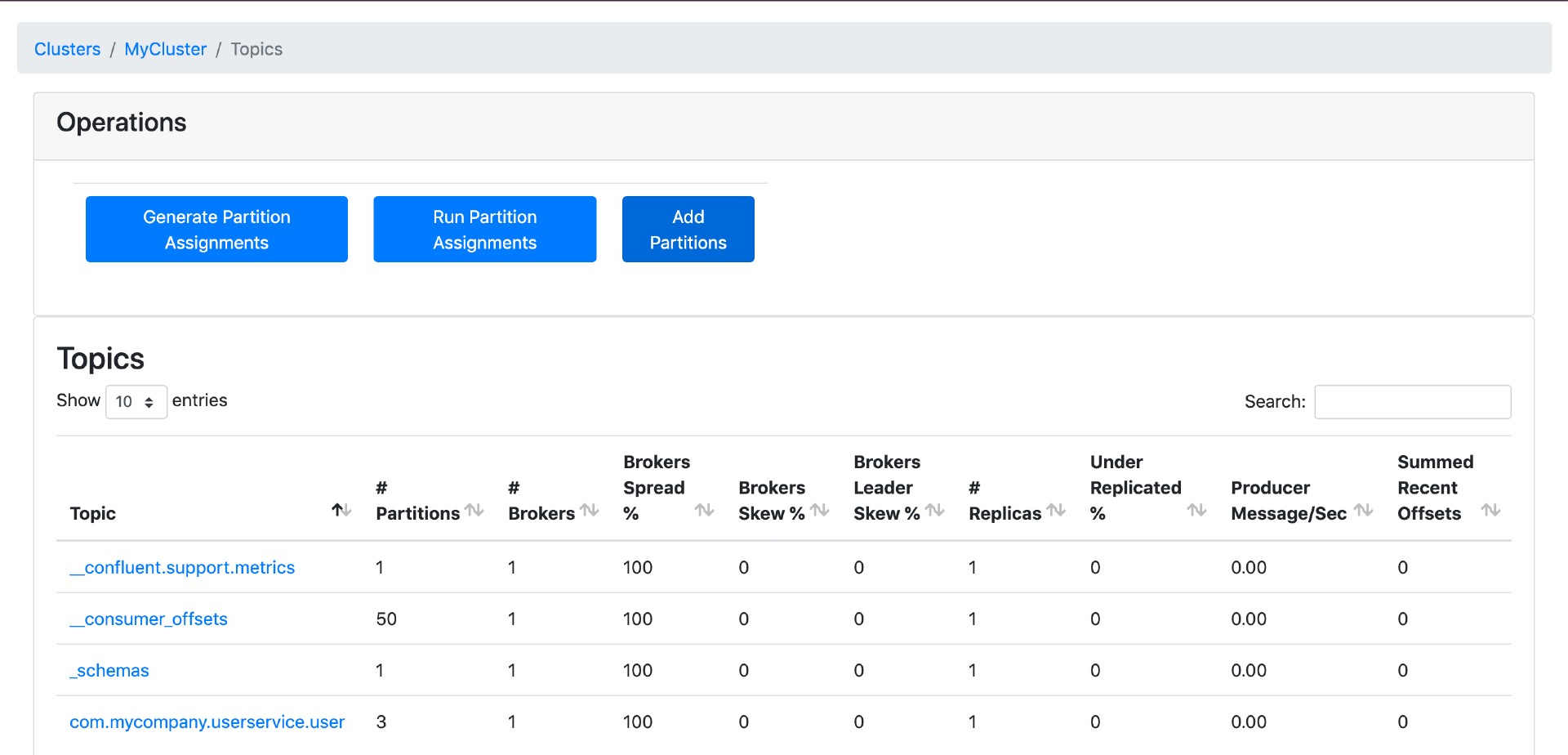

- **Kafka Manager**

  `Kafka Manager` can be accessed at http://localhost:9000

  **Configuration**

  - First, you must create a new cluster. Click on `Cluster` (dropdown button on the header) and then on `Add Cluster`
  - Type the name of your cluster in the `Cluster Name` field, for example: `MyCluster`
  - Type `zookeeper:2181` in the `Cluster Zookeeper Hosts` field
  - Enable the checkbox `Poll consumer information (Not recommended for large # of consumers if ZK is used for offsets tracking on older Kafka versions)`
  - Click on the `Save` button at the bottom of the page.

  The image below shows the topics present in Kafka, including the topic `com.ivanfranchin.userservice.user` with `3` partitions.

- Stop applications

  - If they were started with `Maven`, go to the terminals where they are running and press `Ctrl+C`;
  - If they were started as Docker containers, run the script below:

        ./stop-apps.sh

- To stop and remove the docker compose containers, networks and volumes, make sure you are inside the `spring-cloud-stream-event-sourcing-testcontainers` root folder and run:

      docker compose down -v

- **event-service**

  - Run the command below to start the Unit Tests

    Note: `Testcontainers` automatically starts the `Cassandra` Docker container before some tests begin and shuts it down when the tests finish.

        ./mvnw clean test --projects event-service

- **user-service**

  - Run the command below to start the Unit Tests

        ./mvnw clean test --projects user-service

  - Run the command below to start the End-To-End Tests

    Warning: Make sure you have the `user-service` and `event-service` Docker images.

    Note: `Testcontainers` automatically starts the `Zookeeper`, `Kafka`, `MySQL`, `Cassandra`, `user-service` and `event-service` Docker containers before the tests begin and shuts them down when the tests finish.

    - Using `JSON`

          ./mvnw clean test --projects end-to-end-test -DargLine="-Dspring.profiles.active=test"

    - Using `Avro`

          ./mvnw clean test --projects end-to-end-test -DargLine="-Dspring.profiles.active=test,avro"

To remove the Docker images created by this project, go to a terminal and, inside the `spring-cloud-stream-event-sourcing-testcontainers` root folder, run the following script:

    ./remove-docker-images.sh