Report | Presentation | Video

Authors: Jan Wiegner, Rudolf Varga, Felix Pfreundtner, Daniil Emtsev and Utkarsh Bajpai

Project for the course Mixed Reality Lab 2020 at ETH.

Check out the report and video linked above for more detailed information.

Learning complex movements can be a time-consuming process, but it is necessary for the mastery of activities like Karate Kata, Yoga and dance choreographies. It is important to have a teacher to demonstrate the correct pose sequences step by step and correct errors in the student’s body postures. In-person sessions can be impractical due to epidemics or travel distance, while videos make it hard to see the 3D postures of the teacher and of the students. As an alternative, we propose the teaching of poses in Augmented Reality (AR) with a virtual teacher and 3D avatars. The focus of our project was on dancing, but it can easily be adapted for other activities.

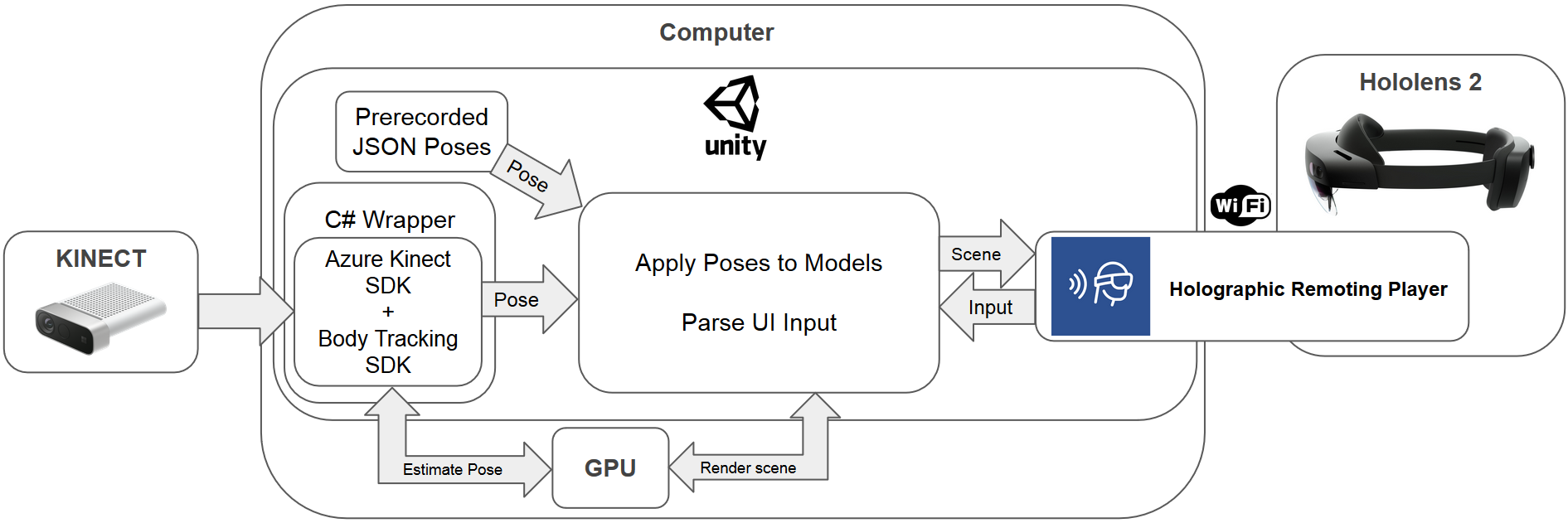

Our main method of pose estimation uses the Azure Kinect with the Sensor SDK and the Body Tracking SDK, and we use the official C# wrapper to connect it to Unity. We rely on Microsoft's Holographic Remoting Player to display content on the HoloLens 2, while the application itself runs and is rendered on a separate computer.

The user can follow a guided course, which consists of repeating basic steps to perfect them and then testing their skills on choreographies. Alternatively, they can use freeplay mode to try to beat their previous high score.

There are many visualization options, so users can adapt the environment to their own needs and accelerate the learning process. These include creating multiple instances of their own avatar and of the teacher's avatar, mirroring them, showing a graph of the score, and displaying a live RGB feed from the Kinect, among others. Changes can be made either in the main menu or, for smaller changes, through the hand menu.
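Mirroring an avatar can be thought of as reflecting every joint across a vertical plane and swapping the left and right body parts, so that a reflected left arm becomes the mirrored figure's right arm. The Python sketch below only illustrates that idea under stated assumptions: a pose is a dict from joint name to an `(x, y, z)` tuple, the mirror plane is `x = 0`, and joint names use hypothetical `left_`/`right_` prefixes. The project performs mirroring inside Unity and may do it differently.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]
Pose = Dict[str, Vec3]  # assumption: joint name -> (x, y, z) position


def swap_side(name: str) -> str:
    """Swap the hypothetical 'left_'/'right_' prefixes of a joint name."""
    if name.startswith("left_"):
        return "right_" + name[len("left_"):]
    if name.startswith("right_"):
        return "left_" + name[len("right_"):]
    return name  # central joints (e.g. 'head') keep their name


def mirror_pose(pose: Pose) -> Pose:
    """Reflect each joint across the x = 0 plane and swap left/right labels."""
    return {swap_side(name): (-x, y, z) for name, (x, y, z) in pose.items()}
```

Mirroring twice returns the original pose, which is a quick sanity check for any mirroring implementation.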

Our scoring mechanism is explained in our report.

There are 4 avatar options to choose from in our project:

- Cube avatar (position and orientation of all estimated joints)

- Stick figure avatar (body parts used for score calculation, changing color depending on correctness)

- Robot avatar (rigged model)

- SMPL avatar models (parametrized rigged model)
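The actual scoring mechanism is described in the report and is not reproduced here. Purely as an illustration of how a stick figure could color body parts by correctness, the sketch below assumes a hypothetical per-limb measure: the cosine similarity between the student's and the teacher's limb direction vectors, mapped linearly from red (opposite directions) to green (same direction). The limb definitions, joint names, and color mapping are all assumptions, not the project's method.

```python
import math
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical limbs as (start joint, end joint); not the project's definitions.
LIMBS = {
    "left_upper_arm": ("left_shoulder", "left_elbow"),
    "left_forearm": ("left_elbow", "left_hand"),
}


def direction(a: Vec3, b: Vec3) -> Vec3:
    """Vector pointing from joint a to joint b."""
    return (b[0] - a[0], b[1] - a[1], b[2] - a[2])


def cosine_similarity(u: Vec3, v: Vec3) -> float:
    """Cosine of the angle between u and v, in [-1, 1]."""
    norm = math.sqrt(sum(c * c for c in u)) * math.sqrt(sum(c * c for c in v))
    if norm == 0.0:
        return 0.0  # zero-length limb: treat as uninformative
    return sum(a * b for a, b in zip(u, v)) / norm


def limb_similarities(student: Dict[str, Vec3], teacher: Dict[str, Vec3]) -> Dict[str, float]:
    """Per-limb similarity; comparing directions ignores body size and position."""
    return {
        limb: cosine_similarity(direction(student[s], student[e]),
                                direction(teacher[s], teacher[e]))
        for limb, (s, e) in LIMBS.items()
    }


def similarity_to_rgb(sim: float) -> Tuple[float, float, float]:
    """Map -1 to red (1, 0, 0) and +1 to green (0, 1, 0), linearly."""
    t = (max(-1.0, min(1.0, sim)) + 1.0) / 2.0
    return (1.0 - t, t, 0.0)
```

Comparing limb directions rather than raw joint positions means a taller student standing elsewhere in the room can still match the teacher exactly.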

As of January 24, 2021, this repository has been tested under the following environment:

- Windows 10 Education (10.0.19042 Build 19042)

- All tools required to develop on the HoloLens

- Unity 2019.4.13f1 (Unity 2019 LTS version)

A dedicated CUDA-compatible graphics card is necessary: an NVIDIA GeForce GTX 1070 or better. For more information, consult the official Body Tracking SDK hardware requirements. We used a GTX 1070 for development and testing.

- Clone this repository.

- Open the `unity` folder as a Unity project, with `Universal Windows Platform` as the build platform. It might take a while to fetch all packages.

- Set up the Azure Kinect libraries (same as for the Sample Unity Body Tracking Application):

  - Get the NuGet packages of the libraries:

    - Open the Visual Studio Solution (.sln) associated with this project. You can create one by opening a C# file in the Unity Editor.

    - In Visual Studio, open Tools -> NuGet Package Manager -> Package Manager Console.

    - Execute in the console: `Install-Package Microsoft.Azure.Kinect.BodyTracking -Version 1.0.1`

  - Move the libraries to the correct folders:

    - Execute the file `unity/MoveLibraryFile.bat`. You should now have library files in `unity/` and in the newly created `unity/Assets/Plugins`.


- Open `Assets/PoseteacherScene` in the Unity Editor.

- When prompted to import TextMesh Pro, select `Import TMP Essentials`. You will need to reopen the scene to fix visual glitches.

- (Optional) Connect to the HoloLens with Holographic Remoting using the `Windows XR Plugin Remoting` in Unity. Otherwise the scene will only play in the editor.

- (Optional) In the `Main` object in `PoseteacherScene`, set `Self Pose Input Source` to `KINECT`. Otherwise the input of the user is simulated from a file.

- Click Play inside the Unity Editor.

- Most of the application logic is inside the `PoseteacherMain.cs` script, which is attached to the `Main` game object.

- If updating from an old version of the project, be sure to delete the `Library` folder generated by Unity, so that packages are handled correctly.

- The project uses MRTK 2.5.1 from the Unity Package Manager, not imported into the `Assets` folder:

  - MRTK assets have to be searched for inside `Packages` in the editor.

  - The only MRTK files in `Assets` should be in the folders `MixedRealityToolkit.Generated` and `MRTK/Shaders`.

    - The only exception is `Samples/Mixed Reality Toolkit Examples`, if MRTK examples are imported.

  - If there are other MRTK folders, they are from an old version of the project (or were imported manually) and should be removed, as when updating. Remember to delete the `Library` folder after doing this.


- We use the newer XR SDK pipeline instead of the Legacy XR pipeline (which is deprecated).
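The MRTK folder rules above can be checked with a small script. The following Python sketch is a hypothetical helper, not part of the project: it walks an `Assets` folder and reports directories that look MRTK-related but are not among the allowed ones. What counts as "MRTK-related" is an assumption (a folder name containing `MixedRealityToolkit` or `MRTK`, or anything inside `MRTK/` other than `Shaders`). It only reports folders; removing them, and then deleting the `Library` folder, is left to you.

```python
import os
from pathlib import Path
from typing import List

# MRTK-related folders allowed inside Assets (paths relative to Assets).
ALLOWED = {
    "MixedRealityToolkit.Generated",
    "MRTK/Shaders",
    "Samples/Mixed Reality Toolkit Examples",
}
# Parents of allowed paths ("MRTK", "Samples"): walked into, never reported.
ALLOWED_PARENTS = {p.rsplit("/", 1)[0] for p in ALLOWED if "/" in p}


def looks_like_mrtk(rel: str) -> bool:
    """Heuristic (assumption): MRTK-looking name, or anything inside MRTK/."""
    name = rel.rsplit("/", 1)[-1]
    return "MixedRealityToolkit" in name or "MRTK" in name or rel.startswith("MRTK/")


def find_stale_mrtk_folders(assets_dir: str) -> List[str]:
    """List MRTK-looking folders under assets_dir that are not in ALLOWED."""
    root = Path(assets_dir)
    stale: List[str] = []
    for dirpath, dirnames, _ in os.walk(root):
        parent = Path(dirpath).relative_to(root).as_posix()
        keep = []
        for name in sorted(dirnames):
            rel = name if parent == "." else f"{parent}/{name}"
            if rel in ALLOWED:
                continue  # allowed folder: skip it and its contents
            if rel in ALLOWED_PARENTS:
                keep.append(name)  # e.g. "MRTK": inspect its children
            elif looks_like_mrtk(rel):
                stale.append(rel)  # report once, do not descend further
            else:
                keep.append(name)
        dirnames[:] = keep  # os.walk only descends into the names left here
    return stale
```

For example, `find_stale_mrtk_folders("unity/Assets")` returns an empty list for a clean checkout under these assumptions; pruning `dirnames` in place keeps the walk from descending into allowed or already-reported folders.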

Use the UI to navigate in the application. This can also be done in the editor; consult the MRTK In-Editor Input Simulation page to see how.

For debugging we added the following keyboard shortcuts:

- H: toggle the hand menu in training/choreography/recording (for testing in the editor)

- O: toggle pausing of teacher avatar updates

- P: toggle pausing of self avatar updates

- U: toggle forced similarity updates (even if teacher updates are paused)
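The shortcuts above are independent on/off toggles, and U only matters while O has paused the teacher. A hypothetical Python model of that state (the attribute names and the exact update rule are assumptions; the real handling lives in the Unity scripts) could look like this:

```python
from dataclasses import dataclass

# Key bindings from the shortcut list; attribute names are hypothetical.
KEYMAP = {
    "h": "hand_menu_visible",
    "o": "teacher_paused",
    "p": "self_paused",
    "u": "force_similarity",
}


@dataclass
class DebugFlags:
    hand_menu_visible: bool = False
    teacher_paused: bool = False
    self_paused: bool = False
    force_similarity: bool = False

    def on_key(self, key: str) -> None:
        """Flip the flag bound to key; unbound keys are ignored."""
        attr = KEYMAP.get(key.lower())
        if attr is not None:
            setattr(self, attr, not getattr(self, attr))

    def should_update_similarity(self) -> bool:
        """Assumed rule: recompute while the teacher updates, or when forced with U."""
        return not self.teacher_paused or self.force_similarity
```

Pressing O stops similarity updates under this assumed rule, and pressing U afterwards re-enables them while the teacher stays paused.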

All our code and modifications are licensed under the attached MIT License.

We use some code and assets from:

- This fork of the azure-kinect-dk-unity repository (MIT License).

- NativeWebSocket (Apache-2.0 License).

- SMPL (Creative Commons Attribution 4.0 International License).

- Space Robot Kyle (Unity Extension Asset License).

- Lightweight human pose estimation (Apache-2.0 License).

- Official Sample Unity Body Tracking Application (MIT License)

We show an example of obtaining the pose over a WebSocket, combined with the Lightweight human pose estimation repository. If you do not have an Azure Kinect or a GPU, you can use this instead, but it will be very slow.

Clone the Lightweight human pose estimation repository and copy `alt_pose_estimation/demo_ws.py` from this repository into it.

Install the required packages as described in that repository and run `demo_ws.py`.

Beware that PyTorch still has issues with Python 3.8, so we recommend using Python 3.7.

`demo_ws.py` should now be sending pose data over a local WebSocket, which the application uses if the `SelfPoseInputSource` value is set to `WEBSOCKET` for the `Main` object in the Unity Editor.
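The message format that `demo_ws.py` sends and `PoseInputGetter.cs` expects is defined in those files and is not documented here. As a hypothetical sketch of the idea, the following encodes one frame of keypoints as a JSON text message and decodes it again; the schema (`frame`, `keypoints`, and the `Keypoint` fields) is an assumption. Only the standard library is used, so the actual WebSocket transport is left out.

```python
import json
from dataclasses import asdict, dataclass
from typing import List, Tuple


@dataclass
class Keypoint:
    # Hypothetical fields; the real message layout is defined by demo_ws.py.
    name: str
    x: float
    y: float
    z: float
    confidence: float


def encode_frame(frame_id: int, keypoints: List[Keypoint]) -> str:
    """Serialize one pose frame into a JSON text message."""
    return json.dumps({"frame": frame_id, "keypoints": [asdict(k) for k in keypoints]})


def decode_frame(message: str) -> Tuple[int, List[Keypoint]]:
    """Parse a message produced by encode_frame back into keypoints."""
    data = json.loads(message)
    return data["frame"], [Keypoint(**k) for k in data["keypoints"]]
```

With a WebSocket library on each side (the project credits NativeWebSocket), the sender would transmit one `encode_frame(...)` string per processed camera frame. A textual format such as JSON keeps messages human-readable and straightforward to parse in C#.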

Depending on the version of the project, some changes might need to be made in `PoseInputGetter.cs` to set up the WebSocket correctly.