This repository contains the official implementation of our ICCV 2023 paper.

Out-of-distribution (OOD) detection is implemented for two depth estimation models, Monodepth2 and MonoViT.

Since the models require different PyTorch versions and other package versions, we provide `*_environment.yml` files listing the required packages.

These files can be used to create the required Anaconda environments.

The file for Monodepth2 is named `monodepth2_environment.yml`; the file for MonoViT is named `monovit_environment.yml`.

The matching environment must be activated to run the code of the respective model.
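As a minimal sketch, the two environment files can be turned into `conda env create -f <file>` calls from Python. The `ENV_FILES` mapping and the helper names are hypothetical and not part of the repository. The name of the environment that conda creates comes from the `name:` field inside each `.yml` file, which is not reproduced here.

```python
import subprocess

# Environment files named in this README, keyed by a hypothetical model name.
ENV_FILES = {
    "monodepth2": "monodepth2_environment.yml",
    "monovit": "monovit_environment.yml",
}


def conda_create_command(model: str) -> list:
    """Return the `conda env create` command for the given model's environment file."""
    if model not in ENV_FILES:
        raise ValueError(f"unknown model {model!r}; expected one of {sorted(ENV_FILES)}")
    return ["conda", "env", "create", "-f", ENV_FILES[model]]


def create_env(model: str, dry_run: bool = True) -> list:
    """Build the command and, unless dry_run is set, run it (requires conda on PATH)."""
    cmd = conda_create_command(model)
    if not dry_run:
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    for name in ENV_FILES:
        print(" ".join(create_env(name)))
```

Calling `create_env("monodepth2", dry_run=False)` actually creates the environment. It must still be activated with `conda activate` before that model's code is run.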

Prepare Monodepth2:

1. Download the monodepth2 repository: Monodepth2 and place it in the folder `monodepth2_ood`.

2. Replace `from kitti_utils import generate_depth_map` with `from ..kitti_utils import generate_depth_map` in line 14 of the file `monodepth2/kitti_dataset.py`.

3. Replace `from layers import *` with `from ..layers import *` in line 14 of the file `monodepth2/networks/depth_decoder.py`.

4. Replace `class MonodepthOptions:` with `class MonodepthOptions(object):` in line 15 of the file `monodepth2/options.py`.

5. Add `import sys` and `sys.path.append("monodepth2")` to the file `monodepth2/trainer.py` before `from utils import *`.

6. In line 86 of the file `kitti_utils.py`, replace `np.int` with `np.int32`.
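Steps 2 to 6 can also be applied with a small script. This is a sketch under stated assumptions, not part of the repository:

- The helper names are hypothetical.
- It assumes `kitti_utils.py` is the file inside `monodepth2/`.
- It expects the path of `monodepth2_ood` as its root.

A patch returns `False` when its target line no longer contains the original text. That covers both an already patched file and an upstream version whose line numbers differ, so a `False` should be checked by hand.

```python
import re
from pathlib import Path

# (file relative to monodepth2_ood, 1-based line number, regex, replacement) for steps 2-4 and 6.
# Assumption: kitti_utils.py is the one inside the monodepth2/ folder.
LINE_PATCHES = [
    ("monodepth2/kitti_dataset.py", 14,
     r"from kitti_utils import generate_depth_map",
     "from ..kitti_utils import generate_depth_map"),
    ("monodepth2/networks/depth_decoder.py", 14,
     r"from layers import \*", "from ..layers import *"),
    ("monodepth2/options.py", 15,
     r"class MonodepthOptions:", "class MonodepthOptions(object):"),
    # \b stops an already patched np.int32 from matching again.
    ("monodepth2/kitti_utils.py", 86, r"np\.int\b", "np.int32"),
]


def patch_line(path: Path, lineno: int, pattern: str, repl: str) -> bool:
    """Apply a regex substitution to one line; return True if the file changed."""
    lines = path.read_text().splitlines(keepends=True)
    idx = lineno - 1
    new_line = re.sub(pattern, repl, lines[idx])
    if new_line == lines[idx]:
        return False  # already patched, or the line is not what the README expects
    lines[idx] = new_line
    path.write_text("".join(lines))
    return True


def patch_trainer(path: Path) -> bool:
    """Step 5: insert the sys.path lines before `from utils import *`."""
    text = path.read_text()
    if 'sys.path.append("monodepth2")' in text:
        return False
    lines = text.splitlines(keepends=True)
    for i, line in enumerate(lines):
        if line.startswith("from utils import"):
            lines[i:i] = ["import sys\n", 'sys.path.append("monodepth2")\n']
            path.write_text("".join(lines))
            return True
    raise ValueError(f"no 'from utils import' line found in {path}")


def apply_all(root: Path) -> dict:
    """Apply steps 2-6 under root (the monodepth2_ood folder); map each file to whether it changed."""
    results = {}
    for rel, lineno, pattern, repl in LINE_PATCHES:
        results[rel] = patch_line(root / rel, lineno, pattern, repl)
    results["monodepth2/trainer.py"] = patch_trainer(root / "monodepth2" / "trainer.py")
    return results
```

For example, `apply_all(Path("monodepth2_ood"))` after step 1 has placed the repository.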

Prepare MonoViT:

7. Download the monovit repository: MonoViT and place it in the folder monovit_ood.

8. Download the mpvit-small model as specified by the repository to monovit/ckpt/.

9. In lines 785 and 815 of the file `mpvit.py`, change `'./ckpt/mpvit_xsmall.pth'` to `'./monovit/ckpt/mpvit_xsmall.pth'`.

10. In both directories (`monodepth2_ood`, `monovit_ood`), create a file `networks/bayescap.py` and place the model architecture from BayesCap there.
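Step 9 can be scripted in the same way. In this sketch the helper name is hypothetical, and the file location in the usage line is an assumption: the README only says `mpvit.py`.

```python
from pathlib import Path

OLD_PATH = "./ckpt/mpvit_xsmall.pth"
NEW_PATH = "./monovit/ckpt/mpvit_xsmall.pth"


def patch_ckpt_paths(mpvit_file: Path, line_numbers=(785, 815)) -> int:
    """Rewrite the checkpoint path on the given 1-based lines; return how many lines changed."""
    lines = mpvit_file.read_text().splitlines(keepends=True)
    changed = 0
    for n in line_numbers:
        line = lines[n - 1]
        # NEW_PATH does not contain OLD_PATH as a substring, so a second run changes nothing.
        if OLD_PATH in line:
            lines[n - 1] = line.replace(OLD_PATH, NEW_PATH)
            changed += 1
    if changed:
        mpvit_file.write_text("".join(lines))
    return changed
```

For example, `patch_ckpt_paths(Path("monovit_ood/monovit/networks/mpvit.py"))`, assuming the file sits in the repository's `networks/` folder. A return value below 2 on the first run means the line numbers did not match.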

We conduct experiments with models trained on NYU Depth V2 and KITTI as in-distribution data.

Download NYU Depth V2 as provided by FastDepth into the folder nyu_data. Download KITTI according to the instructions from mono-uncertainty into the folder kitti_data.

For NYU Depth V2, Places365 is used as OOD data. Download the files to the folder places365.

For KITTI, Places365, India Driving and Virtual KITTI are used as OOD data.

From India Driving, download the IDD Segmentation data to the folder idd.

From Virtual KITTI, download the RGB and depth data to the folder vkitti_data.
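Before evaluating, it can help to check that the dataset folders are in place. The evaluation commands use paths such as `./../kitti_data` from inside `monodepth2_ood` and `monovit_ood`. This sketch therefore assumes all folders sit in the repository root. The grouping and the helper name are hypothetical.

```python
from pathlib import Path

# Dataset folders named in this README, grouped by in-distribution dataset.
REQUIRED_FOLDERS = {
    "nyu": ["nyu_data", "places365"],
    "kitti": ["kitti_data", "places365", "idd", "vkitti_data"],
}


def missing_folders(repo_root: Path, in_distribution: str) -> list:
    """Return the dataset folders for the given setup that do not exist under repo_root."""
    return [name for name in REQUIRED_FOLDERS[in_distribution]
            if not (repo_root / name).is_dir()]


if __name__ == "__main__":
    root = Path(".")  # assumed: the repository root, next to monodepth2_ood/ and monovit_ood/
    for setup in REQUIRED_FOLDERS:
        print(setup, "missing:", missing_folders(root, setup) or "none")
```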

We conduct our evaluations on NYU Depth V2 and KITTI.

On NYU Depth V2, Monodepth2 is trained in a supervised manner.

The Post, Log and Drop models for NYU Depth V2 can be obtained from GruMoDepth.

On KITTI, both Monodepth2 and MonoViT are trained.

The Monodepth2 Post, Log and Drop models for KITTI can be obtained from mono-uncertainty.

All other models are provided here: models.

Save the respective models to monodepth2_ood/weights and monovit_ood/weights.

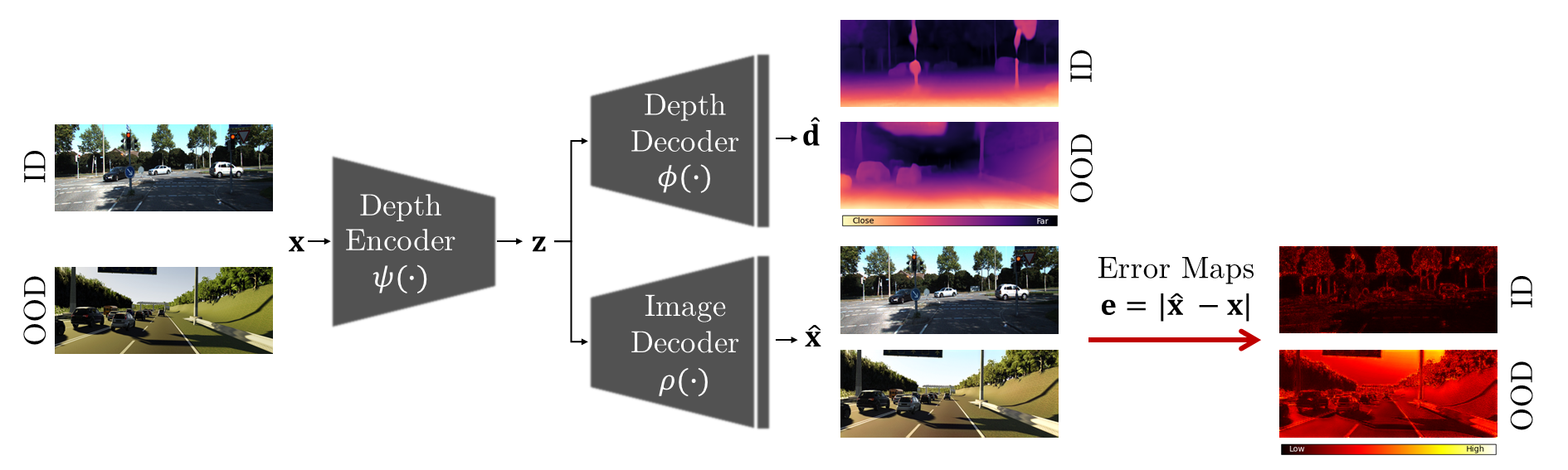

The following sections provide commands to evaluate the image decoder for OOD detection on different datasets.

In addition to our method, the baselines post-processing (--post_process), log-likelihood maximization (--log), BayesCap (--bayescap) and MC dropout (--dropout) can be evaluated by setting the respective argument.

The path passed to --load_weights_folder must then point to the corresponding model.
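The KITTI evaluation commands below differ only in three things: the weights folder, the OOD folder and dataset name, and the method flag. They can therefore be generated. This is hypothetical helper code, not part of the repository. It assumes a baseline flag is passed in place of `--autoencoder`, together with the matching weights folder. The text above implies this but does not spell it out.

```python
import shlex

# OOD dataset name -> data folder, as used in the commands of this README.
OOD_FOLDERS = {"virtual_kitti": "vkitti_data", "india_driving": "idd", "places365": "places365"}

# Method -> flag. "ours" is the image decoder; the rest are the baselines listed above.
METHOD_FLAGS = {
    "ours": "--autoencoder",
    "post_process": "--post_process",
    "log": "--log",
    "bayescap": "--bayescap",
    "dropout": "--dropout",
}


def kitti_eval_command(weights: str, ood_dataset: str, method: str = "ours") -> list:
    """Build the argument list for evaluate_ood_kitti.py with KITTI as in-distribution data."""
    return [
        "python3", "evaluate_ood_kitti.py",
        "--eval_split", "eigen_benchmark",
        "--load_weights_folder", weights,
        "--data_path", "./../kitti_data",
        "--ood_data", f"./../{OOD_FOLDERS[ood_dataset]}",
        "--ood_dataset", ood_dataset,
        METHOD_FLAGS[method],
    ]


if __name__ == "__main__":
    cmd = kitti_eval_command("weights/M/Monodepth2-AE/models/weights_19", "virtual_kitti")
    print(shlex.join(cmd))
```

With the default method, the printed line is the first KITTI command listed below. `subprocess.run(cmd, check=True)` would execute it from inside `monodepth2_ood` or `monovit_ood`.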

Monodepth2:

cd monodepth2_ood

conda activate monodepth2_env

Evaluate the image decoder with KITTI as in-distribution and Virtual KITTI as OOD:

python3 evaluate_ood_kitti.py --eval_split eigen_benchmark --load_weights_folder weights/M/Monodepth2-AE/models/weights_19 --data_path ./../kitti_data --ood_data ./../vkitti_data --ood_dataset virtual_kitti --autoencoder

Evaluate the image decoder with KITTI as in-distribution and India Driving as OOD:

python3 evaluate_ood_kitti.py --eval_split eigen_benchmark --load_weights_folder weights/M/Monodepth2-AE/models/weights_19 --data_path ./../kitti_data --ood_data ./../idd --ood_dataset india_driving --autoencoder

Evaluate the image decoder with KITTI as in-distribution and Places365 as OOD:

python3 evaluate_ood_kitti.py --eval_split eigen_benchmark --load_weights_folder weights/M/Monodepth2-AE/models/weights_19 --data_path ./../kitti_data --ood_data ./../places365 --ood_dataset places365 --autoencoder

Evaluate the image decoder with NYU as in-distribution and Places365 as OOD:

python3 evaluate_ood_nyu.py --load_weights_folder weights/NYU/Monodepth2-AE/models/weights_19/ --data_path ./../nyu_data --ood_data ./../places365 --ood_dataset places365 --autoencoder

- `--load_weights_folder`: path to the model weights to be loaded.
- `--data_path`: path to the in-distribution data.
- `--ood_data`: path to the OOD data.
- `--ood_dataset`: selects the OOD test set.
- `--autoencoder`: setting this argument selects the image decoder for evaluation.
- `--output_dir`: folder where the output will be saved.
- `--plot_results`: setting this argument saves visual results to the selected output directory.
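The options above can be mirrored with `argparse` to show how they combine. This is a hypothetical parser, not the repository's own option handling, which may use different defaults. Making the method flags mutually exclusive is an assumption of this sketch.

```python
import argparse

OOD_DATASETS = ["virtual_kitti", "india_driving", "places365"]


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser mirroring the documented options; not the repository's own."""
    p = argparse.ArgumentParser(description="OOD evaluation options (sketch)")
    p.add_argument("--load_weights_folder", required=True, help="path to the model weights to be loaded")
    p.add_argument("--data_path", help="path to the in-distribution data")
    p.add_argument("--ood_data", help="path to the OOD data")
    p.add_argument("--ood_dataset", choices=OOD_DATASETS, help="OOD test set")
    p.add_argument("--eval_split", help="evaluation split, e.g. eigen_benchmark for KITTI")
    p.add_argument("--output_dir", help="folder where the output is saved")
    p.add_argument("--plot_results", action="store_true", help="save visual results to --output_dir")
    # Assumption for this sketch: exactly one method (image decoder or a baseline) per run.
    method = p.add_mutually_exclusive_group()
    for flag in ("--autoencoder", "--post_process", "--log", "--bayescap", "--dropout"):
        method.add_argument(flag, action="store_true")
    return p
```

Parsing the NYU command's arguments with this parser sets `autoencoder` to `True` and leaves `output_dir` unset.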

MonoViT:

cd monovit_ood

conda activate monovit_env

Evaluate the image decoder with KITTI as in-distribution and Virtual KITTI as OOD:

python3 evaluate_ood_kitti.py --eval_split eigen_benchmark --load_weights_folder weights/M/MonoViT-AE/models/weights_19 --data_path ./../kitti_data --ood_data ./../vkitti_data --ood_dataset virtual_kitti --autoencoder

Evaluate the image decoder with KITTI as in-distribution and India Driving as OOD:

python3 evaluate_ood_kitti.py --eval_split eigen_benchmark --load_weights_folder weights/M/MonoViT-AE/models/weights_19 --data_path ./../kitti_data --ood_data ./../idd --ood_dataset india_driving --autoencoder

Evaluate the image decoder with KITTI as in-distribution and Places365 as OOD:

python3 evaluate_ood_kitti.py --eval_split eigen_benchmark --load_weights_folder weights/M/MonoViT-AE/models/weights_19 --data_path ./../kitti_data --ood_data ./../places365 --ood_dataset places365 --autoencoder

Please use the following citation when referencing our work:

Out-of-Distribution Detection for Monocular Depth Estimation. Julia Hornauer, Adrian Holzbock, and Vasileios Belagiannis. [paper]

    @InProceedings{Hornauer_2023_ICCV,
        author    = {Hornauer, Julia and Holzbock, Adrian and Belagiannis, Vasileios},
        title     = {Out-of-Distribution Detection for Monocular Depth Estimation},
        booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
        month     = {October},
        year      = {2023},
        pages     = {1911-1921}
    }

We used and modified code from the open-source projects Monodepth2, MonoViT, mono-uncertainty and BayesCap. We would like to thank the authors for making their code publicly available.