Joshua Cook 4/7/2020

#TidyTuesday is a tradition in R where every Tuesday, we practice our data analysis skills on a new “toy” data set.

See all of my notebooks and R scripts in the tuesdays directory. (I reorganized the repository when I restarted this work in 2023, so some links may be broken.)

My goal in 2023 is to practice building data visualizations optimized for accurate and easy interpretation of the data. This was inspired by reading Edward Tufte's The Visual Display of Quantitative Information, a book I should have studied years ago.

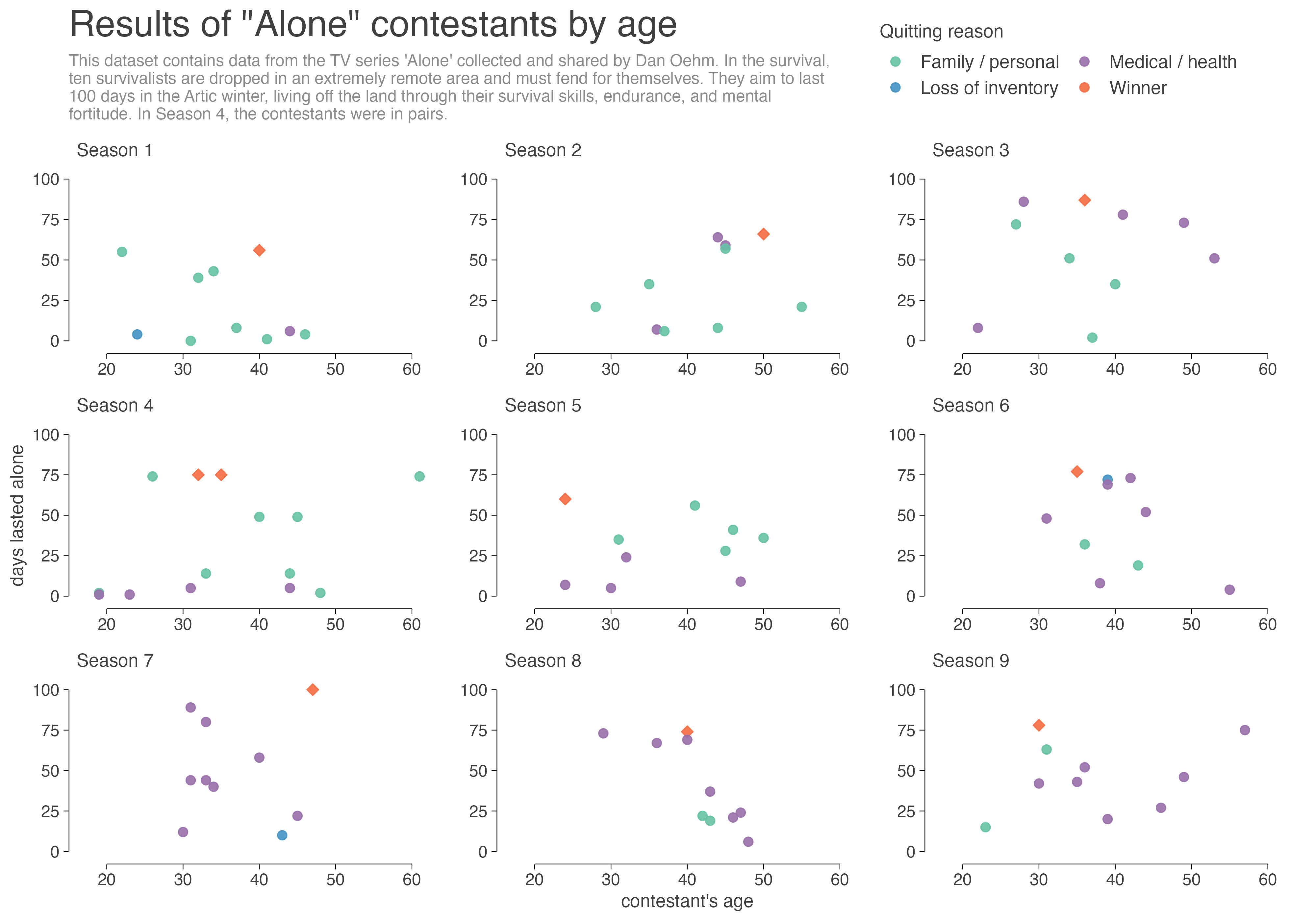

January 24, 2023 - Alone

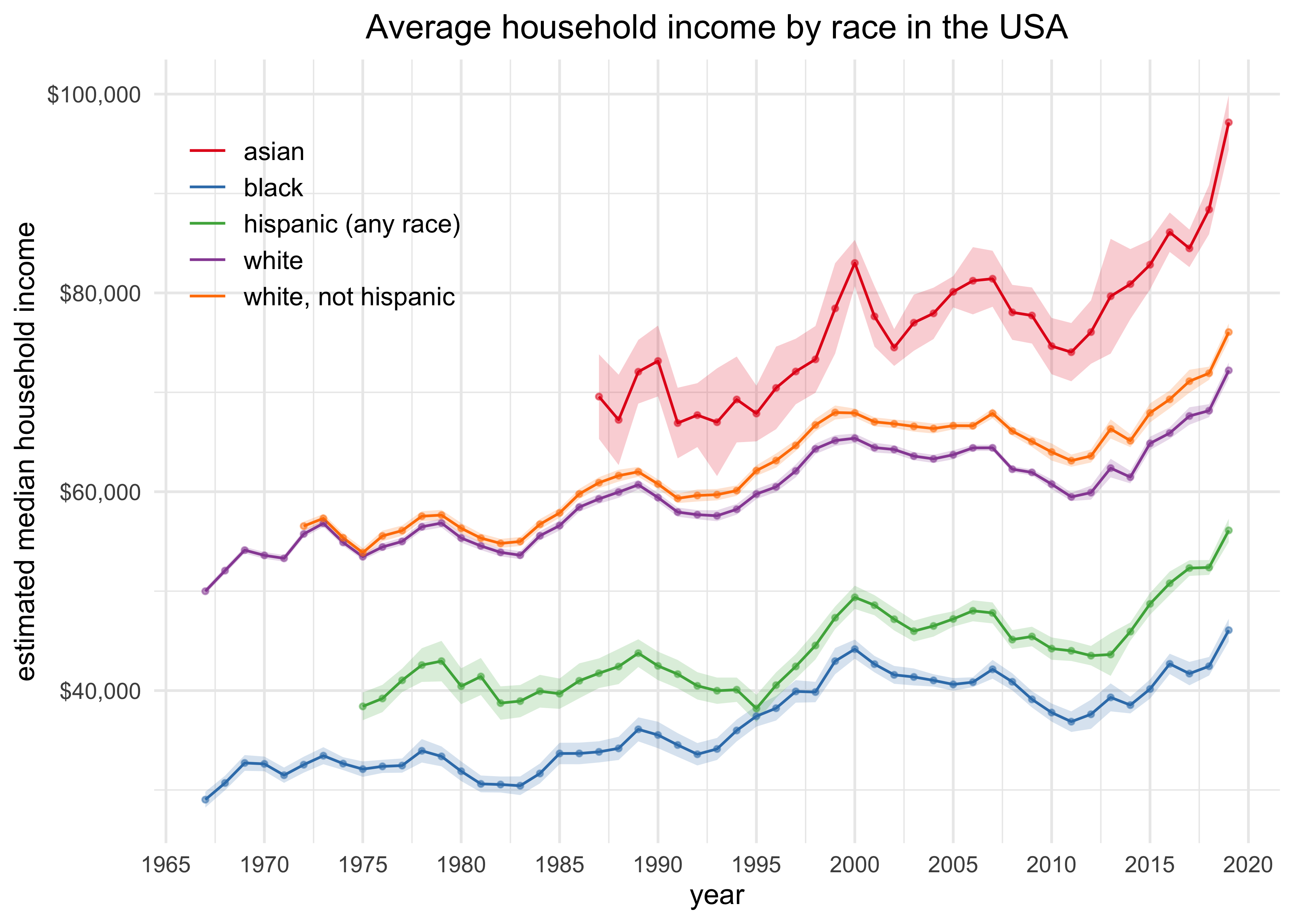

December 29, 2020 - USA Household Income

For the first week of the year, we were told to bring our favorite data from 2020. I decided to prepare my own data on US household income from the US Census Bureau. I processed the data in “2020-12-29_usa-household-income.R”. For the analysis, I only conducted a simple exploratory data analysis (EDA).

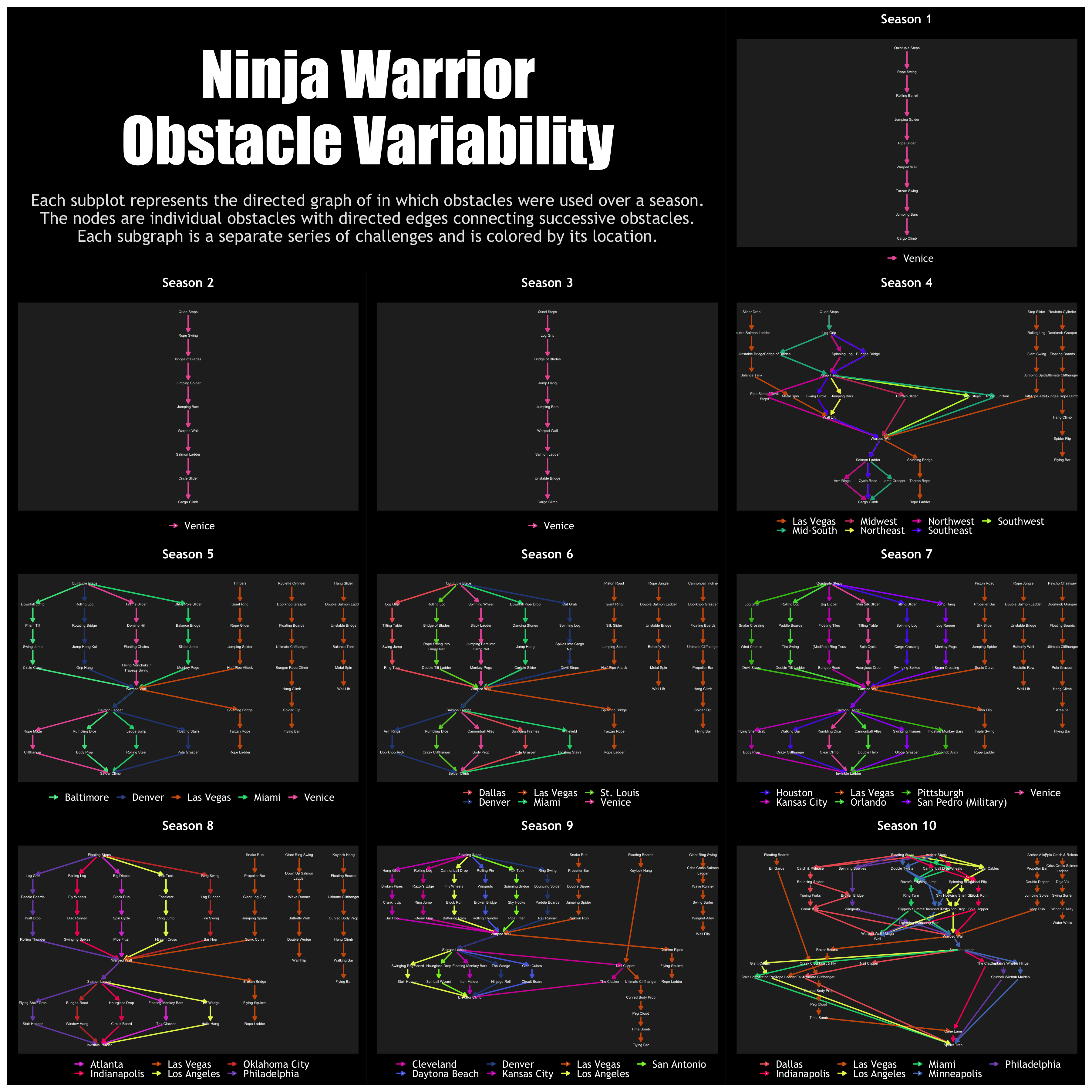

December 14, 2020 - Ninja Warrior

Today’s data set was very challenging because of the limited amount of information. I’m quite pleased with my final product and think it is both visually appealing and clever.

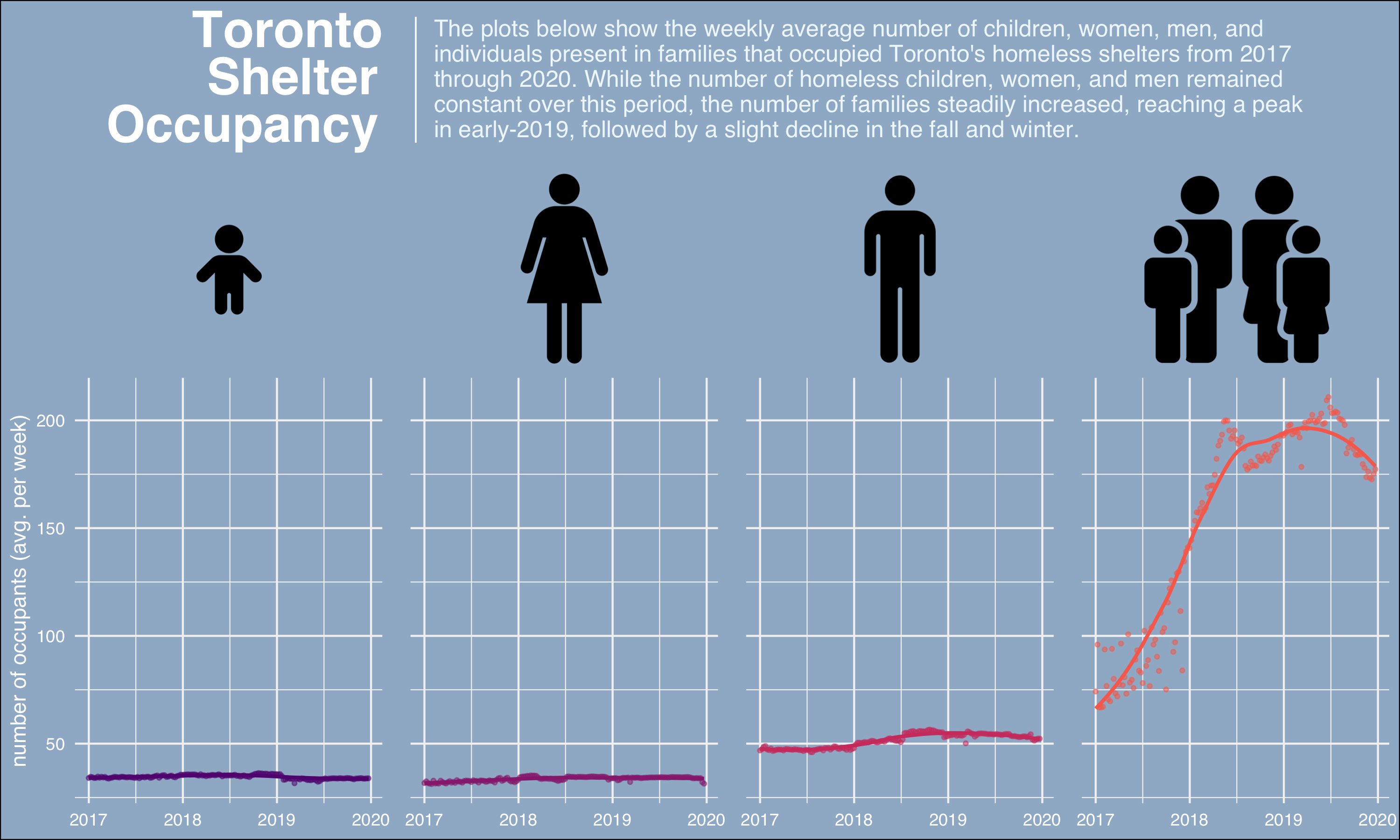

December 1, 2020 - Toronto Shelters

Today I focused on style over substance (so please excuse the relative lack of creativity in the data presented in the plots), particularly by purposefully incorporating images from The Noun Project. Initially, I tried to use the ‘ggimage’ package to insert the images along the tops of each panel (using ‘patchwork’ to piece together two plots), but I eventually used ‘ggtext’ and inserted the images in the strip.text of the panels.

October 13, 2020 - Datasaurus Dozen

I used a simplified version of the algorithm described in Autodesk's original Datasaurus Dozen paper to animate the transition from the dinosaur formation to the slant-down formation. At each step, the summary statistics remain unchanged to two decimal places (a precision of 0.01 units).
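The simplified algorithm itself is not described here, so the following is only a minimal sketch of the general idea from Matejka and Fitzmaurice's "Same Stats, Different Graphs" paper, written in Python rather than the R used in the notebooks. It nudges one point at a time and keeps a move only if the means, standard deviations, and correlation are unchanged at two decimal places and the point does not move away from the target shape. The function names, the step size, and the target line y = 100 - x are hypothetical; the paper's method also uses simulated annealing to occasionally accept moves away from the target, which this greedy version omits.

```python
import random
import statistics


def summary_stats(xs, ys, digits=2):
    """Mean and SD of x and y plus Pearson's r, each rounded to `digits` places."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    sx, sy = statistics.stdev(xs), statistics.stdev(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (len(xs) - 1)
    r = cov / (sx * sy)
    return tuple(round(v, digits) for v in (mx, my, sx, sy, r))


def perturb_step(xs, ys, target_stats, target_dist, rng, scale=0.1):
    """Nudge one random point (in place); keep the move only if the point does
    not end up farther from the target shape and the rounded stats still match."""
    i = rng.randrange(len(xs))
    new_x = xs[i] + rng.gauss(0, scale)
    new_y = ys[i] + rng.gauss(0, scale)
    # Greedy acceptance (no annealing): reject moves away from the target shape.
    if target_dist(new_x, new_y) > target_dist(xs[i], ys[i]):
        return False
    cand_x, cand_y = xs.copy(), ys.copy()
    cand_x[i], cand_y[i] = new_x, new_y
    # Reject any move that changes a statistic at two decimal places.
    if summary_stats(cand_x, cand_y) != target_stats:
        return False
    xs[i], ys[i] = new_x, new_y
    return True


def dist_to_slant_down(x, y):
    """Hypothetical target shape: distance from (x, y) to the line y = 100 - x."""
    return abs(x + y - 100) / 2 ** 0.5


if __name__ == "__main__":
    rng = random.Random(42)
    xs = [rng.uniform(20, 80) for _ in range(50)]
    ys = [rng.uniform(20, 80) for _ in range(50)]
    start = summary_stats(xs, ys)
    accepted = sum(
        perturb_step(xs, ys, start, dist_to_slant_down, rng) for _ in range(5000)
    )
    print(f"accepted {accepted} moves; stats still {summary_stats(xs, ys)}")
```

Because every accepted move is checked against the starting statistics, the rounded summary never drifts, while the points creep toward the target line. Saving the coordinates every few hundred steps would give the frames of an animation.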

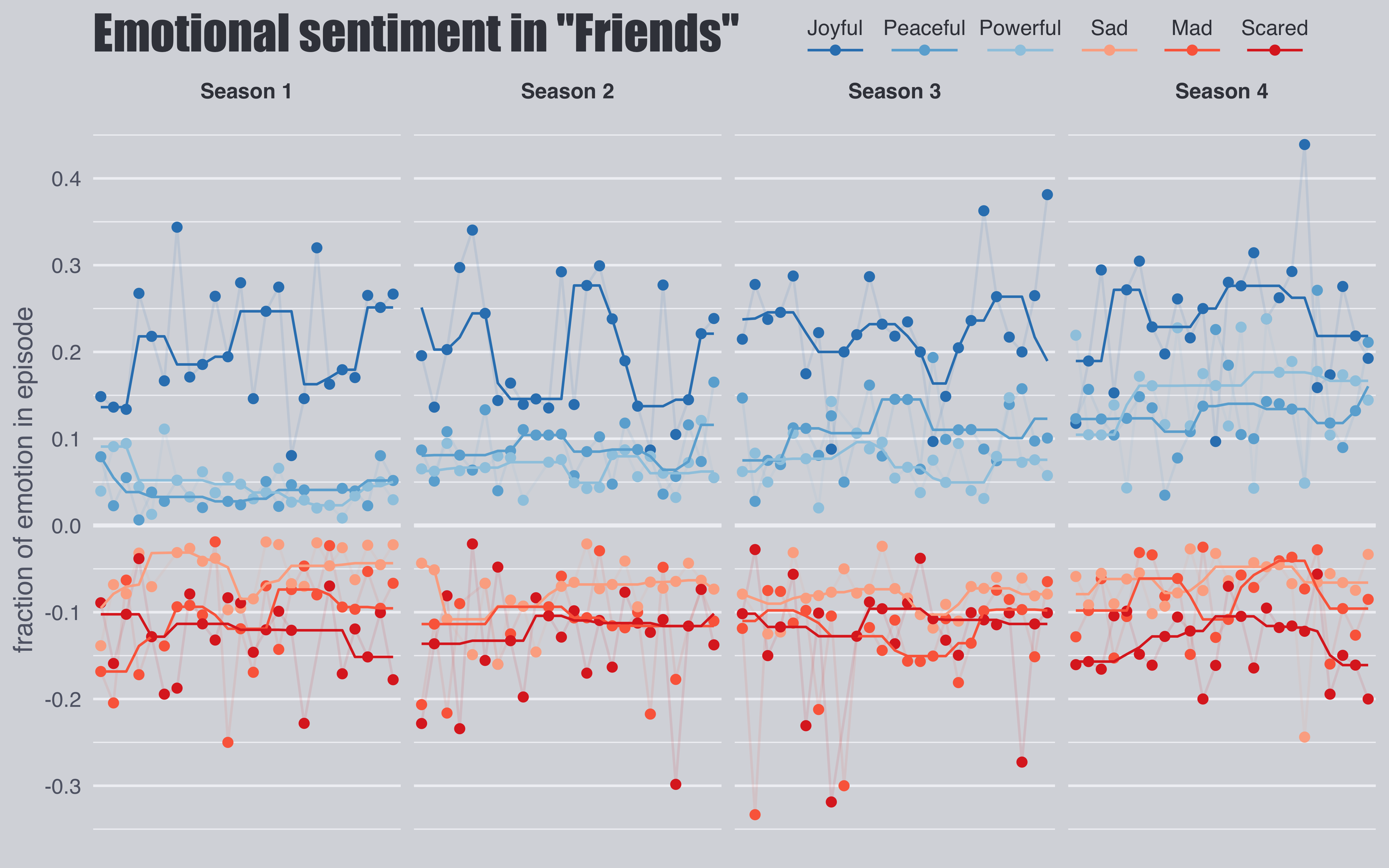

September 8, 2020 - Friends

I just practiced designing a good-looking graphic. I have a long way to go, but it was a good first effort.

August 11, 2020 - Avatar: The Last Airbender

I experimented with prior predictive checks using this week’s data set.

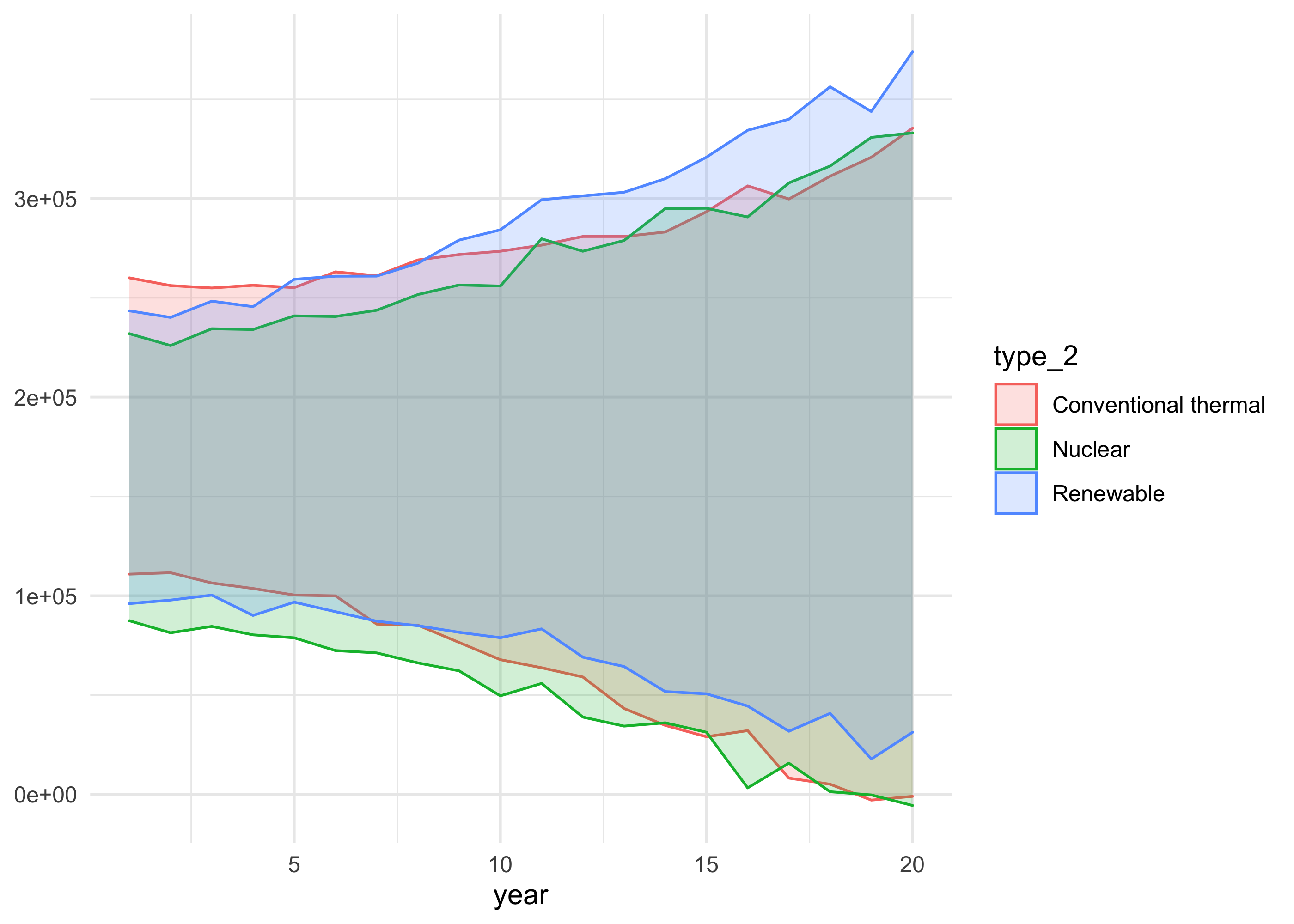

August 4, 2020 - European energy

Today’s attempt was a bit of a bust: I tried to do some modeling, but there was not very much data. This week’s data set favored those who like to make fancy visualizations.

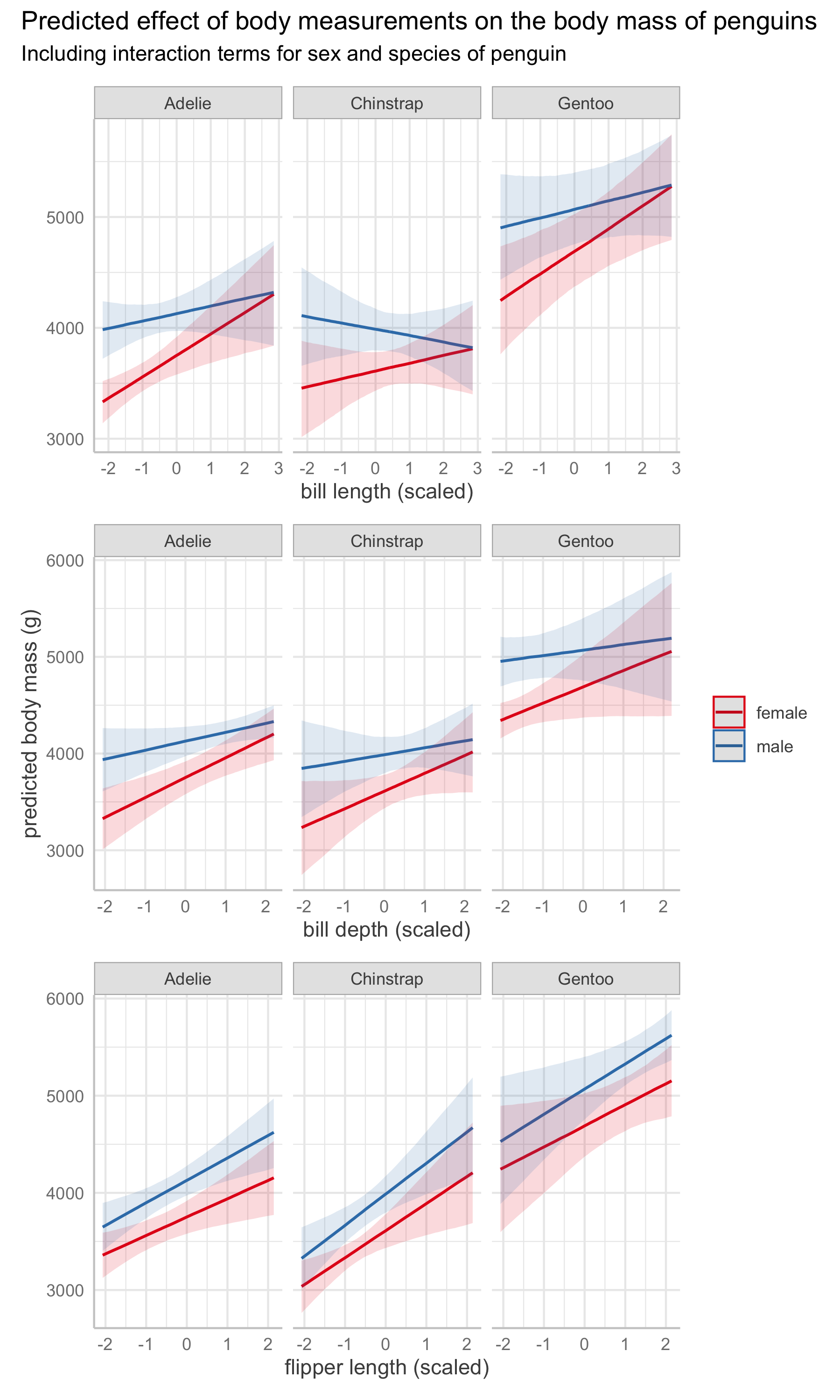

July 28, 2020 - Palmer Penguins

I took this TidyTuesday as an opportunity to try out the ‘ggeffects’ package.

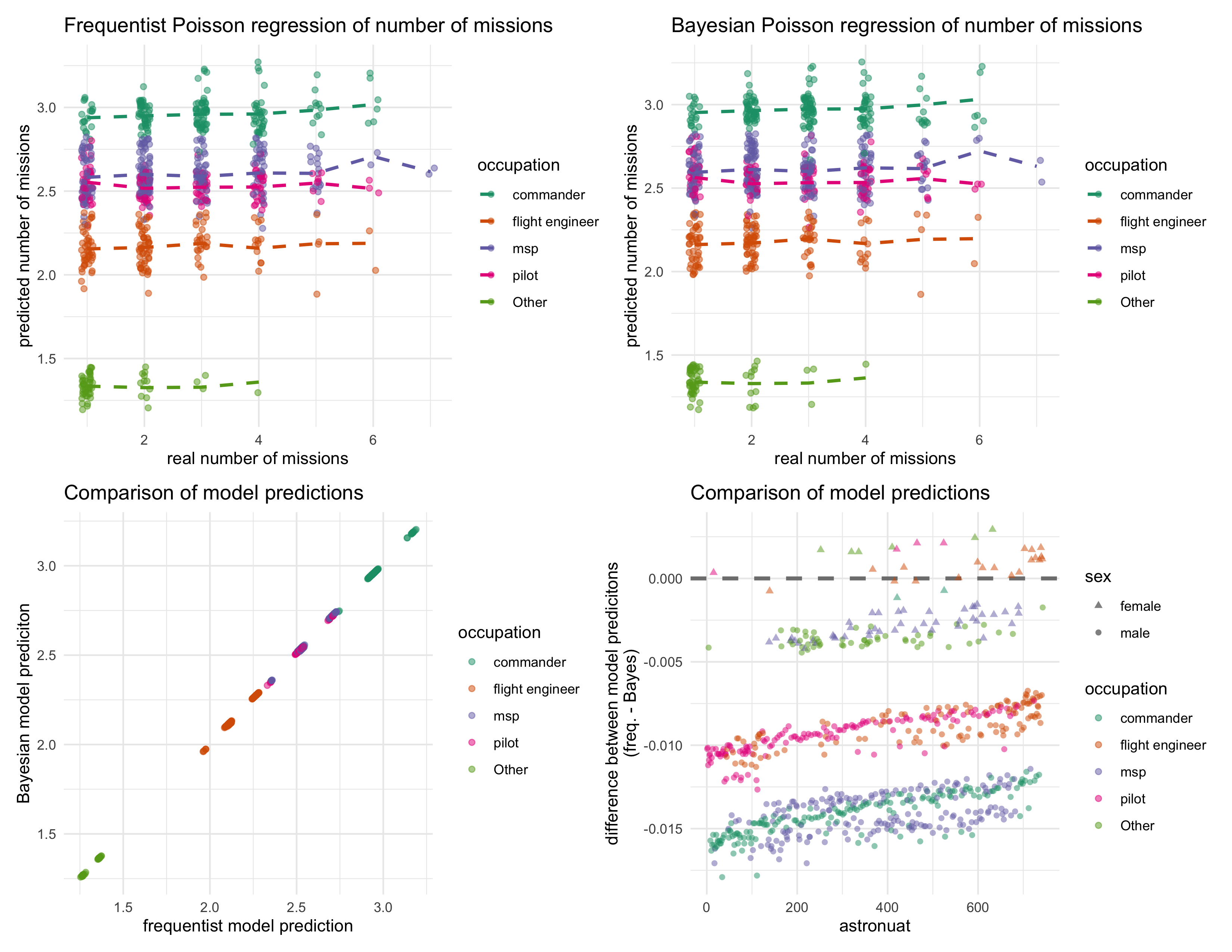

July 14, 2020 - Astronaut database

I compared the same Poisson regression model fit with frequentist methods and with Bayesian methods.

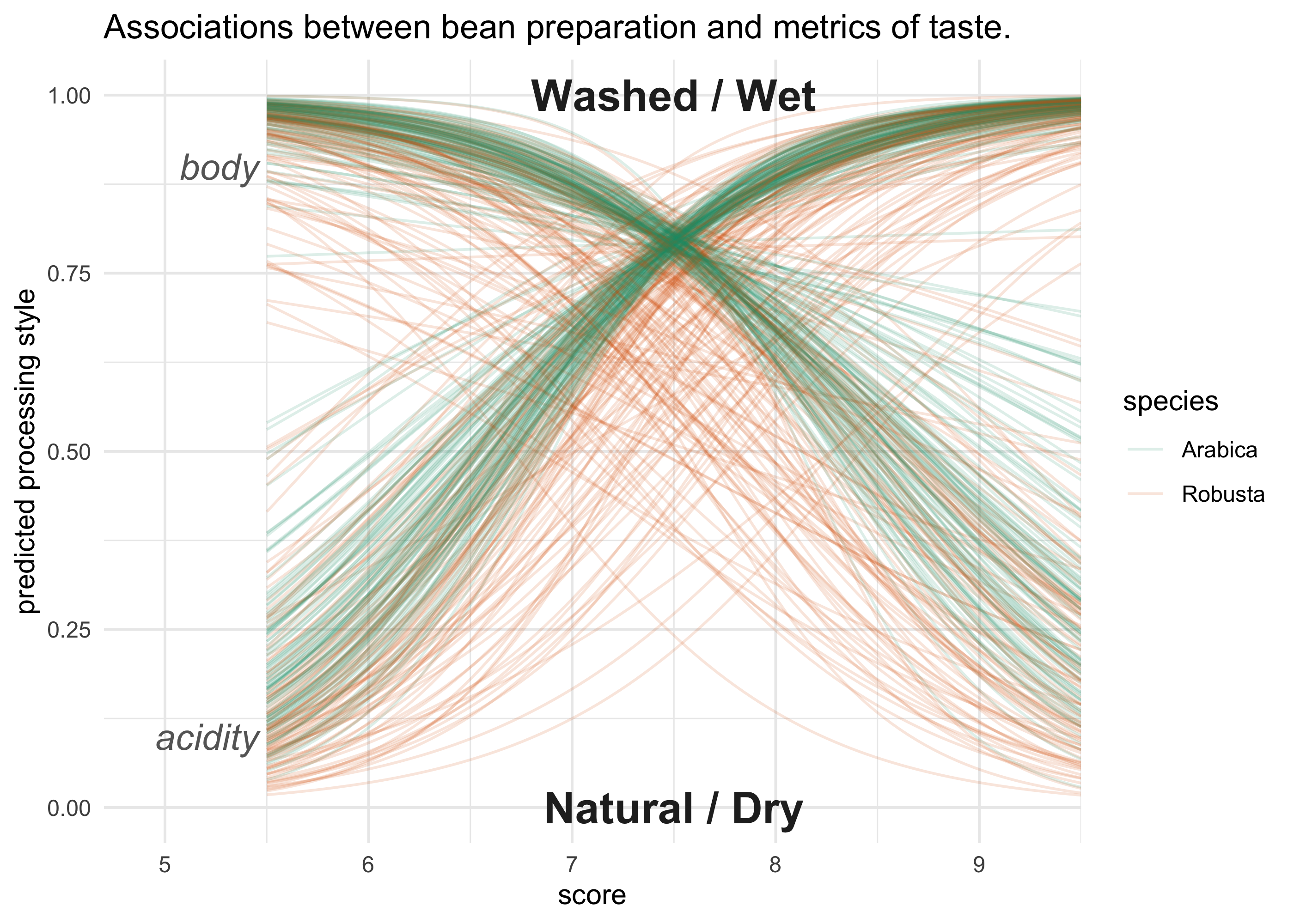

July 7, 2020 - Coffee Ratings

I practiced linear modeling by building a couple of models, including a logistic regression of the bean processing method on flavor metrics.

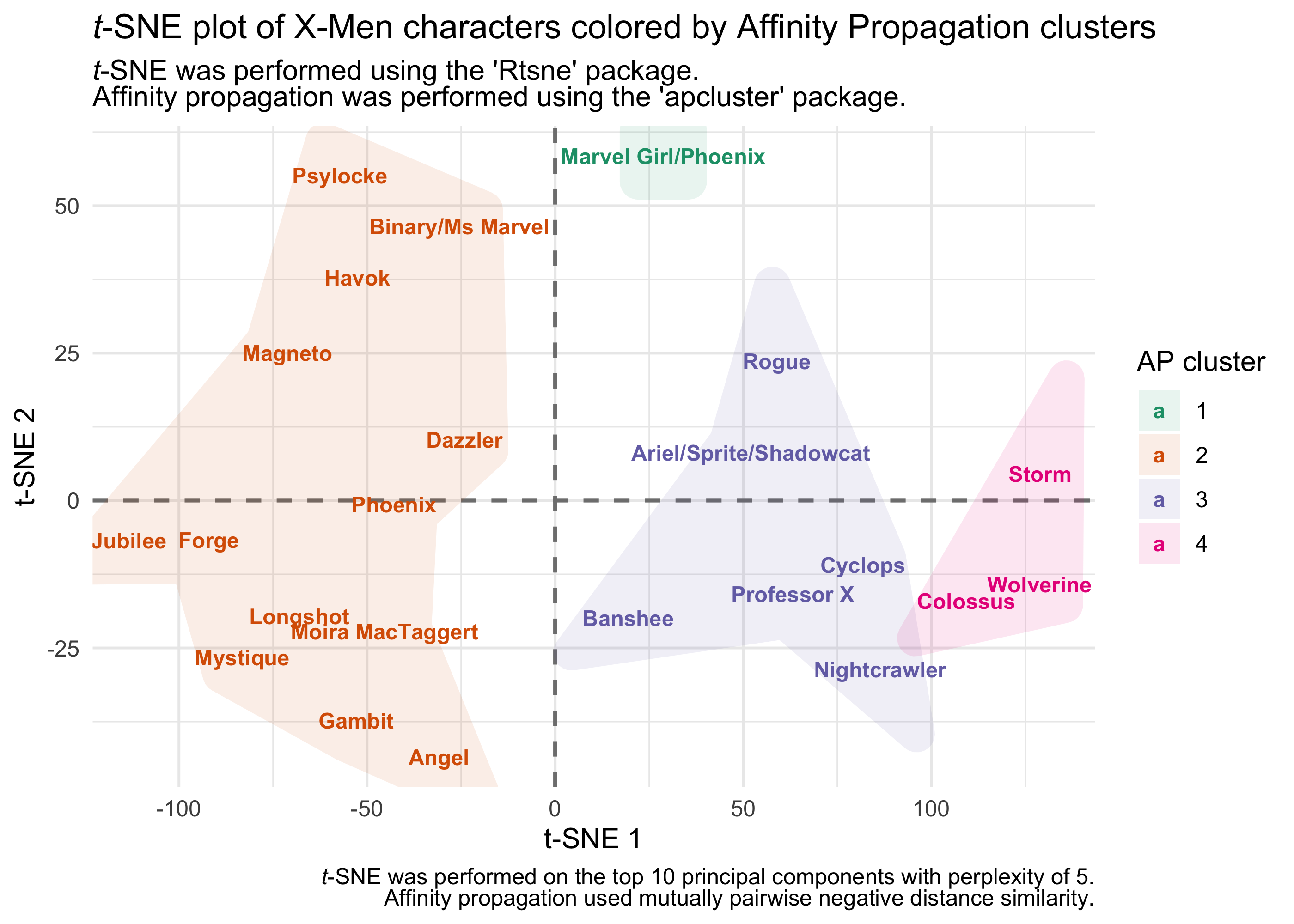

June 30, 2020 - Uncanny X-Men

I played around with DBSCAN and affinity propagation clustering.

June 23, 2020 - Caribou Location Tracking

I used a linear model with varying intercepts for each caribou to model the speed of a caribou depending on the season. Without accounting for the unique intercept for each caribou, the difference in speed was not detectable.

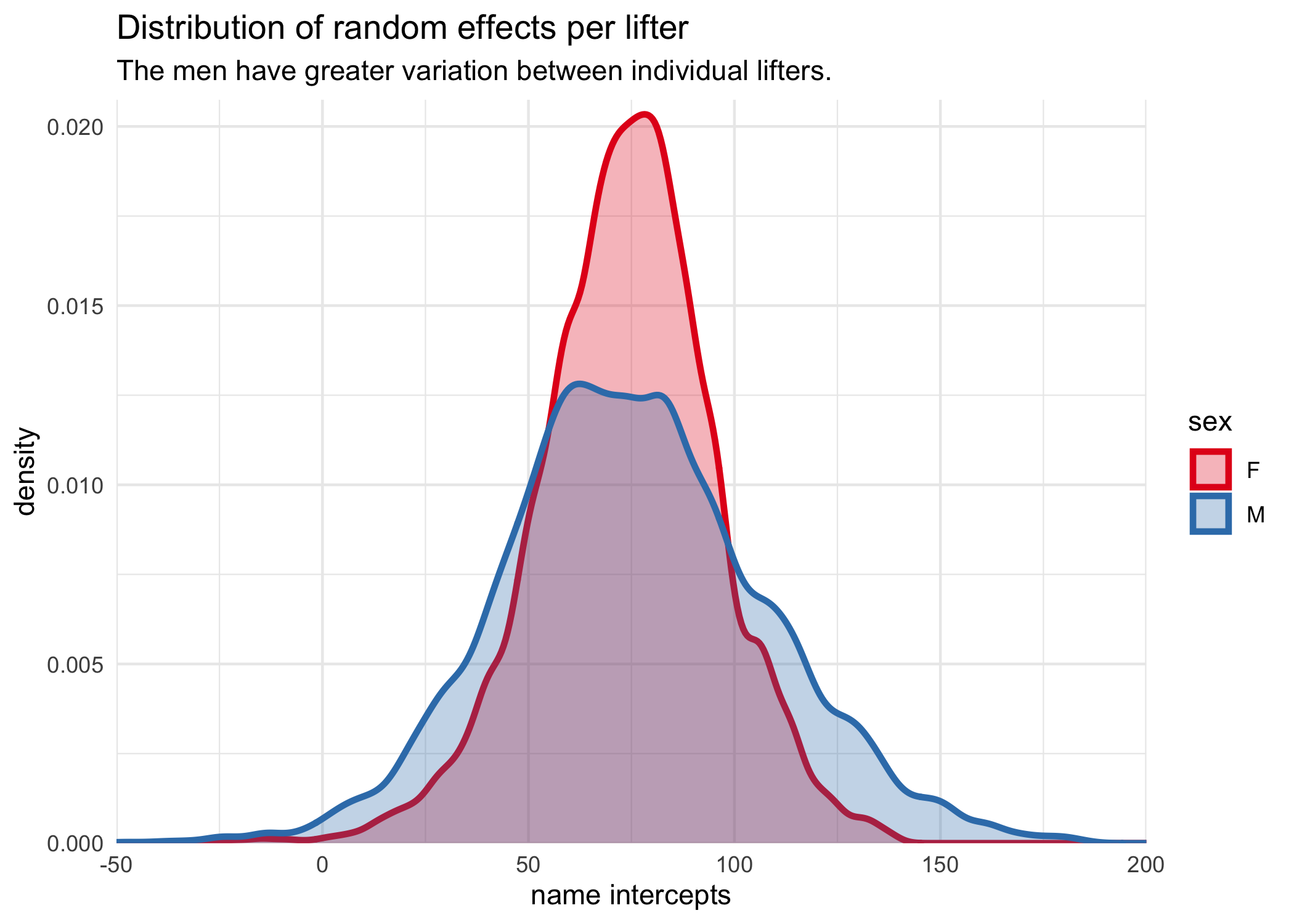

June 16, 2020 - International Powerlifting

I spent more time practicing building and understanding mixed-effects models.

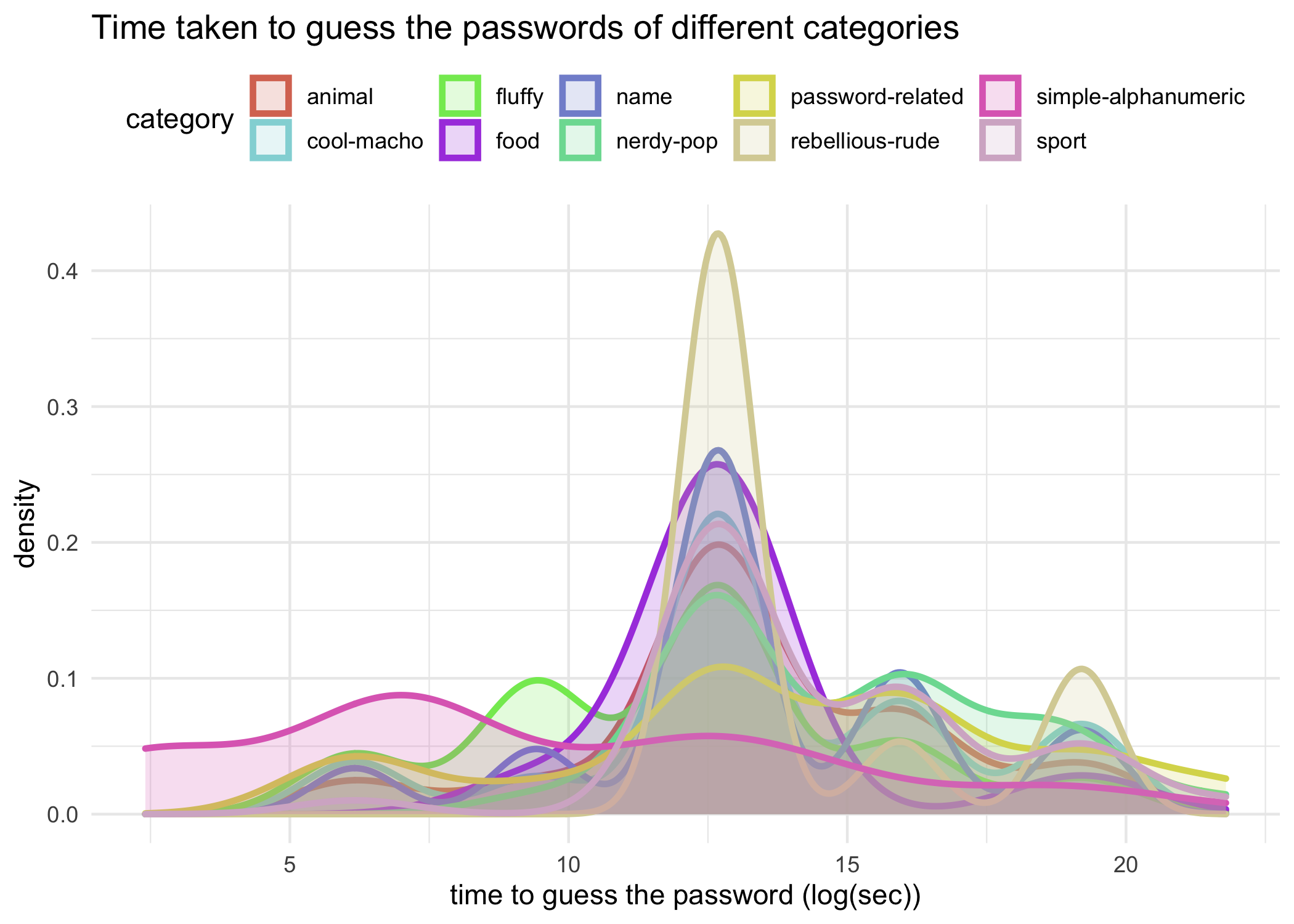

June 9, 2020 - Passwords

I experimented with various modeling methods, though I didn’t do anything terribly spectacular this time around.

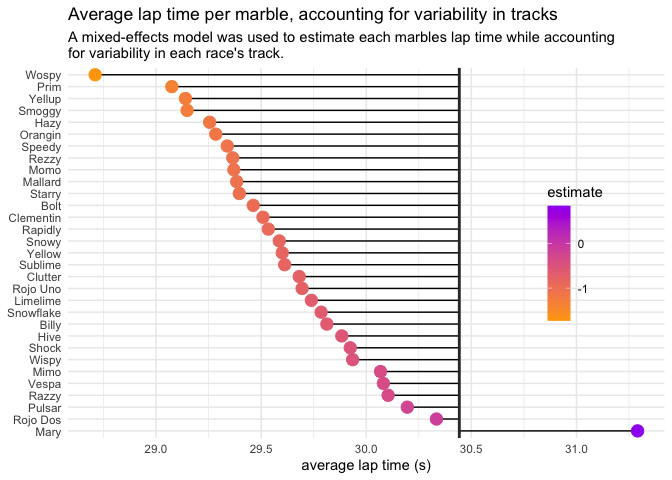

June 2, 2020 - Marble Racing

I played around with the data by asking a few smaller questions about the differences between marbles.

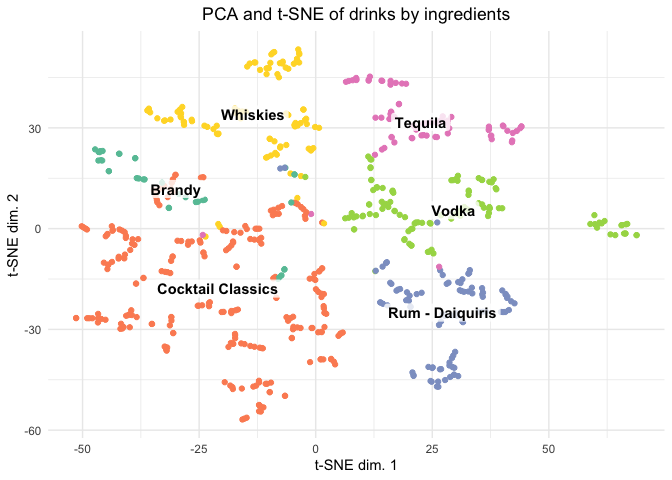

May 26, 2020 - Cocktails

I kept it simple this week because the data was quite limited. I clustered the drinks by their ingredients after doing some feature engineering to extract information from the list of ingredients.

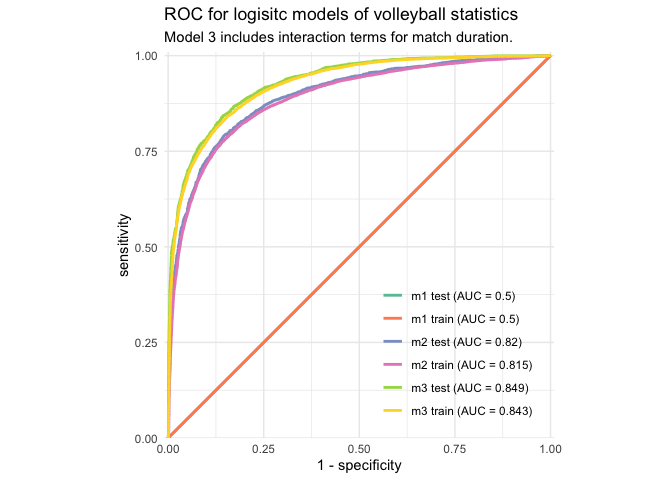

May 19, 2020 - Beach Volleyball

I used logistic models to predict winners and losers of volleyball matches based on gameplay statistics (e.g. number of attacks, errors, digs, etc.). I found that including interactions with game duration increased the performance of the model without overfitting.

May 12, 2020 - Volcano Eruptions

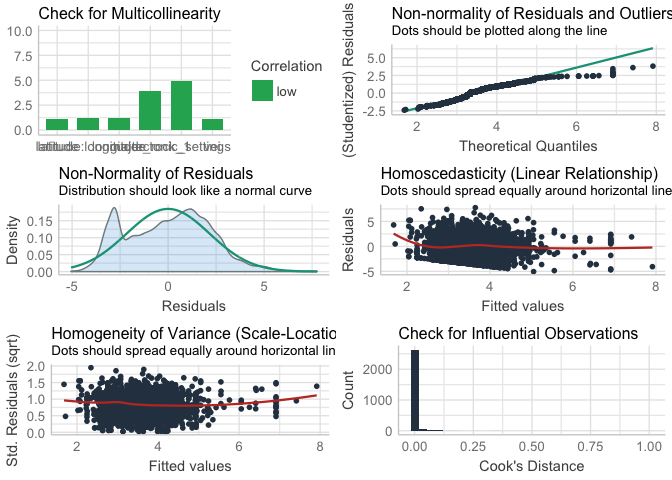

I took this as a chance to play around with the suite of packages from ‘easystats’. Towards the end, I also experimented a bit more with mixed-effects modeling to better understand how to interpret these models.

May 5, 2020 - Animal Crossing: New Horizons

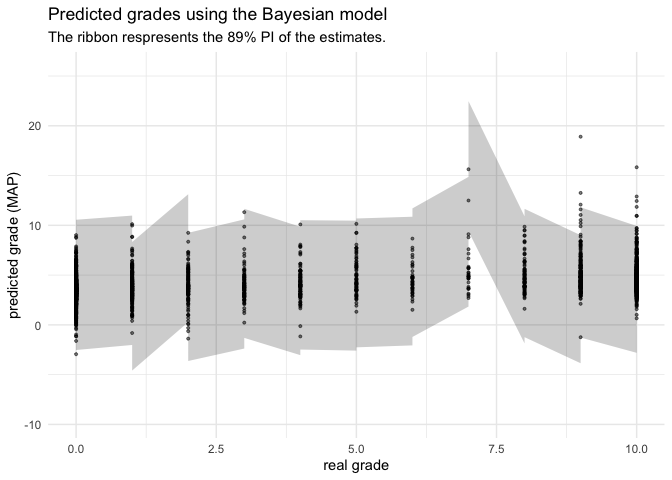

I used the results of a sentiment analysis of user reviews to model each review’s grade with a multivariate Bayesian model fit using the quadratic approximation. The model was pretty awful, but I got some good practice with this statistical technique that I am still learning.

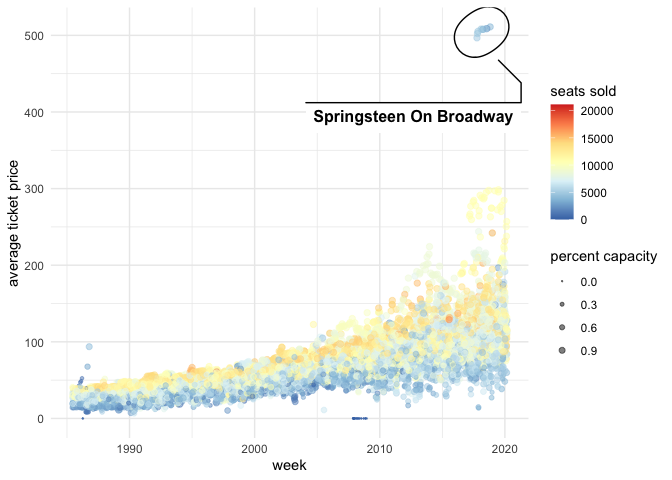

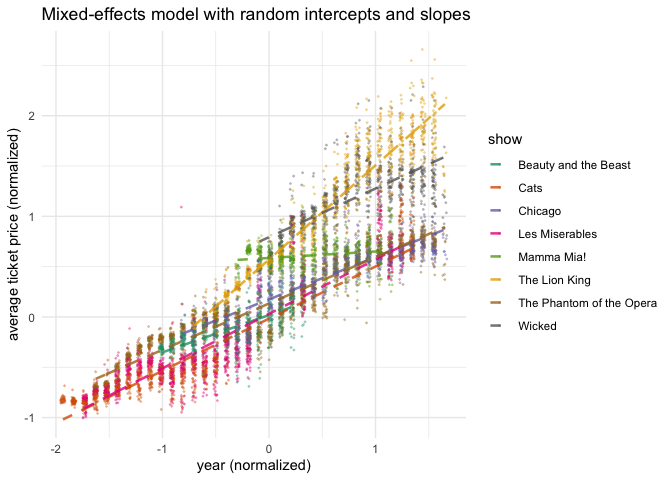

April 28, 2020 - Broadway Weekly Grosses

This data set was not very interesting to me because the numerical values were basically all derived from a single value, which made it very difficult to avoid highly correlated covariates when modeling. Still, I got some practice at creating and interpreting mixed-effects models.

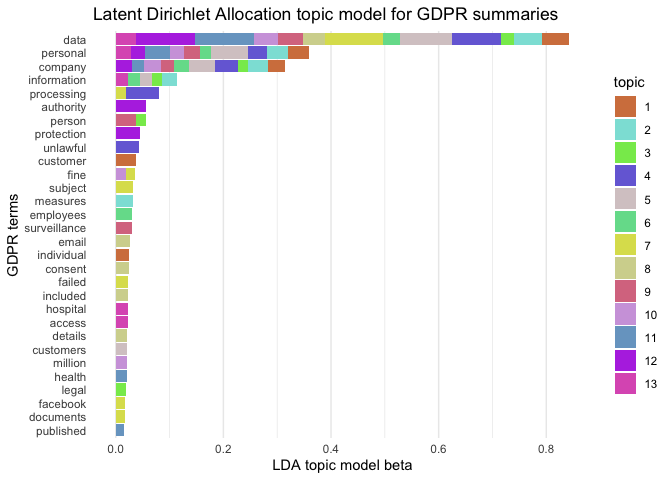

April 21, 2020 - GDPR Violations

I used the ‘tidytext’ and ‘topicmodels’ packages to group the GDPR fines based on summaries about the violations.

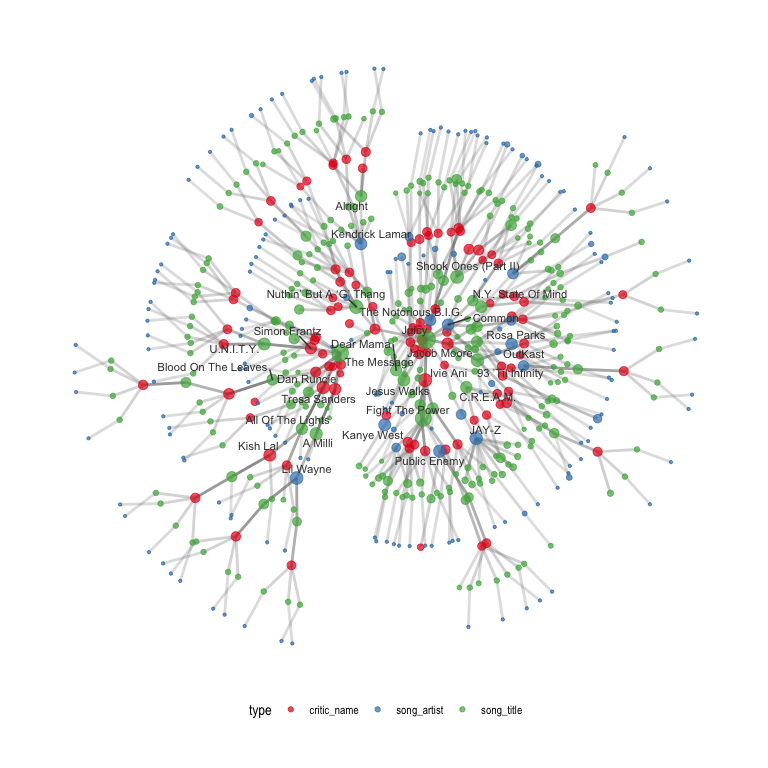

April 14, 2020 - Best Rap Artists

I built a network graph of the songs, artists, and critics using rap song rankings.

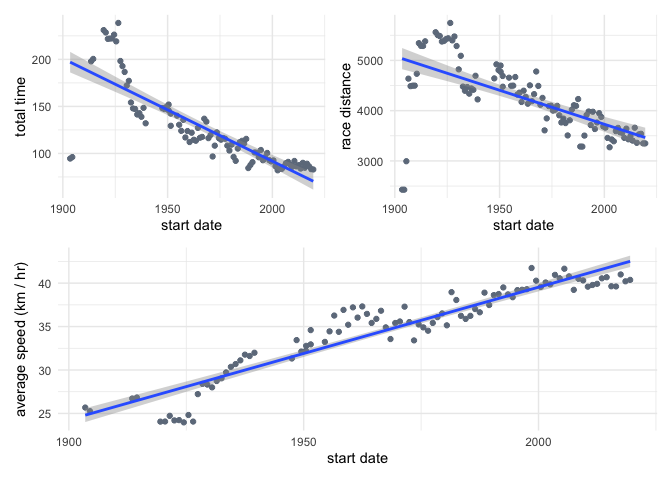

April 7, 2020 - Tour de France

There was quite a lot of data and it took me too long to sort through it all. Next time, I will focus more on asking a single simple question rather than trying to understand every aspect of the data.

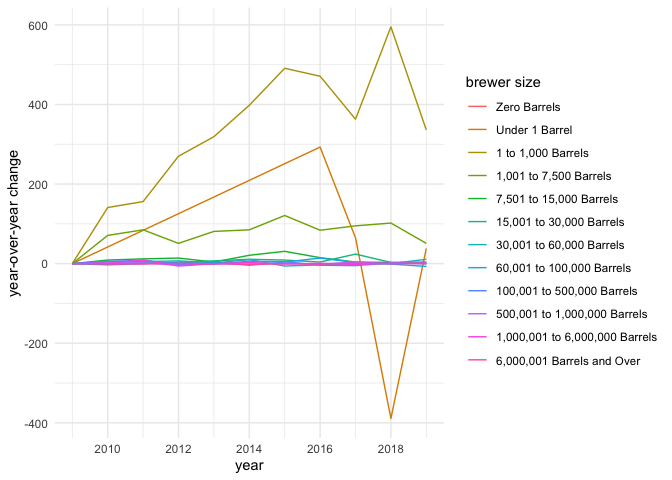

March 31, 2020 - Beer Production

I analyzed the number of breweries in various size categories and found a shift of very small microbreweries into higher-capacity categories in 2018 and 2019.

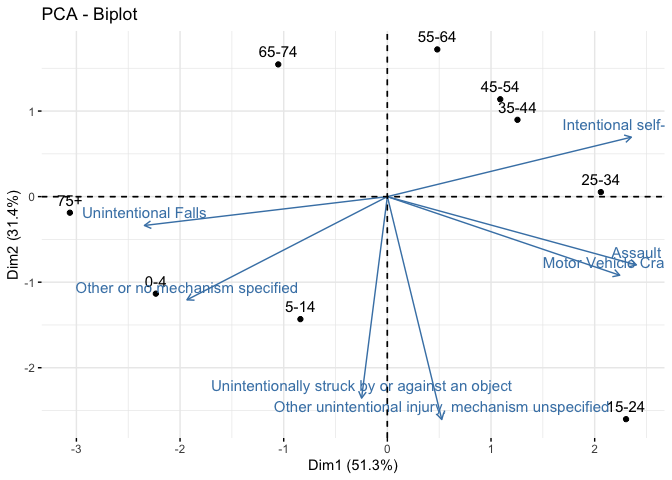

March 24, 2020 - Traumatic Brain Injury

The data was somewhat limiting because we only had summary statistics for categorical variables, but I was able to use PCA to identify some interesting properties of the TBIs sustained by different age groups.

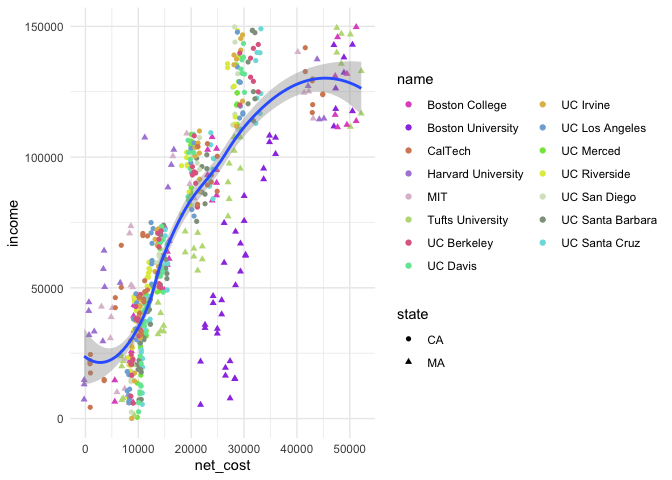

March 10, 2020 - College Tuition, Diversity, and Pay

I tried to do some classic linear modeling and mixed-effects modeling, but the data didn’t really require it. Still, I got some practice with these methods and read plenty about them online during the process.

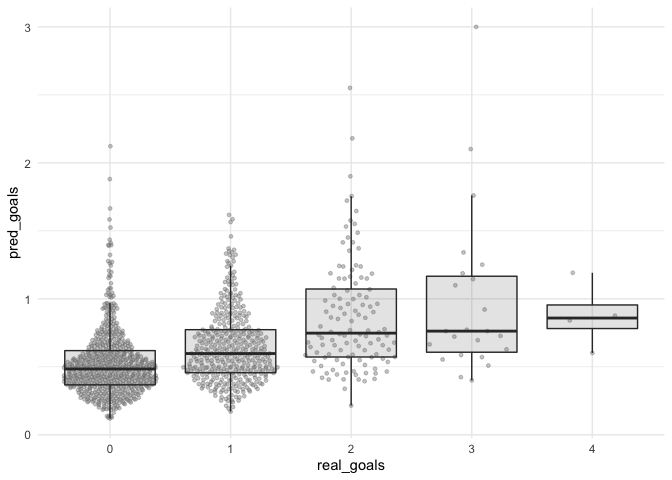

March 3, 2020 - Hockey Goals

I got some practice building regression models for count data by fitting Poisson, negative binomial, and zero-inflated Poisson regression models to estimate the effect of various game parameters on the goals scored by Alex Ovechkin.

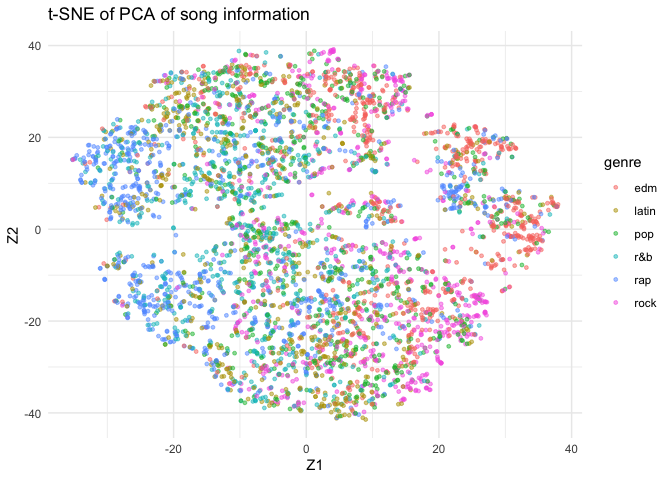

January 21, 2020 - Spotify Songs

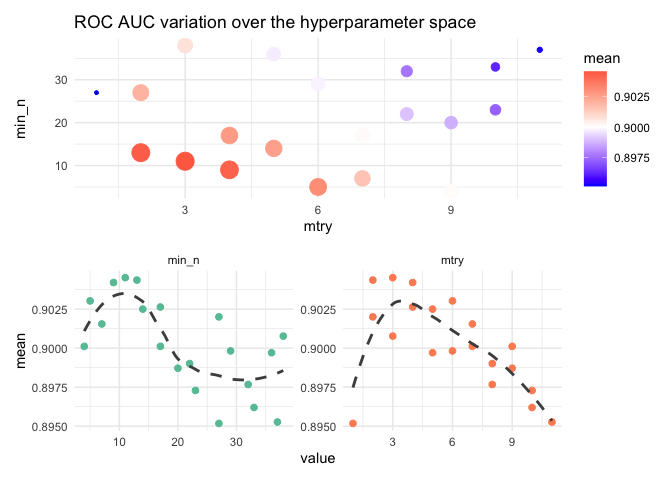

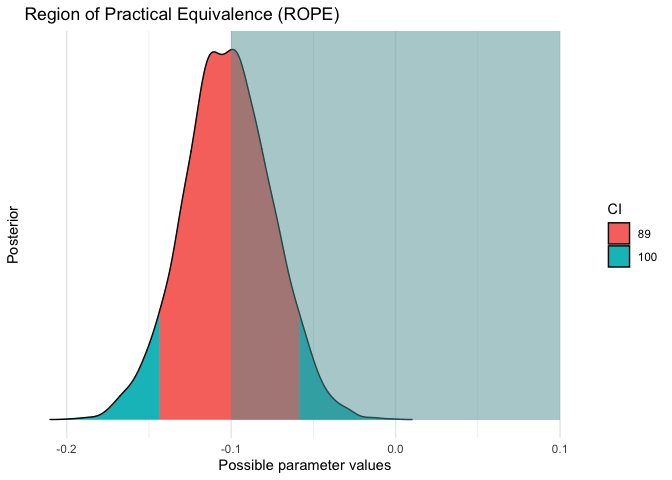

I used a random forest model to predict the genre of a playlist from the musical features of its songs. This gave me a chance to play around with the ‘tidymodels’ framework.

October 15, 2019

I chose this old TidyTuesday data set because I wanted to build a simple linear model using Bayesian methods. I didn’t do too much (and probably got a few things wrong), but it was a useful exercise for playing around with the modeling.