K-CAI NEURAL API is a Keras-based neural network API that allows you to:

- Create parameter-efficient neural networks: V1 and V2.

- Create noise-resistant neural networks for image classification and achieve state-of-the-art classification accuracy.

- Use an extremely well tested data augmentation wrapper for image classification (see `cai.util.create_image_generator` below).

- Add non-standard layers to your neural network.

- Visualize first layer filters, activation maps, heatmaps (see example) and gradient ascent (see example).

- Prototype convolutional neural networks faster (see example).

- Save a TensorFlow dataset for image classification into a local folder structure with `cai.datasets.save_tfds_in_format`. See example.

This project is a subproject of a bigger and older project called CAI and is a sister project to the Free Pascal based CAI NEURAL API.

All you need is Keras, Python and pip. Alternatively, if you prefer running in your web browser without installing any software on your computer, you can run it on Google Colab.

For a quick start, you can try the Simple Image Classification with any Dataset example. This example shows how to create a model and train it with a dataset passed as a parameter. Feel free to modify the parameters and to add/remove neural layers directly from your browser.

Installing via shell is very simple:

git clone https://github.com/joaopauloschuler/k-neural-api.git k

cd k && pip install .

Place this at the top of your Google Colab Jupyter Notebook:

import os

if not os.path.isdir('k'):

    !git clone https://github.com/joaopauloschuler/k-neural-api.git k

else:

    !cd k && git pull

!cd k && pip install .
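Once either install path has finished, a quick sanity check is to ask Python whether the package can be found. The sketch below uses only the standard library. The helper name `is_installed` is hypothetical, and `cai` is assumed to be the top-level package name because the modules in this document are referenced as `cai.datasets`, `cai.models` and so on.

```python
import importlib.util


def is_installed(package_name: str) -> bool:
    """Return True when a top-level package can be located without importing it."""
    return importlib.util.find_spec(package_name) is not None


if __name__ == "__main__":
    # "cai" is assumed to be the package installed by `pip install .` above.
    print("cai importable:", is_installed("cai"))
```

If this prints `False`, the most likely cause is that `pip install .` ran in a different Python environment than the one executing the check.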

The documentation is composed of examples and PyDoc.

These examples show how to train a neural network for the task of image classification. Most examples train a neural network with the CIFAR-10 or CIFAR-100 datasets.

- Simple Image Classification with any Dataset: this example shows how to create a model and train it with a dataset passed as a parameter.

- DenseNet BC L40 with CIFAR-10: this example shows how to create a DenseNet model with `cai.densenet.simple_densenet` and easily train it with `cai.datasets.train_model_on_cifar10`.

- DenseNet BC L40 with CIFAR-100: this example shows how to create a DenseNet model with `cai.densenet.simple_densenet` and easily train it with `cai.datasets.train_model_on_dataset`.

- Experiment your own DenseNet Architecture: this example allows you to experiment with your own DenseNet settings.

- Saving a TensorFlow dataset into png files so you can use the dataset with Keras image generator.
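To make the "classes are folders" idea from the last example concrete, here is a minimal standard-library sketch of such a layout, with one sub-folder per class, which is the structure Keras directory-based image generators expect. It is not the code behind `cai.datasets.save_tfds_in_format`: the helper names, the `train` split folder and the `index.png` file naming are all assumptions made for illustration.

```python
import tempfile
from pathlib import Path


def plan_image_paths(root, split, labels, class_names, extension="png"):
    """Map each sample index to root/split/class_name/index.extension (assumed layout)."""
    paths = []
    for index, label in enumerate(labels):
        class_dir = Path(root) / split / class_names[label]
        paths.append(class_dir / f"{index}.{extension}")
    return paths


def create_class_folders(paths):
    """Create the parent (class) folder of every planned file."""
    for path in paths:
        path.parent.mkdir(parents=True, exist_ok=True)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        # Three samples with integer labels, two hypothetical class names.
        planned = plan_image_paths(root, "train", [0, 1, 0], ["cat", "dog"])
        create_class_folders(planned)
        for path in planned:
            print(path.relative_to(root))
```

Only the folders are created here; writing the actual image bytes is left to whichever image library you already use.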

These papers show how to create parameter-efficient models (source code is available):

- An Enhanced Scheme for Reducing the Complexity of Pointwise Convolutions in CNNs for Image Classification Based on Interleaved Grouped Filters without Divisibility Constraints.

- Grouped Pointwise Convolutions Reduce Parameters in Convolutional Neural Networks.

- Color-aware two-branch DCNN for efficient plant disease classification.

- Grouped Pointwise Convolutions Significantly Reduces Parameters in EfficientNet.

- Making plant disease classification noise resistant.
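As a rough intuition for why grouping pointwise (1x1) convolutions saves parameters, the arithmetic can be sketched in a few lines. This is the textbook grouped-convolution weight count, not the exact scheme from the papers above (the first title indicates that it also interleaves filters and lifts the divisibility constraint that this simple sketch keeps). The function names are hypothetical.

```python
def pointwise_params(in_channels: int, out_channels: int) -> int:
    """Weights of a standard 1x1 convolution (bias ignored)."""
    return in_channels * out_channels


def grouped_pointwise_params(in_channels: int, out_channels: int, groups: int) -> int:
    """Weights of a 1x1 convolution split into `groups` independent groups."""
    if in_channels % groups or out_channels % groups:
        raise ValueError("this simple sketch needs channel counts divisible by groups")
    # Each group maps in_channels/groups inputs to out_channels/groups outputs.
    return (in_channels // groups) * (out_channels // groups) * groups


if __name__ == "__main__":
    dense = pointwise_params(256, 512)
    grouped = grouped_pointwise_params(256, 512, groups=16)
    print(dense, grouped, dense // grouped)
```

With `groups` independent groups, the weight count drops by a factor of `groups` compared with the ungrouped layer.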

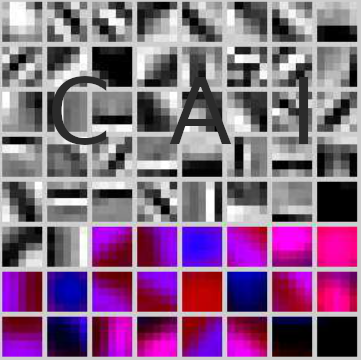

The Heatmap and Activation Map with CIFAR-10 example shows how to quickly display heatmaps (CAM), activation maps and first layer filters/patterns.

These are filter examples:

The image above was created with code similar to this:

weights = model.get_layer('layer_name').get_weights()[0]

neuron_patterns = cai.util.show_neuronal_patterns(weights, NumRows = 8, NumCols = 8, ForceCellMax = True)

...

plt.imshow(neuron_patterns, interpolation='nearest', aspect='equal')
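If you want to see what happens to the weights before they are displayed, the following NumPy sketch may help. It is not the implementation of `cai.util.show_neuronal_patterns`; it only assumes the standard Keras `Conv2D` kernel layout `(height, width, in_channels, filters)` returned by `get_weights()[0]` and rescales each filter to `[0, 1]`. The function name `filters_to_images` is hypothetical.

```python
import numpy as np


def filters_to_images(kernel: np.ndarray) -> np.ndarray:
    """Turn a (height, width, in_channels, filters) kernel into one image per filter.

    Each filter is rescaled independently to [0, 1] so it can be displayed.
    """
    # Move the filter axis to the front: (filters, height, width, in_channels).
    per_filter = np.moveaxis(kernel, -1, 0).astype(np.float64)
    lows = per_filter.min(axis=(1, 2, 3), keepdims=True)
    highs = per_filter.max(axis=(1, 2, 3), keepdims=True)
    # Guard against constant filters, which would otherwise divide by zero.
    spans = np.where(highs > lows, highs - lows, 1.0)
    return (per_filter - lows) / spans


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fake_kernel = rng.normal(size=(3, 3, 3, 64))  # stand-in for get_weights()[0]
    images = filters_to_images(fake_kernel)
    print(images.shape)
```

Each entry of the result can be passed to `plt.imshow` on its own when the first layer has 3 input channels (RGB).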

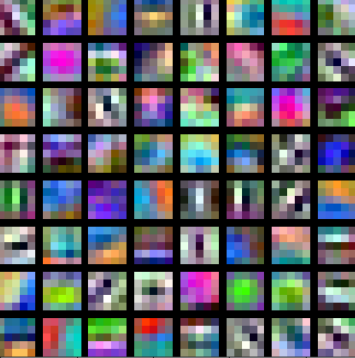

These are activation map examples:

The activation maps shown above were created with code similar to this:

conv_output = cai.models.PartialModelPredict(InputImage, model, 'layer_name', False)

...

activation_maps = cai.util.slice_3d_into_2d(aImage=conv_output[0], NumRows=8, NumCols=8, ForceCellMax=True)

...

plt.imshow(activation_maps, interpolation='nearest', aspect='equal')
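The idea of laying out the channels of a 3D activation volume on a 2D grid can be sketched with plain NumPy. This is an illustration of the tiling step only, not the code of `cai.util.slice_3d_into_2d`: the function name `tile_channels` is hypothetical, and the per-cell scaling that `ForceCellMax` suggests is left out because its exact behaviour is not described here.

```python
import numpy as np


def tile_channels(volume: np.ndarray, rows: int, cols: int) -> np.ndarray:
    """Arrange the first rows*cols channels of a (H, W, C) array on a 2D grid."""
    height, width, channels = volume.shape
    if rows * cols > channels:
        raise ValueError("not enough channels to fill the grid")
    # (H, W, rows*cols) -> (rows*cols, H, W) -> (rows, cols, H, W)
    cells = np.moveaxis(volume[:, :, : rows * cols], -1, 0)
    cells = cells.reshape(rows, cols, height, width)
    # Interleave grid and pixel axes, then merge them: (rows*H, cols*W).
    return cells.transpose(0, 2, 1, 3).reshape(rows * height, cols * width)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fake_activations = rng.random((8, 8, 64))  # stand-in for conv_output[0]
    grid = tile_channels(fake_activations, rows=8, cols=8)
    print(grid.shape)
```

Channel `r * cols + c` ends up in grid cell `(r, c)`, so the channels read left to right, top to bottom.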

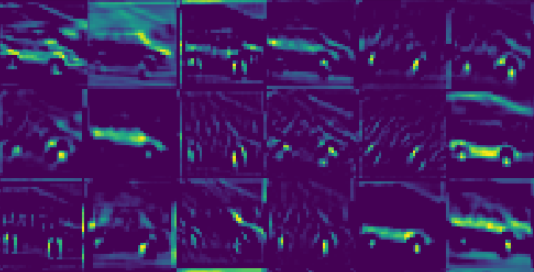

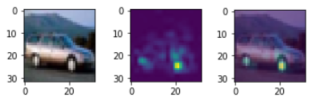

The following image shows a car (left - input sample), its heatmap (center) and both added together (right).

Heatmaps can be produced following this example:

heat_map = cai.models.calculate_heat_map_from_dense_and_avgpool(InputImage, image_class, model, pOutputLayerName='last_conv_layer', pDenseLayerName='dense')
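For readers curious about what a class activation map computes, here is a minimal NumPy sketch of the CAM formula from Learning Deep Features for Discriminative Localization: a sum of the last convolutional feature maps, weighted by the dense-layer weights of the chosen class. It is not the implementation of `cai.models.calculate_heat_map_from_dense_and_avgpool`; the function name is hypothetical and the final rescaling to `[0, 1]` is a display choice made here.

```python
import numpy as np


def class_activation_map(feature_maps, dense_weights, class_index):
    """Weighted sum of feature maps for one class.

    feature_maps:  (H, W, K) output of the last convolutional layer.
    dense_weights: (K, num_classes) kernel of the dense layer that follows
                   global average pooling.
    """
    class_weights = dense_weights[:, class_index]  # shape (K,)
    cam = feature_maps @ class_weights             # shape (H, W)
    # Rescale to [0, 1] for display; a flat map becomes all zeros.
    cam = cam - cam.min()
    peak = cam.max()
    return cam / peak if peak > 0 else cam


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fake_features = rng.random((8, 8, 32))  # stand-in for the conv layer output
    fake_weights = rng.normal(size=(32, 10))  # stand-in for the dense kernel
    print(class_activation_map(fake_features, fake_weights, class_index=1).shape)
```

The resulting map has the spatial size of the convolutional output, so it is usually resized to the input image size before being overlaid on it.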

With cai.gradientascent.run_gradient_ascent_octaves, you can easily run gradient ascent to create Deep Dream-like images:

base_model = tf.keras.applications.InceptionV3(include_top=False, weights='imagenet')

pmodel = cai.models.CreatePartialModel(base_model, 'mixed3')

new_img = cai.gradientascent.run_gradient_ascent_octaves(img=original_img, partial_model=pmodel, low_range=-4, high_range=1)

plt.figure(figsize = (16, 16))

plt.imshow(new_img, interpolation='nearest', aspect='equal')

plt.show()

Above image was generated from:

There is a ready-to-use example: Gradient Ascent / Deep Dream Example.
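The "octaves" in the function name refer to running gradient ascent at several image scales. The sketch below only shows how such a scale schedule can be derived from a pair of range values like the `low_range=-4, high_range=1` used above. It rests on assumptions: the scale factor of 1.3 per octave is borrowed from the TensorFlow DeepDream tutorial, the upper bound is treated as inclusive, and `octave_shapes` is a hypothetical helper. The actual behaviour of `cai.gradientascent.run_gradient_ascent_octaves` may differ.

```python
def octave_shapes(base_shape, low_range, high_range, octave_scale=1.3):
    """Image sizes visited when stepping through octaves low_range..high_range.

    octave_scale=1.3 and the inclusive upper bound are assumptions.
    """
    height, width = base_shape
    shapes = []
    for octave in range(low_range, high_range + 1):
        factor = octave_scale ** octave
        shapes.append((int(height * factor), int(width * factor)))
    return shapes


if __name__ == "__main__":
    for shape in octave_shapes((512, 512), low_range=-4, high_range=1):
        print(shape)
```

Negative octaves shrink the image, so coarse patterns are formed first and are then refined as the image grows back to and past its original size.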

After installing K-CAI, you can find documentation with:

python -m pydoc cai.datasets

python -m pydoc cai.densenet

python -m pydoc cai.layers

python -m pydoc cai.models

python -m pydoc cai.util
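The same pages can also be fetched from inside Python or a notebook cell with the standard `pydoc` module. The sketch below uses `json` as a stand-in module so that it runs anywhere; after installing K-CAI, a name such as `cai.layers` could be passed instead. The helper name `module_docs` is hypothetical.

```python
import pydoc


def module_docs(module_name: str) -> str:
    """Return the plain-text pydoc page for an importable module."""
    return pydoc.render_doc(module_name, renderer=pydoc.plaintext)


if __name__ == "__main__":
    # "json" is only a stand-in; swap in "cai.layers" once K-CAI is installed.
    print(module_docs("json")[:300])
```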

These papers were written using the K-CAI API:

- An Enhanced Scheme for Reducing the Complexity of Pointwise Convolutions in CNNs for Image Classification Based on Interleaved Grouped Filters without Divisibility Constraints.

- Grouped Pointwise Convolutions Reduce Parameters in Convolutional Neural Networks.

- Grouped Pointwise Convolutions Significantly Reduces Parameters in EfficientNet.

- Reliable Deep Learning Plant Leaf Disease Classification Based on Light-Chroma Separated Branches.

- Color-aware two-branch DCNN for efficient plant disease classification.

- A number of new layer types (see below).

- `cai.util.create_image_generator`: this wrapper has extremely well tested default parameters for image classification data augmentation. Getting better image classification accuracy might be just a matter of replacing your current data augmentation generator with this one. Give it a go!

- `cai.util.create_image_generator_no_augmentation`: image generator for test datasets.

- `cai.densenet.simple_densenet`: simple way to create DenseNet models. See example.

- `cai.datasets.load_hyperspectral_matlab_image`: downloads (if required) and loads a hyperspectral image from a MATLAB file. This function has been tested with AVIRIS and ROSIS sensor data stored as MATLAB files.

- `cai.models.calculate_heat_map_from_dense_and_avgpool`: calculates a class activation mapping (CAM) inspired by the paper Learning Deep Features for Discriminative Localization (see the example above).

- `cai.util.show_neuronal_patterns`: creates an array for visualizing first layer neuronal filters/patterns (see the example above).

- `cai.models.CreatePartialModel(pModel, pOutputLayerName, hasGlobalAvg=False)`: creates a partial model up to the layer name defined in pOutputLayerName.

- `cai.models.CreatePartialModelCopyingChannels(pModel, pOutputLayerName, pChannelStart, pChannelCount)`: creates a partial model up to the layer name defined in pOutputLayerName and then copies channels starting from pChannelStart with pChannelCount channels.

- `cai.models.CreatePartialModelFromChannel(pModel, pOutputLayerName, pChannelIdx)`: creates a partial model up to the layer name defined in pOutputLayerName and then copies the channel at index pChannelIdx. Use it in combination with `cai.gradientascent.run_gradient_ascent_octaves` to run gradient ascent from a specific channel or neuron.

- `cai.models.CreatePartialModelWithSoftMax(pModel, pOutputLayerName, numClasses, newLayerName='k_probs')`: creates a partial model up to the layer name defined in pOutputLayerName and then adds a dense layer with softmax. This method was built to be used for image classification with transfer learning.

- `cai.gradientascent.run_gradient_ascent_octaves`: allows visualizing patterns recognized by inner neuronal layers. See example. Use it in combination with `cai.models.CreatePartialModel`, `cai.models.CreatePartialModelCopyingChannels` or `cai.models.CreatePartialModelFromChannel`.

- `cai.datasets.save_tfds_in_format`: saves a TensorFlow dataset as image files. Classes are folders. See example.

- `cai.datasets.load_images_from_folders`: practical way to load small datasets into memory. It supports smart resizing, LAB color encoding and bipolar inputs.

- `cai.layers.ConcatNegation`: concatenates the input with its negation (input tensor multiplied by -1).

- `cai.layers.CopyChannels`: copies a subset of the input channels.

- `cai.layers.EnforceEvenChannelCount`: enforces that the number of channels is even (divisible by 2).

- `cai.layers.FitChannelCountTo`: forces the number of channels to fit a specific number of channels. The new number of channels must be bigger than the number of input channels. The number of channels is fitted by concatenating copies of existing channels.

- `cai.layers.GlobalAverageMaxPooling2D`: adds both global average and max poolings. `cai.layers.GlobalAverageMaxPooling2D` speeds up training when used as a replacement for standard average pooling and max pooling.

- `cai.layers.InterleaveChannels`: interleaves channels stepping according to the number passed as parameter.

- `cai.layers.kPointwiseConv2D`: parameter-efficient pointwise convolution as shown in the papers Grouped Pointwise Convolutions Reduce Parameters in Convolutional Neural Networks and An Enhanced Scheme for Reducing the Complexity of Pointwise Convolutions in CNNs for Image Classification Based on Interleaved Grouped Filters without Divisibility Constraints.

- `cai.layers.Negate`: negates (multiplies by -1) the input tensor.

- `cai.layers.SumIntoHalfChannels`: divides channels into 2 halves and then sums both halves. This results in an output with half of the input channels.
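The channel manipulations described for some of these layers are easy to picture with plain NumPy arrays whose last axis holds the channels. The functions below are hypothetical stand-ins written from the one-line descriptions above, not the `cai.layers` implementations. In particular, the channel ordering used in `interleave_channels` is an assumption about what "stepping" means.

```python
import numpy as np


def concat_negation(x):
    """Concatenate x with -x along the channel (last) axis."""
    return np.concatenate([x, -x], axis=-1)


def copy_channels(x, channel_start, channel_count):
    """Keep channel_count channels starting at channel_start."""
    return x[..., channel_start:channel_start + channel_count]


def sum_into_half_channels(x):
    """Split channels into two halves and add them element-wise."""
    half = x.shape[-1] // 2
    return x[..., :half] + x[..., half:2 * half]


def interleave_channels(x, step):
    """Reorder channels as 0, step, 2*step, ..., 1, 1+step, ... (assumed ordering)."""
    channels = x.shape[-1]
    order = [c for start in range(step) for c in range(start, channels, step)]
    return x[..., order]


if __name__ == "__main__":
    x = np.arange(6.0).reshape(1, 1, 6)  # one pixel with channels 0..5
    print(concat_negation(x).shape)
    print(copy_channels(x, 2, 3)[0, 0])
    print(sum_into_half_channels(x)[0, 0])
    print(interleave_channels(x, 2)[0, 0])
```

`concat_negation` doubles the channel count while `sum_into_half_channels` halves it, which matches the descriptions of `ConcatNegation` and `SumIntoHalfChannels` above.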

This is an open source project. If you like what you see, please give it a star on GitHub.

You can cite this API in BibTeX format with:

@software{k_cai_neural_api_2021_5810092,

author = {Joao Paulo Schwarz Schuler},

title = {K-CAI NEURAL API},

month = dec,

year = 2021,

publisher = {Zenodo},

doi = {10.5281/zenodo.5810092},

url = {https://doi.org/10.5281/zenodo.5810092}

}