This repo is an extension of the weather-etl example repo. The weather-etl example provides students of data engineering with an example of an end-to-end ETL project that runs locally.

This project, weather-etl-aws, provides students with an example of the same project hosted on AWS.

data/ # contains static datasets

docs/ # contains additional documentation

images/ # contains images used for the README

lambda_app/

|__ _config.template.sh # template for adding credentials and secrets

|__ _config.template.bat # template for adding credentials and secrets

|__ ddl_create_table.sql # SQL code used to create the target tables

|__ etl_lambda.py # contains the main etl logic

|__ etl_lambda_local.py # contains the etl logic to run locally

|__ test_transformation_functions.py # pytest unit tests

|__ transform_functions.py # custom user-generated transformation functions

|__ requirements.txt # python dependencies for lambda app

|__ build.sh # shell script to build the zip file

|__ build.bat # batch script to build the zip file

README.md # all you need to know is in here

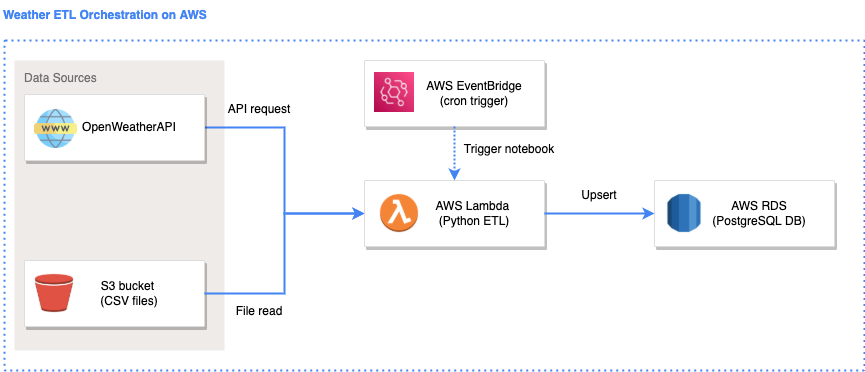

The solution architecture diagram was created using: https://draw.io/

Icons were taken from: https://www.flaticon.com/ and https://www.vecta.io/

Follow the steps below to run the code locally:

If you look in the etl_lambda.py file, you will see several lines like these:

# getting CSV_ENDPOINT_CAPITAL_CITIES environment variable

CSV_ENDPOINT_CAPITAL_CITIES = os.environ.get("CSV_ENDPOINT_CAPITAL_CITIES")

# getting API_KEY_OPEN_WEATHER environment variable

API_KEY_OPEN_WEATHER = os.environ.get("API_KEY_OPEN_WEATHER")

These lines are used to store variables that are either (1) secrets, or (2) values that change between environments (e.g. dev, test, production).
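Note that os.environ.get() quietly returns None when a variable has not been declared, which tends to surface later as a confusing connection or HTTP error. Below is a minimal sketch of failing fast instead, assuming the six variable names used in this README. REQUIRED_VARS and load_config are hypothetical names for illustration, not part of etl_lambda.py.

```python
import os

# The six names below are the variables declared in this README.
# REQUIRED_VARS and load_config are hypothetical, not part of etl_lambda.py.
REQUIRED_VARS = (
    "CSV_ENDPOINT_CAPITAL_CITIES",
    "API_KEY_OPEN_WEATHER",
    "DB_USER",
    "DB_PASSWORD",
    "DB_SERVER_NAME",
    "DB_DATABASE_NAME",
)


def load_config(names=REQUIRED_VARS):
    """Read the named environment variables, raising an error if any are unset or empty."""
    values = {name: os.environ.get(name) for name in names}
    # Collect every missing name so they can all be fixed in one go.
    missing = [name for name, value in values.items() if not value]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return values
```

Calling load_config() once at the start of the ETL job would report all missing variables together, rather than failing on the first one that happens to be used.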

We will first need to declare the values for these variables. This can easily be done by running the following in the terminal:

macOS:

export CSV_ENDPOINT_CAPITAL_CITIES="path_to_csv"

export API_KEY_OPEN_WEATHER="secret_goes_here"

export DB_USER="secret_goes_here" # e.g. postgres

export DB_PASSWORD="secret_goes_here" # e.g. postgres

export DB_SERVER_NAME="secret_goes_here" # e.g. localhost

export DB_DATABASE_NAME="secret_goes_here"

Windows:

set CSV_ENDPOINT_CAPITAL_CITIES=path_to_csv

set API_KEY_OPEN_WEATHER=secret_goes_here

set DB_USER=secret_goes_here

set DB_PASSWORD=secret_goes_here

set DB_SERVER_NAME=secret_goes_here

set DB_DATABASE_NAME=secret_goes_here

To save time running each variable in the terminal, you may wish to create script files to store the declaration of each variable.

- macOS: store the declaration of the variables in a config.local.sh file, then run it using the command below. The leading . sources the file, so the variables stay set in your current terminal session.

. ./config.local.sh

- Windows: store the declaration of the variables in a config.local.bat file, then run it using:

config.local.bat
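If you prefer to keep one list of values and generate both script files from it, this is a minimal sketch. render_config is a hypothetical helper, not part of the repo, and it does not escape values containing quotes, $ or %.

```python
def render_config(variables, platform):
    """Return the text of a config script that declares each variable.

    platform="sh" produces export lines for config.local.sh;
    platform="bat" produces set lines for config.local.bat.
    Values containing quotes, $ or % are not escaped in this sketch.
    """
    if platform == "sh":
        # Double quotes keep values containing spaces intact in the shell.
        lines = [f'export {name}="{value}"' for name, value in variables.items()]
    elif platform == "bat":
        # cmd.exe would keep any quotes as part of the value, so none are added.
        lines = [f"set {name}={value}" for name, value in variables.items()]
    else:
        raise ValueError(f"unknown platform: {platform!r}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder values, as in the examples above.
    settings = {"DB_USER": "postgres", "DB_SERVER_NAME": "localhost"}
    print(render_config(settings, "sh"), end="")
    print(render_config(settings, "bat"), end="")
```

Writing the returned text to config.local.sh or config.local.bat gives the same files as typing the declarations by hand.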

To run the application locally, simply run python etl_lambda_local.py.

etl_lambda_local.py will import the main function in etl_lambda.py and run it.

If successful, you should see records appearing in your local PostgreSQL database.
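AWS Lambda calls a Python handler with two arguments, an event and a context object. Below is a minimal sketch of what a local runner in the spirit of etl_lambda_local.py might do. The lambda_function defined here is a hypothetical stand-in so that the example runs on its own. It is not the code in etl_lambda.py.

```python
import json


# Hypothetical stand-in for the handler in etl_lambda.py; the real
# function's name and behaviour may differ.
def lambda_function(event, context):
    return {"statusCode": 200, "body": json.dumps({"event_keys": sorted(event)})}


def run_locally(handler, event=None):
    """Call a Lambda-style handler outside AWS.

    Lambda passes an event dict and a context object; locally, an empty
    event and None for the context are enough if the handler ignores them.
    """
    return handler(event or {}, None)


if __name__ == "__main__":
    print(run_locally(lambda_function))
```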

Follow these steps to deploy the solution to AWS.

1. Deploy PostgreSQL on AWS RDS

2. Deploy S3 bucket

3. Declare environment variables

4. Test locally

5. Deploy ETL to AWS Lambda

6. Deploy Cron Trigger on AWS EventBridge

- In the AWS Console, search for "RDS".

- Choose the region closest to you on the top-right e.g. Sydney (ap-southeast-2)

- Select "Create database"

- Configure the database. Note: unless specified, leave the settings at their defaults.

  - Select "PostgreSQL"

  - Select Version "12.9-R1" (for the free tier, you will need to use a version below 13).

  - Select templates: "Free tier"

  - Provide a DB instance identifier. Note: this is the name that appears on AWS, not the actual name of the database or server.

  - Set master username: "postgres"

  - Set master password: <specify your password>

  - Set confirm password: <specify your password>

  - In connectivity, set public access: "Yes"

  - In additional configuration, deselect "Enable automated backups"

- Select "Create database"

- After the database has been deployed, select the database and go to "Security group rules" and select the Security Group(s) with Type: "CIDR/IP - Inbound".

- In the Security Group, select "Inbound rules"

- Select "Edit inbound rules"

- Select "Add rule" and add a new rule for:

  - Type: "All traffic"

  - Protocol: "All"

  - Port Range: "All"

  - Source: "Anywhere-IPv4"

- Select "Save rules"

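- Try connecting to your database from pgAdmin 4, using the endpoint shown in the database's "Connectivity & security" tab as the host name. The connection should now succeed.

- After connecting to the database, open a new Query Tool and run the contents of ddl_create_table.sql to create the target tables.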
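Once the tables exist, the same DB_* variables can be combined into a connection string for code running outside pgAdmin. The sketch below assumes SQLAlchemy's URL format, the psycopg2 driver and the default PostgreSQL port 5432. etl_lambda.py may build its connection differently, and postgres_url is a hypothetical helper.

```python
import os
from urllib.parse import quote_plus


def postgres_url(driver="psycopg2", port=5432):
    """Build a SQLAlchemy-style PostgreSQL URL from the DB_* environment variables.

    Assumptions: SQLAlchemy's "postgresql+<driver>://" URL format and the
    default PostgreSQL port; adjust both to match your setup.
    """
    # Percent-encode credentials so characters such as @ or / do not break the URL.
    user = quote_plus(os.environ["DB_USER"])
    password = quote_plus(os.environ["DB_PASSWORD"])
    host = os.environ["DB_SERVER_NAME"]  # e.g. the RDS endpoint host name
    database = os.environ["DB_DATABASE_NAME"]
    return f"postgresql+{driver}://{user}:{password}@{host}:{port}/{database}"
```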

- In the AWS Console, search for "S3".

- Select "Create bucket"

- Configure the bucket. Note: unless specified, leave the settings at their defaults.

  - Provide a bucket name

  - AWS Region: choose the region closest to you e.g. Sydney (ap-southeast-2)

  - Deselect "Block all public access"

- Select "Create bucket"

- After the bucket is deployed, open the bucket, go to the "Permissions" tab and edit the bucket policy. Set the bucket policy to the following, replacing your-bucket-name-here with the name of your bucket:

{ "Version": "2012-10-17", "Statement": [ { "Sid": "PublicRead", "Effect": "Allow", "Principal": "*", "Action": [ "s3:GetObject", "s3:GetObjectVersion" ], "Resource": "arn:aws:s3:::your-bucket-name-here/*" } ] }

  Then select "Save changes".

- Upload australian_capital_cities.csv to the bucket.

- Take note of the Object URL of the newly uploaded CSV file; you will use it as the value of CSV_ENDPOINT_CAPITAL_CITIES.
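To see how the bucket name feeds into both the bucket policy shown above and the object's URL, here is a minimal sketch. public_read_policy and object_url are hypothetical helpers, and my-weather-bucket is a placeholder name. The URL uses S3's virtual-hosted style; for a simple key like this one it should match the console's Object URL, but check against what the console shows.

```python
import json
from urllib.parse import quote


def public_read_policy(bucket_name):
    """Return the public-read bucket policy for the given bucket name."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicRead",
                "Effect": "Allow",
                "Principal": "*",
                "Action": ["s3:GetObject", "s3:GetObjectVersion"],
                # A bucket ARN is "arn:aws:s3:::" followed by the bucket name;
                # the trailing "/*" applies the statement to every object in it.
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


def object_url(bucket_name, region, key):
    """Virtual-hosted-style URL of an object (key is percent-encoded, / kept)."""
    return f"https://{bucket_name}.s3.{region}.amazonaws.com/{quote(key)}"


if __name__ == "__main__":
    print(json.dumps(public_read_policy("my-weather-bucket"), indent=2))
    print(object_url("my-weather-bucket", "ap-southeast-2", "australian_capital_cities.csv"))
```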

If you look in the etl_lambda.py file, you will see several lines like these:

# getting CSV_ENDPOINT_CAPITAL_CITIES environment variable

CSV_ENDPOINT_CAPITAL_CITIES = os.environ.get("CSV_ENDPOINT_CAPITAL_CITIES")

# getting API_KEY_OPEN_WEATHER environment variable

API_KEY_OPEN_WEATHER = os.environ.get("API_KEY_OPEN_WEATHER")

These lines are used to store variables that are either (1) secrets, or (2) values that change between environments (e.g. dev, test, production).

We will first need to declare the values for these variables. This can easily be done by running the following in the terminal:

macOS:

export CSV_ENDPOINT_CAPITAL_CITIES="path_to_csv" # from S3 object url

export API_KEY_OPEN_WEATHER="secret_goes_here"

export DB_USER="secret_goes_here" # e.g. postgres

export DB_PASSWORD="secret_goes_here" # e.g. postgres

export DB_SERVER_NAME="secret_goes_here" # e.g. postgres server host name on AWS

export DB_DATABASE_NAME="secret_goes_here"

Windows:

set CSV_ENDPOINT_CAPITAL_CITIES=path_to_csv

set API_KEY_OPEN_WEATHER=secret_goes_here

set DB_USER=secret_goes_here

set DB_PASSWORD=secret_goes_here

set DB_SERVER_NAME=secret_goes_here

set DB_DATABASE_NAME=secret_goes_here

To save time running each variable in the terminal, you may wish to create script files to store the declaration of each variable.

- macOS: store the declaration of the variables in a config.aws.sh file, then run it using:

. ./config.aws.sh

- Windows: store the declaration of the variables in a config.aws.bat file, then run it using:

config.aws.bat

At this point, it is a good idea to test that everything still works by running the ETL code locally against the services deployed on AWS.

# declare the environment variables (macOS)

. ./config.aws.sh

# or, declare the environment variables (windows)

config.aws.bat

# run the ETL app

python etl_lambda_local.py

If successful, proceed to the next step; otherwise, troubleshoot the issue.

Before we can deploy the app, we need to first build the app.

Building the app refers to packaging and compiling the app so that it is in a state that can be readily deployed onto the target platform (e.g. AWS, Heroku, Azure, GCP). Python does not need an ahead-of-time compilation step, so we can skip compilation, but we still need to package the app.

To package the app, we will run the following lines of code:

macOS:

pip install --target ./.package -r ./requirements.lambda.txt

cd .package

zip -r ../lambda_package.zip .

cd ..

zip -g lambda_package.zip etl_lambda.py transform_functions.py

Windows:

Note (Windows only): you will need to install 7-Zip, whose 7z command-line tool is used to create the zip file.

- Go to https://www.7-zip.org/ and download the version for your Windows PC (usually 64-bit x64)

- Run the installer .exe file

- Add C:\Program Files\7-Zip to your PATH environment variable

pip install --target ./.package -r ./requirements.lambda.txt

cd .package

7z a -tzip ../lambda_package.zip .

cd ..

7z a -tzip lambda_package.zip etl_lambda.py transform_functions.py

This will produce a .zip file which contains all the code and library packages required to run the app on AWS Lambda. Note that some libraries, such as pandas and NumPy, are not included in the package: they contain compiled extensions that must be built on Linux (the platform AWS Lambda runs on), whereas we are using macOS or Windows machines. Instead, we will provide them through AWS Lambda Layers later.

For re-use, we've stored the commands in build.sh and build.bat respectively.

You can then build the app by running either:

macOS:

. ./build.sh

Windows:

build.bat
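If neither zip nor 7-Zip is available, the zipping steps can be reproduced with Python's standard-library zipfile module. This is a sketch that assumes the folder layout used above and that pip install --target ./.package has already been run. build_package is a hypothetical helper, not part of the repo.

```python
import zipfile
from pathlib import Path


def build_package(app_dir=".", package_dir=".package",
                  output="lambda_package.zip",
                  sources=("etl_lambda.py", "transform_functions.py")):
    """Zip the installed dependencies plus the app's source files.

    Mirrors the build commands above: the *contents* of package_dir go at the
    root of the archive (not inside a .package/ folder), followed by the
    source files, so Lambda can import both directly.
    """
    app = Path(app_dir)
    package = app / package_dir
    out_path = app / output
    with zipfile.ZipFile(out_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(package.rglob("*")):
            if path.is_file():
                # Paths relative to package_dir put the libraries at the zip root;
                # as_posix() keeps forward slashes even on Windows.
                zf.write(path, arcname=path.relative_to(package).as_posix())
        for name in sources:
            zf.write(app / name, arcname=name)
    return out_path
```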

- In the AWS Console, search for "Lambda".

- Choose the region closest to you on the top-right e.g. Sydney (ap-southeast-2)

- Select "Create function"

- Configure the lambda function. Note: unless specified, leave the settings at their defaults.

  - Provide a function name

  - Runtime: Python 3.9

- Select "Create function"

- After the lambda function is deployed, go to the "Code" section, select "Upload from" > ".zip file" and provide the .zip file you have built. Select "Save" and allow up to 2 minutes for the file to be uploaded and processed.

- In the "Code" section go to "Runtime settings" and select "Edit".

- We need to tell AWS Lambda which file and function to execute.

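- In "Handler", specify: "etl_lambda.lambda_function". The handler is the file name without the .py extension, followed by a dot and the function name, so this tells Lambda to call the lambda_function function in etl_lambda.py.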

- Select "Save"

- In the "Code" section, scroll down to "Layers" and select "Add a layer".

- As previously mentioned, some libraries need to be built on Linux because AWS Lambda runs on Linux, whereas we are using macOS and Windows machines. Instead, we will use a "Layer", which is a set of pre-packaged libraries that our function can use. We will be using layers provided by https://github.com/keithrozario/Klayers. For a list of the packaged libraries available in Sydney (ap-southeast-2), see: https://api.klayers.cloud//api/v2/p3.9/layers/latest/ap-southeast-2/json. The layer we will be using is the pandas layer.

- In the Layer config, select "Specify an ARN" and provide "arn:aws:lambda:ap-southeast-2:770693421928:layer:Klayers-p39-pandas:1" and select "Verify".

- After the layer is verified, select "Add"

- In the "Configuration" section, go to "Environment variables" and select "Edit". You will now create environment variables that correspond to your config.aws.sh or config.aws.bat files.

  - Select "Add environment variable" and populate the "Key" and "Value" pairs for each environment variable in your config.aws.sh or config.aws.bat files.

- In the "Configuration" section, go to "General configuration" and change the timeout from the default of 3 seconds to 30 seconds.

- In the "Test" section, click on the "Test" button to trigger your lambda function. If successful, you should see more records appear in your database.
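To make the "module.function" handler format concrete, here is a simplified illustration of how a handler string like "etl_lambda.lambda_function" can be split and resolved. This is a sketch of the idea, not the Lambda runtime's actual code, and resolve_handler is a hypothetical helper. It is demonstrated with a standard-library function so that it runs anywhere.

```python
import importlib


def resolve_handler(handler):
    """Resolve a handler string of the form "module.function" to a callable.

    Everything before the last dot is the module to import (etl_lambda.py ->
    "etl_lambda"); the part after it is the function Lambda calls with
    (event, context).
    """
    module_name, _, function_name = handler.rpartition(".")
    if not module_name:
        raise ValueError(f"handler must look like 'module.function', got {handler!r}")
    module = importlib.import_module(module_name)
    return getattr(module, function_name)


if __name__ == "__main__":
    dumps = resolve_handler("json.dumps")
    print(dumps({"ok": True}))
```

If the function name in "Handler" does not match a function in etl_lambda.py, the lookup fails, which is what Lambda reports as a handler error when you run the test.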

Finally, we can look at automating the scheduling of the ETL job.

- In the AWS Console, search for "EventBridge".

- Choose the region closest to you on the top-right e.g. Sydney (ap-southeast-2)

- Select "Create rule"

- Configure EventBridge. Note: unless specified, leave the settings at their defaults.

  - Provide a rule name

  - Define pattern: "Schedule"

  - Fixed rate every: "2" "minutes" (a short interval such as 2 minutes is useful for trying it out)

  - Select targets: "Lambda function"

  - Function: select your function name

- Select "Create"

- Wait until your trigger runs and check your database again. You should see more records appear.
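The "Fixed rate every" setting corresponds to an EventBridge rate expression, which uses the singular unit for a value of 1 ("rate(1 minute)") and the plural otherwise ("rate(2 minutes)"). The sketch below builds such an expression. It also shows how a handler could recognise a scheduled invocation from the "source" and "detail-type" fields that EventBridge sets on scheduled events. rate_expression and is_scheduled_event are hypothetical helpers, not part of this repo.

```python
def rate_expression(value, unit):
    """Return an EventBridge rate expression such as "rate(2 minutes)"."""
    unit = unit.lower().rstrip("s")  # accept "minute" or "minutes", etc.
    if unit not in {"minute", "hour", "day"}:
        raise ValueError("unit must be minute(s), hour(s) or day(s)")
    if not isinstance(value, int) or value < 1:
        raise ValueError("value must be a positive integer")
    # Singular unit for 1, plural for anything larger.
    return f"rate({value} {unit if value == 1 else unit + 's'})"


def is_scheduled_event(event):
    """True if a Lambda event looks like it came from an EventBridge schedule."""
    return event.get("source") == "aws.events" and event.get("detail-type") == "Scheduled Event"
```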