Lirui Wang, Yiyang Ling, Zhecheng Yuan, Mohit Shridhar, Chen Bao, Yuzhe Qin, Bailin Wang, Huazhe Xu, Xiaolong Wang

Project Page | Arxiv | Gradio Demo | Huggingface Dataset

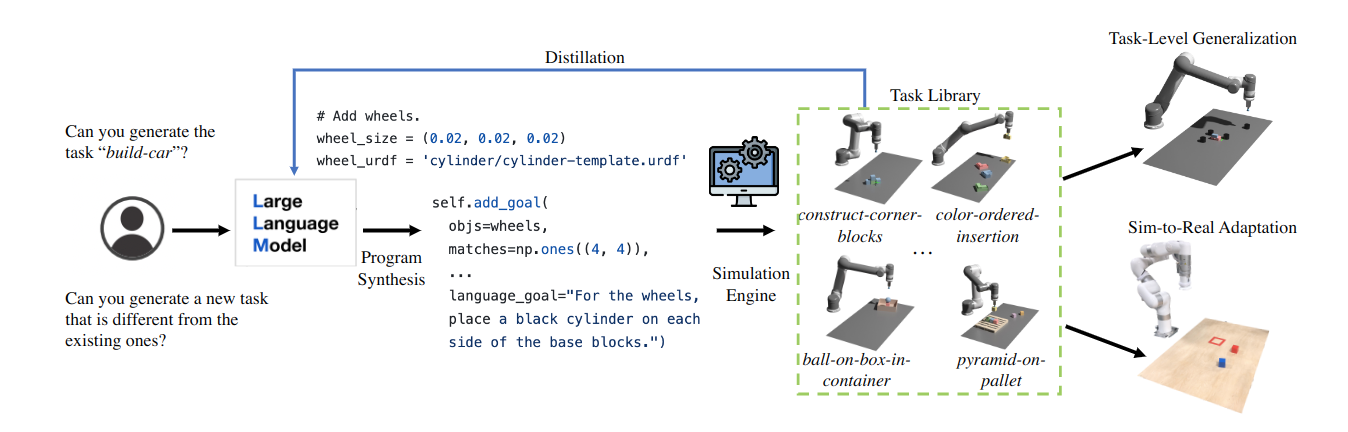

This repo explores the use of an LLM code generation pipeline to write simulation environments and expert goals to augment diverse simulation tasks.

pip install -r requirements.txt

python setup.py develop

export GENSIM_ROOT=$(pwd)

export OPENAI_KEY=YOUR KEY

We use OpenAI's GPT-4 as the language model. You need an OpenAI API key to run task generation with GenSim. You can get one from here.
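Since task generation depends on both exported variables, it can help to check them before starting a run. The following is a minimal sketch, not part of the repo: the helper name `missing_env_vars` is hypothetical, and the only facts taken from the setup commands are the two variable names.

```python
import os

# Names of the two environment variables exported in the setup commands.
REQUIRED_VARS = ("GENSIM_ROOT", "OPENAI_KEY")


def missing_env_vars(env=None):
    """Return the required variables that are unset or empty in `env`.

    Hypothetical helper for illustration; it is not provided by GenSim.
    """
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_env_vars()
    if missing:
        print("Missing environment variables: " + ", ".join(missing))
    else:
        print("Environment looks ready for task generation.")
```

Passing a plain dictionary instead of `os.environ` makes the check easy to exercise without touching the real shell environment.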

After the installation process, you can run:

# basic bottom-up prompt

python gensim/run_simulation.py disp=True prompt_folder=vanilla_task_generation_prompt_simple

# bottom-up template generation

python gensim/run_simulation.py disp=True prompt_folder=bottomup_task_generation_prompt save_memory=True load_memory=True task_description_candidate_num=10 use_template=True

# top-down task generation

python gensim/run_simulation.py disp=True prompt_folder=topdown_task_generation_prompt save_memory=True load_memory=True task_description_candidate_num=10 use_template=True target_task_name="build-house"

# task-conditioned chain-of-thought generation

python gensim/run_simulation.py disp=True prompt_folder=topdown_chain_of_thought_prompt save_memory=True load_memory=True task_description_candidate_num=10 use_template=True target_task_name="build-car"
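The four commands above differ only in their `key=value` arguments, so they can also be assembled programmatically. This is a rough sketch under that assumption: `build_command` is a hypothetical helper, and it only reuses the script path and argument names that appear in the commands above.

```python
import shlex
import sys


def build_command(script, **overrides):
    """Build an argv list of the form: <python> <script> key=value ...

    Hypothetical helper for illustration; it is not provided by GenSim.
    """
    args = [sys.executable, script]
    for key, value in overrides.items():
        args.append(f"{key}={value}")
    return args


# Same arguments as the top-down task generation command above.
top_down = build_command(
    "gensim/run_simulation.py",
    disp=True,
    prompt_folder="topdown_task_generation_prompt",
    save_memory=True,
    load_memory=True,
    task_description_candidate_num=10,
    use_template=True,
    target_task_name="build-house",
)

# Print the command (without the interpreter path) for inspection.
print(shlex.join(top_down[1:]))
```

To actually launch a run, the list could be passed to `subprocess.run(top_down, check=True)` from the repository root; the sketch only prints the command so it can be reviewed first.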

- To remove a task (delete its code and remove it from the task and task code buffer), use

python misc/purge_task.py -f color-sequenced-block-insertion

- To add a task (extract task description to add to buffer), use

python misc/add_task_from_code.py -f ball_on_box_on_container

- All generated tasks in `cliport/generated_tasks` should have been imported automatically.
- Set the task name and then use `demos.py` for visualization. For instance, `python cliport/demos.py n=200 task=build-car mode=test disp=True`.
- The following is a guide for training everything from scratch (more details in cliport). All tasks follow a 4-phase workflow:
  - Generate `train`, `val`, and `test` datasets with `demos.py`
  - Train agents with `train.py`
  - Run validation with `eval.py` to find the best checkpoint on `val` tasks and save `*val-results.json`
  - Evaluate the best checkpoint in `*val-results.json` on `test` tasks with `eval.py`
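The first phase of the workflow above can be sketched as a small command generator. Only `mode=test` appears in this README, so `mode=train` and `mode=val` are assumptions here, as is the use of the same `n` for every split; `demo_commands` is a hypothetical helper. The arguments for `train.py` and `eval.py` are not given in this document, so they are left out.

```python
def demo_commands(task, n=200, modes=("train", "val", "test")):
    """Return one demos.py command string per dataset split.

    Hypothetical helper: the `train` and `val` mode names are assumed
    by analogy with the documented `mode=test`.
    """
    return [
        f"python cliport/demos.py n={n} task={task} mode={mode}"
        for mode in modes
    ]


for cmd in demo_commands("build-car"):
    print(cmd)
```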


- Prepare data using `python gensim/prepare_finetune_gpt.py`. Released dataset is here.
- Finetune using the OpenAI API: `openai api fine_tunes.create --training_file output/finetune_data_prepared.jsonl --model davinci --suffix 'GenSim'`
- Evaluate it using `python gensim/evaluate_finetune_model.py +target_task=build-car +target_model=davinci:ft-mit-cal:gensim-2023-08-06-16-00-56`
- Compare with `python gensim/run_simulation.py disp=True prompt_folder=topdown_task_generation_prompt_simple load_memory=True task_description_candidate_num=10 use_template=True target_task_name="build-house" gpt_model=gpt-3.5-turbo-16k trials=3`
- Compare with `python gensim/run_simulation.py disp=True prompt_folder=topdown_task_generation_prompt_simple_singleprompt load_memory=True task_description_candidate_num=10 target_task_name="build-house" gpt_model=gpt-3.5-turbo-16k`
- turbo finetuned models: `python gensim/evaluate_finetune_model.py +target_task=build-car +target_model=ft:gpt-3.5-turbo-0613: trials=3 disp=True`
- Finetune Code-LLAMA using hugging-face transformer library here
- offline eval: `python -m gensim.evaluate_finetune_model_offline model_output_dir=after_finetune_CodeLlama-13b-Instruct-hf_fewshot_False_epoch_10_0`
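Before uploading a training file to the fine-tuning endpoint used above, a quick structural check can catch malformed records. The sketch below assumes the prepared file follows the prompt/completion JSONL layout expected by the legacy `fine_tunes` endpoint (one JSON object per line with string `prompt` and `completion` fields). The actual output of `prepare_finetune_gpt.py` is not shown in this README, so treat that layout as an assumption; `check_finetune_records` is a hypothetical helper and the sample records are made up.

```python
import json


def check_finetune_records(lines):
    """Return (line_number, problem) pairs for records that look malformed.

    Hypothetical helper; assumes prompt/completion JSONL records.
    """
    problems = []
    for number, line in enumerate(lines, start=1):
        line = line.strip()
        if not line:
            continue  # ignore blank lines
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            problems.append((number, "not valid JSON"))
            continue
        if not isinstance(record, dict):
            problems.append((number, "not a JSON object"))
            continue
        for key in ("prompt", "completion"):
            if not isinstance(record.get(key), str):
                problems.append((number, f"missing or non-string '{key}'"))
    return problems


# Made-up records for illustration only.
sample = [
    '{"prompt": "Write a task named build-car.", "completion": "class BuildCar: ..."}',
    '{"prompt": "No completion here"}',
]
print(check_finetune_records(sample))
```

To check a real file, the open file handle can be passed directly, since iterating over it yields lines.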

- Temperature `0.5-0.8` is a good range for diversity; `0.0-0.2` is for stable results.
- The generation pipeline prints statistics on compilation, runtime, task design, and diversity scores. Note that these metrics depend on the complexity of the tasks that the LLM tries to generate.

- Core prompting and code generation scripts are in `gensim`, and training and task scripts are in `cliport`.
- The `prompts/` folder stores different kinds of prompts to get the desired environments. Each folder contains a sequence of prompts as well as a meta_data file. `prompts/data` stores the base task library and the generated task library.
- The GPT-generated tasks are stored in `generated_tasks/`. Use `demos.py` to play with them. `cliport/demos_gpt4.py` is an all-in-one prompt script that can be converted into an IPython notebook.
- Raw text outputs are saved in `output/output_stats`, figure results are saved in `output/output_figures`, and policy evaluation results are saved in `output/cliport_output`.
- To debug generated code, manually copy-paste `generated_task.py`, then run `python cliport/demos.py n=50 task=gen-task disp=True`.
- This version of cliport should support `batchsize>1` and can run with more recent versions of PyTorch and PyTorch Lightning.
- Please use the GitHub issue tracker to report bugs. For other questions, please contact Lirui Wang.

- blender rendering

python cliport/demos.py n=310 task=align-box-corner mode=test disp=True +record.blender_render=True record.save_video=True
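The temperature ranges mentioned in the notes above can be illustrated with a small, generic example of temperature-scaled sampling. This is a textbook sketch, not GenSim code: the logits are made up, and the function requires a positive temperature, whereas a hosted API may treat `0.0` as greedy decoding.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities after dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (0.2, 0.8):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At the lower temperature almost all probability mass sits on the top-scoring candidate, which gives more repeatable outputs; at the higher temperature the mass spreads out, which gives more varied task proposals.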

If you find GenSim useful in your research, please consider citing:

@inproceedings{wang2023gen,

author = {Lirui Wang and Yiyang Ling and Zhecheng Yuan and Mohit Shridhar and Chen Bao and Yuzhe Qin and Bailin Wang and Huazhe Xu and Xiaolong Wang},

title = {GenSim: Generating Robotic Simulation Tasks via Large Language Models},

booktitle = {Arxiv},

year = {2023}

}