This repository provides the official PyTorch implementation of the following paper:

Learning to Drop Points for LiDAR Scan Synthesis

Kazuto Nakashima and Ryo Kurazume

In IROS 2021

[Project] [Paper]

Overview: We propose a noise-aware GAN for generative modeling of 3D LiDAR data on a projected 2D representation (a.k.a. spherical projection). Although the 2D representation has been adopted in many LiDAR processing tasks, generative modeling is non-trivial due to the discrete dropout noise caused by LiDAR's lossy measurement. Our GAN can effectively learn the LiDAR data by representing such a discrete data distribution as a composite of two modalities: an underlying complete depth and the corresponding reflective uncertainty.

Generation: The learned depth map (left), the measurability map for simulating the point-drops (center), and the final depth map (right).

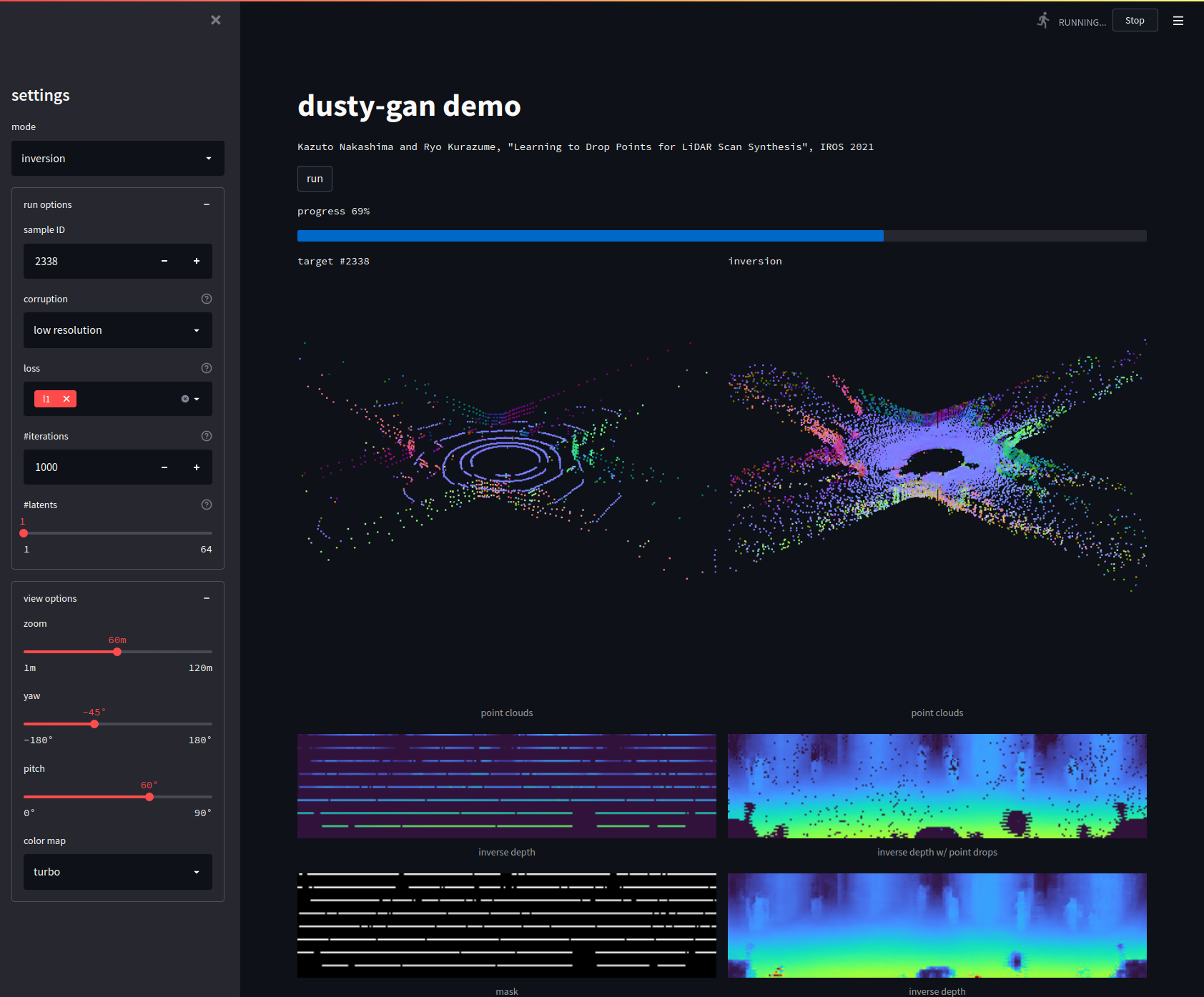
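The composition shown in the figure can be sketched in a few lines: each pixel of the complete depth map is kept with the probability given by the measurability map and set to zero otherwise. This is a minimal plain-Python illustration, not the repository's implementation; the function name `drop_points`, the independent Bernoulli sampling, and the use of zero as the "dropped" value are assumptions made for the example.

```python
import random


def drop_points(depth, measurability, rng=None):
    """Keep each depth value with its measurability probability, else set it to 0."""
    rng = rng or random.Random()
    final = []
    for depth_row, prob_row in zip(depth, measurability):
        row = []
        for d, p in zip(depth_row, prob_row):
            measured = rng.random() < p  # Bernoulli draw (assumed sampling scheme)
            row.append(d if measured else 0.0)
        final.append(row)
    return final


# Hypothetical 2x3 maps: left column always measured, right column never measured.
depth = [[5.0, 12.0, 30.0], [4.0, 8.0, 25.0]]
measurability = [[1.0, 0.9, 0.0], [1.0, 0.5, 0.0]]
final = drop_points(depth, measurability, rng=random.Random(0))
```

Pixels with measurability 1.0 always survive and pixels with measurability 0.0 are always dropped; the values in between produce the stochastic point-drops.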

Reconstruction: The trained generator can be used as a scene prior to restore LiDAR data.
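One common way to use a generator as a prior is to search for a latent code whose output matches the measured pixels, then read the missing pixels from the generated output. The sketch below illustrates that idea with a toy linear "generator" and plain gradient descent; it is not the procedure implemented in this repository, and `generate`, `reconstruct`, the weights, and the step size are all hypothetical.

```python
def generate(weights, z):
    """Toy linear generator: one output pixel per row of `weights`."""
    return [sum(w * zj for w, zj in zip(row, z)) for row in weights]


def reconstruct(weights, observed, mask, steps=200, lr=0.1):
    """Fit the latent code on measured pixels only, then return the full output."""
    z = [0.0] * len(weights[0])
    for _ in range(steps):
        pred = generate(weights, z)
        # Residual is zeroed on dropped pixels so they do not influence the fit.
        residual = [m * (p - y) for m, p, y in zip(mask, pred, observed)]
        # Gradient of the masked squared error with respect to each latent dimension.
        grad = [
            2.0 * sum(r * row[j] for r, row in zip(residual, weights))
            for j in range(len(z))
        ]
        z = [zj - lr * g for zj, g in zip(z, grad)]
    return generate(weights, z)


# Hypothetical scan of four pixels generated from latent code (2.0, 0.5);
# the third pixel (true value 2.5) was dropped and reads as 0.
weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [1.0, -1.0]]
observed = [2.0, 0.5, 0.0, 1.5]
mask = [1, 1, 0, 1]
restored = reconstruct(weights, observed, mask)
```

Because the loss ignores the dropped pixel, the restored value at that position comes entirely from what the generator can produce, which is the sense in which it acts as a prior.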

To set up with Anaconda:

$ conda env create -f environment.yaml

$ conda activate dusty-gan

Please follow the instructions for KITTI Odometry. This step produces the azimuth/elevation coordinates (angles.pt) aligned with the image grid, which are required for all the following steps.
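To illustrate why per-pixel angles are needed, the sketch below builds an azimuth/elevation grid and uses it to turn one depth pixel back into a 3D point. It assumes uniformly spaced angles, which only approximates a real sensor and is not how angles.pt is produced; the 64x256 grid size, the field-of-view values, and both function names are illustrative assumptions.

```python
import math


def make_angle_grid(height, width, elev_up_deg, elev_down_deg):
    """Return per-row elevation and per-column azimuth (radians), uniformly spaced."""
    elevation = [
        math.radians(elev_up_deg + (elev_down_deg - elev_up_deg) * (r + 0.5) / height)
        for r in range(height)
    ]
    azimuth = [math.pi - 2.0 * math.pi * (c + 0.5) / width for c in range(width)]
    return elevation, azimuth


def pixel_to_point(depth, elevation, azimuth):
    """Convert one spherical-projection pixel to Cartesian (x, y, z)."""
    x = depth * math.cos(elevation) * math.cos(azimuth)
    y = depth * math.cos(elevation) * math.sin(azimuth)
    z = depth * math.sin(elevation)
    return x, y, z


# Assumed values for illustration only.
elevation, azimuth = make_angle_grid(64, 256, elev_up_deg=2.0, elev_down_deg=-24.8)
point = pixel_to_point(10.0, elevation[0], azimuth[128])
```

With such a grid, every pixel of a depth image maps to exactly one ray, so a generated 2D depth map can be converted back to a point cloud.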

| Dataset | LiDAR | Method | Weight | Configuration |
|---|---|---|---|---|
| KITTI Odometry | Velodyne HDL-64E | Baseline | Download | Download |
| KITTI Odometry | Velodyne HDL-64E | DUSty-I | Download | Download |
| KITTI Odometry | Velodyne HDL-64E | DUSty-II | Download | Download |

To train a model, run train.py with the dataset=, solver=, and model= options.

The default configurations are defined under configs/ and can be overridden from the command line (reference).

$ python train.py dataset=kitti_odometry solver=nsgan_eqlr model=dcgan_eqlr # baseline

$ python train.py dataset=kitti_odometry solver=nsgan_eqlr model=dusty1_dcgan_eqlr # DUSty-I (ours)

$ python train.py dataset=kitti_odometry solver=nsgan_eqlr model=dusty2_dcgan_eqlr # DUSty-II (ours)

Each run creates a unique directory containing the saved weights and log files.

    outputs/logs
    └── dataset=<DATASET>
        └── model=<MODEL>
            └── solver=<SOLVER>
                └── <DATE>
                    └── <TIME>
                        ├── .hydra
                        │   └── config.yaml # all configuration parameters
                        ├── models
                        │   ├── ...
                        │   └── checkpoint_0025000000.pth # trained weights
                        └── runs
                            └── <TENSORBOARD FILES> # logged losses, scores, and images

To monitor losses, scores, and images, run the following command to launch TensorBoard.

$ tensorboard --logdir outputs/logs

To evaluate synthesis performance, run:

$ python evaluate_synthesis.py --model-path $MODEL_PATH --config-path $CONFIG_PATH

MODEL_PATH and CONFIG_PATH are the .pth file and the corresponding .hydra/config.yaml file, respectively.

The relative tolerance used to detect point-drops can be changed with the --tol option (default: 0).
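When many runs accumulate, it can be convenient to resolve MODEL_PATH and CONFIG_PATH from a run directory rather than typing them by hand. The helper below is a hypothetical convenience and not part of the repository; it assumes the run directory contains `models/checkpoint_*.pth` and `.hydra/config.yaml`, and that checkpoint names are zero-padded so that sorting by name orders them by iteration.

```python
import tempfile
from pathlib import Path


def find_run_files(run_dir):
    """Return (latest checkpoint, config file) for a single run directory."""
    run_dir = Path(run_dir)
    # Zero-padded names sort lexicographically in iteration order.
    checkpoints = sorted((run_dir / "models").glob("checkpoint_*.pth"))
    if not checkpoints:
        raise FileNotFoundError(f"no checkpoints under {run_dir / 'models'}")
    config_path = run_dir / ".hydra" / "config.yaml"
    if not config_path.is_file():
        raise FileNotFoundError(f"missing {config_path}")
    return checkpoints[-1], config_path


# Demonstration on a throwaway directory that mimics the assumed layout.
with tempfile.TemporaryDirectory() as tmp:
    run_dir = Path(tmp) / "run"
    (run_dir / "models").mkdir(parents=True)
    (run_dir / ".hydra").mkdir()
    for name in ("checkpoint_0000100000.pth", "checkpoint_0025000000.pth"):
        (run_dir / "models" / name).touch()
    (run_dir / ".hydra" / "config.yaml").touch()
    model_path, config_path = find_run_files(run_dir)
    found = (model_path.name, config_path.name)
```

The two returned paths can then be passed to the evaluation scripts as `--model-path` and `--config-path`.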

To evaluate reconstruction performance, run:

$ python evaluate_reconstruction.py --model-path $MODEL_PATH --config-path $CONFIG_PATH

To optimize the relative tolerance for the baseline in our paper, run:

$ python tune_tolerance.py --model-path $MODEL_PATH --config-path $CONFIG_PATH

We used 0.008 for KITTI.
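As a rough illustration of what a relative tolerance does, the sketch below marks a pixel as dropped when its depth, normalized by a maximum depth, is at or below the tolerance. The exact rule used by the scripts is not stated here, so the comparison, the `max_depth` value, and the function name `detect_point_drops` are assumptions.

```python
def detect_point_drops(depth, max_depth, tol=0.0):
    """Return a mask that is True where a pixel is treated as dropped."""
    return [[(d / max_depth) <= tol for d in row] for row in depth]


# Hypothetical depths in meters; max_depth=100.0 is an illustrative choice.
depth = [[0.0, 0.4, 12.0], [0.9, 35.0, 0.0]]
exact = detect_point_drops(depth, max_depth=100.0)
relaxed = detect_point_drops(depth, max_depth=100.0, tol=0.008)
```

With a tolerance of 0, only exact zeros count as dropped; a small positive tolerance also catches near-zero outputs, which matters for a generator that produces continuous values.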

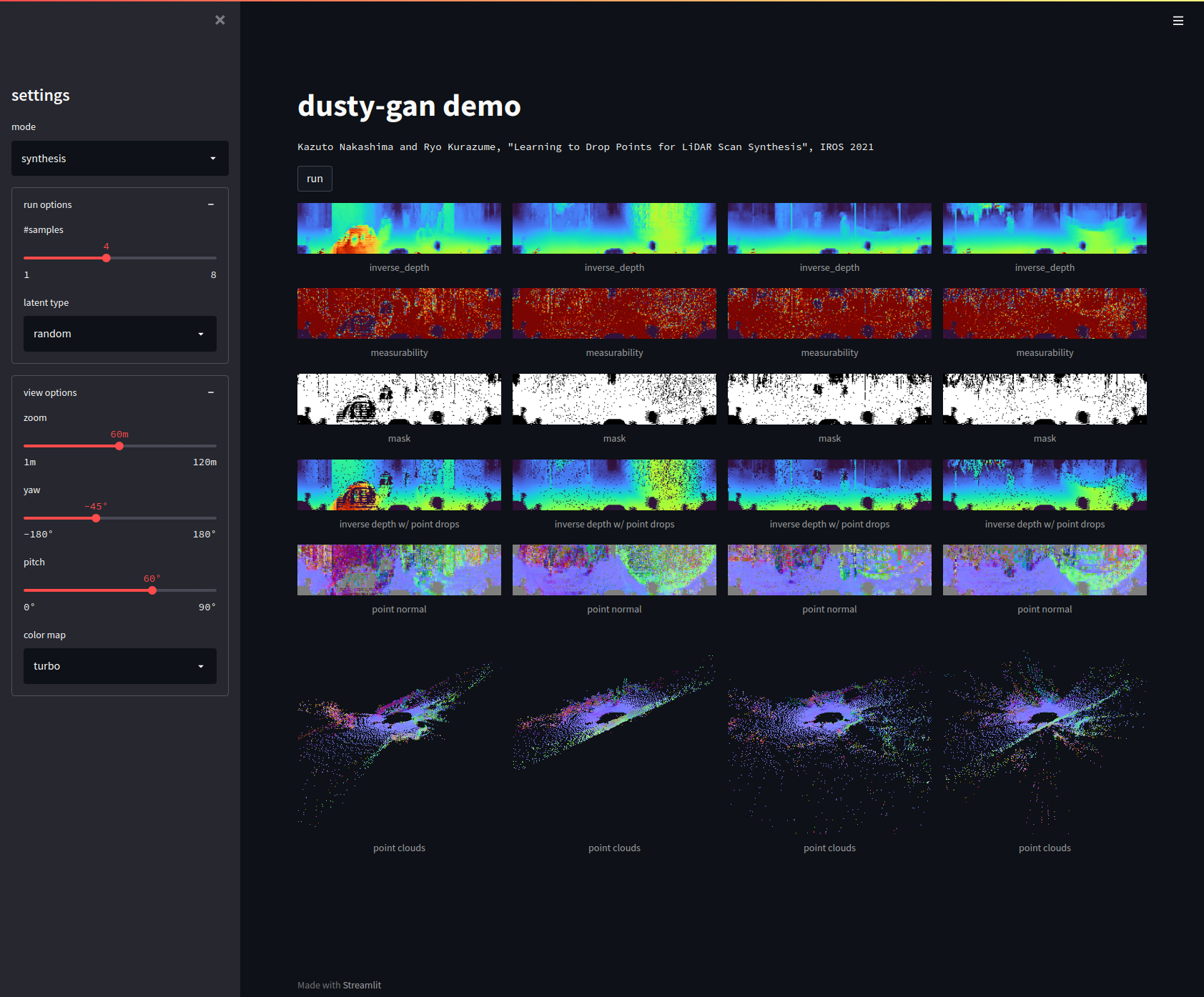

$ streamlit run demo.py $MODEL_PATH $CONFIG_PATH

The demo covers both synthesis and reconstruction.

If you find this code helpful, please cite our paper:

    @inproceedings{nakashima2021learning,
        title     = {Learning to Drop Points for LiDAR Scan Synthesis},
        author    = {Nakashima, Kazuto and Kurazume, Ryo},
        booktitle = {IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},
        pages     = {222--229},
        year      = 2021
    }