You can see sample videos in the example folder:

https://github.com/daswer123/FollowYourEmoji-Webui/tree/main/example

This fork adds a web UI for FollowYourEmoji. I modified the original code slightly so that as many settings as possible are exposed in the interface.

Here you can experience the full functionality of FollowYourEmoji.

Here is a short list of what has been added in the web UI:

- Ability to conveniently upload a reference picture and video.

- A tool to crop the reference picture so that it fits the video perfectly.

- Ability to preview the cropped picture, zoom in, or shift the crop.

- Ability to upload any video without additional preprocessing (the interface handles all processing itself).

- Ability to upload a .npy file or choose one from a folder. Each processed video is added to that folder, so you can select the same video again without re-processing.

- Ability to preview what the animation will look like before generation.

- Many different settings, both custom and official.

- Ability to specify the FPS of the output video.

- A mechanism to remove "anomalous frames" automatically.

- Option to get all frames in an archive in addition to the video.

There are also many smaller improvements that let you work conveniently in one interface.
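To illustrate the frame archive option in the list above, packing generated frames into a zip file could look like the sketch below. This is not the fork's actual code: the function name `zip_frames`, the `.png` extension, and the flat folder layout are all assumptions made for the example.

```python
import zipfile
from pathlib import Path


def zip_frames(frames_dir: str, archive_path: str, pattern: str = "*.png") -> int:
    """Pack every frame matching `pattern` into a zip archive.

    Hypothetical helper: the real web UI may name and store frames differently.
    Returns the number of files written.
    """
    frames = sorted(Path(frames_dir).glob(pattern))  # sorting keeps frame order stable
    with zipfile.ZipFile(archive_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for frame in frames:
            # Store only the file name so the archive has a flat layout.
            zf.write(frame, arcname=frame.name)
    return len(frames)
```

Zero-padded frame names (for example `frame_000.png`) keep the sorted order identical to the playback order.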

The Colab notebook has been tested on the free tier, and everything works. Processing takes about 5 minutes for 300 frames.

Note: the free Colab tier runs at the limit of its capabilities. I do not advise changing the generation parameters, because the session is likely to crash from lack of RAM.
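For a rough feel of what that timing implies, the sketch below extrapolates from "about 5 minutes for 300 frames", which is roughly one second per frame. Linear scaling with frame count is an assumption, not a measured property, and the function names are illustrative only.

```python
# Derived from "about 5 minutes for 300 frames": 300 s / 300 frames = 1.0 s per frame.
SECONDS_PER_FRAME = (5 * 60) / 300


def estimate_processing_minutes(num_frames: int) -> float:
    """Estimate Colab processing time, assuming it scales linearly with frame count."""
    return num_frames * SECONDS_PER_FRAME / 60


def clip_duration_seconds(num_frames: int, fps: float) -> float:
    """Length of the output clip for a given output FPS setting."""
    return num_frames / fps


if __name__ == "__main__":
    print(estimate_processing_minutes(300))  # 5.0
    print(clip_duration_seconds(300, 30))    # 10.0
```

Under these assumptions, a 10-second clip at 30 FPS corresponds to the 300-frame case quoted above.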

You can try FollowYourEmoji online by clicking one of the buttons above!

Before you start, make sure you have CUDA 12.1, ffmpeg, and Python 3.10 installed.
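A quick way to spot a missing prerequisite is a small check script. The sketch below is not part of the repository. It only tests whether the interpreter is Python 3.10 and whether `ffmpeg` and `nvcc` are on the PATH; it does not verify that the CUDA version is 12.1, and a missing `nvcc` does not prove CUDA is absent.

```python
import shutil
import sys


def check_prerequisites() -> dict:
    """Report which of the listed prerequisites look available on this machine."""
    return {
        # The README asks for Python 3.10.
        "python_3_10": sys.version_info[:2] == (3, 10),
        # ffmpeg must be callable from the command line.
        "ffmpeg": shutil.which("ffmpeg") is not None,
        # nvcc ships with the CUDA toolkit; a driver-only install has no nvcc,
        # so treat this entry as a hint rather than proof.
        "nvcc": shutil.which("nvcc") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name}: {'found' if ok else 'not found'}")
```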

There are two ways to install FollowYourEmoji-Webui: Simple and Manual. Choose the method that suits you best.

Simple installation:

- Clone the repository:

      git clone https://github.com/daswer123/FollowYourEmoji-Webui.git
      cd FollowYourEmoji-Webui

- Run the installation script:

  - For Windows: install.bat
  - For Linux: ./install.sh

- Start the application:

  - For Windows: start.bat
  - For Linux: ./start.sh

Manual installation (Linux):

    # Create virtual environment
    python3 -m venv venv
    source venv/bin/activate

    # Install dependencies
    pip install -r requirements.txt

    # Install extra dependencies
    pip install torch==2.1.1 torchaudio==2.1.1
    pip install deepspeed==0.13.1

    # Download pretrained model files
    git lfs install
    git clone https://huggingface.co/YueMafighting/FollowYourEmoji ./ckpt_models
    git clone https://huggingface.co/daswer123/FollowYourEmoji_BaseModelPack ./ckpt_models/base

Manual installation (Windows):

    # Create virtual environment
    python -m venv venv
    venv\scripts\activate

    # Install dependencies
    pip install -r requirements.txt

    # Install extra dependencies
    pip install torch==2.1.1+cu121 torchaudio==2.1.1+cu121 --index-url https://download.pytorch.org/whl/cu121
    pip install https://github.com/daswer123/deepspeed-windows/releases/download/13.1/deepspeed-0.13.1+cu121-cp310-cp310-win_amd64.whl

    # Download pretrained model files
    git lfs install
    git clone https://huggingface.co/YueMafighting/FollowYourEmoji ./ckpt_models
    git clone https://huggingface.co/daswer123/FollowYourEmoji_BaseModelPack ./ckpt_models/base

After installation, you have several options to launch FollowYourEmoji-Webui:

Launch script:

- For Windows: start.bat
- For Linux: ./start.sh

Manual launch:

- Activate the virtual environment:

  - Windows: venv\Scripts\activate
  - Linux: source venv/bin/activate

- Run the application:

  - Windows: python app.py
  - Linux: python3 app.py

To create a tunnel and share your instance with others, add the --share flag:

- Windows: python app.py --share
- Linux: python3 app.py --share

This will generate a public URL that you can share with others, allowing them to access your FollowYourEmoji-Webui instance remotely.
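A switch such as --share is commonly read with `argparse`. The sketch below only illustrates that pattern. It is not taken from app.py, and how the flag is forwarded to the web UI is left out because this README does not show it.

```python
import argparse


def parse_args(argv=None) -> argparse.Namespace:
    """Parse a --share switch the way a launcher script might (illustrative only)."""
    parser = argparse.ArgumentParser(description="Hypothetical launcher options")
    parser.add_argument(
        "--share",
        action="store_true",  # False unless the flag is present
        help="create a public URL for the running instance",
    )
    return parser.parse_args(argv)


if __name__ == "__main__":
    args = parse_args()
    print("public sharing requested" if args.share else "local only")
```

Because the flag uses `store_true`, running without --share keeps the instance local by default.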

Yue Ma*, Hongyu Liu*, Hongfa Wang*, Heng Pan*, Yingqing He, Junkun Yuan, Ailing Zeng, Chengfei Cai, Heung-Yeung Shum, Wei Liu and Qifeng Chen

- [2024.07.18] 🔥 Release inference code, config and checkpoints!

- [2024.06.07] 🔥 Release Paper and Project page!

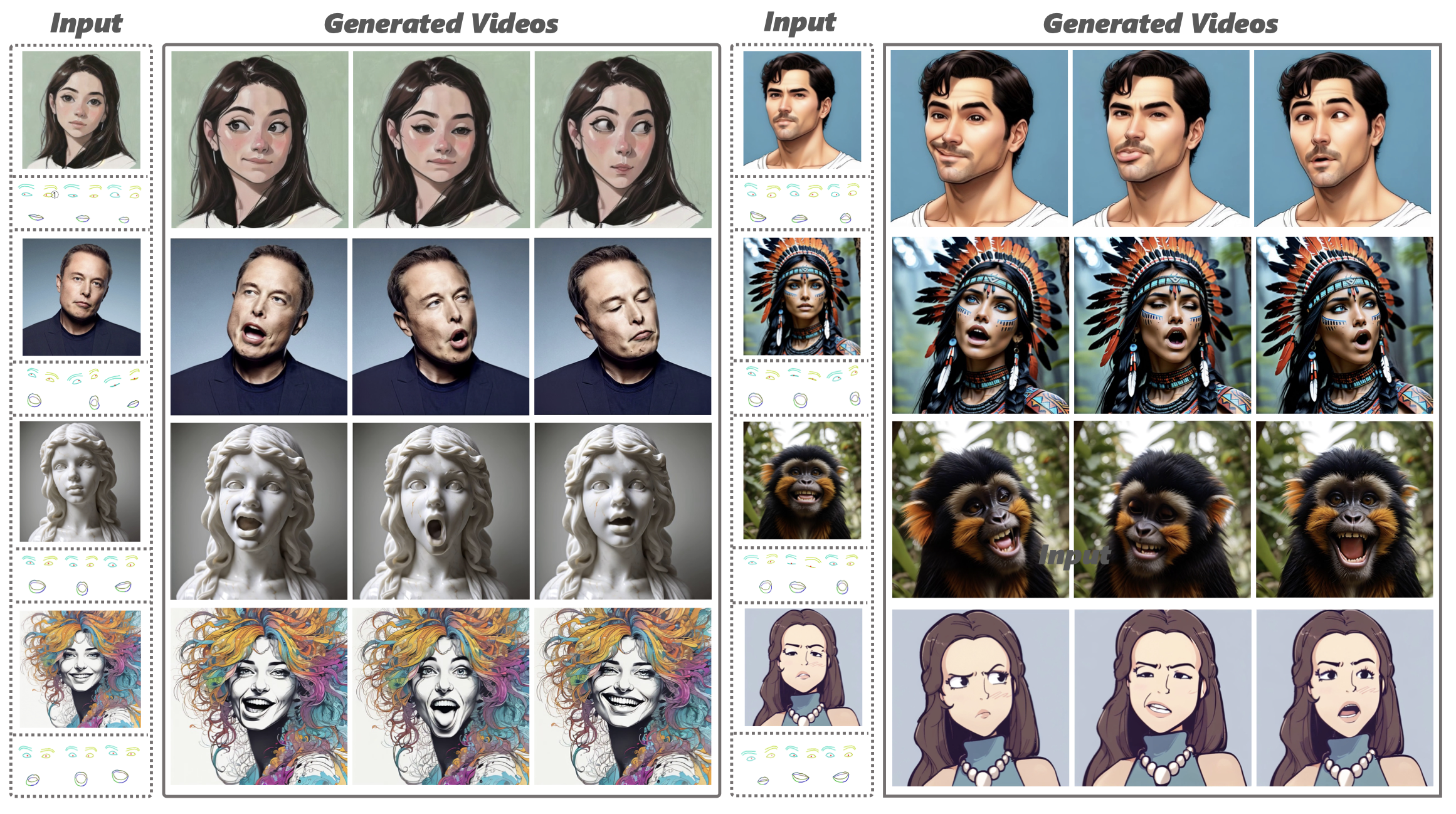

We present Follow-Your-Emoji, a diffusion-based framework for portrait animation, which animates a reference portrait with target landmark sequences.

    pip install -r requirements.txt

[FollowYourEmoji] We also provide our pretrained checkpoints on Hugging Face. You can download them and put them into the checkpoints folder to run inference with our model.

    bash infer.sh

    CUDA_VISIBLE_DEVICES=0 python3 -m torch.distributed.run \
        --nnodes 1 \
        --master_addr $LOCAL_IP \
        --master_port 12345 \
        --node_rank 0 \
        --nproc_per_node 1 \
        infer.py \
        --config ./configs/infer.yaml \
        --model_path /path/to/model \
        --input_path your_own_images_path \
        --lmk_path ./inference_temple/test_temple.npy \
        --output_path your_own_output_path

You can make your own emoji using our model. First, create your emoji template (the repository calls it a "temple") using MediaPipe. We provide the script in make_temple.ipynb. Replace the video path with your own emoji video to generate the .npy file.
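Once the .npy file exists, the inference call with a custom template can also be assembled from Python. The sketch below mirrors the flags of the shell command in this README and only builds the argument list without running anything. The function name `build_infer_command` and the `127.0.0.1` fallback for `LOCAL_IP` are assumptions for illustration.

```python
import os
import shlex
import sys


def build_infer_command(model_path, input_path, lmk_path, output_path,
                        config="./configs/infer.yaml", master_port=12345):
    """Return the inference command as an argument list (nothing is executed)."""
    # LOCAL_IP mirrors the $LOCAL_IP variable in the shell command; the
    # 127.0.0.1 fallback is an assumption for single-machine runs.
    master_addr = os.environ.get("LOCAL_IP", "127.0.0.1")
    return [
        sys.executable, "-m", "torch.distributed.run",
        "--nnodes", "1",
        "--master_addr", master_addr,
        "--master_port", str(master_port),
        "--node_rank", "0",
        "--nproc_per_node", "1",
        "infer.py",
        "--config", config,
        "--model_path", model_path,
        "--input_path", input_path,
        "--lmk_path", lmk_path,
        "--output_path", output_path,
    ]


if __name__ == "__main__":
    cmd = build_infer_command("/path/to/model", "your_own_images_path",
                              "your_own_temple_path", "your_own_output_path")
    print(shlex.join(cmd))  # inspect the command before running it
```

To execute the command, the list could be passed to `subprocess.run` with `CUDA_VISIBLE_DEVICES` set in the environment, matching the shell form.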

    CUDA_VISIBLE_DEVICES=0 python3 -m torch.distributed.run \
        --nnodes 1 \
        --master_addr $LOCAL_IP \
        --master_port 12345 \
        --node_rank 0 \
        --nproc_per_node 1 \
        infer.py \
        --config ./configs/infer.yaml \
        --model_path /path/to/model \
        --input_path your_own_images_path \
        --lmk_path your_own_temple_path \
        --output_path your_own_output_path

Follow-Your-Pose: Pose-Guided text-to-Video Generation.

Follow-Your-Click: Open-domain Regional image animation via Short Prompts.

Follow-Your-Handle: Controllable Video Editing via Control Handle Transformations.

Follow-Your-Emoji: Fine-Controllable and Expressive Freestyle Portrait Animation.

If you find Follow-Your-Emoji useful for your research, please 🌟 this repo and cite our work using the following BibTeX:

@article{ma2024follow,

title={Follow-Your-Emoji: Fine-Controllable and Expressive Freestyle Portrait Animation},

author={Ma, Yue and Liu, Hongyu and Wang, Hongfa and Pan, Heng and He, Yingqing and Yuan, Junkun and Zeng, Ailing and Cai, Chengfei and Shum, Heung-Yeung and Liu, Wei and others},

journal={arXiv preprint arXiv:2406.01900},

year={2024}

}