- Updated ddm_const_2, replacing the noise schedule \sqrt{t} with t.

- 2024-02-27: This work inspired DiffMOT, a paper on multiple object tracking, which was accepted to CVPR 2024.

- 2023-12-09: This work inspired DiffusionEdge, a paper on edge detection, which was accepted to AAAI 2024.

- We have added training for text-to-image generation; please refer to text-2-img.

- We have modified the two-branch UNet into a single-decoder UNet architecture.

You can use the single-decoder UNet in uncond-unet-sd and cond-unet-sd.
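As we read the DDM paper, the `const` variants attenuate the image linearly to zero by t = 1 and add Gaussian noise, so a noisy sample is x_t = (1 - t) x_0 + \sqrt{t} ε; under that reading, the ddm_const_2 update changes the noise coefficient to t. The minimal sketch below contrasts the two coefficients under this assumption. `forward_sample` is a hypothetical helper, not the repository's implementation.

```python
import math
from typing import List, Sequence


def forward_sample(x0: Sequence[float], eps: Sequence[float], t: float,
                   schedule: str = "sqrt") -> List[float]:
    """Hypothetical helper: x_t = (1 - t) * x0 + sigma(t) * eps.

    Assumes the constant-attenuation form, where the image term decays
    linearly to zero at t = 1. schedule="sqrt" uses sigma(t) = sqrt(t)
    (original ddm_const); schedule="linear" uses sigma(t) = t
    (our reading of the ddm_const_2 update).
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    if schedule == "sqrt":
        sigma = math.sqrt(t)
    elif schedule == "linear":
        sigma = t
    else:
        raise ValueError(f"unknown schedule: {schedule!r}")
    # Image term shrinks to zero while the noise term grows to eps.
    return [(1.0 - t) * a + sigma * e for a, e in zip(x0, eps)]


if __name__ == "__main__":
    x0, eps = [2.0], [4.0]
    for t in (0.0, 0.25, 1.0):
        print(t, forward_sample(x0, eps, t, "sqrt"),
              forward_sample(x0, eps, t, "linear"))
```

Both coefficients agree at t = 0 and t = 1. For 0 < t < 1 the linear one is smaller because t < \sqrt{t}, so less noise is injected at intermediate times.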

- install torch

pip install torch==1.12.1+cu113 torchvision==0.13.1+cu113 torchaudio==0.12.1 --extra-index-url https://download.pytorch.org/whl/cu113

- install other packages.

pip install -r requirement.txt

- prepare accelerate config.

accelerate config
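A quick way to confirm that the pinned versions from the pip command were picked up is to read the installed package metadata. The sketch below uses only the standard library (`importlib.metadata`), so it works even if torch itself fails to import. The helper names are hypothetical, and the pins simply mirror the pip command above.

```python
from importlib import metadata
from typing import Dict, Iterable, Optional

# Versions pinned in the pip command above; local tags such as "+cu113" are ignored.
EXPECTED = {"torch": "1.12.1", "torchvision": "0.13.1", "torchaudio": "0.12.1"}


def installed_versions(packages: Iterable[str]) -> Dict[str, Optional[str]]:
    """Return {package: installed version, or None if it is missing}."""
    found: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            found[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            found[name] = None
    return found


def mismatches(expected: Dict[str, str],
               found: Dict[str, Optional[str]]) -> Dict[str, Optional[str]]:
    """Return packages whose installed base version differs from the pin."""
    bad: Dict[str, Optional[str]] = {}
    for name, want in expected.items():
        have = found.get(name)
        if have is None or have.split("+", 1)[0] != want:
            bad[name] = have
    return bad


if __name__ == "__main__":
    found = installed_versions([*EXPECTED, "accelerate"])
    for name, version in found.items():
        print(f"{name}: {version or 'NOT INSTALLED'}")
    print("mismatches:", mismatches(EXPECTED, found) or "none")
```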

The file structure should look like:

(a) unconditional cifar10:

cifar-10-python

|-- cifar-10-batches-py

| |-- data_batch_1

| |-- data_batch_2

| |-- XXX

(b) unconditional CelebA-HQ:

celebahq

|-- celeba_hq_256

| |-- 00000.jpg

| |-- 00001.jpg

| |-- XXXXX.jpg

(c) conditional DIV2K:

DIV2K

|-- DIV2K_train_HR

| |-- 0001.png

| |-- 0002.png

| |-- XXXX.png

|-- DIV2K_valid_HR

| |-- 0801.png

| |-- 0802.png

| |-- XXXX.png

(d) conditional DUTS:

DUTS

|-- DUTS-TR

| |-- DUTS-TR-Image

| | |-- XXX.jpg

| |-- DUTS-TR-Mask

| | |-- XXX.png

|-- DUTS-TE

| |-- DUTS-TE-Image

| | |-- XXX.jpg

| |-- DUTS-TE-Mask

| | |-- XXX.png
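The expected layout can be checked before launching training. The sketch below copies the directory names from the trees above into a hypothetical `EXPECTED_DIRS` table, where `root` is the dataset folder itself (e.g. the path to `DUTS`). Based on the file extensions shown, it also assumes that each DUTS image `XXX.jpg` has a mask `XXX.png` with the same stem.

```python
from pathlib import Path
from typing import Dict, List, Union

# Sub-directories transcribed from the file trees above (hypothetical table).
EXPECTED_DIRS: Dict[str, List[str]] = {
    "cifar10": ["cifar-10-batches-py"],
    "celebahq": ["celeba_hq_256"],
    "div2k": ["DIV2K_train_HR", "DIV2K_valid_HR"],
    "duts": ["DUTS-TR/DUTS-TR-Image", "DUTS-TR/DUTS-TR-Mask",
             "DUTS-TE/DUTS-TE-Image", "DUTS-TE/DUTS-TE-Mask"],
}


def missing_dirs(root: Union[str, Path], dataset: str) -> List[str]:
    """List expected sub-directories that do not exist under root."""
    root = Path(root)
    return [sub for sub in EXPECTED_DIRS[dataset] if not (root / sub).is_dir()]


def unpaired_duts(root: Union[str, Path], split: str = "TR") -> List[str]:
    """Return image stems in DUTS-<split>-Image that lack a matching .png mask."""
    base = Path(root) / f"DUTS-{split}"
    images = {p.stem for p in (base / f"DUTS-{split}-Image").glob("*.jpg")}
    masks = {p.stem for p in (base / f"DUTS-{split}-Mask").glob("*.png")}
    return sorted(images - masks)
```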

- training on unconditional CIFAR-10:

accelerate launch train_uncond_dpm.py --cfg ./configs/cifar10/ddm_uncond_const_uncond_unet.yaml

- unconditional CelebA-HQ, training the auto-encoder:

accelerate launch train_vae.py --cfg ./configs/celebahq/celeb_ae_kl_256x256_d4.yaml

- add the auto-encoder weights trained in the first step to the config file ./configs/celebahq/celeb_uncond_ddm_const_uncond_unet_ldm.yaml (line 41), then train the latent diffusion model:

accelerate launch train_uncond_ldm.py --cfg ./configs/celebahq/celeb_uncond_ddm_const_uncond_unet_ldm.yaml
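If you prefer to script the line-41 edit instead of doing it by hand, the minimal sketch below may help. `set_value_on_line` is a hypothetical, standard-library-only helper that replaces the value of the `key: value` entry on a given line while keeping the key and its indentation. It assumes line 41 is a single-line mapping entry. The key name is not given here, so print that line first to confirm.

```python
def set_value_on_line(yaml_text: str, lineno: int, value: str) -> str:
    """Return yaml_text with the value on 1-based line `lineno` replaced.

    Only the part after the first ':' changes; the key, its indentation and
    the line ending are preserved. A trailing comment on that line is dropped.
    """
    lines = yaml_text.splitlines(keepends=True)
    if not 1 <= lineno <= len(lines):
        raise IndexError(f"line {lineno} is out of range (1..{len(lines)})")
    line = lines[lineno - 1]
    body = line.rstrip("\r\n")
    ending = line[len(body):]  # keep the original newline style, if any
    key, sep, _old_value = body.partition(":")
    if not sep:
        raise ValueError(f"line {lineno} is not a 'key: value' entry: {body!r}")
    lines[lineno - 1] = f"{key}: {value}{ending}"
    return "".join(lines)
```

For example, read the config with `Path.read_text()`, pass the text, `41` and the path to your auto-encoder checkpoint, and write the result back with `Path.write_text()`.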

- DIV2K super-resolution, training the auto-encoder:

accelerate launch train_vae.py --cfg ./configs/super-resolution/div2k_ae_kl_512x512_d4.yaml

- training the latent diffusion model:

accelerate launch train_cond_ldm.py --cfg ./configs/super-resolution/div2k_cond_ddm_const_ldm.yaml

- training on DUTS saliency detection:

accelerate launch train_cond_dpm.py --cfg ./configs/saliency/DUTS_ddm_const_dpm_114.yaml

To change the number of sampling steps, edit "sampling_timesteps" in the config file.
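The nesting of "sampling_timesteps" may differ between config files, so one option is to search the loaded config recursively and update every occurrence. The sketch assumes PyYAML is installed (imported inside `write_with_steps`) and that the configs are plain YAML; both helper names are hypothetical. It writes a new file rather than overwriting the original, because re-dumping YAML drops comments and shifts line numbers, which would invalidate references such as "line 41" above.

```python
from typing import Any


def set_key_everywhere(cfg: Any, key: str, value: Any) -> int:
    """Set `key` to `value` at every depth of a nested dict/list config.

    Returns how many entries were updated; 0 means the key was not found.
    """
    count = 0
    if isinstance(cfg, dict):
        for k, v in list(cfg.items()):
            if k == key:
                cfg[k] = value
                count += 1
            else:
                count += set_key_everywhere(v, key, value)
    elif isinstance(cfg, list):
        for item in cfg:
            count += set_key_everywhere(item, key, value)
    return count


def write_with_steps(src: str, dst: str, steps: int) -> int:
    """Copy the YAML config at src to dst with every sampling_timesteps set to steps."""
    import yaml  # PyYAML, assumed available because the configs are YAML files

    with open(src, encoding="utf-8") as f:
        cfg = yaml.safe_load(f)
    updated = set_key_everywhere(cfg, "sampling_timesteps", steps)
    if updated == 0:
        raise KeyError(f"'sampling_timesteps' not found in {src}")
    with open(dst, "w", encoding="utf-8") as f:
        yaml.safe_dump(cfg, f, sort_keys=False)
    return updated
```

For example, `write_with_steps("./configs/cifar10/ddm_uncond_const_uncond_unet.yaml", "cifar10_10steps.yaml", 10)` writes a copy (the output name is illustrative) that can then be passed to `--cfg`.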

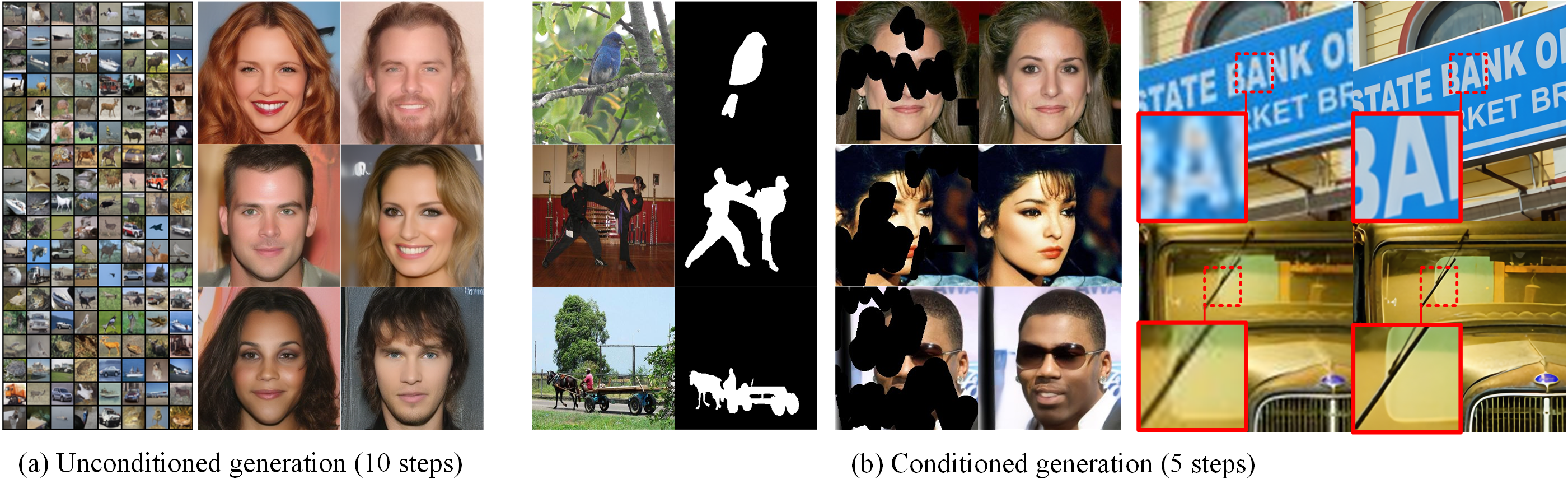

- unconditional generation:

python sample_uncond.py --cfg ./configs/cifar10/ddm_uncond_const_uncond_unet.yaml

python sample_uncond.py --cfg ./configs/celebahq/celeb_uncond_ddm_const_uncond_unet_ldm.yaml

- conditional generation (Latent space model):

- Super-resolution:

python ./eval_downstream/eval_sr.py --cfg ./configs/super-resolution/div2k_sample.yaml

- Inpainting:

python ./eval_downstream/sample_inpainting.py --cfg ./configs/celebahq/celeb_uncond_ddm_const_uncond_unet_ldm_sample.yaml

- Saliency:

python ./eval_downstream/eval_saliency.py --cfg ./configs/saliency/DUTS_sample_114.yaml

- download the LAION data from laion.

- download the metadata using img2dataset; please refer to here.

- install clip.

pip install ftfy regex tqdm

pip install git+https://github.com/openai/CLIP.git

- The final data structure looks like:

|-- laion

| |-- 00000.tar

| |-- 00001.tar

| |-- XXXXX.tar
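To sanity-check the shards before training, each tar file can be opened with the standard `tarfile` module. The sketch assumes img2dataset's webdataset output convention, in which the files of one sample share a key and differ only in extension (for example `000000000.jpg`, `000000000.txt` for the caption, `000000000.json` for metadata). `group_samples` and `caption_pairs` are hypothetical helpers, not this repository's data loader. The first reads a whole shard into memory, which is fine for spot checks.

```python
import tarfile
from collections import defaultdict
from typing import Dict, Iterator, Tuple


def group_samples(tar_path: str) -> Dict[str, Dict[str, bytes]]:
    """Group the regular files of a webdataset-style shard by sample key.

    The key is the member path up to the first '.' of its file name; the rest
    is the extension, e.g. "000000000.jpg" -> ("000000000", "jpg").
    """
    samples: Dict[str, Dict[str, bytes]] = defaultdict(dict)
    with tarfile.open(tar_path) as tar:
        for member in tar.getmembers():
            if not member.isfile():
                continue
            parent, _, base = member.name.rpartition("/")
            stem, dot, ext = base.partition(".")
            if not dot:
                continue  # no extension: not part of a sample
            key = f"{parent}/{stem}" if parent else stem
            samples[key][ext] = tar.extractfile(member).read()
    return dict(samples)


def caption_pairs(samples: Dict[str, Dict[str, bytes]],
                  image_ext: str = "jpg") -> Iterator[Tuple[str, bytes, str]]:
    """Yield (key, image bytes, caption) for samples that have both parts."""
    for key in sorted(samples):
        parts = samples[key]
        if image_ext in parts and "txt" in parts:
            yield key, parts[image_ext], parts["txt"].decode("utf-8")
```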

- train with the config file text-2-img:

accelerate launch train_cond_ldm.py --cfg ./configs/text2img/ddm_uncond_const.yaml

Note that the pretrained AutoEncoder weights should be downloaded from here and unzipped.
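Any archive tool can unzip the weights. The sketch below uses Python's standard `zipfile` module and returns the extracted file paths, which is convenient when you then need to reference the checkpoint in a config. `unzip_weights` and the destination folder are hypothetical; the archive name is whatever the download link provides.

```python
import zipfile
from pathlib import Path
from typing import List


def unzip_weights(zip_path: str, dest_dir: str) -> List[Path]:
    """Extract a downloaded weight archive into dest_dir and list the files."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as zf:
        zf.extractall(dest)
        # Skip directory entries; keep only extracted files.
        names = [n for n in zf.namelist() if not n.endswith("/")]
    return [dest / n for n in names]
```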

| Task | Weight | Config |
|---|---|---|
| Uncond-Cifar10 | url | url |
| Uncond-Celeb | url | url |

If you have any questions, please contact huangai@nudt.edu.cn.

Thanks to the public repos DDPM and LDM for providing the base code.

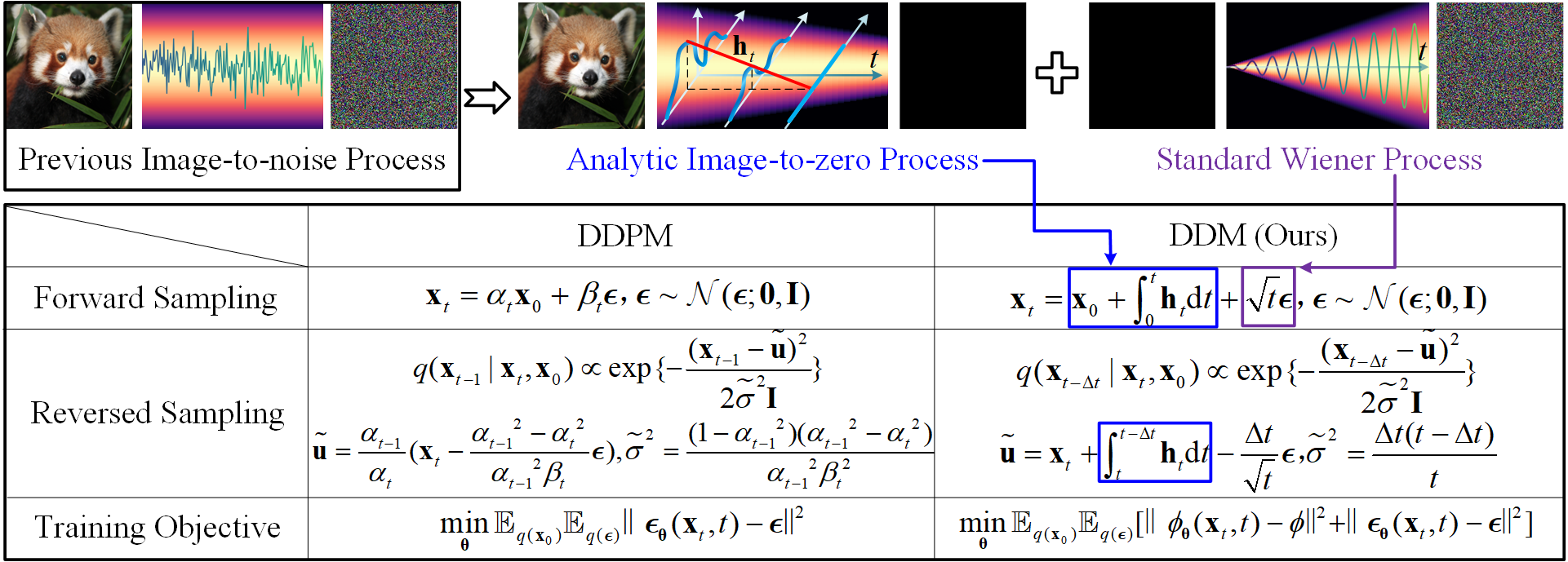

@article{huang2023decoupled,
  title={Decoupled Diffusion Models: Simultaneous Image to Zero and Zero to Noise},
  author={Huang, Yuhang and Qin, Zheng and Liu, Xinwang and Xu, Kai},
  journal={arXiv preprint arXiv:2306.13720},
  year={2023}
}