X-Decoder: Generalized Decoding for Pixel, Image, and Language

[Project Page] [Paper] [HuggingFace All-in-One Demo] [HuggingFace Instruct Demo] [Video]

by Xueyan Zou*, Zi-Yi Dou*, Jianwei Yang*, Zhe Gan, Linjie Li, Chunyuan Li, Xiyang Dai, Harkirat Behl, Jianfeng Wang, Lu Yuan, Nanyun Peng, Lijuan Wang, Yong Jae Lee^, Jianfeng Gao^ in CVPR 2023.

🌶️ Getting Started

We release the following for both SEEM and X-Decoder❗

- Demo Code

- Model Checkpoint

- Comprehensive User Guide

- Training Code

- Evaluation Code

👉 One-Line SEEM Demo on Linux:

git clone git@github.com:UX-Decoder/Segment-Everything-Everywhere-All-At-Once.git && cd Segment-Everything-Everywhere-All-At-Once && sh assets/scripts/run_demo.sh

📍 [New] Getting Started:

📍 [New] Latest Checkpoints and Numbers:

| Method | Checkpoint | Backbone | COCO PQ ↑ | COCO mAP ↑ | COCO mIoU ↑ | Ref-COCOg cIoU ↑ | Ref-COCOg mIoU ↑ | Ref-COCOg AP50 ↑ | VOC NoC85 ↓ | VOC NoC90 ↓ | SBD NoC85 ↓ | SBD NoC90 ↓ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| X-Decoder | ckpt | Focal-T | 50.8 | 39.5 | 62.4 | 57.6 | 63.2 | 71.6 | - | - | - | - |
| X-Decoder-oq201 | ckpt | Focal-L | 56.5 | 46.7 | 67.2 | 62.8 | 67.5 | 76.3 | - | - | - | - |
| SEEM_v0 | ckpt | Focal-T | 50.6 | 39.4 | 60.9 | 58.5 | 63.5 | 71.6 | 3.54 | 4.59 | * | * |
| SEEM_v0 | - | Davit-d3 | 56.2 | 46.8 | 65.3 | 63.2 | 68.3 | 76.6 | 2.99 | 3.89 | 5.93 | 9.23 |
| SEEM_v0 | ckpt | Focal-L | 56.2 | 46.4 | 65.5 | 62.8 | 67.7 | 76.2 | 3.04 | 3.85 | * | * |
| SEEM_v1 | ckpt | Focal-T | 50.8 | 39.4 | 60.7 | 58.5 | 63.7 | 72.0 | 3.19 | 4.13 | * | * |
| SEEM_v1 | ckpt | SAM-ViT-B | 52.0 | 43.5 | 60.2 | 54.1 | 62.2 | 69.3 | 2.53 | 3.23 | * | * |
| SEEM_v1 | ckpt | SAM-ViT-L | 49.0 | 41.6 | 58.2 | 53.8 | 62.2 | 69.5 | 2.40 | 2.96 | * | * |

SEEM_v0: supports training and inference with a single interactive object.

SEEM_v1: supports training and inference with multiple interactive objects.

🔥 News

- [2023.10.04] We are excited to release ✅ training/evaluation/demo code, ✅ new checkpoints, and ✅ comprehensive READMEs for both X-Decoder and SEEM!

- [2023.09.24] We provide new demo commands and code for inference (DEMO.md)!

- [2023.07.19] 🎢 We are excited to release the X-Decoder training code (INSTALL.md, DATASET.md, TRAIN.md, EVALUATION.md)!

- [2023.07.10] We release Semantic-SAM, a universal image segmentation model that can segment and recognize anything at any desired granularity. Code and checkpoint are available!

- [2023.04.14] We are releasing SEEM, a new universal interactive interface for image segmentation! You can use it for any segmentation task, well beyond what X-Decoder can do!

- [2023.03.20] Inspired by X-Decoder, we developed OpenSeeD ([Paper][Code]) to enable open-vocabulary segmentation and detection with a single model. Check it out!

- [2023.03.14] We release X-GPT, a conversational version of X-Decoder built on GPT-3 and LangChain!

- [2023.03.01] The Segmentation in the Wild Challenge has been launched and is open for submissions!

- [2023.02.28] We released the SGinW benchmark for our challenge. You are welcome to build your own models on the benchmark!

- [2023.02.27] Our X-Decoder has been accepted by CVPR 2023!

- [2023.02.07] We combine X-Decoder (strong image understanding), GPT-3 (strong language understanding), and Stable Diffusion (strong image generation) into an instructional image editing demo. Check it out!

- [2022.12.21] We release inference code of X-Decoder.

- [2022.12.21] We release Focal-T pretrained checkpoint.

- [2022.12.21] We release open-vocabulary segmentation benchmark.

🖌️ DEMO

🫐 [X-GPT] 🍓 [Instruct X-Decoder]

🎶 Introduction

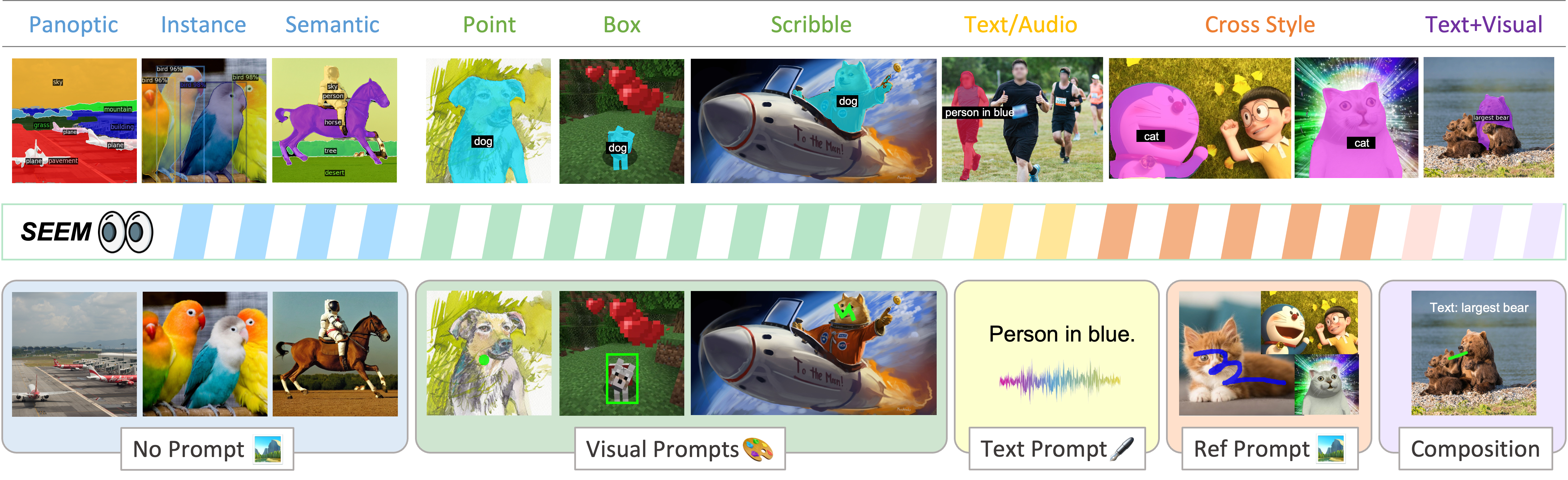

X-Decoder is a generalized decoding model that can seamlessly generate both pixel-level segmentation and token-level text!

It achieves:

- State-of-the-art results on open-vocabulary segmentation and referring segmentation on eight datasets;

- Finetuned performance better than or competitive with generalist and specialist models on segmentation and vision-language (VL) tasks;

- Efficient finetuning and flexible composition of novel tasks.

It supports:

- One suite of parameters pretrained for Semantic/Instance/Panoptic Segmentation, Referring Segmentation, Image Captioning, and Image-Text Retrieval;

- One model architecture finetuned for Semantic/Instance/Panoptic Segmentation, Referring Segmentation, Image Captioning, Image-Text Retrieval and Visual Question Answering (with an extra cls head);

- Zero-shot task composition for Region Retrieval, Referring Captioning, and Image Editing.

Acknowledgement

- We appreciate the constructive discussion with Haotian Zhang

- We build our work on top of Mask2Former

- We build our demos on HuggingFace 🤗 with sponsored GPUs

- We appreciate the discussion with Xiaoyu Xiang during the rebuttal

Citation

@article{zou2022xdecoder,
  author = {Zou*, Xueyan and Dou*, Zi-Yi and Yang*, Jianwei and Gan, Zhe and Li, Linjie and Li, Chunyuan and Dai, Xiyang and Behl, Harkirat and Wang, Jianfeng and Yuan, Lu and Peng, Nanyun and Wang, Lijuan and Lee*, Yong Jae and Gao*, Jianfeng},
  title = {Generalized Decoding for Pixel, Image, and Language},
  publisher = {arXiv},
  year = {2022},
}