VQGAN-CLIP has been in vogue for generating art using deep learning. Searching the r/deepdream subreddit for VQGAN-CLIP yields quite a number of results. In short, VQGAN can generate fairly high-fidelity images, while CLIP can score how well an image matches a text caption. Combined, VQGAN-CLIP takes a prompt from human input and iterates to generate an image that fits the prompt.

Huge thanks to the creators for sharing detailed, well-explained notebooks on Google Colab. The VQGAN-CLIP technique has become very popular, even among arts communities.

Originally made by Katherine Crowson. The original BigGAN+CLIP method was by advadnoun. Special thanks to Ahsen Khaliq for the Gradio code.

The original colab Notebook Link

Taming Transformers Github Repo and CLIP Github Repo

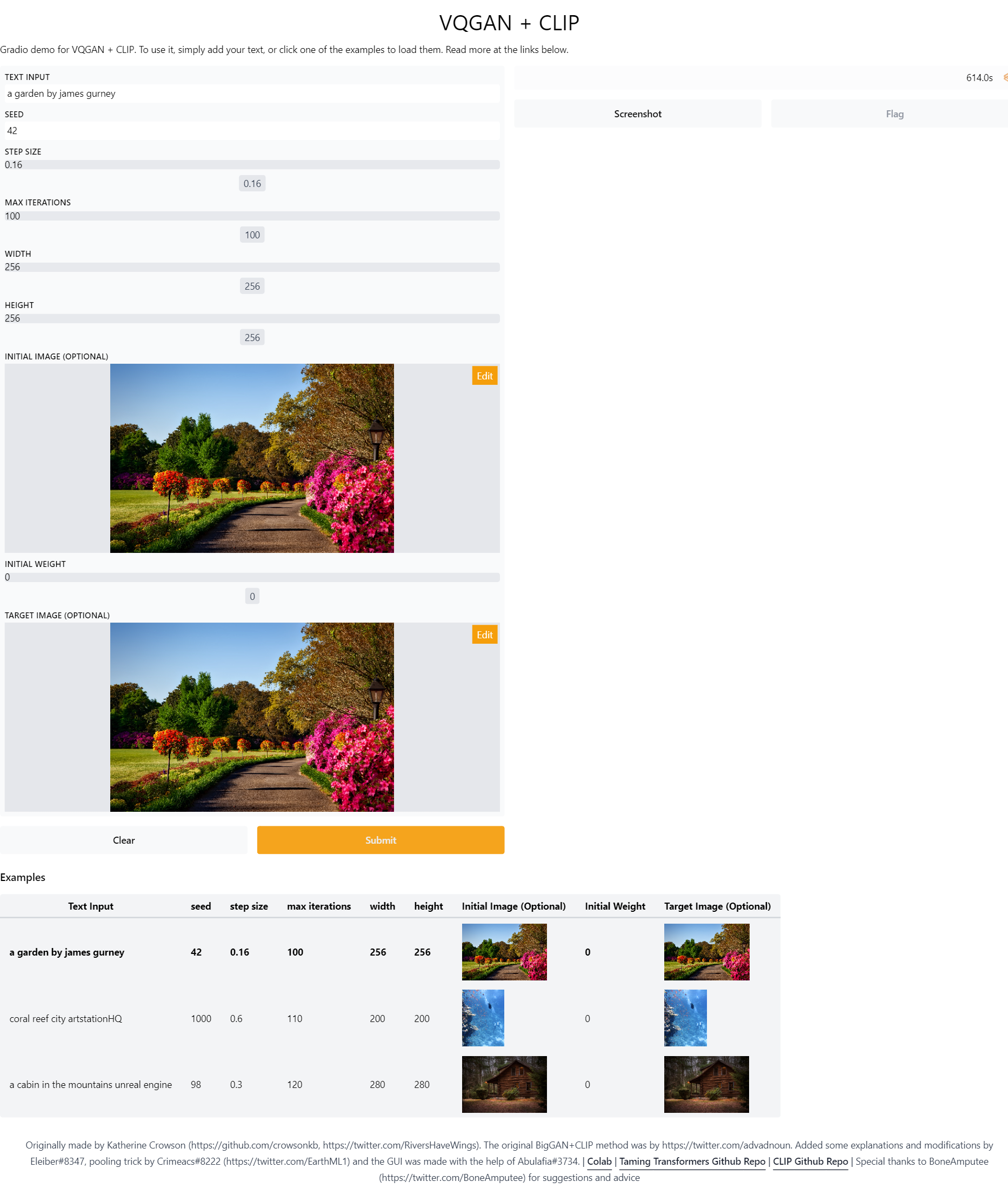

This is a simple Gradio app for generating VQGAN-CLIP images in a local environment; see the screenshot of the UI.

The steps for setup are based on the Colab referenced above.

- Git clone this repo: `git clone https://github.com/krishnakaushik25/VQGAN-CLIP`

- After that, `cd` into the repo and run `pip install gradio` to install Gradio.

- Install the required Python libraries: `pip install -r requirements.txt`

- Then run `python app.py`

- Wait a few minutes for it to start. Once it reports that it is running on localhost, open http://localhost:7860 in a browser.

- You can select any of the examples listed in the app, or enter text and parameters of your choice. Some examples can take 30 minutes to 1 hour if the number of model iterations is high.
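
Since startup can take a few minutes, it may help to poll the local address until the app answers before opening the browser. The following is a minimal sketch using only the Python standard library; `wait_for_server` is a hypothetical helper, not part of this repo, and the default URL simply mirrors the address mentioned in the steps.

```python
import time
import urllib.error
import urllib.request


def wait_for_server(url="http://localhost:7860", timeout_s=300.0, poll_s=2.0):
    """Poll `url` until it answers an HTTP request or `timeout_s` elapses.

    Hypothetical helper for illustration; not part of the repo.
    """
    # Bypass any configured proxy, since the target is a local server.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with opener.open(url, timeout=5):
                return True  # any successful response means the app is up
        except urllib.error.HTTPError:
            return True  # the server answered, even if with an error status
        except (urllib.error.URLError, OSError):
            time.sleep(poll_s)  # nothing is listening yet; retry shortly
    return False
```

Calling `wait_for_server()` in a second terminal after starting `python app.py` returns `True` as soon as the page is reachable, or `False` if the timeout passes first.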

How many steps to run VQGAN-CLIP? There is no hard rule on how many steps it takes to get a good image, and generated images are not guaranteed to be interesting. Experiment!

Running too few steps can produce very poor images.
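
To build some intuition for why the step count matters, here is a toy stand-in for the optimization loop. It is not the real method: the actual technique decodes a VQGAN latent into an image and scores it with CLIP, with gradients supplied by an autograd framework. In this sketch the "embedding" is just the latent vector itself, the "prompt" is a fixed target vector, and the gradient of the cosine similarity is written out by hand. All names (`toy_optimize`, `cosine_grad`) and parameter values are assumptions made for illustration.

```python
import math
import random


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def cosine_grad(z, t):
    """Gradient of cosine(z, t) with respect to z."""
    nz = math.sqrt(sum(x * x for x in z))
    nt = math.sqrt(sum(y * y for y in t))
    dot = sum(x * y for x, y in zip(z, t))
    return [ti / (nz * nt) - dot * zi / (nz ** 3 * nt) for zi, ti in zip(z, t)]


def toy_optimize(target, steps, lr=0.5, seed=0):
    """Gradient ascent on similarity to `target`; returns the score per step.

    Toy assumption: the latent z doubles as the image embedding.
    """
    rng = random.Random(seed)
    z = [rng.gauss(0.0, 1.0) for _ in target]  # random starting latent
    history = []
    for _ in range(steps):
        grad = cosine_grad(z, target)
        z = [zi + lr * gi for zi, gi in zip(z, grad)]  # move toward the prompt
        history.append(cosine(z, target))
    return history


if __name__ == "__main__":
    prompt_embedding = [0.3, -1.2, 0.8, 0.1, -0.5, 1.5, 0.0, 0.7]
    for n in (10, 100, 500):
        print(n, "steps -> similarity", round(toy_optimize(prompt_embedding, n)[-1], 4))
```

In this toy setting the score only improves with more steps. Real runs are noisier, which is why checking intermediate images and experimenting with the step count is still the practical advice.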

app_file: Path to your main application file (which contains either gradio or streamlit Python code).

In this repo, the application file is app.py, which is built on Gradio.
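
For readers unfamiliar with Gradio, the sketch below shows roughly what a Gradio-based application file can look like. It is not the repo's actual app.py: `generate` is a hypothetical placeholder that returns text instead of an image, the labels and slider range are invented, and the component names assume a recent Gradio release (older versions exposed inputs under a different namespace).

```python
def generate(prompt, steps):
    """Hypothetical placeholder for the VQGAN-CLIP generation routine."""
    steps = int(steps)
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if steps < 1:
        raise ValueError("steps must be at least 1")
    # A real app would run the optimization here and return an image.
    return f"Would run {steps} steps for prompt: {prompt.strip()}"


def build_app():
    """Wrap `generate` in a Gradio interface (requires `pip install gradio`)."""
    import gradio as gr  # imported lazily so the rest of the file works without it

    return gr.Interface(
        fn=generate,
        inputs=[
            gr.Textbox(label="Text prompt"),
            gr.Slider(minimum=1, maximum=500, value=100, step=1, label="Steps"),
        ],
        outputs=gr.Textbox(label="Result"),
        title="VQGAN-CLIP (sketch)",
    )
```

With Gradio installed, `build_app().launch(server_port=7860)` would serve this interface on the same local port mentioned in the setup steps.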

- Long, descriptive prompts can have surprisingly pleasant effects: Reddit post

- Unreal engine trick

- Appending "by James Gurney" for stylization

- Rotation and zoom effect with VQGAN+CLIP and RIFE

- The Streamlit version of VQGAN-CLIP
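
The prompt tricks listed above amount to adding style phrases to a base description. A small helper can make it easier to try combinations. This is only a sketch: `build_prompt` is a hypothetical function, and joining with a comma is an assumption, since the linked posts may format their prompts differently.

```python
def build_prompt(subject, modifiers=()):
    """Join a base description with optional style phrases.

    Hypothetical helper; the ", " separator is an assumption.
    """
    parts = [subject.strip()]
    # Skip empty modifiers so stray blanks do not leave dangling commas.
    parts.extend(m.strip() for m in modifiers if m.strip())
    return ", ".join(parts)


if __name__ == "__main__":
    base = "a castle at sunset"
    print(build_prompt(base))
    print(build_prompt(base, ["unreal engine"]))
    print(build_prompt(base, ["unreal engine", "by James Gurney"]))
```

Generating the same base prompt with and without each modifier is a simple way to see what a given phrase contributes.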