The goal of this project is to use computer vision to automatically segment debris and ice glaciers from satellite images.

Requirements are specified in requirements.txt. The full package source is
available in the glacier_mapping directory. Raw training data are Landsat 7
tiff images from the Hindu-Kush-Himalayan region. We consider the region of
Bhutan and Nepal. Shapefile labels of the glaciers are provided by ICIMOD.

The full preprocessing and training can be viewed at https://colab.research.google.com/drive/1ZkDtLB_2oQpSFDejKZ4YQ5MXW4c531R6?usp=sharing

Besides the raw tiffs and shapefiles, the required inputs are:

- conf/masking_paths.yaml: Says how to burn shapefiles into image masks.

- conf/postprocess.yaml: Says how to filter and transform sliced images.

- conf/train.yaml: Specifies training options.

At each step, the following intermediate files are created:

- generate_masks() --> writes mask_{id}.npy files and mask_metadata.csv

- write_pair_slices() --> writes slice_{tiff_id}img{slice_id}, slice_{tiff_id}label{slice_id}, and slice_0-100.geojson (depending on which lines from mask_metadata are sliced)

- postproces() --> copies slices*npy from the previous step into train/, dev/, test/ folders, and writes means and standard deviations to the path specified in postprocess.yaml

- glacier_mapping.train.* --> creates the data/runs/run_name folder, containing logs/ with tensorboard logs and models/ with all checkpoints
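As a rough illustration of how slices could be assigned to the train/, dev/, and test/ folders, here is a minimal sketch using only the standard library. The function name `split_paths`, the 80/10/10 fractions, the seed, and the example file names are all assumptions made for illustration. They are not taken from the package or from conf/postprocess.yaml.

```python
import random


def split_paths(paths, fractions=(0.8, 0.1, 0.1), seed=0):
    """Shuffle slice paths and partition them into train/dev/test lists.

    The fractions and seed are illustrative assumptions, not project defaults.
    """
    paths = sorted(paths)               # sort first so the shuffle is reproducible
    random.Random(seed).shuffle(paths)  # seeded shuffle, independent of global state
    n_train = int(fractions[0] * len(paths))
    n_dev = int(fractions[1] * len(paths))
    return {
        "train": paths[:n_train],
        "dev": paths[n_train:n_train + n_dev],
        "test": paths[n_train + n_dev:],  # whatever remains goes to test
    }


# Hypothetical slice file names, used only for this example.
example_paths = [f"slice_0_img_{i:03d}.npy" for i in range(10)]
splits = split_paths(example_paths)
```

Each returned list could then be copied into the folder of the same name, for example with `shutil.copy`.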

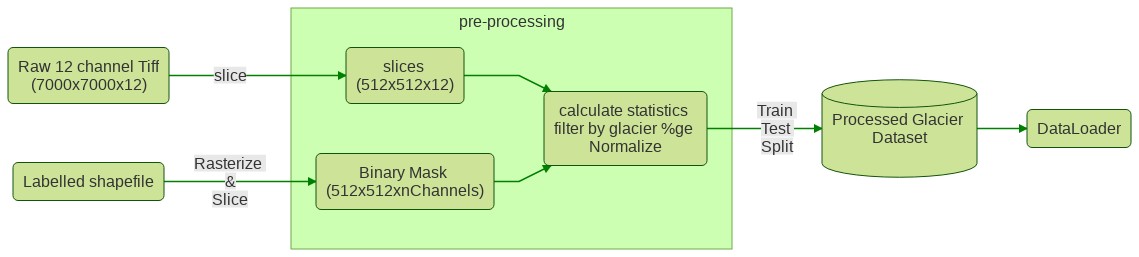

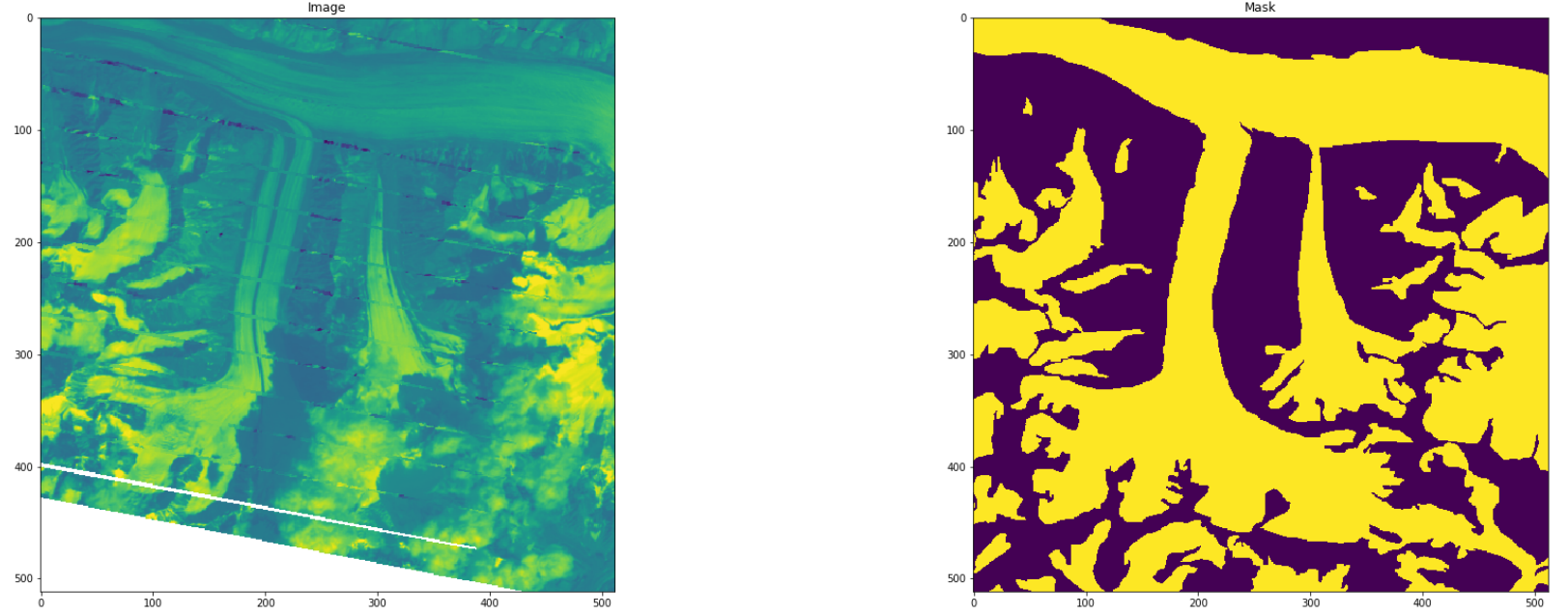

- Slicing: We slice the input tiffs into 512x512 tiles. The resulting tiles, along with the corresponding shapefile labels, are stored. Metadata for the slices is stored in a geojson file, `slicemetadata.geojson`. To slice, run: `python3 src/slice.py`

- Transformation: For easy processing, we convert the input images and labels into multi-dimensional numpy `.npy` files.

- Masking: The input shapefiles are transformed into masks, which are needed for use as labels. Each label is converted into a multi-channel image, with each channel representing a label class, i.e. 0 - Glacier, 1 - Debris, etc. To run transformation and masking: `python3 src/mask.py`

- Filtering: Returns the paths for pairs passing the filter criteria for a specific channel. Here we filter by the percentage of 1's in the filter channel.

- Random Split: The final dataset is saved in three folders: train/, test/, and dev/.

- Reshuffle: Shuffle the images and masks in the output directory.

- Imputation: Given an input, we check for missing values (NaNs) and replace them with 0.

- Generate stats: Generate statistics of the input image channels. Returns a dictionary with keys for the means and standard deviations across the channels of the input images.

- Normalization: We normalize the final dataset based on the calculated means and standard deviations.
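Several of the steps above can be sketched in a few lines of NumPy. This is a minimal illustration, not the package's implementation. The function names, the (H, W, C) array layout, the 5% filter threshold, the choice to drop partial edge tiles, and the small `eps` term are all assumptions.

```python
import numpy as np


def slice_tiles(img, size=512):
    """Cut an (H, W, C) array into non-overlapping size x size tiles.

    Partial tiles at the right/bottom edges are dropped (an assumption).
    """
    h, w = img.shape[:2]
    return [
        img[r:r + size, c:c + size]
        for r in range(0, h - size + 1, size)
        for c in range(0, w - size + 1, size)
    ]


def passes_filter(mask, channel=0, min_fraction=0.05):
    """True if the fraction of 1's in the filter channel reaches the threshold."""
    return float(np.mean(mask[..., channel] == 1)) >= min_fraction


def impute(img):
    """Replace NaNs with 0, leaving all other values unchanged."""
    return np.where(np.isnan(img), 0.0, img)


def channel_stats(imgs):
    """Per-channel means and standard deviations over a list of (H, W, C) tiles."""
    stacked = np.stack(imgs)  # shape (N, H, W, C)
    return {
        "means": stacked.mean(axis=(0, 1, 2)),
        "stds": stacked.std(axis=(0, 1, 2)),
    }


def normalize(img, stats, eps=1e-8):
    """Standardize each channel; eps guards against division by zero."""
    return (img - stats["means"]) / (stats["stds"] + eps)


# Synthetic stand-in for a 3-channel scene with one missing value.
rng = np.random.default_rng(0)
scene = rng.normal(size=(1024, 1536, 3))
scene[0, 0, 0] = np.nan

tiles = [impute(t) for t in slice_tiles(scene)]
stats = channel_stats(tiles)
normalized = [normalize(t, stats) for t in tiles]
```

Computing the statistics after imputation, as done here, keeps NaNs from propagating into the means and standard deviations.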

Model: Unet with dropout (default dropout rate is 0.2).

Labels: ICIMOD

- (2000, Nepal): Polygons dated more than 2 years before or after 2000 are filtered out. The original collection contains a few polygons from the 1990s.

- (2000, Bhutan): Used as is.

- (2010, Nepal): Polygons dated more than 2 years before or after 2010 are filtered out. The original collection covers 1980-2010.

- (2010, Bhutan): Used as is.
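The year-based filtering of the Nepal labels can be illustrated with a short sketch. The record structure, the `year` field name, and the treatment of polygons exactly 2 years from the target as kept are assumptions. The real shapefile attributes may differ.

```python
def within_window(polygon_year, target_year, window=2):
    """Keep a polygon if its year is at most `window` years from the target."""
    return abs(polygon_year - target_year) <= window


# Hypothetical label records; real shapefile attributes may be named differently.
records = [
    {"id": "a", "year": 1994},
    {"id": "b", "year": 1999},
    {"id": "c", "year": 2002},
    {"id": "d", "year": 2003},
]

kept = [r["id"] for r in records if within_window(r["year"], target_year=2000)]
```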

The code is open source and free for anyone to use under the MIT License.