J. Krishna Murthy, G.V. Sai Krishna, Falak Chhaya, and K. Madhava Krishna

Note: This is an abridged and simplified re-implementation of the ICRA 2017 paper "Reconstructing Vehicles From a Single Image: Shape Priors for Road Scene Understanding". Several factors, including an agreement, delayed this release for over a year and a half. Apologies for the inconvenience.

Contributors: Original implementation by Krishna Murthy. Re-implemented by Junaid Ahmed Ansari and Sarthak Sharma.

Should you find this code useful in your research, please consider citing the following publication.

@article{ Krishna_ICRA2017,

author = { Krishna Murthy, J. and Sai Krishna, G.V. and Chhaya, Falak and Madhava Krishna, K. },

title = { Reconstructing Vehicles From a Single Image: Shape Priors for Road Scene Understanding },

journal = { ICRA },

year = { 2017 },

}

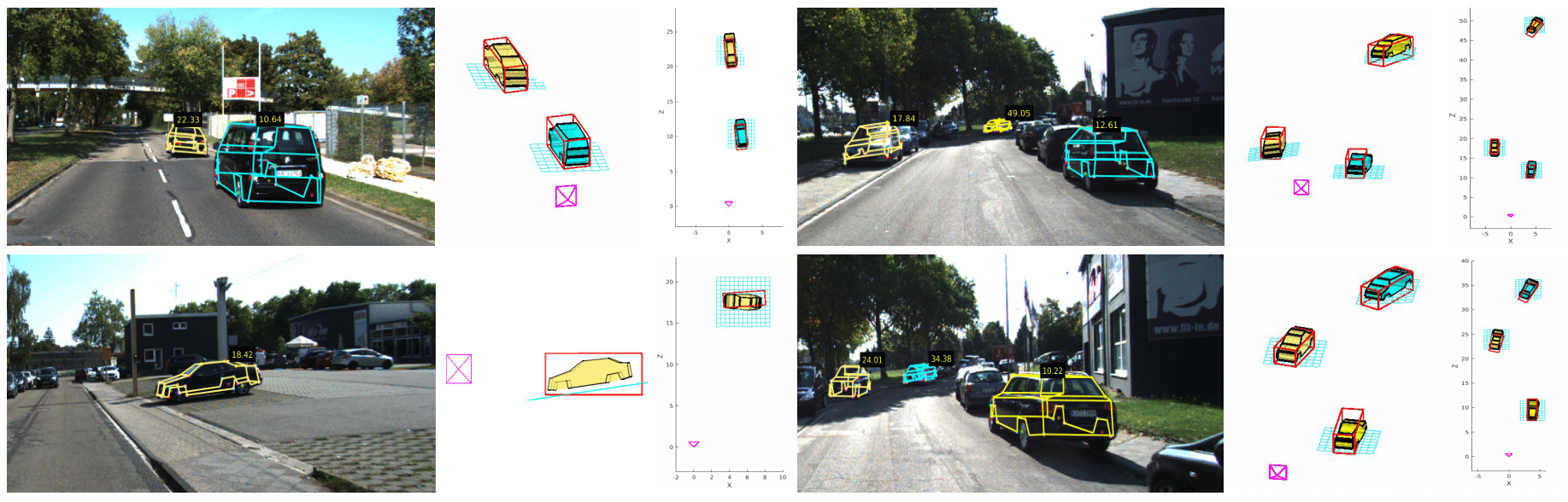

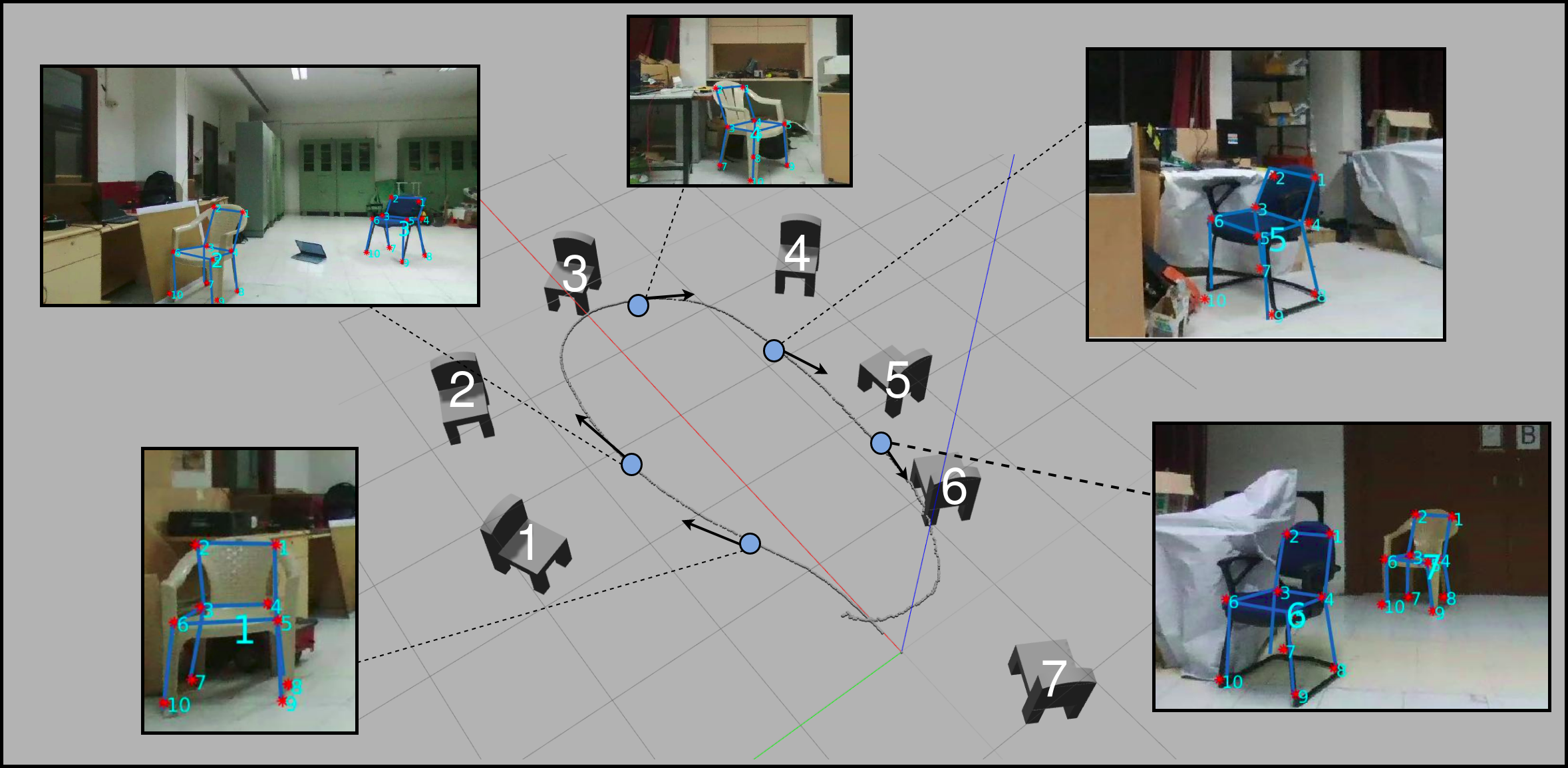

Our work presents one of the first techniques to reconstruct wireframe models of objects given just a single image. It is inspired by the fact that humans exploit prior knowledge of 3D shapes of objects to get a sense of how a 2D image of an object looks in 3D. We construct shape priors that can be used to formulate a bundle adjustment-like optimization problem and help reconstruct 3D wireframe models of an object, given a single image.
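To make the idea of a bundle adjustment-like problem with a shape prior more concrete, here is a minimal sketch in plain Python. It assumes a linear deformation model (a mean wireframe plus a weighted sum of basis shapes), a rotation about the camera Y axis, and a pinhole camera. All function names, the regularization term, and the toy numbers are illustrative assumptions; the Ceres cost functions in this repository may be formulated differently.

```python
import math

def deform(mean_shape, basis, coeffs):
    """Assumed shape prior: mean wireframe + sum_k coeffs[k] * basis[k]."""
    shape = []
    for i, (mx, my, mz) in enumerate(mean_shape):
        dx = sum(c * b[i][0] for c, b in zip(coeffs, basis))
        dy = sum(c * b[i][1] for c, b in zip(coeffs, basis))
        dz = sum(c * b[i][2] for c, b in zip(coeffs, basis))
        shape.append((mx + dx, my + dy, mz + dz))
    return shape

def project(point, yaw, translation, fx, fy, cx, cy):
    """Rotate about the camera Y axis, translate, then apply a pinhole projection."""
    X, Y, Z = point
    c, s = math.cos(yaw), math.sin(yaw)
    Xc = c * X + s * Z + translation[0]
    Yc = Y + translation[1]
    Zc = -s * X + c * Z + translation[2]
    return (fx * Xc / Zc + cx, fy * Yc / Zc + cy)

def reprojection_cost(observed, mean_shape, basis, coeffs, yaw,
                      translation, intrinsics, reg=1.0):
    """Sum of squared keypoint reprojection errors plus a penalty that
    keeps the shape coefficients small (hypothetical regularizer)."""
    cost = 0.0
    for (u, v), p in zip(observed, deform(mean_shape, basis, coeffs)):
        pu, pv = project(p, yaw, translation, *intrinsics)
        cost += (u - pu) ** 2 + (v - pv) ** 2
    return cost + reg * sum(c * c for c in coeffs)

# Toy example: four wireframe vertices and one basis shape that stretches the car.
mean = [(-1.0, 0.0, -2.0), (1.0, 0.0, -2.0), (1.0, 0.0, 2.0), (-1.0, 0.0, 2.0)]
basis = [[(0.0, 0.0, -1.0), (0.0, 0.0, -1.0), (0.0, 0.0, 1.0), (0.0, 0.0, 1.0)]]
K = (700.0, 700.0, 600.0, 180.0)   # fx, fy, cx, cy (made-up values)
t = (0.0, 1.5, 10.0)               # car position in the camera frame (made-up)
observed = [project(p, 0.3, t, *K) for p in deform(mean, basis, [0.5])]
print(reprojection_cost(observed, mean, basis, [0.0], 0.3, t, K, reg=0.0))
```

An optimizer would minimize this cost over the pose (yaw, translation) and the shape coefficients; in a sketch like this, the prior enters through the fixed mean shape and basis.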

This implementation uses Python as well as C++ libraries. Here is how to set everything up and get started.

If you are using a conda environment or a virtualenv, first activate it.

Install dependencies by running

pip install -r requirements.txt

Install Ceres Solver by following its installation instructions.

Switch to the directory named ceresCode.

cd ceresCode

Build the shape and pose adjuster scripts.

mkdir build

cd build

cmake ..

make

Ensure that Ceres Solver has been installed before building these scripts.

For now, we have included two examples from the KITTI Tracking dataset. Run the demo.py script to view a demo.

python demo.py

Note: This script MUST be run from the root directory of this repository.

If you are looking to run this code on some of your own examples of car images, you will first need the following information:

- Height above the ground at which the camera that captured the image is mounted.

- A bounding box of the car in `(x, y, w, h)` format, where `x` and `y` are the X and Y image coordinates of the top-left corner of the bounding box, `w` is the bounding box width, and `h` is the bounding box height.

- Keypoint locations. Our approach relies on a predefined set of semantic keypoints (wireframe vertices). These are obtained by extracting the image region inside the bounding box and passing it through a keypoint detector. We repurpose a stacked-hourglass model for this purpose. Get our keypoint detection inference code here.

- Viewpoint guess. Our approach also relies on an initial (poor) guess of the azimuth (heading/yaw) of the car being observed. We follow the KITTI convention (KITTI calls this variable `ry` in their Tracking dataset ground-truth).

Look at the directory data/examples to get a sense of how to represent this data in a format that the demo.py script can process.
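As a rough illustration of how the four inputs fit together, the sketch below bundles them in a small Python container and shows two common conversions. The `CarObservation` class and the helper names are hypothetical and are not the file format that demo.py reads (see data/examples for that). The sketch also assumes that the keypoint detector runs on a square resized crop and returns crop-relative coordinates, that the camera height is in metres, and that `ry` lies in the range [-pi, pi] used by KITTI.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class CarObservation:
    """Hypothetical container for the inputs; NOT the repository's data format."""
    camera_height: float                      # assumed to be in metres
    bbox: Tuple[float, float, float, float]   # (x, y, w, h), top-left corner + size
    keypoints: List[Tuple[float, float]]      # keypoints in full-image pixels
    ry: float                                 # initial yaw guess, KITTI convention

def bbox_corners(bbox):
    """Convert (x, y, w, h) to (x_min, y_min, x_max, y_max)."""
    x, y, w, h = bbox
    return (x, y, x + w, y + h)

def keypoints_to_image(keypoints, bbox, crop_size):
    """Map keypoints from a crop_size x crop_size crop (assumed detector
    input/output resolution) back to full-image pixel coordinates."""
    x, y, w, h = bbox
    return [(x + u * w / crop_size, y + v * h / crop_size) for u, v in keypoints]

def wrap_angle(theta):
    """Wrap an angle in radians to [-pi, pi)."""
    return (theta + math.pi) % (2 * math.pi) - math.pi

bbox = (100.0, 50.0, 200.0, 80.0)
crop_keypoints = [(0.0, 0.0), (32.0, 32.0), (64.0, 64.0)]
obs = CarObservation(
    camera_height=1.65,   # made-up value for illustration
    bbox=bbox,
    keypoints=keypoints_to_image(crop_keypoints, bbox, crop_size=64),
    ry=wrap_angle(1.5 * math.pi),
)
print(bbox_corners(obs.bbox), obs.keypoints, obs.ry)
```

If your detector reports keypoints at a different resolution or already in full-image coordinates, adjust or drop the rescaling step accordingly.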

This approach has successfully been ported to other object categories. (More on this to be added in due course.)