Genetic Algorithm with a SciPy-like interface:

# Minimization with box constraints

minimize(fun, bounds, x0=None, args=(), callback=None, options={}, workers=None)

# Minimization with box and inequality constraints

con_minimize(fun, bounds, constr, x0=None, args=(), callback=None, options={}, workers=None)

Main features:

- single-CPU and parallel modes,

- rectangular bounds and inequality constraints,

- adaptive mutation,

- suitable for large-scale non-convex problems,

- pure Python,

- continuous testing on latest Ubuntu and Python 3.7-3.10 but should work also on other distros and on Windows.

Two functions are available:

modestga.minimize()for minimization with simple rectangular bounds on parameters,modestga.con_minimize()for full constrained minimization (possibly nonlinear, noncontinuous).

The function con_minimize() is a wrapper over minimize() and has only one

extra argument constr with a list of constraint functions. The algorithm

tries to keep the constraint functions outputs above zero. The constraint

functions can be nonlinear and noncontinuous (actually any Python code is fine).

By default modestga.minimize() and modestga.con_minimize() run on all CPUs

and divides the population into smaller subpopulations (1 per CPU)

which exchange genes among one another after each generation.

To use multiple CPUs the cost function (and constraints) must be serializable.

If you want to minimize some function which cannot be serialized with

cloudpickle, run

the minimization on a single CPU (workers=1).

pip install modestga

To get the latest version, clone the repository and install using setup.py:

git clone https://github.com/krzysztofarendt/modestga

cd modestga

pip install -e .

Clone the repository and run:

pip install -e .

pip install pytest

pytest

You can also run some examples, e.g. optimization of the 50-dimensional Rastrigin function:

python modestga/examples/min_rastrigin_fun.py

...or run all examples at once:

bin/run_examples.sh

Minimal:

import random

import numpy as np

from modestga import minimize

# Define function to be minimized (can be noisy and non-convex)

def fun(x, *args):

return np.sum(x ** 2) + random.random()

# Specify parameter bounds (here: 100 parameters allowed to vary from 0 to 10)

bounds = [(0, 10) for i in range(100)]

# Overwrite default evolution options

options = {

'generations': 1000, # Max. number of generations

'pop_size': 500 # Population size

}

# Minimize

# (it uses all available CPUs by default)

res = minimize(fun, bounds, options=options)

# Print optimization result

print(res)Extended:

import logging

import random

import numpy as np

from modestga import minimize

# Set up logging if needed

logging.basicConfig(filename='ga.log', level='INFO', filemode='w')

# Define function to be minimized (can be noisy and non-convex)

def fun(x, *args):

return np.sum(x ** 2) + random.random()

# Specify parameter bounds (here: 100 parameters allowed to vary from 0 to 10)

bounds = [(0, 10) for i in range(100)]

# Overwrite default evolution options

options = {

'generations': 1000, # Max. number of generations

'pop_size': 500, # Population size

'mut_rate': 0.01, # Initial mutation rate (adaptive mutation)

'trm_size': 20, # Tournament size

'tol': 1e-3 # Solution tolerance

}

def callback(x, fx, ng, *args):

"""Callback function called after each generation"""

print(f"\nx={x}\nf(x)={fx}\n")

# Minimize using 3 CPUs

res = minimize(fun, bounds, callback=callback, options=options, workers=3)

# Print optimization result

print(res)

# Final parameters

x = res.x

# Final function value

fx = res.fx

# Number of function evaluations

nfev = res.nfevFor constrained optimization examples check out the following scripts:

modestga/examples/con_min_mishra_fun.pymodestga/examples/con_min_rosenbrock_fun.pymodestga/examples/con_min_rastrigin_fun.py

The algorithm has been benchmarked against Differential Evolution (SciPy) and naive Monte Carlo (modestga.benchmark.methods.monte_carlo) using the Rastrigin function. Fig. 2 shows mean results from five runs for each case. The main parameters were as follows:

- population = 100,

- maximum number of generations = 1000,

- tolerance = 1e-3,

- mutation rate - three scenarios for GA and DE,

- workers (CPUs) = 1.

The Monte Carlo method did not take into account the tolerance and was simply stopped at 1000 iteration.

Note that unlike in modestga, in Differentian Evolution the population size is multiplied by the number of parameters. For exact meaning of the mutation parameter in Differential Evolution please refer to the SciPy documention.

Summary:

- in almost all considered mutation cases

modestgaachieves similar or better result in significantly shorter time for large-scale problems (N > 32), modestgais slower for small-scale problems, especially when the cost function evaluation is fast, as in this case,- the increasing time in Differential Evolution is due to the increasing size of population (it's multiplied by the number of parameters), but larger population seems to be inefective in solving the minimization problem.

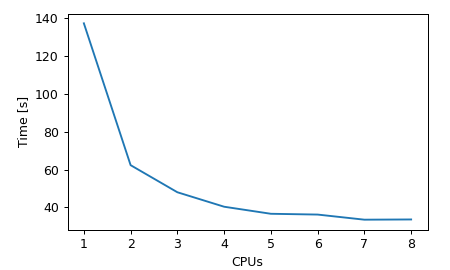

The performance of the parallel optimization was evaluated on a 64-dimensional Rastrigin function. Since the evaluation of this function is very fast, multiprocessing is beneficial only up to around 3 CPUs. Beyond that point considerable time is spend on inter-process communication. Thus, the optimum number of CPUs depends mainly on the function evaluation time.