💻 Welcome to the "Efficiently Serving Large Language Models" course! Taught by Travis Addair, Co-Founder and CTO at Predibase, this course will deepen your understanding of how to serve LLM applications efficiently.

In this course, you'll learn the optimization techniques needed to serve Large Language Models (LLMs) efficiently to a large number of users. Here's what you can expect to learn and experience:

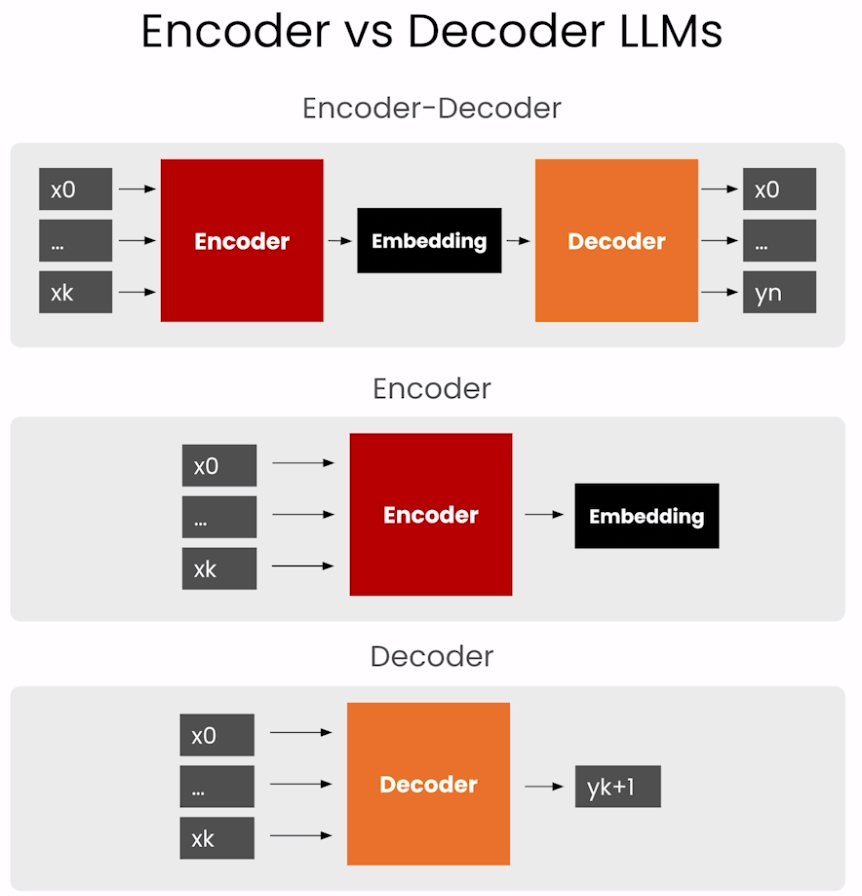

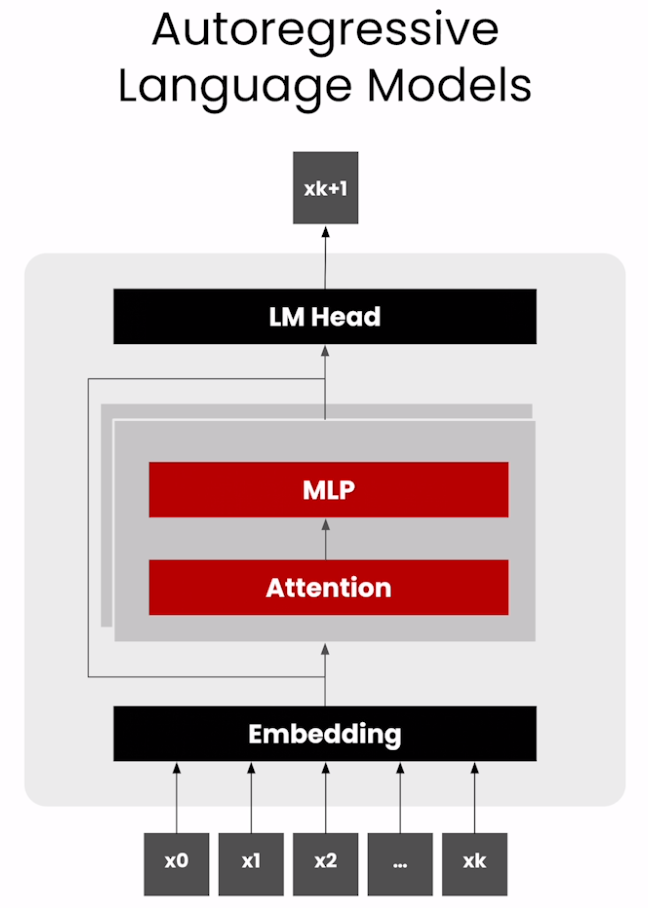

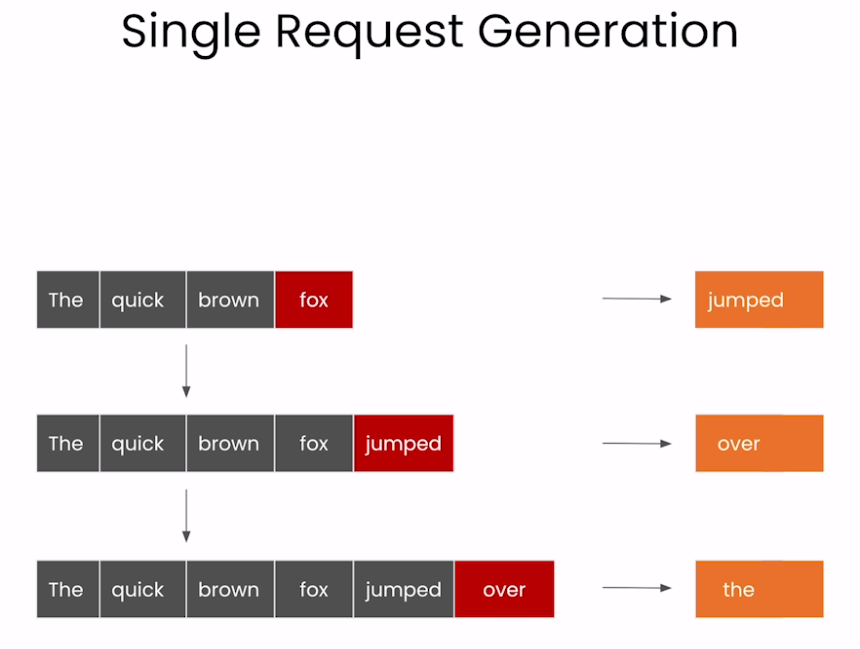

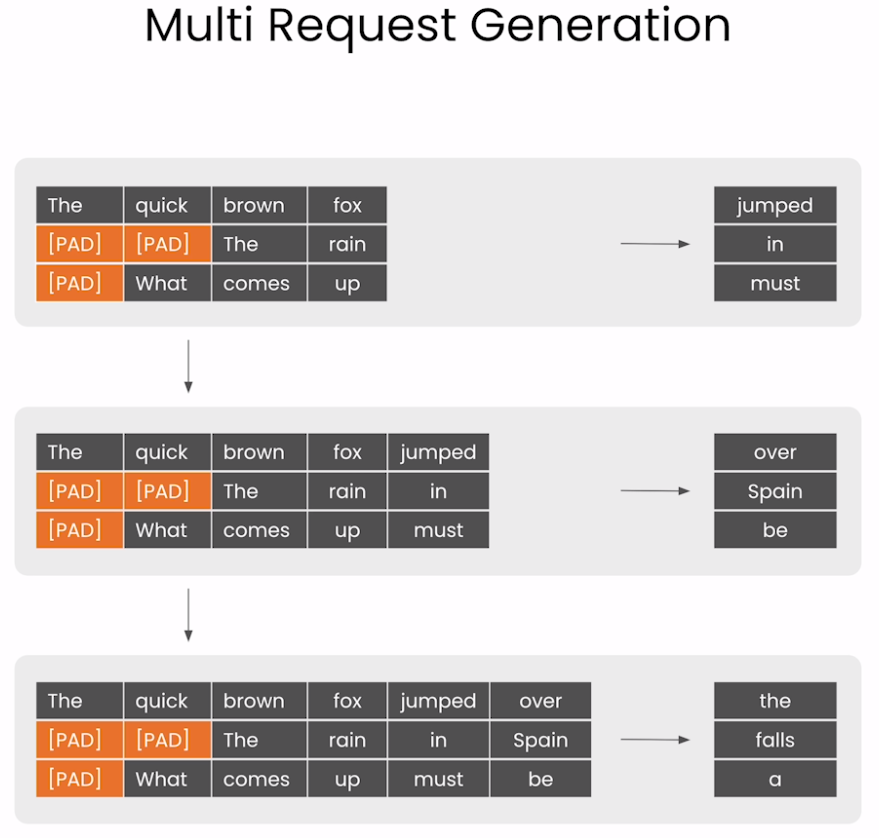

- 🤖 Auto-Regressive Models: Understand how auto-regressive large language models generate text token by token.

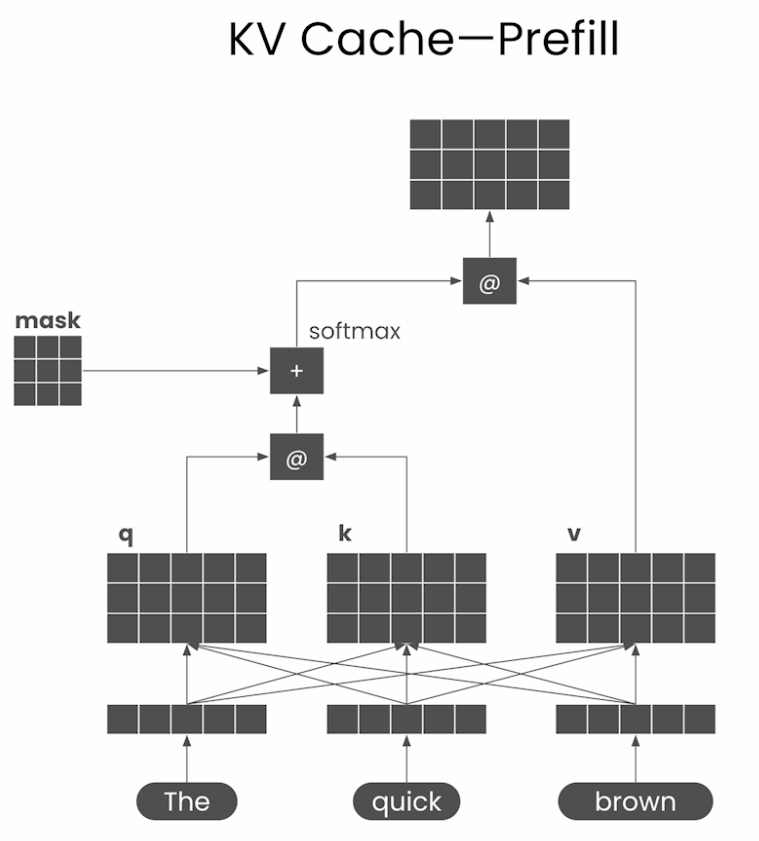

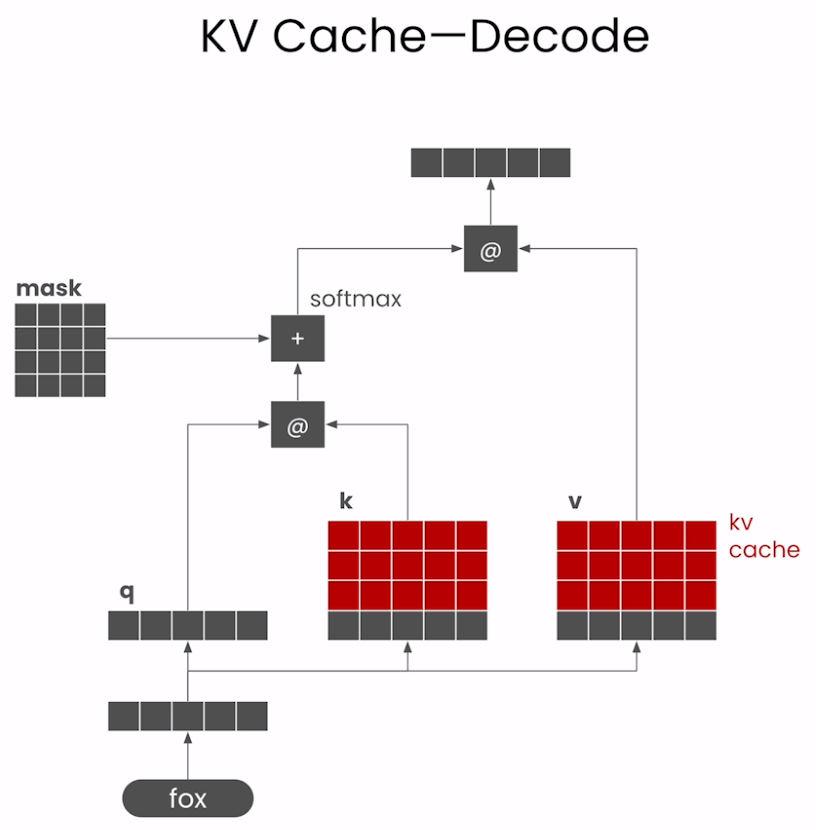

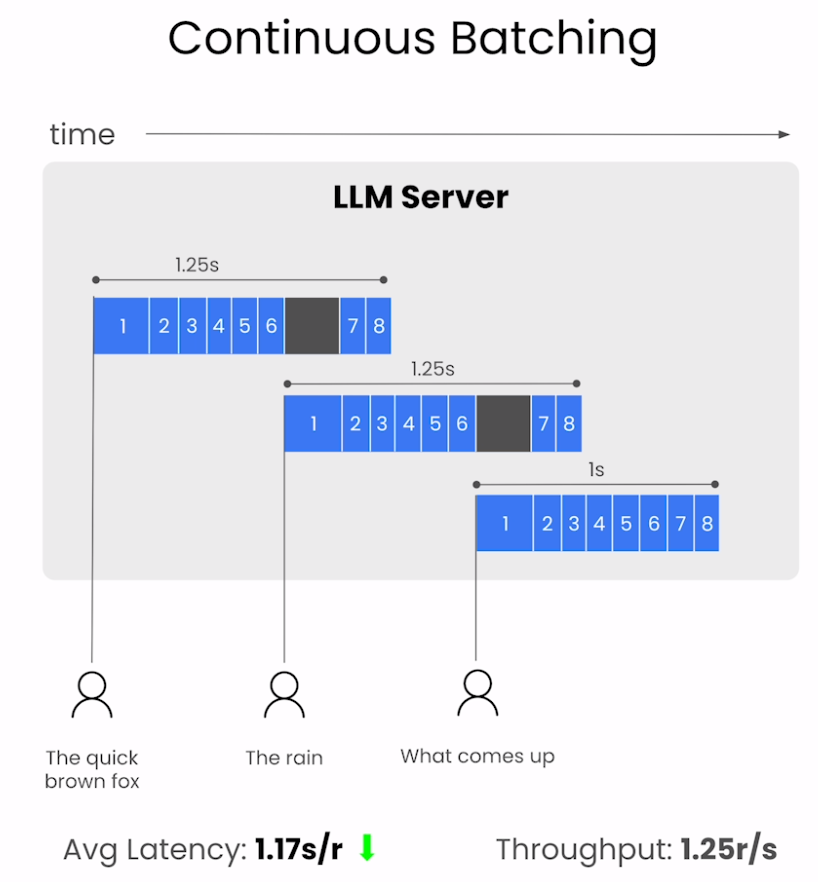

- 💻 LLM Inference Stack: Implement foundational elements of a modern LLM inference stack, including KV caching, continuous batching, and model quantization.

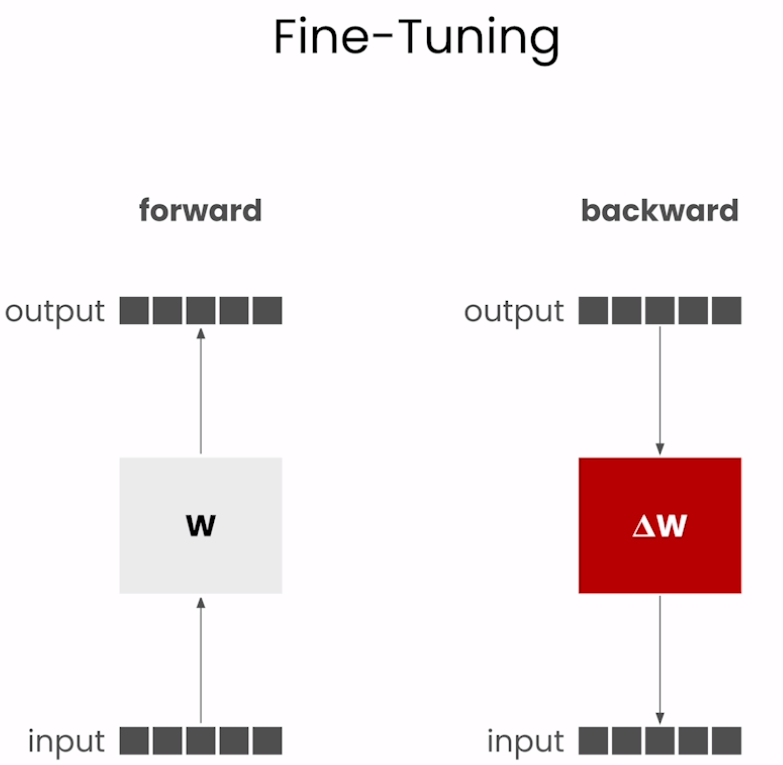

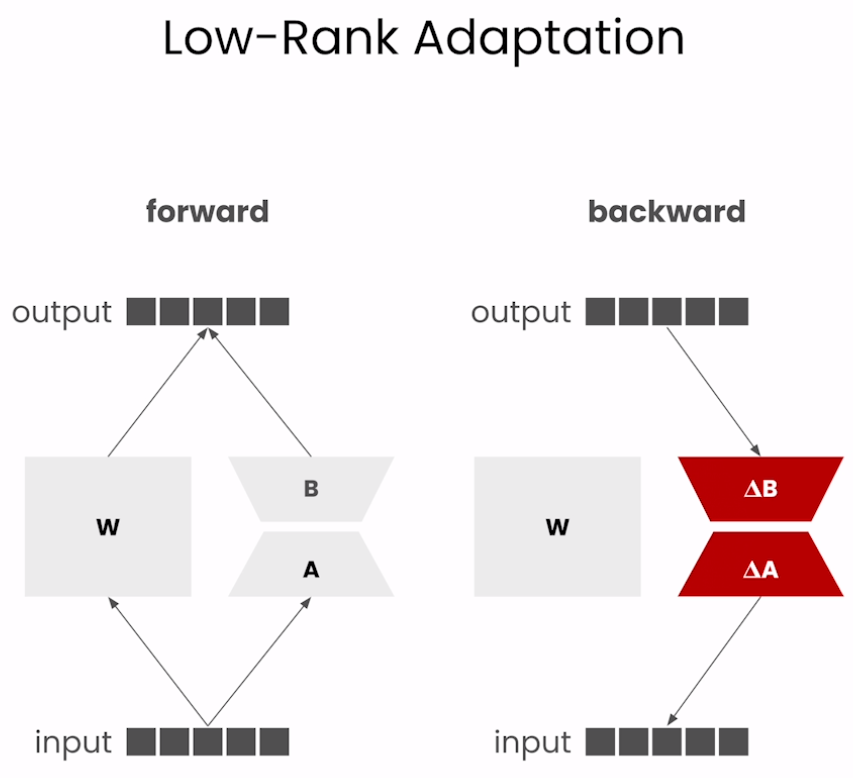

- 🛠️ LoRA Adapters: Explore how Low-Rank Adaptation (LoRA) works and how batching techniques allow different LoRA adapters to be served to multiple customers simultaneously.

- 🚀 Hands-On Experience: Get hands-on with Predibase’s LoRAX inference server to see these optimization techniques in action.

- 🔎 Learn techniques like KV caching to speed up text generation in Large Language Models (LLMs).

- 💻 Write code to efficiently serve LLM applications to a large number of users while considering performance trade-offs.

- 🛠️ Explore the fundamentals of Low-Rank Adaptation (LoRA) and how Predibase implements it in the LoRAX inference server.
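
To make the LoRA bullets above a little more concrete, here is a minimal sketch of the idea of serving several adapters on top of one shared base model. It is a toy illustration in plain Python, not how LoRAX implements it: the weight matrix `W`, the `ADAPTERS` table, the customer names, and the helper functions are all hypothetical. Each adapter is assumed to be a pair of small matrices `A` and `B`, so a request's output is the base output plus the low-rank correction `B(Ax)`.

```python
# Toy sketch: one frozen base weight shared by every request, plus a small
# per-customer LoRA adapter. All names, shapes, and values are made up.

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def add(u, v):
    """Element-wise sum of two equal-length vectors."""
    return [a + b for a, b in zip(u, v)]

# Shared base weight W (3 x 3). An identity matrix keeps the numbers readable.
W = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]

# Rank-1 adapters: A has shape (1 x 3), B has shape (3 x 1).
ADAPTERS = {
    "customer_a": {"A": [[1.0, 0.0, 0.0]], "B": [[0.5], [0.0], [0.0]]},
    "customer_b": {"A": [[0.0, 1.0, 1.0]], "B": [[0.0], [0.0], [2.0]]},
}

def lora_forward(x, adapter_id=None):
    """Return W x, plus B (A x) when the request names an adapter."""
    base = matvec(W, x)
    if adapter_id is None:
        return base
    adapter = ADAPTERS[adapter_id]
    low_rank = matvec(adapter["A"], x)      # project down to rank r (here r = 1)
    delta = matvec(adapter["B"], low_rank)  # project back up to the output size
    return add(base, delta)

def serve_batch(requests):
    """Process a mixed batch of (input, adapter_id) pairs against one base model."""
    return [lora_forward(x, adapter_id) for x, adapter_id in requests]

batch = [
    ([1.0, 2.0, 3.0], "customer_a"),
    ([1.0, 2.0, 3.0], "customer_b"),
    ([1.0, 2.0, 3.0], None),  # plain base model, no adapter
]
outputs = serve_batch(batch)
print(outputs)  # [[1.5, 2.0, 3.0], [1.0, 2.0, 13.0], [1.0, 2.0, 3.0]]
```

The same input produces a different output for each customer while `W` is stored only once. A production server would presumably replace the Python loop with batched GPU operations, which this sketch does not attempt to show.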

🌟 Travis Addair is the Co-Founder and CTO at Predibase, bringing extensive expertise to guide you through efficiently serving Large Language Models (LLMs).

🔗 To enroll in the course or learn more, visit deeplearning.ai.