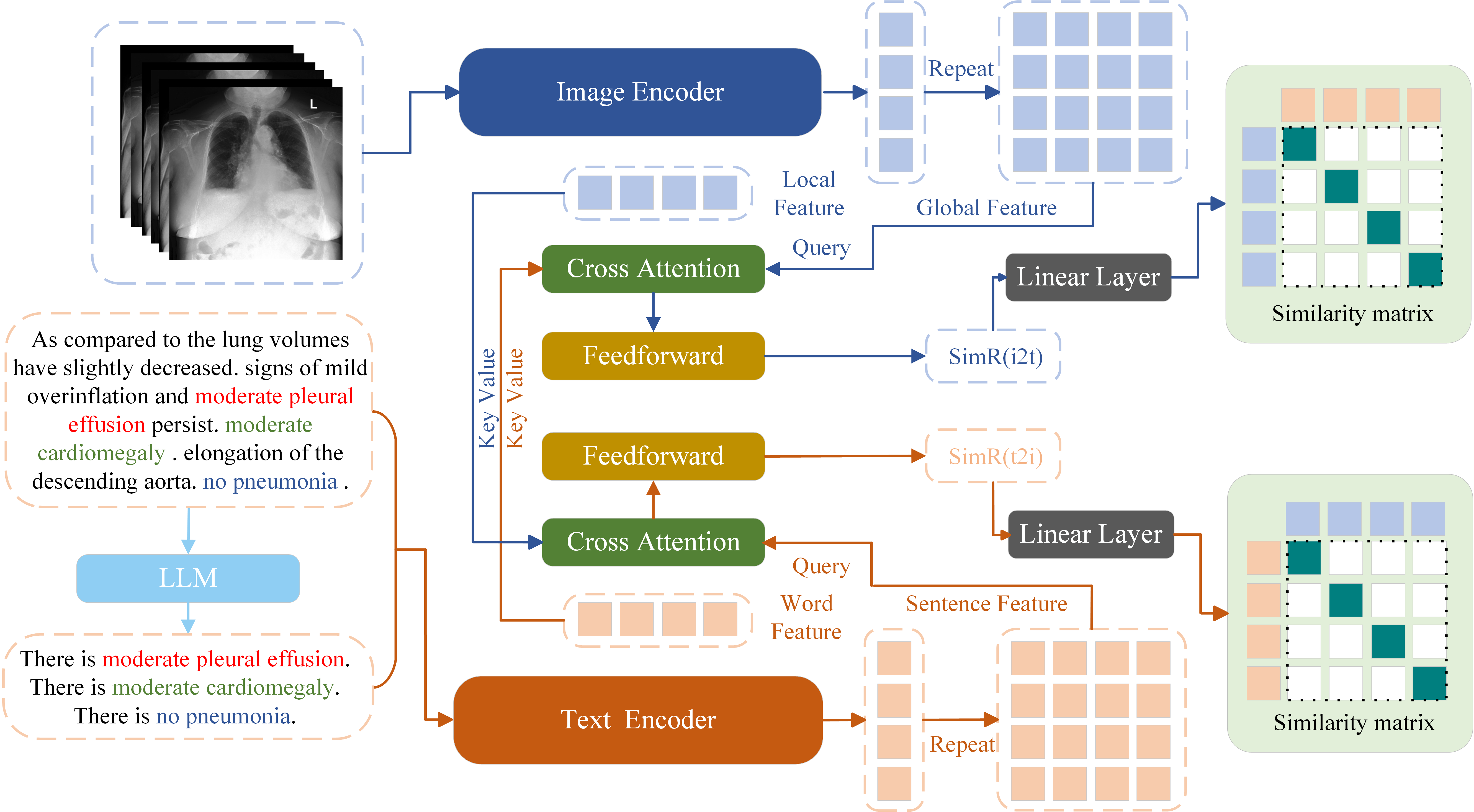

CARZero (Cross-Attention Alignment for Radiology Zero-Shot Classification) is a pioneering multimodal representation learning framework for medical image recognition with minimal labeling effort. It uses cross-attention to align image and radiology-report text representations, enabling label-efficient learning and accurate zero-shot classification of medical images.

CARZero Manuscript

Haoran Lai, Qingsong Yao, Zihang Jiang, Rongsheng Wang, Zhiyang He, Xiaodong Tao, S. Kevin Zhou

University of Science and Technology of China

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2024
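The cross-attention alignment described in the introduction can be illustrated with a toy, dependency-free sketch. It is not CARZero's implementation. The real model uses trained encoders and learned query/key/value projections, and our choice to let text tokens act as queries over image patches is an assumption. The helper names (`cross_attention`, `alignment_score`) are hypothetical. The score is the mean cosine similarity between each text token and the image feature it attends to.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, keys, values):
    """Scaled dot-product attention: every query attends over all keys."""
    scale = math.sqrt(len(keys[0]))
    dim = len(values[0])
    outputs = []
    for q in queries:
        weights = softmax([dot(q, k) / scale for k in keys])
        # Weighted sum of the value vectors, one output vector per query.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)])
    return outputs

def cosine(a, b):
    norm = math.sqrt(dot(a, a)) * math.sqrt(dot(b, b))
    return dot(a, b) / norm if norm > 0 else 0.0

def alignment_score(text_tokens, image_patches):
    """Hypothetical image-text score: text tokens query the image patches,
    then each token is compared with the image feature it attended to."""
    attended = cross_attention(text_tokens, image_patches, image_patches)
    sims = [cosine(t, a) for t, a in zip(text_tokens, attended)]
    return sum(sims) / len(sims)
```

For example, `alignment_score([[0.0, 1.0]], [[1.0, 0.0], [2.0, 0.0]])` returns `0.0`, since no image patch has any component along the text token's direction.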

- MIMIC-CXR: PhysioNet - Contains 377,110 images from 227,835 radiographic studies of 65,379 patients.

- Open-I: Open-I's FAQ page - Features 3,851 reports and 7,470 chest X-ray images, annotated for 18 multi-label diseases.

- PadChest: BIMCV - Includes 160,868 chest X-ray images covering 192 different diseases, 27% of which were manually annotated.

- ChestXray14: NIH's Box storage - Comprises 112,120 images with 14 disease labels from 30,805 patients.

- CheXpert: Stanford ML Group - Consists of 224,316 chest X-rays from 65,240 patients, with a consensus-annotated test set of 500 patients.

- ChestXDet10: GitHub - A subset of NIH ChestXray14, containing 3,543 images with box-level annotations for 10 diseases.
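Several of these test sets (for example Open-I and ChestXray14) are multi-label, so zero-shot results are naturally reported per disease. One common metric for this setting is AUROC averaged over labels. The sketch below assumes that metric and uses hypothetical helper names (`auroc`, `mean_auroc`). It computes AUROC through the Mann-Whitney formulation, which is quadratic in the number of images and is meant to show the definition, not to be fast.

```python
def auroc(labels, scores):
    """AUROC as the probability that a random positive outscores a random
    negative (Mann-Whitney U); ties count as half a win."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

def mean_auroc(label_matrix, score_matrix):
    """Macro-average AUROC over the label columns of a multi-label problem.
    Rows are images; columns are diseases."""
    num_labels = len(label_matrix[0])
    per_label = []
    for j in range(num_labels):
        ys = [row[j] for row in label_matrix]
        ss = [row[j] for row in score_matrix]
        per_label.append(auroc(ys, ss))
    return sum(per_label) / num_labels
```

With `labels = [[1, 0], [0, 1], [1, 1], [0, 0]]` and `scores = [[0.9, 0.2], [0.1, 0.8], [0.7, 0.3], [0.3, 0.4]]`, the first disease is ranked perfectly (1.0), the second gets 0.75, and `mean_auroc(labels, scores)` returns 0.875.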

The file-name lists are saved in the Dataset folder, which can be downloaded here. Please update the PATH in each file-name list to point to your image storage location.
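The exact layout of the file-name lists is not described here, so the following sketch assumes one image path per line (or a CSV whose first column is the path) that begins with a placeholder prefix such as `PATH`. The function names and the placeholder string are hypothetical; inspect your downloaded lists and adjust `old_prefix` accordingly.

```python
from pathlib import Path

def rewrite_prefix(lines, old_prefix, new_prefix):
    """Replace old_prefix at the start of each line; other lines are kept unchanged."""
    return [new_prefix + line[len(old_prefix):] if line.startswith(old_prefix) else line
            for line in lines]

def update_file_list(src, dst, old_prefix, new_prefix):
    """Read a file-name list, rewrite its path prefix, and save the result to dst."""
    lines = Path(src).read_text().splitlines()
    Path(dst).write_text("\n".join(rewrite_prefix(lines, old_prefix, new_prefix)) + "\n")
```

For example, `rewrite_prefix(["PATH/p10/img.jpg"], "PATH", "/data/mimic")` returns `["/data/mimic/p10/img.jpg"]`. Writing to a separate `dst` file keeps the original list intact in case the assumed prefix is wrong.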

For the image encoder, we use ViT-B/16, pre-trained with MAE and M3AE. Our pre-trained models are available for download here; place them in the ./pretrain_model folder.
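As a quick check on what "ViT-B/16" means, the arithmetic below counts the tokens the encoder sees: the image is cut into non-overlapping 16×16 patches, and ViT-Base uses a hidden width of 768. The 224×224 input resolution and the single class token are assumptions based on the standard MAE ViT-B/16 setup, not values taken from CARZero's configs; the function names are hypothetical.

```python
VIT_B_WIDTH = 768  # hidden size of the ViT-Base architecture

def vit_token_count(image_size=224, patch_size=16, cls_token=True):
    """Number of tokens produced by a square ViT patch embedding."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    per_side = image_size // patch_size
    # per_side x per_side patch tokens, plus an optional class token.
    return per_side * per_side + (1 if cls_token else 0)

def encoder_output_shape(image_size=224, patch_size=16, width=VIT_B_WIDTH):
    """(tokens, width) shape of the encoder output for one image."""
    return (vit_token_count(image_size, patch_size), width)
```

At 224×224 this gives a 14×14 grid, so `encoder_output_shape()` returns `(197, 768)`: 196 patch tokens plus one class token.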

For text encoding, BioBERT is fine-tuned on MIMIC and PadChest reports; the resulting model is available through Hugging Face:

```python
from transformers import AutoModel, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("Laihaoran/BioClinicalMPBERT")
model = AutoModel.from_pretrained("Laihaoran/BioClinicalMPBERT")
```

Start by installing PyTorch 1.12.1 with the right CUDA version, then clone this repository and install the dependencies.

```shell
$ conda install pytorch==1.12.1 torchvision==0.13.1 torchaudio==0.12.1 cudatoolkit=11.3 -c pytorch
$ git clone git@github.com:laihaoran/CARZero.git
$ cd CARZero
$ conda env create -f environment.yml
```

To evaluate our model, download the trained CARZero weights, place them in the ./pretrain_model folder, and run the test script:

```shell
sh test.sh
```

Example pretraining configurations can be found in ./configs. All training is done with the run.py script. To start training, run:

```shell
sh train.sh
```

Note: the batch size increases in stages, from 64 to 128 and finally to 256. Keep this progression in mind, and use a GPU with 80 GB of memory to accommodate the larger batch sizes.
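The staged batch size can be expressed as a simple lookup. The README gives only the sequence 64, 128, 256; the stage boundaries below (`STAGE_STARTS`) are hypothetical, and whether the stages are epochs within one run or separate training runs is not specified here, so treat this as a sketch to adapt to the actual files in ./configs.

```python
from bisect import bisect_right

# Hypothetical stage boundaries: only the batch-size sequence is documented.
STAGE_STARTS = [0, 10, 20]          # epoch at which each stage begins (assumed)
STAGE_BATCH_SIZES = [64, 128, 256]  # documented progression

def batch_size_for_epoch(epoch, starts=STAGE_STARTS, sizes=STAGE_BATCH_SIZES):
    """Return the batch size of the stage that contains `epoch`."""
    if epoch < starts[0]:
        raise ValueError("epoch precedes the first stage")
    # bisect_right finds the first stage start greater than epoch;
    # the stage we are in is the one just before it.
    return sizes[bisect_right(starts, epoch) - 1]
```

With these assumed boundaries, `[batch_size_for_epoch(e) for e in (0, 9, 10, 25)]` returns `[64, 64, 128, 256]`. Keeping the schedule in one table makes it easy to check peak memory against the largest stage before launching a run.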

```bibtex
@article{lai2024carzero,
  title={CARZero: Cross-Attention Alignment for Radiology Zero-Shot Classification},
  author={Lai, Haoran and Yao, Qingsong and Jiang, Zihang and Wang, Rongsheng and He, Zhiyang and Tao, Xiaodong and Zhou, S Kevin},
  journal={arXiv preprint arXiv:2402.17417},
  year={2024}
}
```

Our sincere thanks to the contributors of MAE, M3AE, and BERT for their foundational work, which greatly facilitated the development of CARZero.