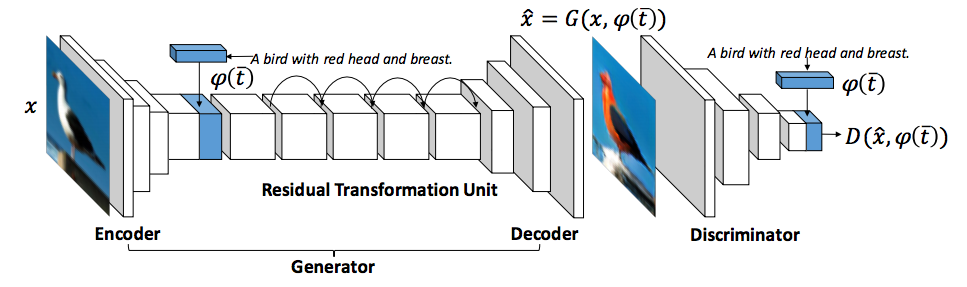

This is a PyTorch implementation of the paper Semantic Image Synthesis via Adversarial Learning.

- PyTorch 0.2

- Torchvision

- Pillow

- fastText.py (Note: if you have a problem when loading a pretrained model, try my fixed code)

- NLTK

Download pretrained English word vectors. The list of pretrained vectors is available on this page.
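To get a feel for what the downloaded vectors contain, here is a minimal sketch that parses the plain-text `.vec` layout fastText publishes alongside its binary models: a header line with the word count and dimension, then one word per line followed by its values. The helper name `load_vec` and the in-memory sample are hypothetical and only for illustration. This repository loads vectors through fastText.py, which may expect the binary model instead.

```python
import io


def load_vec(stream, limit=None):
    """Parse fastText text-format vectors into a dict of word -> list of floats.

    `stream` is any text file object; `limit` optionally caps the number of
    words read. Hypothetical helper, not part of this repository.
    """
    header = stream.readline().split()
    dim = int(header[1])  # header is "<word count> <dimension>"
    vectors = {}
    for i, line in enumerate(stream):
        if limit is not None and i >= limit:
            break
        parts = line.rstrip().split(" ")
        word, values = parts[0], parts[1:]
        if len(values) != dim:
            continue  # skip lines that do not match the declared dimension
        vectors[word] = [float(v) for v in values]
    return vectors, dim


# Tiny in-memory sample standing in for a real .vec file.
sample = io.StringIO("2 3\nbird 0.1 0.2 0.3\nflower 0.4 0.5 0.6\n")
vectors, dim = load_vec(sample)
```

For a real file, pass `open(path, encoding="utf-8")` instead of the `StringIO` sample, and consider setting `limit`, since the full English vocabulary is large.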

The caption data is from this repository. After downloading, modify the CONFIG file so that all dataset paths point to the data you downloaded.

scripts/train_text_embedding_[birds/flowers].sh

Train a visual-semantic embedding model using the method of Kiros et al..scripts/train_[birds/flowers].sh

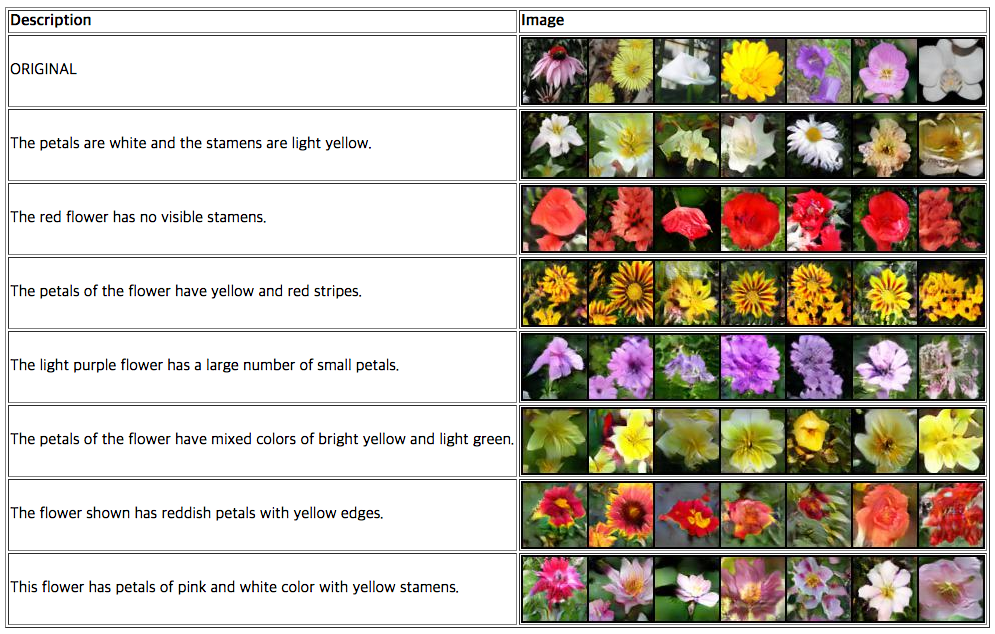

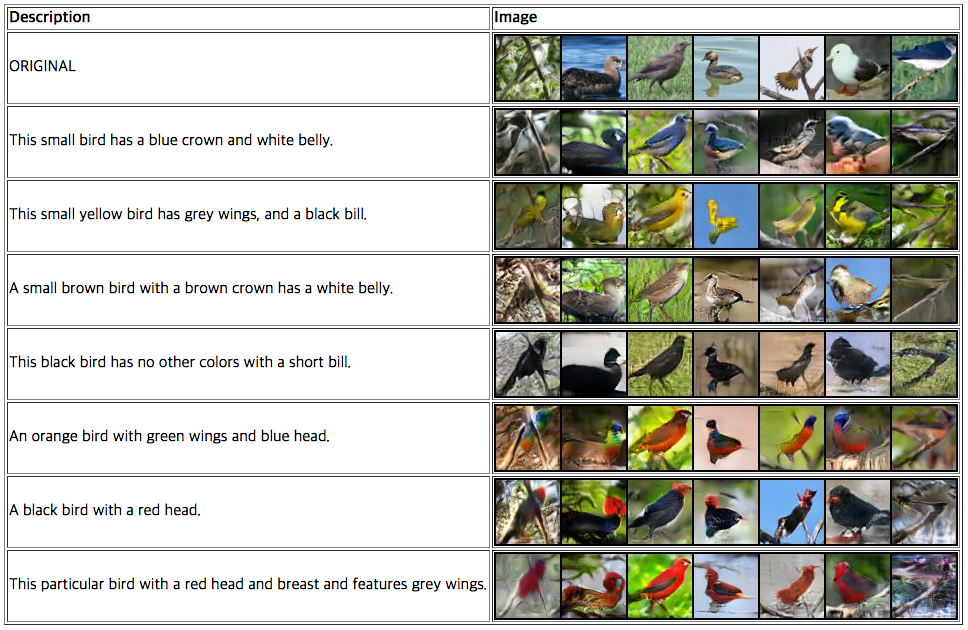

Train a GAN using a pretrained text embedding model.scripts/test_[birds/flowers].sh

Generate some examples using original images and semantically relevant texts.
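The text embedding step refers to the visual-semantic embedding of Kiros et al., which is trained with a pairwise ranking (max-margin) loss over matching and mismatching image-text pairs. The sketch below illustrates that loss in plain Python on toy vectors. The function names and the margin of 0.2 are assumptions made for illustration, and the training scripts in this repository may use a different variant or different hyperparameters.

```python
import math


def normalize(v):
    """Scale a vector to unit length (left unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def cosine(a, b):
    """Cosine similarity between two vectors."""
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


def pairwise_ranking_loss(image_embs, text_embs, margin=0.2):
    """Hinge loss pushing matching pairs above mismatching ones by `margin`.

    image_embs[i] and text_embs[i] are assumed to describe the same sample.
    """
    n = len(image_embs)
    scores = [[cosine(img, txt) for txt in text_embs] for img in image_embs]
    loss = 0.0
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            # Image i should score higher with its own text than with text j.
            loss += max(0.0, margin - scores[i][i] + scores[i][j])
            # Text i should score higher with its own image than with image j.
            loss += max(0.0, margin - scores[i][i] + scores[j][i])
    return loss


images = [[1.0, 0.0], [0.0, 1.0]]
aligned = pairwise_ranking_loss(images, [[1.0, 0.0], [0.0, 1.0]])
swapped = pairwise_ranking_loss(images, [[0.0, 1.0], [1.0, 0.0]])
```

With these toy inputs, `aligned` is zero because every matching pair already beats the mismatching ones by more than the margin, while `swapped` is positive because each image is closest to the wrong text.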

We would like to thank Hao Dong, who is one of the first authors of the paper Semantic Image Synthesis via Adversarial Learning, for providing helpful advice for the implementation.