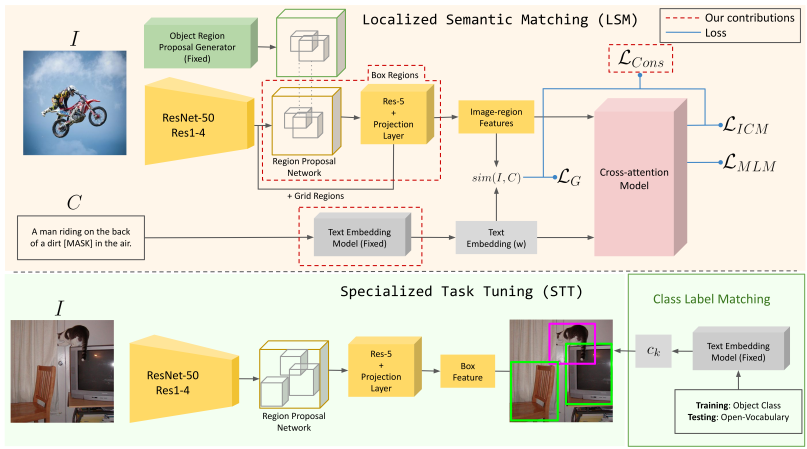

2022-07 (v0.1): This repository is the official PyTorch implementation of our GCPR 2022 paper: Localized Vision-Language Matching for Open-vocabulary Object Detection

- News

- Table of Contents

- Installation

- Prepare datasets

- Train and validate Open Vocabulary Detection

- Acknowledgements

- License

- Citation

- Linux or macOS with Python ≥ 3.6

- PyTorch ≥ 1.8, installed following the instructions at pytorch.org. Check that the PyTorch version matches both the one required by Detectron2 and your CUDA version.

- Detectron2: follow Detectron2 installation instructions.

The code was originally tested with python=3.8.13, torch=1.10.0, and cuda=11.2 on Ubuntu 20.04.
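Before installing Detectron2, it can help to confirm that the interpreter and PyTorch meet the minimum versions listed above. The following is a minimal sketch, not part of this repository: the helper names `parse_version` and `check_environment` are hypothetical, and the script only reports what it finds without installing anything.

```python
import re
import sys


def parse_version(text):
    """Turn a version string such as '1.10.0+cu113' into a tuple like (1, 10, 0)."""
    parts = []
    # Drop local build suffixes ('+cu113') and read the leading digits of each piece.
    for piece in text.split("+")[0].split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def check_environment(min_python=(3, 6), min_torch=(1, 8)):
    """Report whether Python and PyTorch satisfy the minimum versions."""
    report = {"python_ok": sys.version_info[:2] >= min_python}
    try:
        import torch
    except ImportError:
        # PyTorch is not installed in this environment.
        report["torch_version"] = None
        report["torch_ok"] = False
        report["cuda_available"] = False
    else:
        report["torch_version"] = str(torch.__version__)
        report["torch_ok"] = parse_version(str(torch.__version__))[:2] >= min_torch
        report["cuda_available"] = torch.cuda.is_available()
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

The check does not verify that the installed PyTorch build matches the CUDA toolkit or the Detectron2 requirements; that still has to be confirmed against the Detectron2 installation instructions.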

    git clone https://github.com/lmb-freiburg/locov.git
    cd locov

- Download MS COCO training and validation datasets. Download detection and caption annotations for retrieval from the original page.

- Save the data in datasets_data

- Run the script to create the annotation subsets that include only base and novel categories

      python tools/convert_annotations_to_ov_sets.py

- Run the script to compute and save the object embeddings.

      python tools/coco_bert_embeddings.py

- Or download the precomputed ones: Embeddings

- Train OLN on the MSCOCO known classes and extract the proposals for the whole training set.

- Or download the precomputed proposals for the MSCOCO training set, obtained by training on known classes only: Proposals (3.9GB)
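The annotation-subset step above (creating files that include only base or only novel categories) can be pictured as a filter over a COCO-format annotation dictionary. The sketch below is an illustration under stated assumptions, not the logic of `tools/convert_annotations_to_ov_sets.py`: the function name `filter_coco_by_categories` is hypothetical, the category names in the toy data are only examples, and dropping images that lose all their annotations is a design choice made here for simplicity.

```python
def filter_coco_by_categories(coco, keep_names):
    """Return a copy of a COCO-style dict restricted to the given category names."""
    keep_names = set(keep_names)
    categories = [c for c in coco["categories"] if c["name"] in keep_names]
    keep_ids = {c["id"] for c in categories}
    # Keep only annotations whose category survived the filter.
    annotations = [a for a in coco["annotations"] if a["category_id"] in keep_ids]
    # Assumption: images left without any annotation are dropped as well.
    image_ids = {a["image_id"] for a in annotations}
    images = [im for im in coco["images"] if im["id"] in image_ids]
    # Other top-level keys (e.g. "info", "licenses") are carried over unchanged.
    return dict(coco, categories=categories, annotations=annotations, images=images)


# Toy COCO-style data with two images and two categories (illustrative only).
toy = {
    "images": [{"id": 1}, {"id": 2}],
    "annotations": [
        {"id": 10, "image_id": 1, "category_id": 1},
        {"id": 11, "image_id": 2, "category_id": 2},
    ],
    "categories": [{"id": 1, "name": "person"}, {"id": 2, "name": "umbrella"}],
}

subset = filter_coco_by_categories(toy, ["person"])
print(len(subset["images"]), len(subset["annotations"]), len(subset["categories"]))
```

For a real annotation file, the dictionary would be loaded with `json.load` and the result written back with `json.dump`; the actual base and novel category lists are defined by the repository's script.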

Run the script to train the Localized Semantic Matching stage:

    python train_ovnet.py --num-gpus 8 --resume --config-file configs/coco_lsm.yaml

Run the script to train the Specialized Task Tuning stage:

    python train_ovnet.py --num-gpus 8 --resume --config-file configs/coco_stt.yaml MODEL.WEIGHTS path_to_final_weights_lsm_stage

Run the script with `--eval-only` to evaluate a trained model:

    python train_ovnet.py --num-gpus 8 --resume --eval-only --config-file configs/coco_stt.yaml \
        MODEL.WEIGHTS output/model-weights.pth \
        OUTPUT_DIR output/eval_locov

Pretrained models can be found in the models directory.

| Model | AP-novel | AP50-novel | AP-known | AP50-known | AP-general | AP50-general | Weights |
|---|---|---|---|---|---|---|---|
| LocOv | 17.219 | 30.109 | 33.499 | 53.383 | 28.129 | 45.719 | LocOv |
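The two training stages and the evaluation run described above share most of their arguments, so they can be assembled from one place when scripting experiments. This is a minimal sketch, assuming the flags and config paths quoted in this README; the helper `build_commands` is hypothetical, and `path_to_final_weights_lsm_stage` remains a placeholder to be replaced with the real checkpoint path.

```python
import shlex


def build_commands(lsm_weights, eval_weights="output/model-weights.pth", num_gpus=8):
    """Build argument lists for the LSM stage, the STT stage, and evaluation."""
    base = ["python", "train_ovnet.py", "--num-gpus", str(num_gpus), "--resume"]
    stt_config = ["--config-file", "configs/coco_stt.yaml"]
    return {
        # Stage 1: train with the LSM config.
        "lsm": base + ["--config-file", "configs/coco_lsm.yaml"],
        # Stage 2: train with the STT config, starting from the stage-1 weights.
        "stt": base + stt_config + ["MODEL.WEIGHTS", lsm_weights],
        # Evaluation only, writing results to a separate output directory.
        "eval": base + ["--eval-only"] + stt_config
        + ["MODEL.WEIGHTS", eval_weights, "OUTPUT_DIR", "output/eval_locov"],
    }


commands = build_commands("path_to_final_weights_lsm_stage")
for name, argv in commands.items():
    # Quote each argument so the printed line can be pasted into a shell.
    print(name, "->", " ".join(shlex.quote(arg) for arg in argv))
```

Each list can be passed to `subprocess.run(argv, check=True)` from the repository root; the sketch only prints the commands so that nothing is launched by accident.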

This work was supported by Deutscher Akademischer Austauschdienst - German Academic Exchange Service (DAAD) Research Grants - Doctoral Programmes in Germany, 2019/20; grant number: 57440921.

The Deep Learning Cluster used in this work is partially funded by the German Research Foundation (DFG) - 417962828.

We especially thank the creators of the following GitHub repositories for providing helpful code:

- Zareian et al. for their open-vocabulary setup and code: OVR-CNN

This work is licensed under a Creative Commons Attribution 3.0 Unported License. To view a copy of this license, visit http://creativecommons.org/licenses/by/3.0/ or send a letter to Creative Commons, PO Box 1866, Mountain View, CA 94042, USA.

If you use our repository or find it useful in your research, please cite the following paper:

    @InProceedings{Bravo2022locov,
      author = "M. Bravo and S. Mittal and T. Brox",
      title = "Localized Vision-Language Matching for Open-vocabulary Object Detection",
      booktitle = "German Conference on Pattern Recognition (GCPR) 2022",
      year = "2022"
    }