- To run MuJoCo environments, first install mujoco-py and the suggested modified version of gym, which supports MuJoCo 1.50.

- Make sure your PyTorch version is at least 0.4.0.

- If you have a GPU, we recommend setting OMP_NUM_THREADS to 1, because PyTorch creates additional threads when performing computations, which can hurt multiprocessing performance (the problem is most serious on Linux, where multiprocessing can be even slower than a single thread):

export OMP_NUM_THREADS=1
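The same setting can also be applied from inside a Python entry script. The sketch below is a minimal illustration, not part of this repository; it assumes the variable has to be set before PyTorch is imported, since OpenMP runtimes typically read it when they initialize.

```python
import os

# Assumption: this runs at the very top of the entry script, before
# `import torch`, so the OpenMP runtime sees the value when it starts up.
os.environ["OMP_NUM_THREADS"] = "1"

# import torch  # import PyTorch only after the variable has been set

print("OMP_NUM_THREADS =", os.environ["OMP_NUM_THREADS"])
```

Exporting the variable in the shell, as shown above, has the advantage of also covering any worker processes launched from that shell.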

- Code structure:

  - Agent collects samples;
  - Trainer facilitates learning and training;
  - Evaluator tests trained models in new environments.
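To make the division of labour concrete, here is a toy sketch of the three roles. Every class, method, and parameter in it (`ToyEnv`, `GaussianPolicy`, `collect`, `update`, `evaluate`) is hypothetical and does not reflect this repository's actual interfaces, and a simple elite-averaging update stands in for the real policy gradient algorithms.

```python
import random


class ToyEnv:
    """Hypothetical 1-D task: reward is highest when the action equals `target`."""

    def __init__(self, target=0.5, horizon=10):
        self.target, self.horizon, self.t = target, horizon, 0

    def reset(self):
        self.t = 0
        return 0.0  # dummy observation

    def step(self, action):
        self.t += 1
        reward = -(action - self.target) ** 2
        return 0.0, reward, self.t >= self.horizon


class GaussianPolicy:
    """Hypothetical policy: samples actions around a learnable mean."""

    def __init__(self, mean=0.0, std=0.3):
        self.mean, self.std = mean, std

    def act(self, rng=None, deterministic=False):
        return self.mean if deterministic else rng.gauss(self.mean, self.std)


class Agent:
    """Collects (action, reward) samples by running the policy in the environment."""

    def __init__(self, env, policy, seed=0):
        self.env, self.policy, self.rng = env, policy, random.Random(seed)

    def collect(self, num_episodes):
        batch = []
        for _ in range(num_episodes):
            self.env.reset()
            done = False
            while not done:
                action = self.policy.act(self.rng)
                _, reward, done = self.env.step(action)
                batch.append((action, reward))
        return batch


class Trainer:
    """Updates the policy from samples (stand-in rule: average the best half)."""

    def update(self, policy, batch):
        ranked = sorted(batch, key=lambda sample: sample[1], reverse=True)
        elite = ranked[: max(1, len(ranked) // 2)]
        policy.mean = sum(action for action, _ in elite) / len(elite)


class Evaluator:
    """Runs the policy deterministically and reports the average episode return."""

    def evaluate(self, env, policy, num_episodes=5):
        total = 0.0
        for _ in range(num_episodes):
            env.reset()
            done = False
            while not done:
                _, reward, done = env.step(policy.act(deterministic=True))
                total += reward
        return total / num_episodes


env, policy = ToyEnv(), GaussianPolicy()
agent, trainer, evaluator = Agent(env, policy), Trainer(), Evaluator()
before = evaluator.evaluate(env, policy)
for _ in range(10):
    trainer.update(policy, agent.collect(num_episodes=20))
after = evaluator.evaluate(env, policy)
print(f"average return before: {before:.3f}, after: {after:.3f}")
```

Keeping sample collection, learning, and evaluation in separate objects means any one of them can be swapped (for example, a different update rule in the trainer) without touching the other two.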

All examples are placed under the config directory.

- After training several agents on one environment, you can plot their training curves in one figure with:

python utils/plot.py --env-name <ENVIRONMENT_NAME> --algo <ALGORITHM1,...,ALGORITHMn> --x_len <ITERATION_NUM> --save_data

- Trust Region Policy Optimization (TRPO) -> config/pg/trpo_gym.py

- Proximal Policy Optimization (PPO) -> config/pg/ppo_gym.py

- Synchronous A3C (A2C) -> config/pg/a2c_gym.py

python config/pg/ppo_gym.py --env-name Hopper-v2 --max-iter-num 1000 --gpu

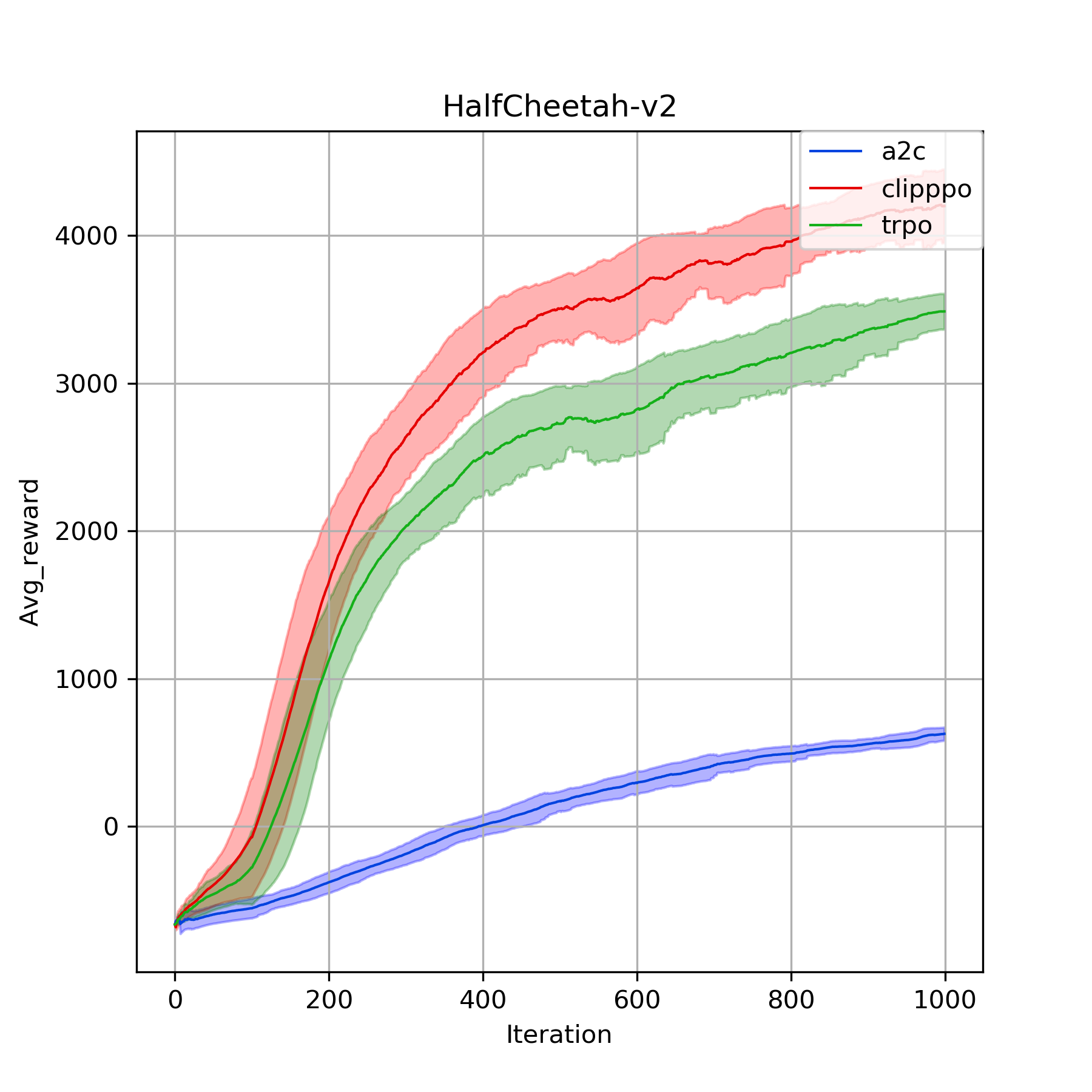

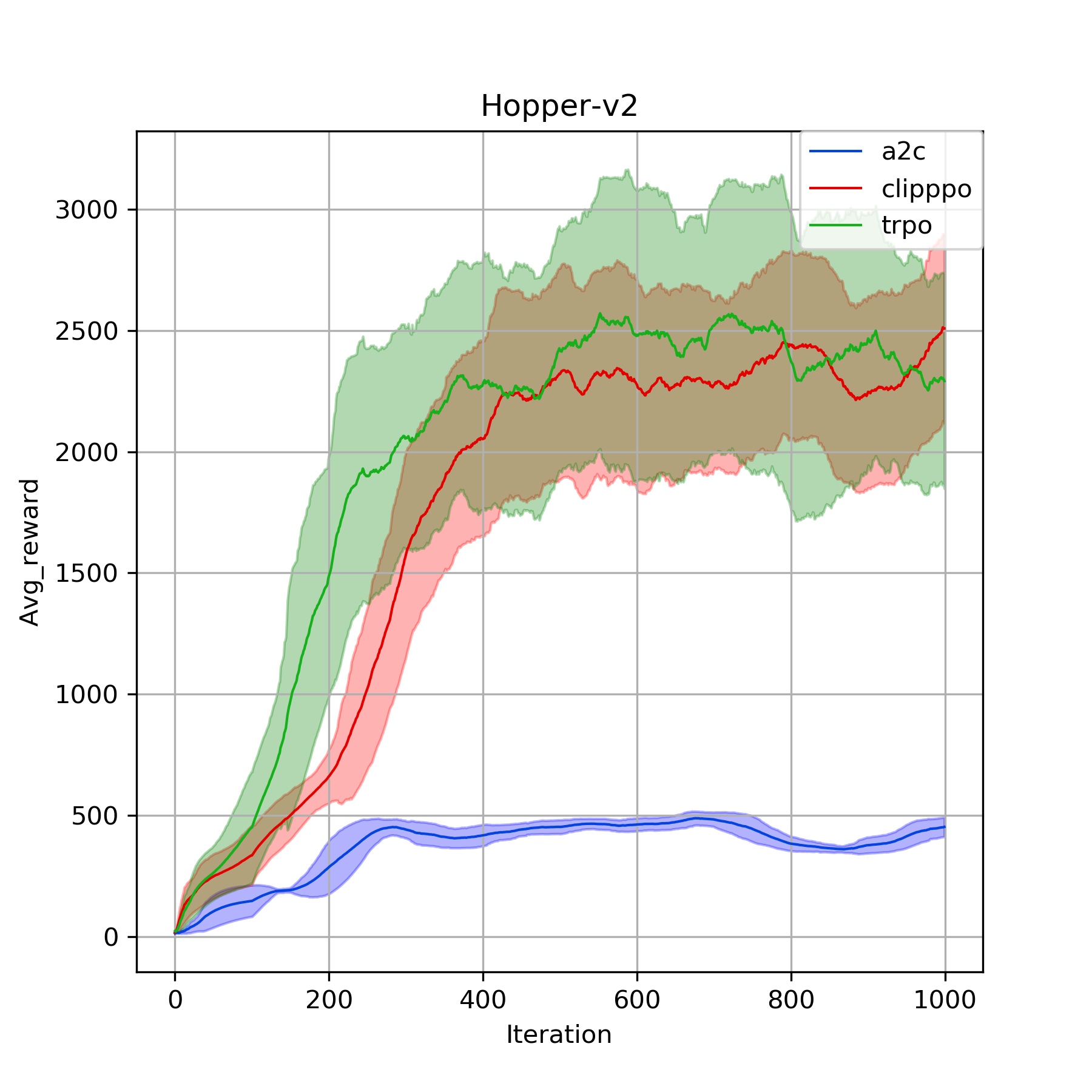

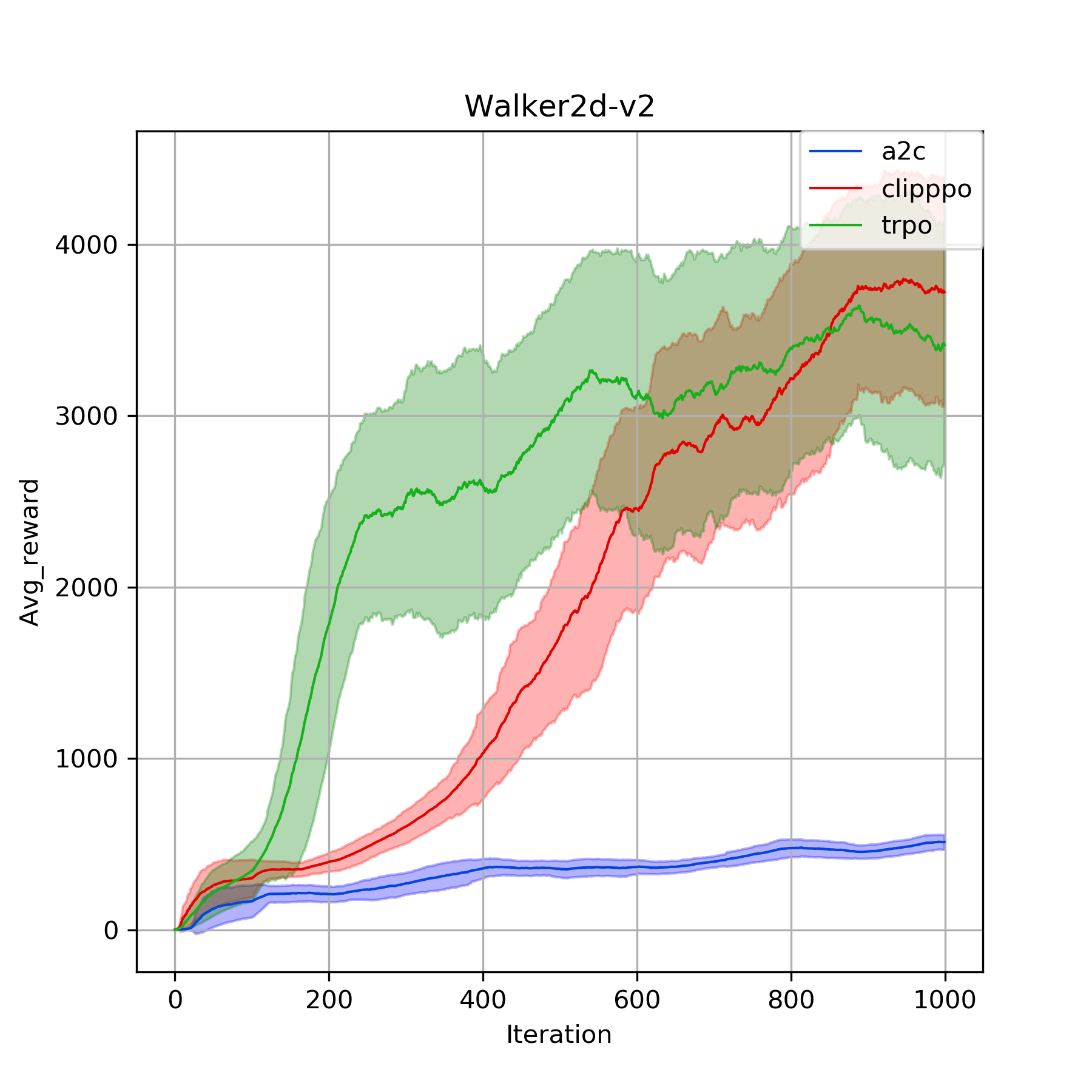

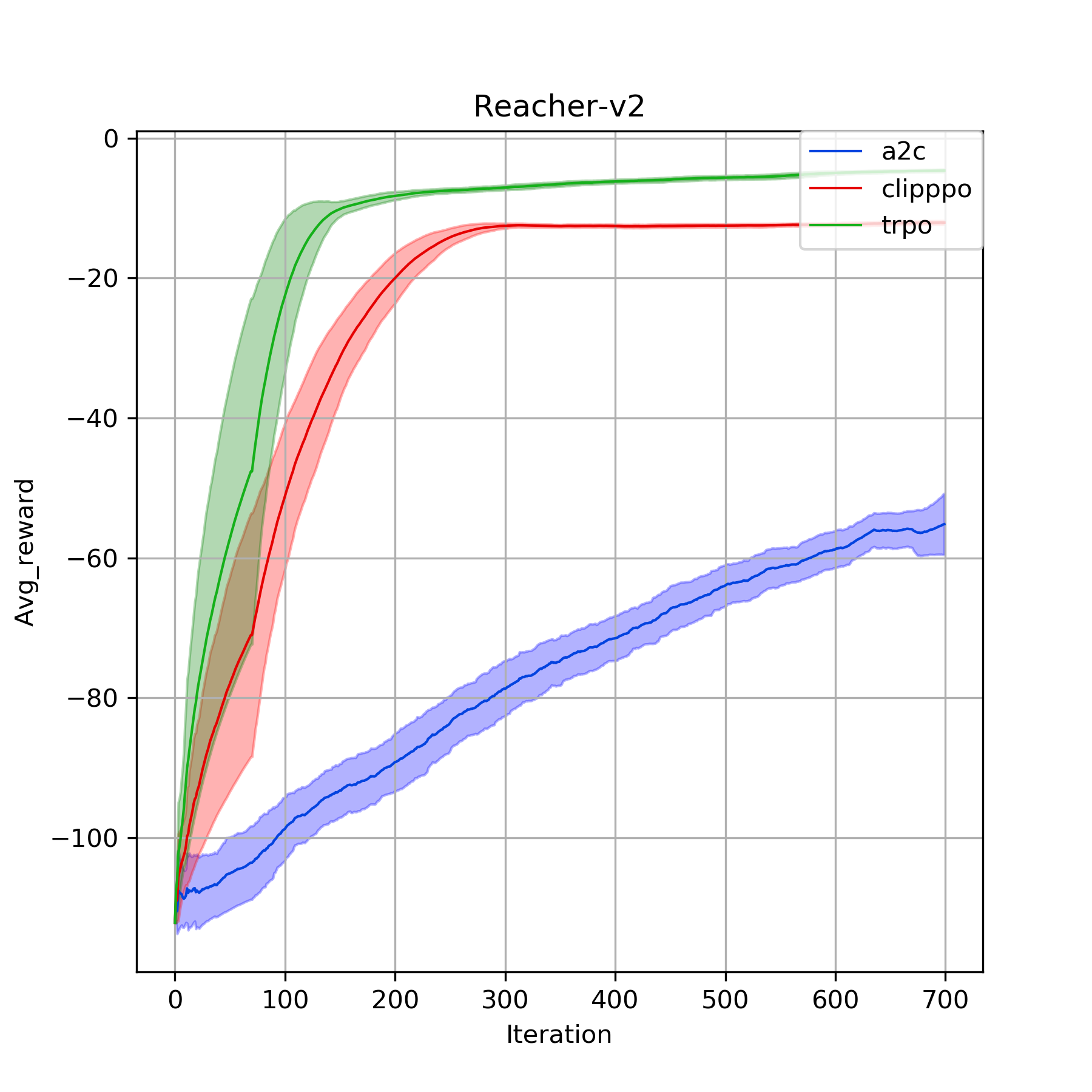

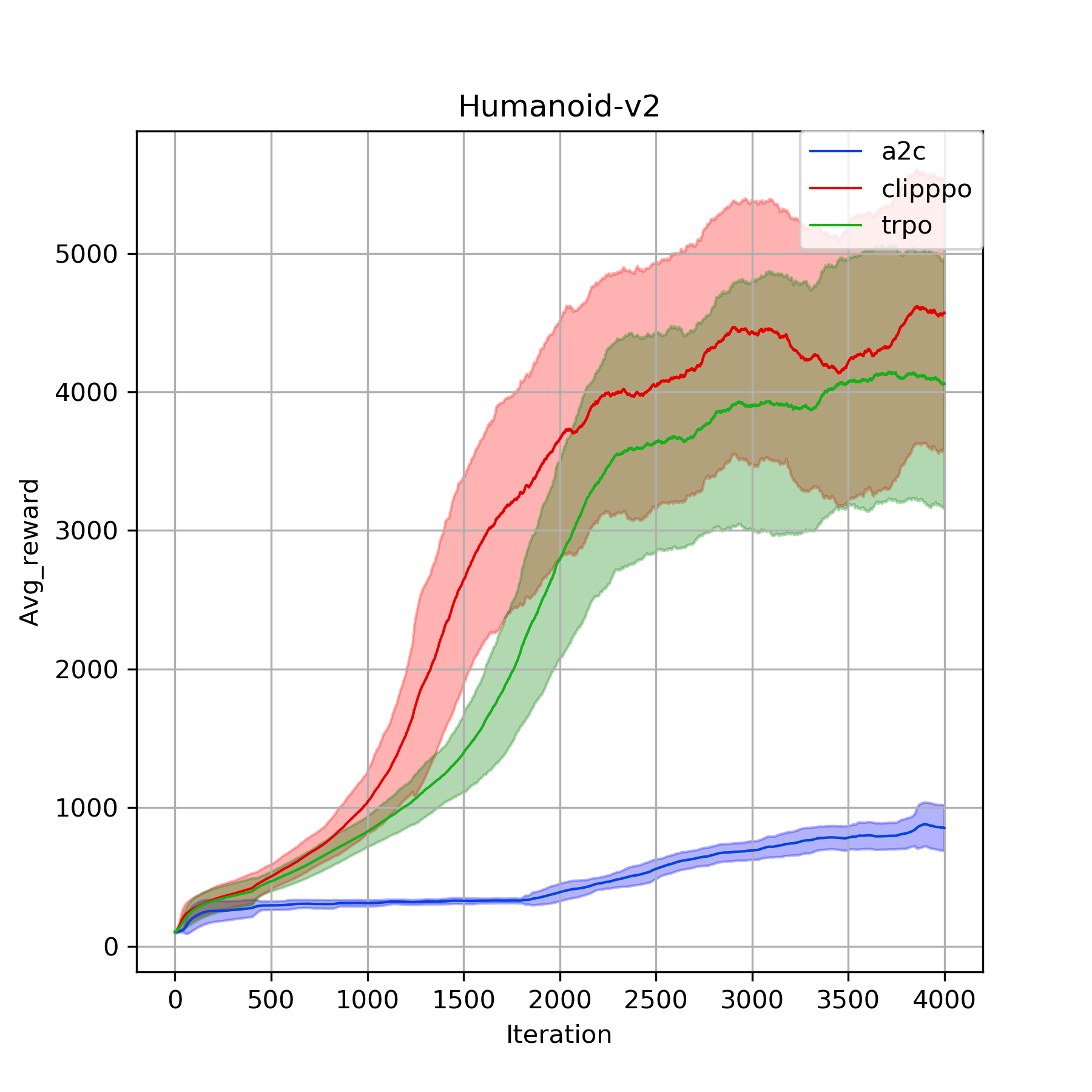

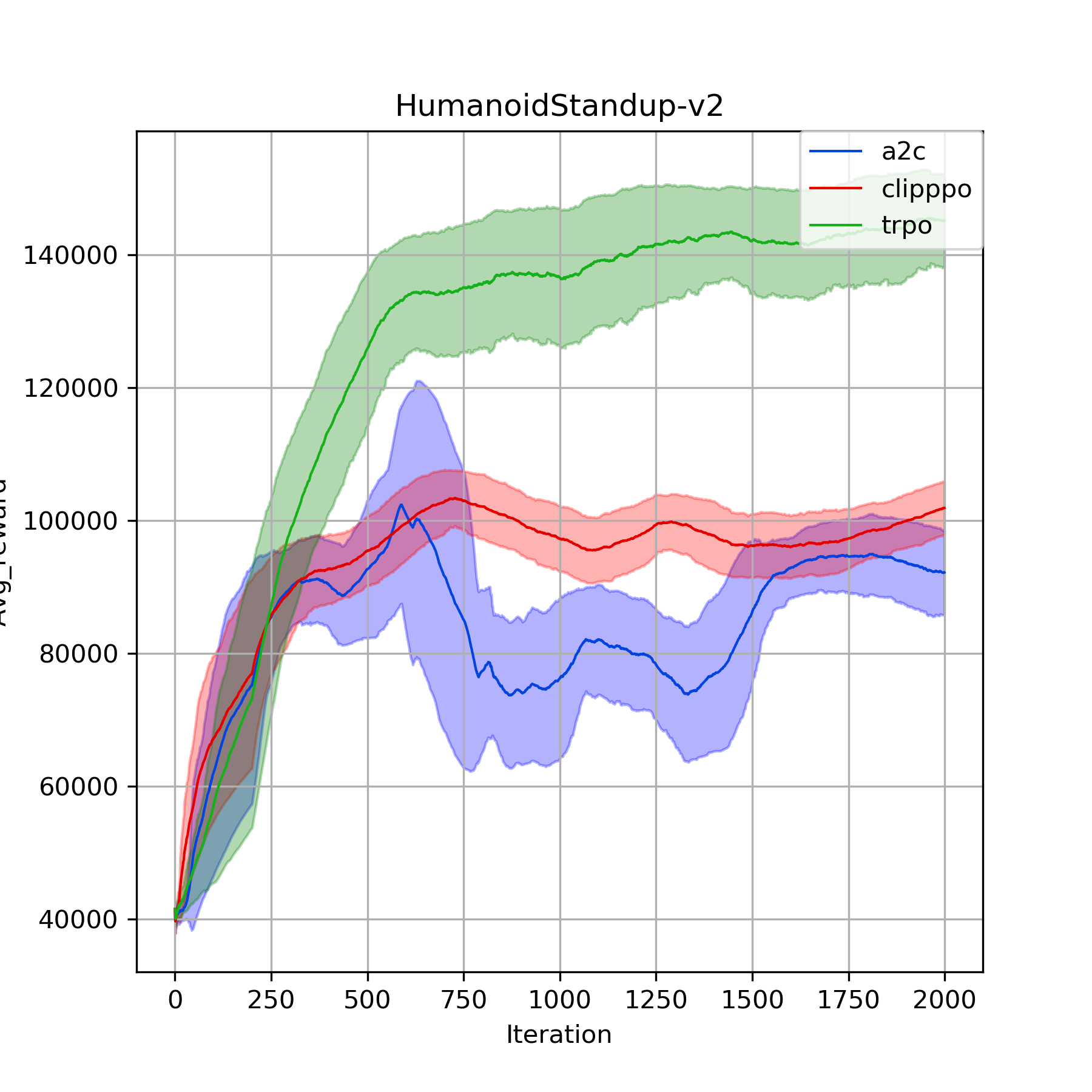

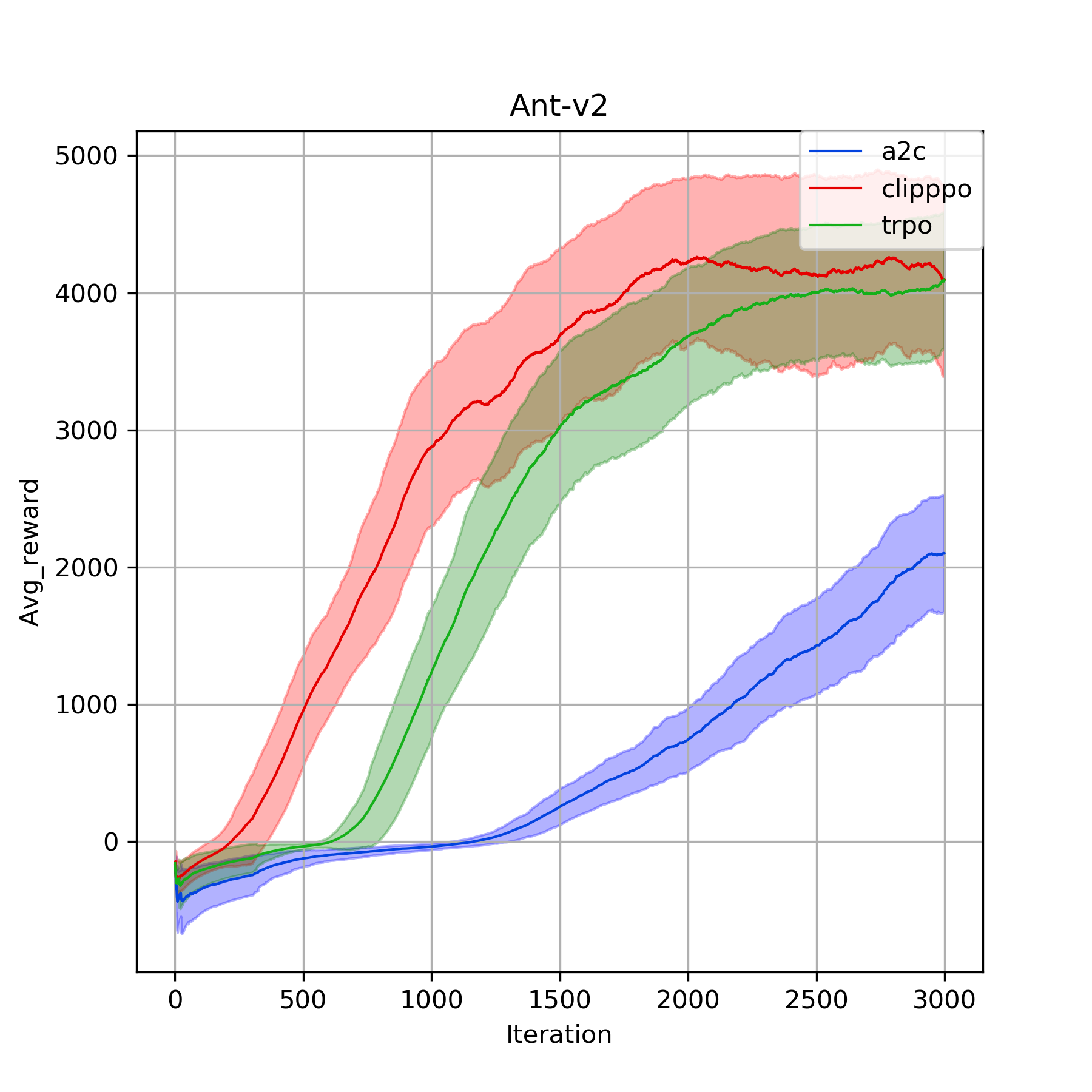

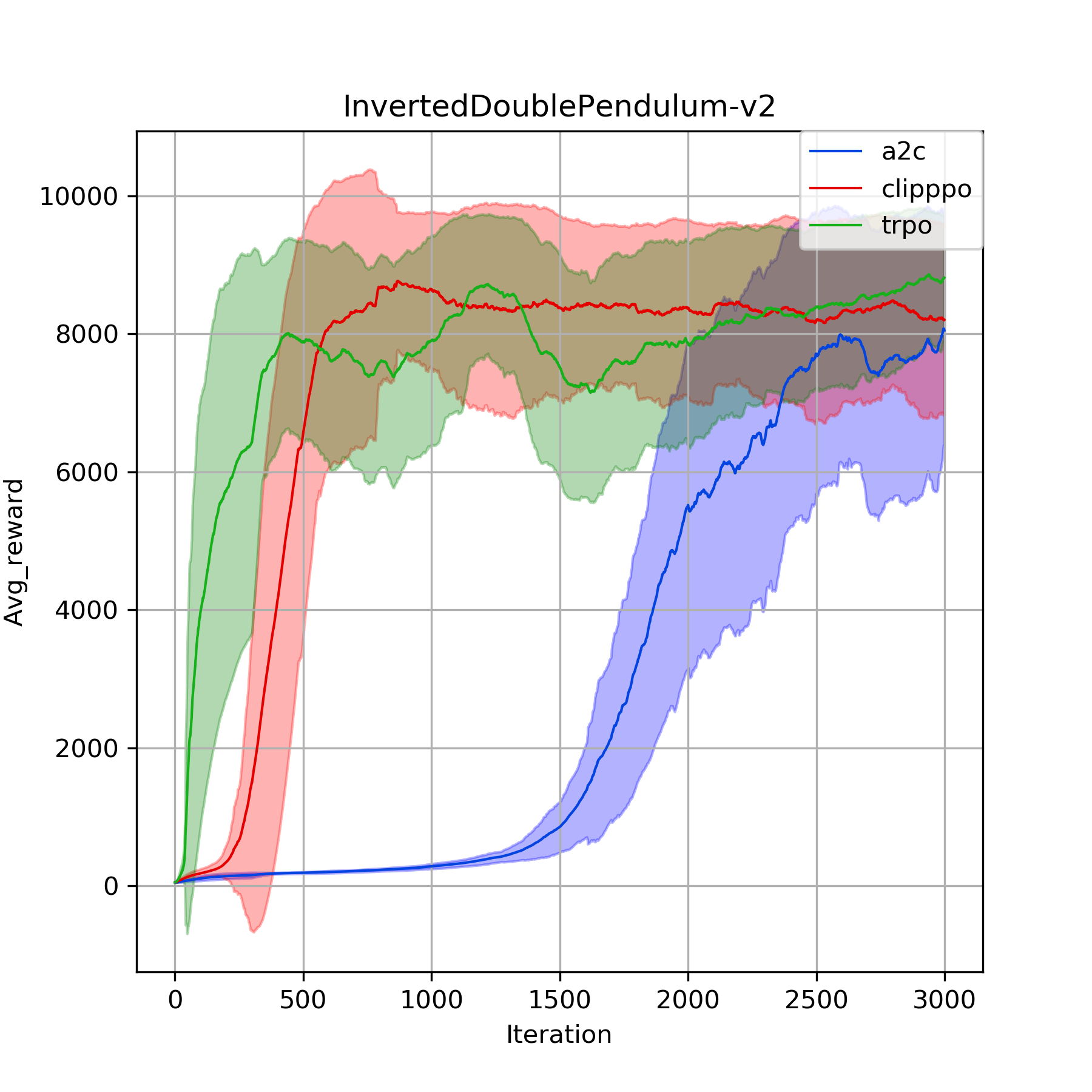

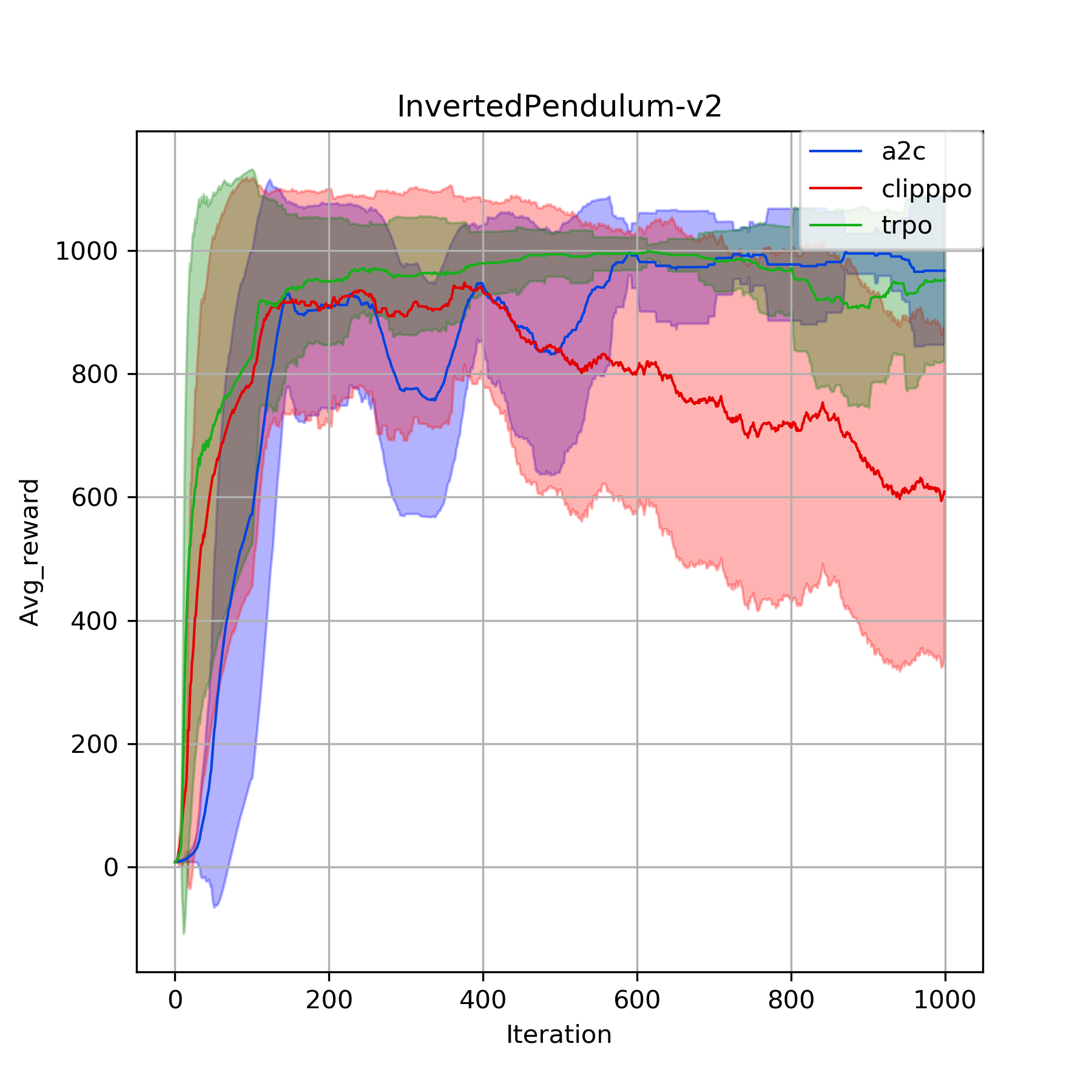

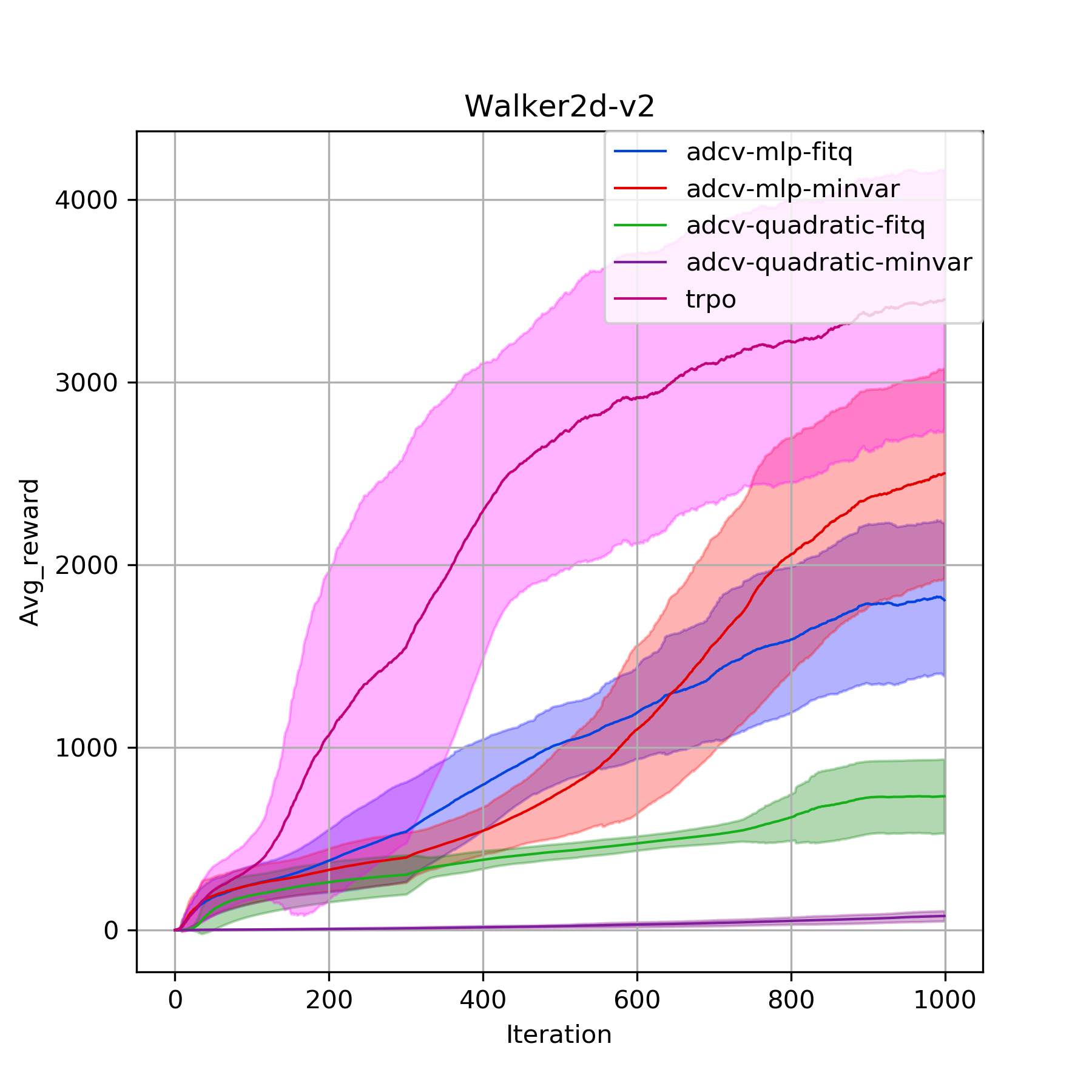

We tested the policy gradient code in these MuJoCo environments with default parameters.

If you want to run GAIL but have no expert trajectories, TrajGiver will generate them for you. First make sure an expert policy has been trained and saved (i.e., train a TRPO or PPO agent on the same environment). TrajGiver then automatically finds the export directory, loads the policy network and running states, and runs the trained policy on the desired environment.

python config/gail/gail_gym.py --env-name Hopper-v2 --max-iter-num 1000 --gpu
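The two-step workflow (train an expert, then run GAIL on the same environment) can be scripted. The sketch below only assembles the two command lines from the scripts and flags shown in this README; the helper `gail_pipeline` is hypothetical, and it assumes the expert training script saves its policy where TrajGiver looks for it.

```python
import sys


def gail_pipeline(env_name, max_iter_num=1000,
                  expert_script="config/pg/ppo_gym.py", use_gpu=True):
    """Return the two commands in order: expert training first, then GAIL.

    Hypothetical helper; both steps share the same environment name so the
    expert policy matches the environment GAIL is run on.
    """
    common = ["--env-name", env_name, "--max-iter-num", str(max_iter_num)]
    if use_gpu:
        common.append("--gpu")
    return [
        [sys.executable, expert_script, *common],
        [sys.executable, "config/gail/gail_gym.py", *common],
    ]


for command in gail_pipeline("Hopper-v2"):
    print(" ".join(command))
    # To actually execute each step from the repository root:
    # import subprocess; subprocess.run(command, check=True)
```

Passing `expert_script="config/pg/trpo_gym.py"` would use TRPO as the expert instead of PPO.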

python config/adcv/v_gym.py --env-name Walker2d-v2 --max-iter-num 1000 --variate mlp --opt minvar --gpu