
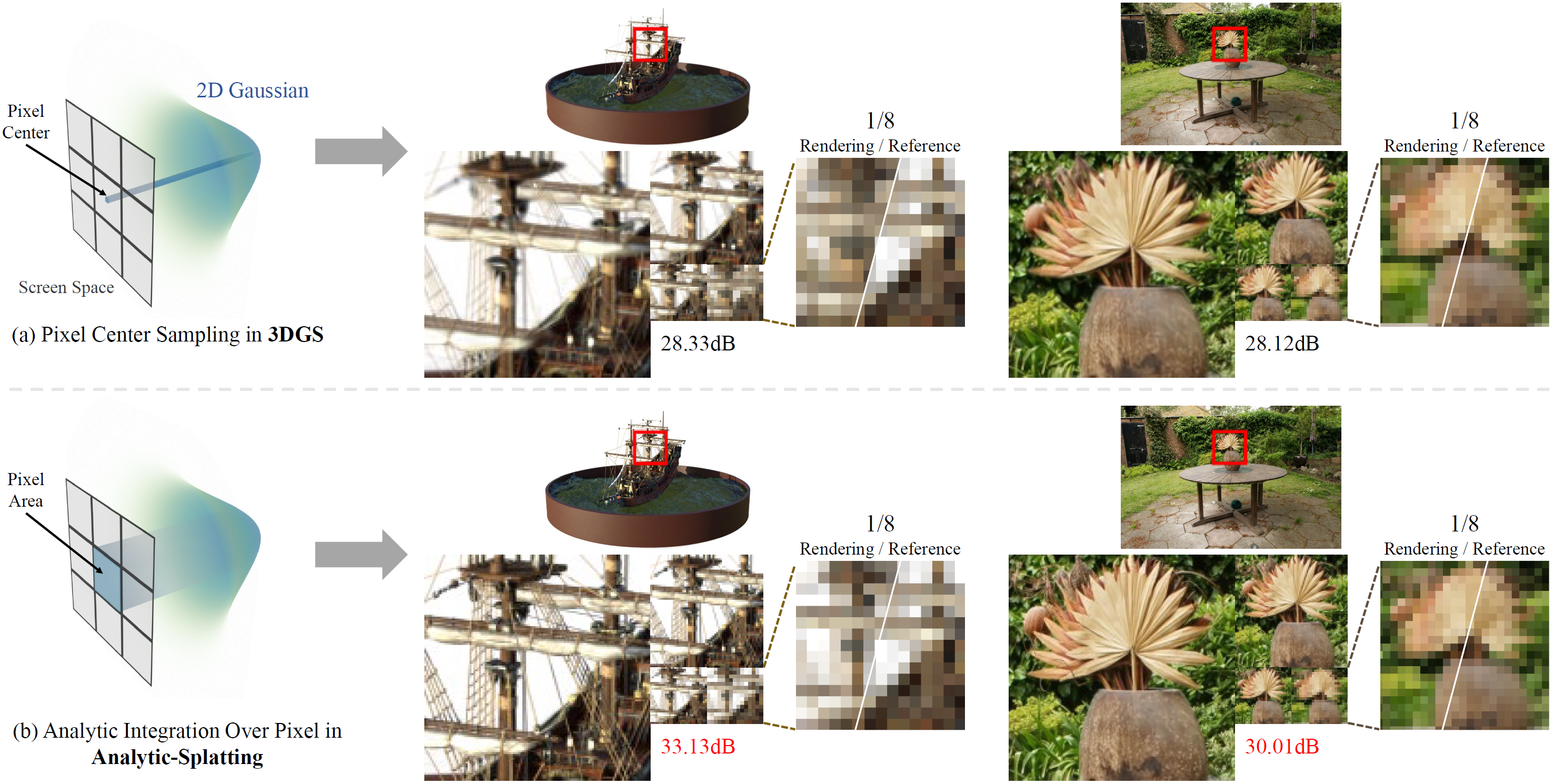

We present Analytic-Splatting, which improves pixel shading in 3D Gaussian Splatting (3DGS) to achieve anti-aliasing by analytically approximating the integral of each Gaussian signal's response over the pixel area.
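To make the idea concrete, here is a minimal 1D sketch. It is not the paper's CUDA implementation. Vanilla 3DGS evaluates a Gaussian only at the pixel center (point sampling), while integrating over the pixel window gives its area response. The exact 1D integral uses the Gaussian CDF (via `math.erf`). `logistic_cdf` with k = 1.702 is a well-known cheap approximation of that CDF, shown only for illustration; we do not claim it is the approximation used in Analytic-Splatting. All function names are hypothetical.

```python
import math


def gaussian_cdf(x: float) -> float:
    """Exact standard normal CDF, written with the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def logistic_cdf(x: float) -> float:
    """Illustrative logistic approximation of the standard normal CDF (k = 1.702)."""
    z = 1.702 * x
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # numerically stable branch for large negative z
    return e / (1.0 + e)


def point_sample(u: float, mu: float, sigma: float) -> float:
    """Unnormalized 1D Gaussian evaluated only at the pixel center u."""
    return math.exp(-0.5 * ((u - mu) / sigma) ** 2)


def pixel_integral(u: float, mu: float, sigma: float, cdf=gaussian_cdf) -> float:
    """Integral of the same unnormalized Gaussian over the unit pixel [u - 0.5, u + 0.5]."""
    total = sigma * math.sqrt(2.0 * math.pi)  # integral of the unnormalized Gaussian over all x
    return total * (cdf((u + 0.5 - mu) / sigma) - cdf((u - 0.5 - mu) / sigma))


if __name__ == "__main__":
    for sigma in (10.0, 1.0, 0.1):
        print(f"sigma={sigma:5.1f}  point={point_sample(0.4, 0.0, sigma):.4f}  "
              f"area={pixel_integral(0.4, 0.0, sigma):.4f}  "
              f"area(logistic)={pixel_integral(0.4, 0.0, sigma, logistic_cdf):.4f}")
```

For a wide Gaussian the two responses nearly agree. For a Gaussian much narrower than a pixel (sigma = 0.1) whose center is 0.4 px from the pixel center, point sampling returns almost nothing, while the area integral still captures most of its mass. That mismatch is the aliasing that an area-integral response is meant to remove.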

You can view the results of Analytic-Splatting in the Online Viewer.

Clone the repository and create an Anaconda environment:

git clone https://github.com/lzhnb/Analytic-Splatting

cd Analytic-Splatting

conda create -y -n ana-splatting python=3.8

conda activate ana-splatting

pip install torch==1.12.1+cu113 torchvision==0.13.1+cu113 -f https://download.pytorch.org/whl/torch_stable.html

conda install cudatoolkit-dev=11.3 -c conda-forge

pip install -r requirements.txt

pip install submodules/diff-gaussian-rasterization

pip install submodules/simple-knn/

Please download and unzip nerf_synthetic.zip from the official NeRF Google Drive.

Then run the following script to generate the multi-scale version:

python convert_blender_data.py \
--blenderdir dataset/nerf_synthetic \
--outdir dataset/nerf_synthetic_multi

The Mip-NeRF 360 scenes are hosted by the paper authors here. You can find our SfM data sets for Tanks&Temples and Deep Blending here.
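We do not reproduce `convert_blender_data.py` here. As a rough sketch, assume it follows the Mip-NeRF multi-scale Blender recipe: box-filter each image by factors 1, 2, 4 and 8, and divide the focal length (in pixels) by the same factor. Under that assumption, the pyramid for one image could be built as below. The real script presumably also writes the downsampled images and camera metadata to `--outdir`. The function names are hypothetical.

```python
import numpy as np


def box_downsample(img: np.ndarray, factor: int) -> np.ndarray:
    """Average non-overlapping factor x factor blocks of an (H, W, C) image."""
    h, w, c = img.shape
    if h % factor or w % factor:
        raise ValueError("image height and width must be divisible by the factor")
    return img.reshape(h // factor, factor, w // factor, factor, c).mean(axis=(1, 3))


def build_pyramid(img: np.ndarray, focal: float, factors=(1, 2, 4, 8)):
    """Return [(image, focal)] per scale; the focal length in pixels shrinks with resolution."""
    return [(box_downsample(img, f), focal / f) for f in factors]
```

Box filtering averages every source pixel into exactly one output pixel. This matches the idea of a pixel as an area rather than a point sample, which is the setting the multi-scale benchmark probes.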

We evaluate our method on the NeRF-Synthetic and Mip-NeRF 360 datasets.

Please note that we use -i images_4/images_2 instead of -r 4/2 for single-scale training on the Mip-NeRF 360 dataset, which will lead to artificially high metrics!

For NeRF-Synthetic, take the chair scene as an example.

Multi-Scale Training and Evaluation

python train.py \
-m outputs/chair-ms/ \
-s dataset/nerf_data/nerf_synthetic_multi/chair/ \
--white_background \
--eval \
--load_allres \
--sample_more_highres

Set --filter3d to enable the 3D filtering proposed in Mip-Splatting; set --dense to enable the densification proposed in GOF (Gaussian Opacity Fields).

python render.py -m outputs/chair-ms/ --skip_train --lpips

Set --vis to save testing results.
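The render step evaluates the test views, and `--lpips` presumably adds LPIPS to the reported metrics. For reference, PSNR for images in [0, 1] is 10·log10(1 / MSE). The NumPy sketch below computes it per image and then averages per scale. It assumes multi-scale results are summarized the way Mip-NeRF-style benchmarks do, with each scale reported and then averaged. It is not taken from `render.py`, and the helper names are hypothetical.

```python
import numpy as np


def psnr(pred: np.ndarray, gt: np.ndarray, max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB for images with values in [0, max_val]."""
    mse = np.mean((pred.astype(np.float64) - gt.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))


def mean_psnr_per_scale(pairs_by_scale):
    """pairs_by_scale: {scale: [(pred, gt), ...]} -> ({scale: mean PSNR}, mean over scales)."""
    per_scale = {s: float(np.mean([psnr(p, g) for p, g in pairs]))
                 for s, pairs in pairs_by_scale.items()}
    return per_scale, float(np.mean(list(per_scale.values())))
```

Averaging PSNR per image, rather than pooling MSE across images, is the usual convention in NeRF-style benchmarks. This sketch follows that convention.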

Single-Scale Training and Evaluation

python train.py \
-m outputs/chair/ \
-s dataset/nerf_data/nerf_synthetic/chair/ \
--white_background \
--eval

Set --filter3d to enable the 3D filtering proposed in Mip-Splatting; set --dense to enable the densification proposed in GOF.

python render.py -m outputs/chair/ --skip_train --lpips

Set --vis to save testing results.

For Mip-NeRF 360, take the bicycle scene as an example.

Multi-Scale Training and Evaluation

python train_ms.py \
-m outputs/bicycle-ms \
-s dataset/nerf_data/nerf_real_360/bicycle/ \
-i images_4 \
--eval \
--sample_more_highres

python render_ms.py -m outputs/bicycle-ms/ --skip_train --lpips

Single-Scale Training and Evaluation

python train.py \
-m outputs/bicycle/ \
-s dataset/nerf_data/nerf_real_360/bicycle/ \
-i images_4 \
--eval

python render.py -m outputs/bicycle/ --skip_train --lpips

For convenience, you can run the corresponding script in the scripts directory to reproduce the results of each setting.
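The scripts in the scripts directory remain the intended entry point. As an unofficial alternative for looping over all NeRF-Synthetic scenes, here is a hypothetical Python driver; it is not a file in the repository. It only reuses the multi-scale flags shown in the chair example and defaults to a dry run that just prints the commands.

```python
import shlex
import subprocess

# The eight NeRF-Synthetic scenes.
SCENES = ["chair", "drums", "ficus", "hotdog", "lego", "materials", "mic", "ship"]


def synthetic_ms_commands(scene, data_root="dataset/nerf_data/nerf_synthetic_multi",
                          out_root="outputs", filter3d=False, dense=False):
    """Build the multi-scale train + render commands for one scene (flags copied from this README)."""
    model_dir = f"{out_root}/{scene}-ms/"
    train = ["python", "train.py", "-m", model_dir, "-s", f"{data_root}/{scene}/",
             "--white_background", "--eval", "--load_allres", "--sample_more_highres"]
    if filter3d:
        train.append("--filter3d")  # 3D filtering from Mip-Splatting
    if dense:
        train.append("--dense")  # densification from GOF
    render = ["python", "render.py", "-m", model_dir, "--skip_train", "--lpips"]
    return [train, render]


def run_all(scenes=SCENES, dry_run=True, **kwargs):
    """Print every command; when dry_run is False, also execute them in order, stopping on failure."""
    for scene in scenes:
        for cmd in synthetic_ms_commands(scene, **kwargs):
            print(shlex.join(cmd))
            if not dry_run:
                subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_all(dry_run=True, filter3d=True)
```

Passing argument lists to `subprocess.run` instead of a shell string avoids quoting problems. `check=True` stops the loop at the first failed step, so a failed training run never gets rendered.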

Please refer to our supplementary material and my Google Sheet for more comparison details.

We also report results under Mip-Splatting's single-scale training and multi-scale testing (STMT) setting (360STMT-PSNR↑-r, 360STMT-SSIM↑-r, and 360STMT-LPIPS↓-r). The results show that 3D filtering is very useful for STMT on Mip-NeRF 360.

Following Mip-Splatting, we also support an online viewer for real-time viewing.

After training, the exported point_cloud.ply can be loaded in our online viewer for visualization.
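Before loading an exported `point_cloud.ply` into the viewer, you can check what it contains with a small standard-library sketch that parses only the PLY header. It assumes the layout written by the original 3DGS code: one `vertex` element per Gaussian, with properties such as `x`, `y`, `z`, `opacity`, `scale_*` and `rot_*`. This fork may write additional properties. The function names are hypothetical.

```python
import sys
from typing import BinaryIO, List, Tuple


def parse_ply_header(stream: BinaryIO) -> Tuple[int, List[str]]:
    """Return (vertex_count, vertex_property_names) from a PLY header."""
    if stream.readline().strip() != b"ply":
        raise ValueError("not a PLY file")
    count, props, in_vertex = 0, [], False
    for raw in stream:
        tokens = raw.decode("ascii", errors="replace").split()
        if not tokens:
            continue
        if tokens[0] == "end_header":
            break  # binary payload follows; stop before reading it
        if tokens[0] == "element":
            in_vertex = tokens[1] == "vertex"
            if in_vertex:
                count = int(tokens[2])
        elif tokens[0] == "property" and in_vertex:
            props.append(tokens[-1])  # the last token is the property name
    return count, props


def read_ply_header(path: str) -> Tuple[int, List[str]]:
    """Open a PLY file in binary mode and parse only its header."""
    with open(path, "rb") as f:
        return parse_ply_header(f)


if __name__ == "__main__":
    n, names = read_ply_header(sys.argv[1])
    print(f"{n} Gaussians; properties: {', '.join(names)}")
```

The parser stops at `end_header` and never touches the binary payload, so it stays fast even for multi-million-Gaussian scenes.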

For better visualization, we recommend setting --filter3d during training to enable 3D filtering. The 3D filtering proposed in Mip-Splatting effectively eliminates the aliasing that appears under extreme zoom-in.
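For intuition, here is a minimal NumPy sketch of a Mip-Splatting-style 3D smoothing filter, written from our reading of Mip-Splatting rather than from this repository. Each Gaussian's covariance is enlarged by an isotropic variance s·(d/f)², where d is the smallest depth at which a training camera sees the Gaussian and f is the focal length in pixels. Its opacity is then rescaled so the total integral is unchanged. The default s = 0.2 and the function name are assumptions.

```python
import numpy as np


def apply_3d_filter(scales, opacity, min_depth, focal, s=0.2):
    """Fold an isotropic 3D smoothing filter into per-Gaussian scales and opacities.

    scales:    (N, 3) standard deviations along each Gaussian's principal axes.
    opacity:   (N,) opacities.
    min_depth: (N,) smallest depth at which each Gaussian is seen by a training camera.
    focal:     focal length in pixels.
    """
    scales = np.asarray(scales, dtype=np.float64)
    opacity = np.asarray(opacity, dtype=np.float64)
    # Filter std in world units: the pixel footprint d / f at the closest depth, times sqrt(s).
    filter_std = np.asarray(min_depth, dtype=np.float64) / focal * np.sqrt(s)
    var = scales ** 2
    # Adding s * (d / f)^2 * I to R diag(var) R^T only changes the diagonal in the rotated frame.
    var_filtered = var + filter_std[:, None] ** 2
    # Rescale opacity so the (unnormalized) Gaussian keeps its original integral.
    coef = np.sqrt(var.prod(axis=1) / var_filtered.prod(axis=1))
    return np.sqrt(var_filtered), opacity * coef
```

Large Gaussians barely change. Gaussians far smaller than the training pixel footprint are widened to at least that footprint and become nearly transparent, which is why they stop showing up as high-frequency artifacts when you zoom in.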

Here is a comparison under extreme zoom-in with (left) and without (right) 3D filtering.

To export a fused PLY, run:

python create_fused_ply.py -m ${model_path} --output_ply fused/${scene}_fused.ply

If you find this work useful in your research, please cite:

@article{liang2024analytic,
  title={Analytic-Splatting: Anti-Aliased 3D Gaussian Splatting via Analytic Integration},
  author={Liang, Zhihao and Zhang, Qi and Hu, Wenbo and Feng, Ying and Zhu, Lei and Jia, Kui},
  journal={arXiv preprint arXiv:2403.11056},
  year={2024}
}