The official code repository for "STAP: Sequencing Task-Agnostic Policies," presented at ICRA 2023. For a brief overview of our work, please refer to our project page. Further details can be found in our paper available on arXiv.

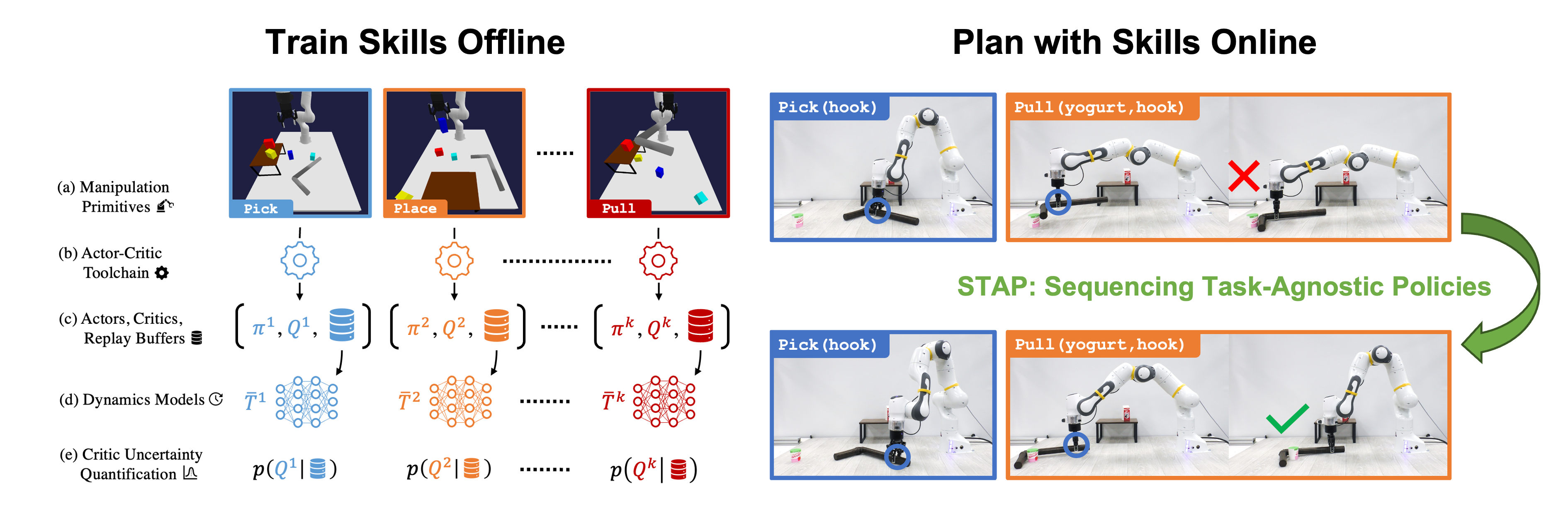

The STAP framework can be broken down into two phases: (1) train skills offline (i.e., policies, Q-functions, dynamics models, uncertainty quantifiers); (2) plan with skills online (i.e., motion planning, task and motion planning). We provide implementations for both phases:

- Skill library: A suite of reinforcement learning (RL) and inverse RL algorithms to learn four skills: Pick, Place, Push, Pull.

- Dynamics models: Trainers for learning skill-specific dynamics models from off-policy transition experience.

- UQ models: Sketching Curvature for Out-of-Distribution Detection (SCOD) implementation and trainers for Q-network epistemic uncertainty quantification (UQ).

- Motion planners (STAP): A set of sampling-based motion planners including randomized sampling, cross-entropy method, planning with uncertainty-aware metrics, and combinations.

- Task and motion planners (TAMP): Coupling PDDL-based task planning with STAP-based motion planning.

- Baseline methods: Implementations of Deep Affordance Foresight (DAF) and parameterized-action Dreamer.

- 3D Environments: PyBullet tabletop manipulation environment with domain randomization.
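As an illustration of the cross-entropy method listed among the motion planners, here is a minimal, self-contained sketch. It is not the repository's implementation: `cem_plan` and `toy_score` are hypothetical names, and the toy objective is only a stand-in for whatever plan-level score a planner would evaluate over skill parameters.

```python
import random
import statistics


def cem_plan(score, dim, iterations=20, population=64, num_elites=8, seed=0):
    """Maximize score(x) over x in R^dim with a diagonal-Gaussian CEM.

    Hypothetical helper for illustration; not part of the stap package.
    """
    rng = random.Random(seed)
    mean = [0.0] * dim
    std = [1.0] * dim
    best, best_score = list(mean), score(mean)
    for _ in range(iterations):
        # Sample candidate parameter vectors from the current Gaussian.
        samples = [
            [rng.gauss(mean[i], std[i]) for i in range(dim)]
            for _ in range(population)
        ]
        # Keep the highest-scoring candidates as elites.
        samples.sort(key=score, reverse=True)
        elites = samples[:num_elites]
        top_score = score(elites[0])
        if top_score > best_score:
            best, best_score = elites[0], top_score
        # Refit the Gaussian to the elites (with a floor on the std).
        for i in range(dim):
            column = [elite[i] for elite in elites]
            mean[i] = statistics.fmean(column)
            std[i] = max(statistics.pstdev(column), 1e-3)
    return best, best_score


def toy_score(x):
    """Hypothetical objective that peaks at (0.5, -0.25)."""
    return -((x[0] - 0.5) ** 2 + (x[1] + 0.25) ** 2)


if __name__ == "__main__":
    plan, value = cem_plan(toy_score, dim=2)
    print(plan, value)
```

Randomized sampling corresponds to running a single iteration and returning the best sample; the refitting step is what distinguishes the cross-entropy method.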

This repository is primarily tested on Ubuntu 20.04 and macOS Monterey with Python 3.8.10.

Python packages are managed through Pipenv. Follow the installation procedure below to get set up:

# Install pyenv.

curl https://pyenv.run | bash

exec $SHELL # Restart shell for path changes to take effect.

pyenv install 3.8.10 # Install a Python version.

pyenv global 3.8.10 # Set this Python to default.

# Clone repository.

git clone https://github.com/agiachris/STAP.git --recurse-submodules

cd STAP

# Install pipenv.

pip install pipenv

pipenv install --dev

pipenv sync

Use pipenv shell to load the virtual environment in the current shell.

STAP supports training skills, dynamics models, and composing these components at test-time for planning.

- STAP module: The majority of the project code is located in the package stap/.

- Scripts: Code for launching experiments, debugging, plotting, and visualization is under scripts/.

- Configs: Training and evaluation functionality is determined by .yaml configuration files located in configs/.

We provide launch scripts for training STAP's required models below. The launch scripts also support parallelization on a cluster managed by SLURM, and will otherwise default to sequentially processing jobs.
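The SLURM-or-sequential behavior described above can be sketched as follows. This is an assumption-laden illustration, not how the repository's launch scripts are written: `build_command` and `launch` are hypothetical helpers, the `echo` jobs are placeholders, and SLURM availability is guessed by looking for `sbatch` on the PATH.

```python
import shlex
import shutil
import subprocess


def build_command(job, use_slurm):
    """Wrap a job for SLURM submission, or return it unchanged.

    Hypothetical helper; `sbatch --wrap=<command string>` submits a
    shell command as a batch job.
    """
    if use_slurm:
        return ["sbatch", f"--wrap={shlex.join(job)}"]
    return list(job)


def launch(jobs, dry_run=True):
    """Submit jobs through SLURM if available, else run them one by one."""
    use_slurm = shutil.which("sbatch") is not None
    commands = [build_command(job, use_slurm) for job in jobs]
    if not dry_run:
        for command in commands:
            # Sequential fallback: each job finishes before the next starts.
            subprocess.run(command, check=True)
    return commands


if __name__ == "__main__":
    # Placeholder jobs for illustration only.
    for command in launch([["echo", "job-0"], ["echo", "job-1"]]):
        print(command)
```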

As an alternative to training skills and dynamics models from scratch, we provide checkpoints that can be downloaded and directly used to evaluate STAP planners.

Run the following commands to download the model checkpoints to the default models/ directory (this requires ~10 GB of disk space):

pipenv shell # script requires gdown

bash scripts/download/download_checkpoints.sh

Once the download has finished, the models/ directory will contain:

- Skills trained with RL (agents_rl) and their dynamics models (dynamics_rl)

- Skills trained with inverse RL (policies_irl) and their dynamics models (dynamics_irl)

- Demonstration data used to train inverse RL skills (datasets)

- Checkpoints for the Deep Affordance Foresight baseline (baselines)
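A quick way to confirm the download completed is to check for the entries listed above. The sketch below assumes each name is a direct subdirectory of models/; `missing_checkpoint_dirs` is a hypothetical helper, not a script shipped with the repository.

```python
from pathlib import Path

# Names taken from the list above; assumed to be direct children of models/.
EXPECTED_SUBDIRS = (
    "agents_rl",
    "dynamics_rl",
    "policies_irl",
    "dynamics_irl",
    "datasets",
    "baselines",
)


def missing_checkpoint_dirs(models_dir="models"):
    """Return the expected subdirectories that are absent from models_dir."""
    root = Path(models_dir)
    return [name for name in EXPECTED_SUBDIRS if not (root / name).is_dir()]


if __name__ == "__main__":
    missing = missing_checkpoint_dirs()
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All expected checkpoint directories are present.")
```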

We also provide the planning results that correspond to evaluating STAP on these checkpoints.

To download the results to the default plots/ directory, run the following command (this requires ~3.5 GB of disk space):

pipenv shell # script requires gdown

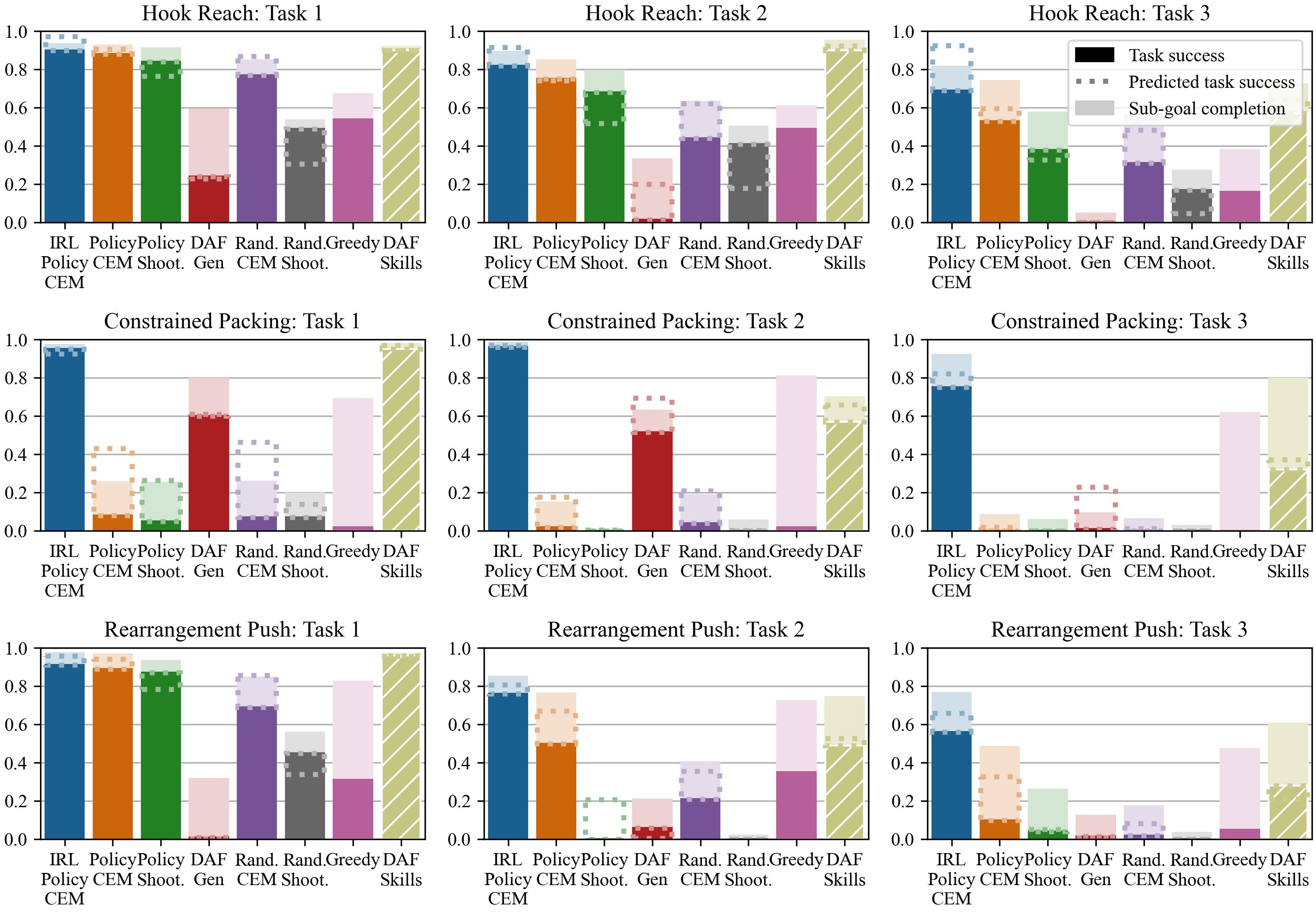

bash scripts/download/download_results.shThe planning results can be visualized by running bash scripts/visualize/generate_figures.sh which will save the figure shown below to plots/planning-result.jpg.

Skills in STAP are trained independently in custom environments. We provide two pipelines, RL and inverse RL, for training skills. While we use RL in the paper, skills learned via inverse RL yield significantly higher planning performance. This is because inverse RL offers more control over the training pipeline, allowing us to tune hyperparameters for data generation and skill training. We have only tested SCOD UQ with skills learned via RL.

To simultaneously learn an actor-critic per skill with RL, the relevant command is:

bash scripts/train/train_agents.sh

When the skills have finished training, copy and rename the desired checkpoints.

python scripts/debug/select_checkpoints.py --clone-name official --clone-dynamics True

These copied checkpoints will be used for planning.

Training SCOD is only required if the skills are intended to be used with an uncertainty-aware planner.

bash scripts/train/train_scod.sh

To instead use inverse RL to learn a critic, then an actor, we first generate a dataset of demos per skill:

bash scripts/data/generate_primitive_datasets.sh # generate skill data

bash scripts/train/train_values.sh # train skill critics

bash scripts/train/train_policies.sh # train skill actors

Once the skills have been learned, we can train a dynamics model with:

bash scripts/train/train_dynamics.sh

With skills and dynamics models, we have all the essential pieces required to solve long-horizon manipulation problems with STAP.
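The inverse RL steps and the dynamics training step above run in a fixed order: data generation, critics, actors, then dynamics. A minimal sketch of a driver that enforces this order is shown below. `run_pipeline` is a hypothetical helper, the script paths are the ones quoted above, and the sketch assumes it is run from the repository root inside the Pipenv environment.

```python
import subprocess

# Script paths as quoted in this README, in the order they must run.
IRL_PIPELINE = [
    "scripts/data/generate_primitive_datasets.sh",  # generate skill data
    "scripts/train/train_values.sh",  # train skill critics
    "scripts/train/train_policies.sh",  # train skill actors
    "scripts/train/train_dynamics.sh",  # train dynamics models
]


def run_pipeline(scripts, dry_run=True):
    """Run each script with bash, in order, stopping at the first failure.

    With dry_run=True the commands are only printed, not executed.
    """
    commands = [["bash", script] for script in scripts]
    for command in commands:
        if dry_run:
            print(" ".join(command))
        else:
            # check=True raises CalledProcessError if a step fails,
            # so later steps never run on incomplete outputs.
            subprocess.run(command, check=True)
    return commands


if __name__ == "__main__":
    run_pipeline(IRL_PIPELINE, dry_run=True)
```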

To evaluate the motion planners at specified agent checkpoints:

bash scripts/eval/eval_planners.sh

To evaluate variants of STAP, or test STAP on a subset of the 9 evaluation tasks, minor edits can be made to the above launch file.

To evaluate TAMP involving a PDDL task planner and STAP at specified agent checkpoints:

bash scripts/eval/eval_tamp.sh

Our main baseline is Deep Affordance Foresight (DAF). DAF trains a new set of skills for each task, in contrast to STAP which trains a set of skills that are used for all downstream tasks. DAF is also evaluated on the task it is trained on, whereas STAP must generalize to each new task it is evaluated on.

To train a DAF model on each of the 9 evaluation tasks:

bash scripts/train/train_baselines.sh

When the models have finished training, evaluate them with:

bash scripts/eval/eval_daf.sh

Sequencing Task-Agnostic Policies is offered under the MIT License agreement. If you find STAP useful, please consider citing our work:

@article{agia2022taps,

title={STAP: Sequencing Task-Agnostic Policies},

author={Agia, Christopher and Migimatsu, Toki and Wu, Jiajun and Bohg, Jeannette},

journal={arXiv preprint arXiv:2210.12250},

year={2022}

}