Created by Wenliang Zhao*, Yongming Rao*, Zuyan Liu*, Benlin Liu, Jie Zhou, Jiwen Lu†

This repository contains the PyTorch implementation of the paper "Unleashing Text-to-Image Diffusion Models for Visual Perception".

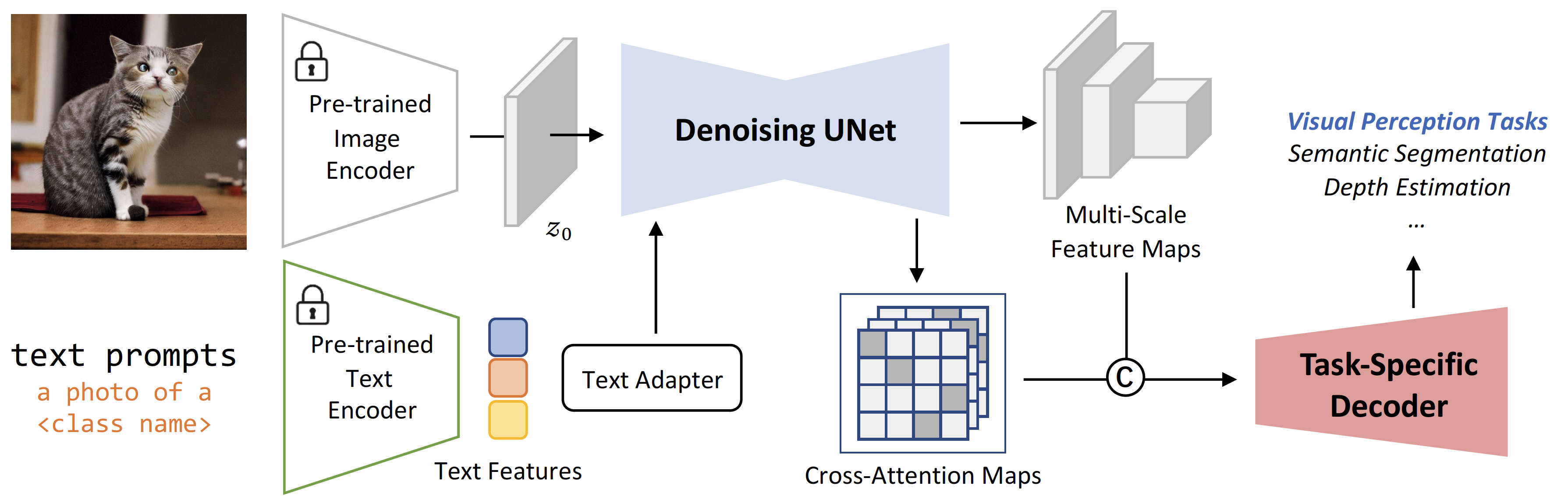

VPD (Visual Perception with Pre-trained Diffusion Models) is a framework that leverages the high-level and low-level knowledge of a pre-trained text-to-image diffusion model for downstream visual perception tasks.

Clone this repo, then run:

git submodule init

git submodule update

Download the checkpoint of stable-diffusion (we use v1-5 by default) and put it in the checkpoints folder. Please also follow the instructions in stable-diffusion to install the required packages.

Equipped with a lightweight Semantic FPN and trained for 80K iterations on

Please check segmentation.md for detailed instructions.

VPD achieves 73.46, 63.93, and 63.12 oIoU on the validation sets of RefCOCO, RefCOCO+, and G-Ref, respectively.

| Dataset | P@0.5 | P@0.6 | P@0.7 | P@0.8 | P@0.9 | oIoU | Mean IoU |
|---|---|---|---|---|---|---|---|
| RefCOCO | 85.52 | 83.02 | 78.45 | 68.53 | 36.31 | 73.46 | 75.67 |
| RefCOCO+ | 76.69 | 73.93 | 69.68 | 60.98 | 32.52 | 63.93 | 67.98 |
| RefCOCOg | 75.16 | 71.16 | 65.60 | 55.04 | 29.41 | 63.12 | 66.42 |

Please check refer.md for detailed instructions on training and inference.

VPD obtains 0.254 RMSE on the NYUv2 depth estimation benchmark, establishing a new state of the art.

| Method | RMSE | d1 | d2 | d3 | REL | log_10 |
|---|---|---|---|---|---|---|
| VPD | 0.254 | 0.964 | 0.995 | 0.999 | 0.069 | 0.030 |
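The depth columns are assumed to use the standard monocular-depth definitions: RMSE is root-mean-square error, REL is mean absolute relative error and log_10 is mean absolute log10 error (lower is better), while d1, d2 and d3 are the fractions of pixels whose ratio max(pred/gt, gt/pred) is below 1.25, 1.25^2 and 1.25^3 (higher is better). The sketch below computes them on flattened depth maps in pure Python. The function name `depth_metrics` and the rule that pixels with gt <= 0 are invalid are assumptions for illustration; the repository's own evaluation (see depth.md) may add benchmark-specific steps such as cropping or depth capping.

```python
import math
from typing import Dict, Sequence


def depth_metrics(pred: Sequence[float], gt: Sequence[float]) -> Dict[str, float]:
    """Standard depth error/accuracy metrics over valid pixels.

    pred and gt are flattened depth maps of equal length (e.g. in metres).
    Pixels with gt <= 0 are treated as invalid and skipped (an assumption).
    """
    if len(pred) != len(gt):
        raise ValueError("pred and gt must have the same length")
    pairs = [(p, g) for p, g in zip(pred, gt) if g > 0]
    if not pairs:
        raise ValueError("no valid ground-truth pixels")
    if any(p <= 0 for p, _ in pairs):
        raise ValueError("predicted depths must be positive on valid pixels")
    n = len(pairs)
    # Symmetric ratio, always >= 1; used by the threshold accuracies.
    ratios = [max(p / g, g / p) for p, g in pairs]

    def delta(k: int) -> float:
        # Fraction of pixels whose ratio is below 1.25 ** k (d1, d2, d3).
        return sum(r < 1.25 ** k for r in ratios) / n

    return {
        "RMSE": math.sqrt(sum((p - g) ** 2 for p, g in pairs) / n),
        "d1": delta(1),
        "d2": delta(2),
        "d3": delta(3),
        "REL": sum(abs(p - g) / g for p, g in pairs) / n,
        "log_10": sum(abs(math.log10(p) - math.log10(g)) for p, g in pairs) / n,
    }


pred = [1.0, 2.0, 4.0, 3.0]
gt = [1.0, 2.0, 2.0, 0.0]  # last pixel has no ground truth and is ignored
print(depth_metrics(pred, gt))
```

Read this way, the d1 value of 0.964 in the table means that about 96.4% of valid pixels are predicted within a factor of 1.25 of the ground truth.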

Please check depth.md for detailed instructions on training and inference.

MIT License

This code is based on stable-diffusion, mmsegmentation, LAVT, and MIM-Depth-Estimation.

If you find our work useful in your research, please consider citing:

@article{zhao2023unleashing,
  title={Unleashing Text-to-Image Diffusion Models for Visual Perception},
  author={Zhao, Wenliang and Rao, Yongming and Liu, Zuyan and Liu, Benlin and Zhou, Jie and Lu, Jiwen},
  journal={arXiv preprint arXiv:2303.02153},
  year={2023}
}