I can't maintain this repository due to lack of time, and an official implementation is now available. Please check out the official implementation here.

An unofficial PyTorch implementation of the ICCV 2019 paper "OmniMVS: End-to-End Learning for Omnidirectional Stereo Matching".

You need Python 3.6 or later, because the code uses f-strings.

Python libraries:

- PyTorch >= 1.3.1

- torchvision

- SciPy >= 1.4.0 (scipy.spatial.transform)

- OpenCV

- tensorboard

- tqdm

- Open3D >= 0.8 (only for visualization)
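As a quick sanity check before training, the requirements above can be verified with a short script. This is only a sketch: the import names (`cv2`, `open3d`, and so on) are the usual ones for these packages and are assumed here, and `check_environment` is a hypothetical helper, not part of this repository.

```python
import re
import sys
from importlib import import_module

# Minimum versions copied from the list above; None means "any version".
# The import names are assumptions (e.g. OpenCV is normally imported as cv2).
REQUIREMENTS = {
    "torch": "1.3.1",
    "torchvision": None,
    "scipy": "1.4.0",
    "cv2": None,
    "tensorboard": None,
    "tqdm": None,
    "open3d": "0.8",  # only needed for visualization
}


def version_tuple(version):
    """Turn '1.3.1+cu101' into (1, 3, 1); non-numeric suffixes are ignored."""
    parts = []
    for piece in version.split("+")[0].split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(installed, minimum):
    """Return True when the installed version is at least the minimum."""
    if minimum is None:
        return True
    a, b = version_tuple(installed), version_tuple(minimum)
    width = max(len(a), len(b))
    # Pad with zeros so that '0.8' and '0.8.0' compare as equal.
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


def check_environment(requirements=REQUIREMENTS):
    """Return a list of problems; the list is empty when everything looks fine."""
    # str.format is used instead of f-strings so this file still parses on Python < 3.6.
    problems = []
    if sys.version_info < (3, 6):
        problems.append("Python 3.6 or later is required")
    for module_name, minimum in requirements.items():
        try:
            module = import_module(module_name)
        except ImportError:
            problems.append("{} is not installed".format(module_name))
            continue
        installed = getattr(module, "__version__", None)
        if installed is not None and not meets_minimum(installed, minimum):
            problems.append("{} {} is older than {}".format(module_name, installed, minimum))
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print(problem)
```

Running the file prints one line per missing or outdated package and nothing when the environment satisfies the list.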

Please run the following commands. The first line installs the Python OcamCalib undistortion library, which handles undistortion for Davide Scaramuzza's OcamCalib camera model.

pip install git+git://github.com/matsuren/ocamcalib_undistort.git

git clone https://github.com/matsuren/omnimvs_pytorch.git

Download OmniThings, part of the Omnidirectional Stereo Dataset, from here. After extraction, put the dataset folder in the following location.

    omnimvs_pytorch/
    ├── ...
    └── datasets/
        └── omnithings/
            ├── cam1/
            ├── cam2/
            ├── cam3/
            ├── cam4/
            ├── depth_train_640/
            ├── ocam1.txt
            ├── ...
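To confirm that the dataset was extracted into the right place, a small check like the following may help. It is a sketch that only looks for the entries named in the tree above; anything hidden behind `...` is not checked, and `missing_entries` is a hypothetical helper rather than part of this repository.

```python
from pathlib import Path

# Entries taken from the tree above; the elided "..." entries are not checked.
EXPECTED_DIRS = ("cam1", "cam2", "cam3", "cam4", "depth_train_640")
EXPECTED_FILES = ("ocam1.txt",)


def missing_entries(dataset_root):
    """Return the expected folders and files that are absent under dataset_root."""
    root = Path(dataset_root)
    missing = [name + "/" for name in EXPECTED_DIRS if not (root / name).is_dir()]
    missing += [name for name in EXPECTED_FILES if not (root / name).is_file()]
    return missing


if __name__ == "__main__":
    # Path assumed from the layout above, relative to the repository root.
    for name in missing_entries("./datasets/omnithings"):
        print("missing:", name)
```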

❗Attention❗

For some reason, some filenames in OmniThings are inconsistent.

For instance, the first image is named 00001.png in cam1 but 0001.png in cam2, cam3, and cam4. Please rename 0001.png, 0002.png, and 0003.png so that they have five-digit numbers.
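The renaming can be done by hand, or with a short script along these lines. This is a minimal sketch, not part of this repository: `pad_filenames` is a hypothetical helper, and it assumes the images sit directly inside the `cam*` folders as `.png` files with purely numeric names. It defaults to a dry run so the planned changes can be reviewed first.

```python
from pathlib import Path


def pad_filenames(dataset_root, cams=("cam1", "cam2", "cam3", "cam4"), width=5, dry_run=True):
    """Zero-pad purely numeric PNG file names to `width` digits.

    Returns a list of (old_path, new_path) pairs. With dry_run=True nothing
    is renamed on disk.
    """
    planned = []
    for cam in cams:
        cam_dir = Path(dataset_root) / cam
        if not cam_dir.is_dir():
            continue
        for path in sorted(cam_dir.glob("*.png")):
            stem = path.stem
            # Skip names that are not plain numbers or are already long enough.
            if not stem.isdigit() or len(stem) >= width:
                continue
            target = path.with_name(stem.zfill(width) + path.suffix)
            if target.exists():
                # Never overwrite an existing file; leave it for manual review.
                continue
            planned.append((path, target))
            if not dry_run:
                path.rename(target)
    return planned


if __name__ == "__main__":
    # Dataset path assumed from the folder layout described earlier.
    for old, new in pad_filenames("./datasets/omnithings"):
        print(old, "->", new)
```

Once the printed list looks right, calling `pad_filenames("./datasets/omnithings", dry_run=False)` performs the renames.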

Run with the default parameters (input image size: 500x480, output depth size: 512x256, disparity: 64).

python train.py ./datasets/omnithings

These default parameters are smaller than those reported in the paper because of GPU memory limitations.

You can change the parameters with command-line arguments (use the -h option for details).

A pre-trained model (ndisp=48) is available here.

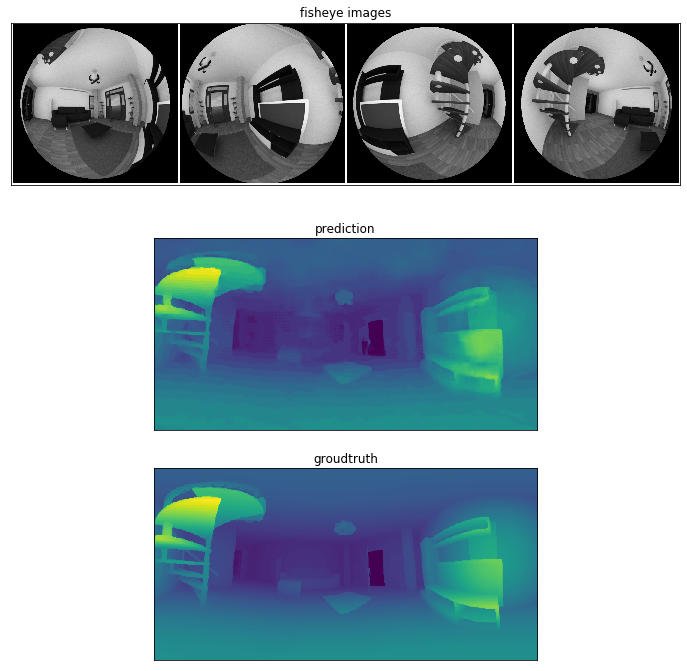

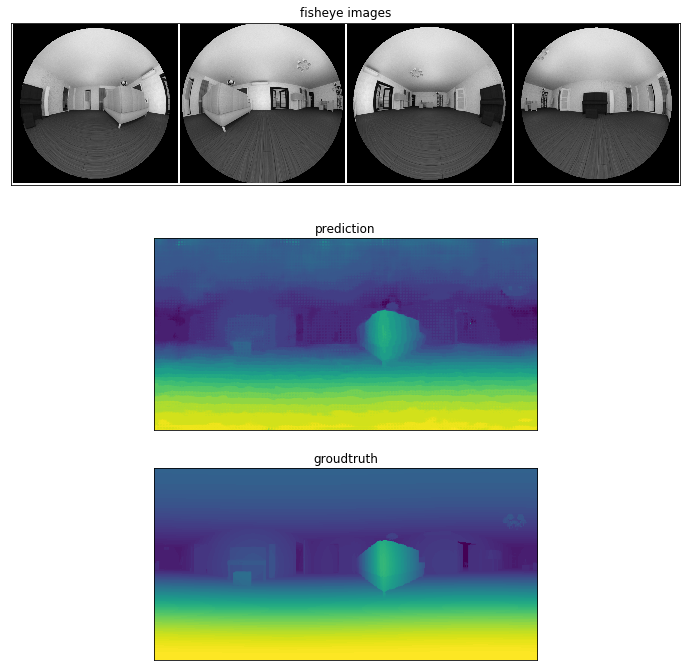

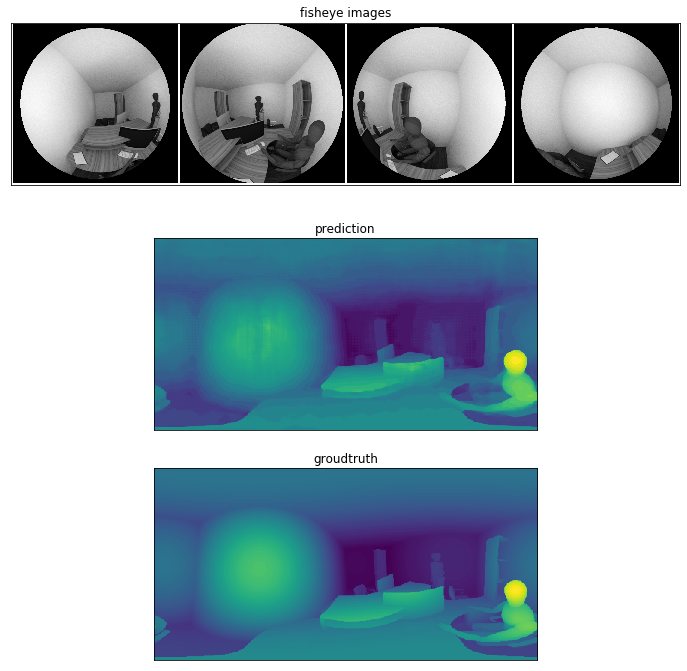

Predictions on OmniHouse after training on OmniThings (ndisp=48).