Clone and create the environment.

git clone https://github.com/charlesxu90/helm-gpt.git

cd helm-gpt

mamba env create -f environment.yml

mamba activate helm-gpt-env

conda install conda-forge::git-lfs

git lfs pull

mamba install -c conda-forge rdkit

pip install torch==1.13.1+cu117 torchvision==0.14.1+cu117 torchaudio==0.13.1 --extra-index-url https://download.pytorch.org/whl/cu117

pip install -r requirements.txt
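After installation, a quick check can catch two common problems: a package that failed to install, and data or checkpoint files that are still Git LFS pointer stubs because `git lfs pull` was skipped or failed. The script below is a minimal sketch and is not part of the repository. The package names (`torch`, `rdkit`) come from the commands above. The assumption that LFS-tracked files live under `data/` and `result/` is ours, so adjust the directories as needed. The check relies only on the fact that every Git LFS pointer file begins with the line `version https://git-lfs.github.com/spec/v1`.

```python
import importlib.util
from pathlib import Path

# Every Git LFS pointer file starts with this exact line.
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"


def missing_packages(names):
    """Return the top-level package names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def lfs_pointer_files(root):
    """Return files under `root` that are still un-pulled Git LFS pointer stubs."""
    root = Path(root)
    if not root.is_dir():
        return []  # nothing to check if the directory does not exist
    pointers = []
    for path in sorted(root.rglob("*")):
        if path.is_file():
            with path.open("rb") as fh:
                # Compare only the first bytes; real data files will not match.
                if fh.read(len(LFS_POINTER_PREFIX)) == LFS_POINTER_PREFIX:
                    pointers.append(path)
    return pointers


if __name__ == "__main__":
    print("missing packages:", missing_packages(["torch", "rdkit"]) or "none")
    # Assumed locations of LFS-tracked files; change these if the repo differs.
    for folder in ["data", "result"]:
        stubs = [str(p) for p in lfs_pointer_files(folder)]
        print(f"LFS pointers left in {folder}/:", stubs or "none")
```

Run it from the repository root inside the activated `helm-gpt-env` environment. If any pointer files are listed, rerun `git lfs pull` before training or generating.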

# Train prior model

python train_prior.py --train_data data/prior/chembl32/biotherapeutics_dict_prot_flt.csv --valid_data data/prior/chembl32/biotherapeutics_dict_prot_flt.csv --output_dir result/prior/chembl_5.0 --n_epochs 200 --max_len 200 --batch_size 1024

# Train agent model

python train_agent.py --prior result/prior/perm_tune/gpt_model_final_0.076.pt --output_dir result/agent/cpp/pep_perm_5.1_reinvent --batch_size 32 --n_steps 500 --sigma 60 --task permeability --max_len 140
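The `--sigma` flag is not documented here. If `train_agent.py` follows the REINVENT augmented-likelihood objective, as the `_reinvent` suffix in the example output directory suggests (an assumption we have not verified against the source), then `sigma` weights the task score when shifting the prior's log-likelihood: log P_aug(A) = log P_prior(A) + sigma * S(A), and the agent is trained to minimize (log P_aug(A) - log P_agent(A))^2. The sketch below computes that loss for a batch in plain Python; the function name and the assumption that scores lie in [0, 1] are ours.

```python
def reinvent_loss(prior_logp, agent_logp, scores, sigma=60.0):
    """Mean REINVENT-style loss over a batch (hypothetical helper, not from the repo).

    prior_logp, agent_logp: per-sequence log-likelihoods (summed over tokens).
    scores: per-sequence task scores, assumed to lie in [0, 1].
    """
    if not (len(prior_logp) == len(agent_logp) == len(scores)):
        raise ValueError("all inputs must have the same length")
    if not scores:
        raise ValueError("batch must not be empty")
    losses = []
    for p, a, s in zip(prior_logp, agent_logp, scores):
        augmented = p + sigma * s  # prior log-likelihood shifted by the weighted score
        losses.append((augmented - a) ** 2)  # squared gap to the agent's log-likelihood
    return sum(losses) / len(losses)


# Two sequences: one scored 0.5, one scored 0.0.
print(reinvent_loss([-10.0, -20.0], [-12.0, -20.0], [0.5, 0.0], sigma=60.0))  # 512.0
```

With `sigma=60`, a sequence scoring 0.5 has its target log-likelihood raised by 30 above the prior's, so high-scoring sequences pull the agent strongly while the prior term keeps it anchored to what the prior learned.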

# Generate molecules from a model

python generate.py --model_path result/prior/chembl_5.0/gpt_model_34_0.143.pt --out_file result/prior/chembl_5.0/1k_samples.csv --n_samples 1000 --max_len 200 --batch_size 128

This code is licensed under MIT License.

If you're using HELM-GPT in your research or applications, please cite using this BibTeX:

@article{xu2024helm,

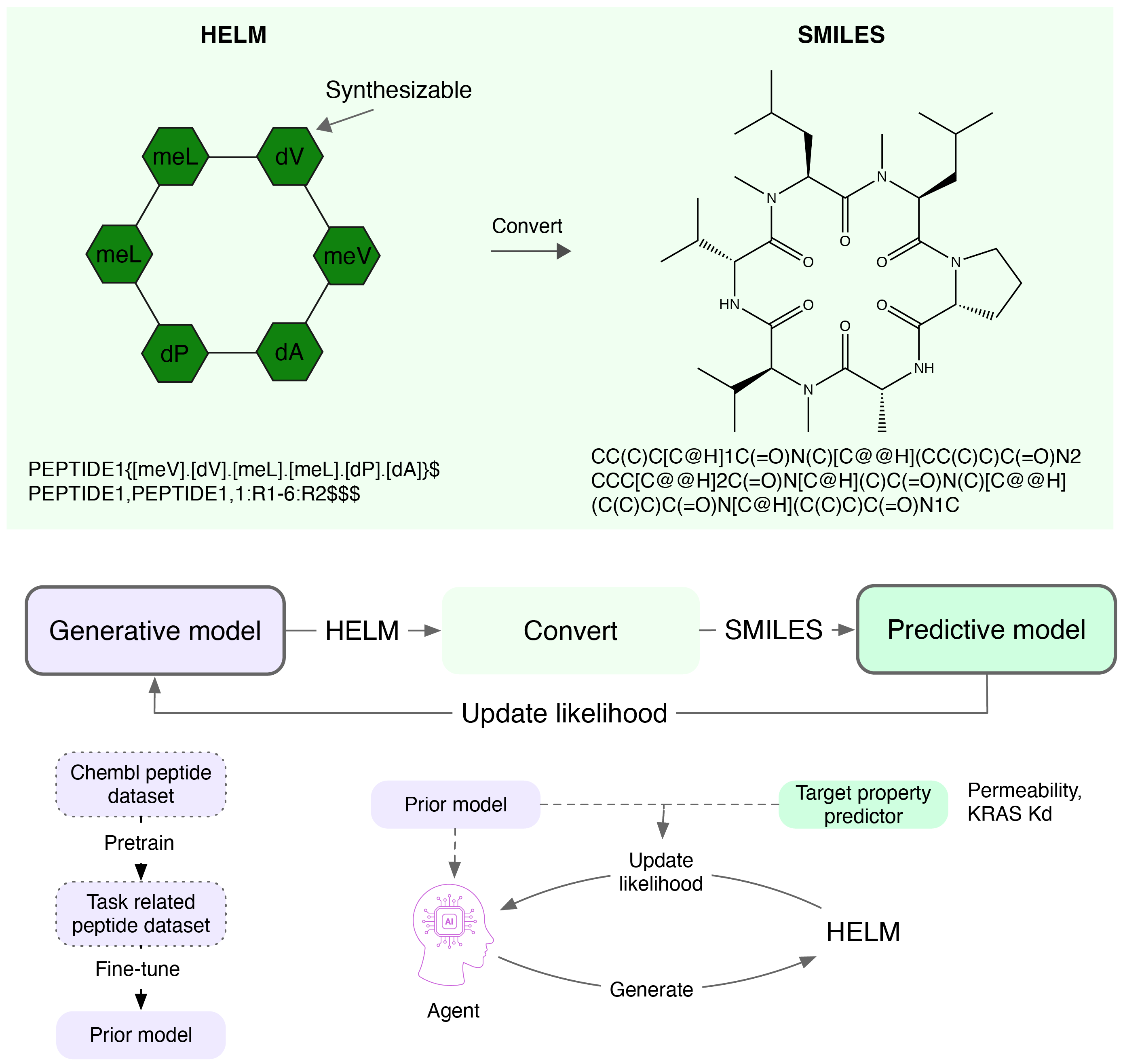

title={HELM-GPT: de novo macrocyclic peptide design using generative pre-trained transformer},

author={Xu, Xiaopeng and Xu, Chencheng and He, Wenjia and Wei, Lesong and Li, Haoyang and Zhou, Juexiao and Zhang, Ruochi and Wang, Yu and Xiong, Yuanpeng and Gao, Xin},

journal={Bioinformatics},

pages={btae364},

year={2024},

publisher={Oxford University Press}

}