Click the GIF above for a link to the YouTube video of the result.

- Python 3.5.x

- NumPy

- CV2

- matplotlib

- glob

- PIL

- moviepy

- Compute the camera calibration matrix and distortion coefficients using a set of chessboard images.

- Apply a distortion correction to video frames.

- Use color transforms and gradients to create a thresholded binary image.

- Apply a perspective transform to rectify binary image ("birds-eye view").

- Detect lane pixels and fit to find the lane boundary.

- Determine the curvature of the lane and vehicle position with respect to center.

- Warp the detected lane boundaries back onto the original image.

- Output visual display of the lane boundaries and numerical estimation of lane curvature and vehicle position.

camera_calibration.py : To calculate Calibration Matrix

line.py : Line class, contains functions to detect lane lines

threshold.py : Contains functions for thresholding an image

process.py : Contains the image processing pipeline and main function

find_parameters.py : Run GUI tool to find right parameters for various inputs

guiutils.py : GUI builder class

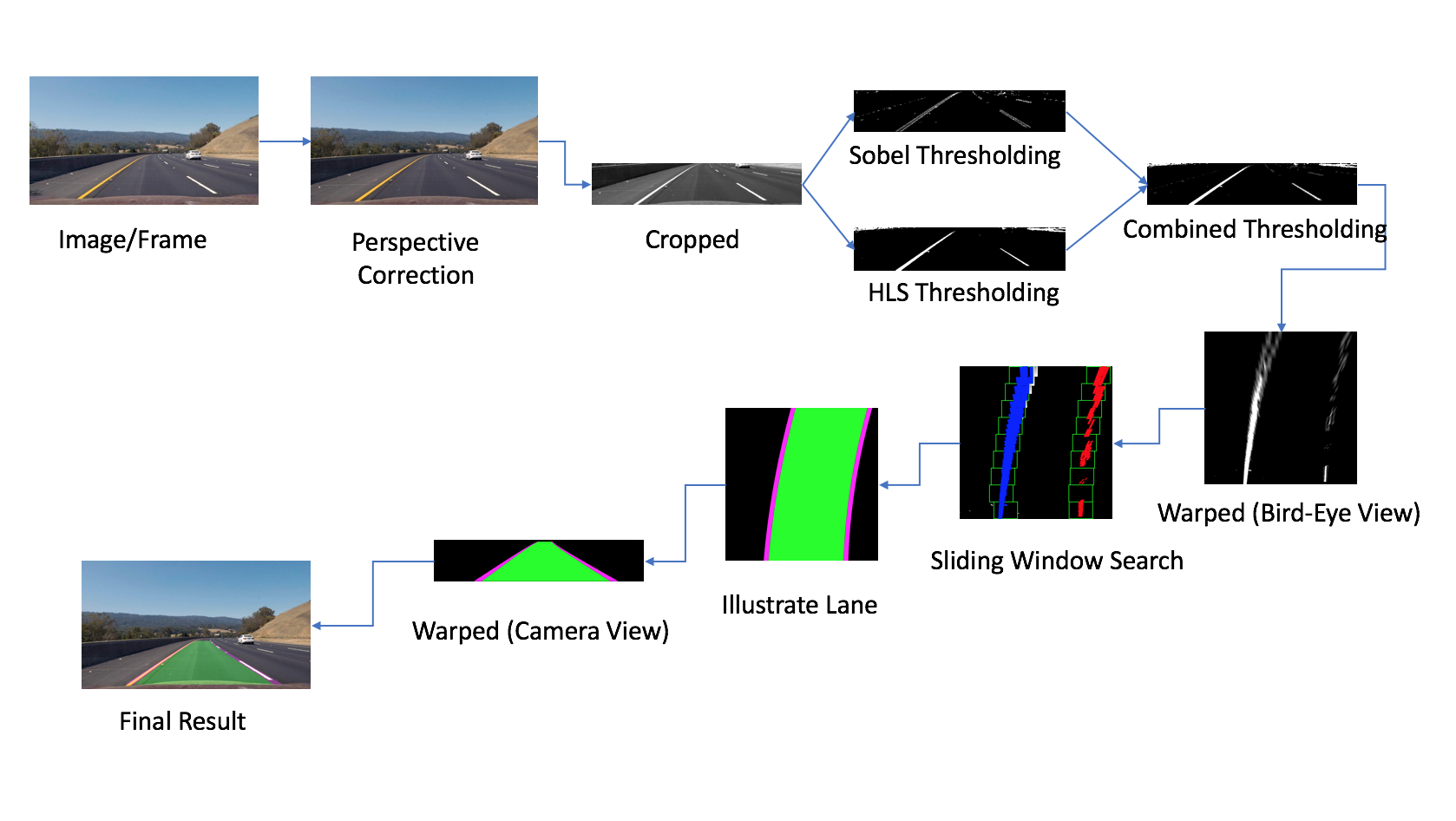

[Pipeline for detecting Lanes]

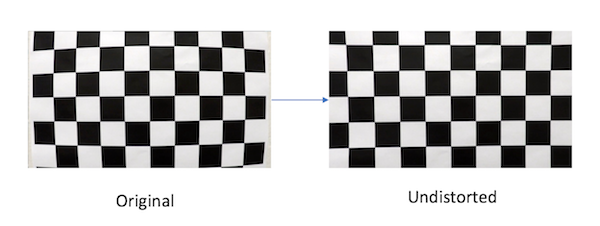

The camera may introduce distortion, which can cause errors in calculations. So we first need to calibrate the camera and compute the calibration matrix. A camera looks at world points (3D) and converts them to image points (2D). I calibrate the camera using a set of chessboard images, which have a predictable pattern.

The code for the camera calibration step is contained in camera_calibration.py.

I start by preparing "object points", which will be the (x, y, z) coordinates of the chessboard corners in the world. Here I am assuming the chessboard is fixed on the (x, y) plane at z=0, such that the object points are the same for each calibration image. Thus, objp is just a replicated array of coordinates, and objpoints will be appended with a copy of it every time I successfully detect all chessboard corners in a test image. imgpoints will be appended with the (x, y) pixel position of each of the corners in the image plane with each successful chessboard detection.

I then used the output objpoints and imgpoints to compute the camera calibration and distortion coefficients using the cv2.calibrateCamera() function. I applied this distortion correction to the test image using the cv2.undistort() function and obtained this result:

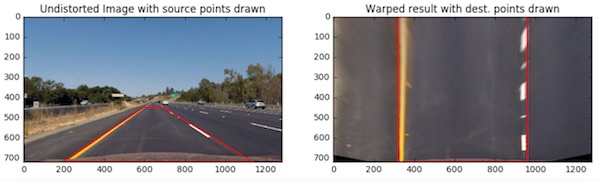

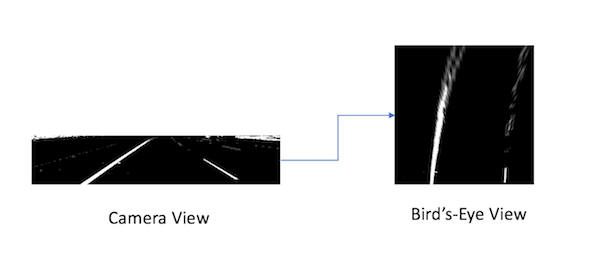

Objects appear smaller the farther they are from the viewpoint, and parallel lines seem to converge to a point when 3D points are projected onto the camera's 2D image plane.

This phenomenon needs to be taken into account when trying to find the parallel lines of a lane. With a perspective transform, we can convert the road image to a birds-eye view, in which it is easier to detect how the lanes curve.

The code for the perspective transformation is contained in line.py.

Below is the outcome of perspective transform:

For the purpose of detecting lane lines, we only need to focus on the regions where we are likely to see the lanes. For this reason, I am cropping the image and doing the further image processing only on certain regions of the image. I also resize the image to smaller dimensions. This helps with making the image processing pipeline faster.

Below is the outcome of cropping the image.

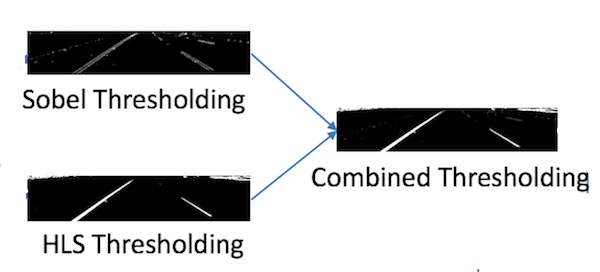

I used two methods of thresholding: Gradient Thresholding & HLS Thresholding.

I used Sobel Kernel for gradient thresholding in both X and Y directions. Since lane lines are likely to be vertical, I put more weight on the gradient in Y direction. I took absolute gradient values and normalized them for appropriate scaling.

In addition, I used the HLS color space to handle cases where the road is too bright or too dark. I discarded the L channel, which contains the lightness information (bright or dark), and put more emphasis on the H and S channels. This way I could take the lightness of the pixel out of the equation.

Then I combined the gradient and HLS (color) thresholding into one final thresholded binary image. The code for this thresholding approach is contained in threshold.py.

Below is the outcome of thresholding:

When we apply the perspective transform (as discussed above), we get a birds-eye view. Here the road is assumed to be a flat plane. This isn't strictly true, but it is still a good approximation. I take 4 points in a trapezoidal shape that would represent a rectangle when looking down on the road from above. This allows us to eliminate the phenomenon by which parallel lines seem to converge to a single point. In the birds-eye view, we can see the lane lines as actual parallel lines.

The code for this is contained in line.py.

Below is the outcome of transforming road to birds-eye view perspective:

As we can see, it is very difficult to tell in the camera view that the lane is curving to the right. In the birds-eye view, however, we can easily see that the parallel lane lines curve to the right a few meters ahead.

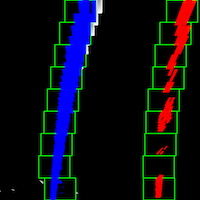

Once we have detected lane lines in earlier frames, we can use that information and place a sliding window around the line centers to find and follow the lane lines from the bottom to the top of the image/frame. This allows a highly restricted search and saves a lot of processing time.

However, it is not always possible to detect lane lines from the history saved in the Line class object. If we lose track of the lines, we can go back to thresholding and begin searching for the lane lines from scratch.

I do this using two functions:

Below is the visualization of Sliding Window search:

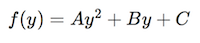

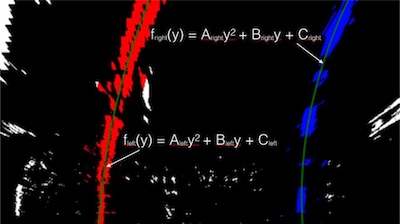
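A from-scratch sliding window search might look like the sketch below. Seeding the windows from a column histogram of the lower half of the image is a common technique and is an assumption here, as are the function name and the default parameters; the Line class in line.py may seed and track the windows differently.

```python
import numpy as np


def sliding_window_search(binary_warped, n_windows=9, margin=50, min_pixels=30):
    """Collect left and right lane pixels in a birds-eye binary image.

    Returns ((left_x, left_y), (right_x, right_y)) as arrays of pixel
    coordinates. All parameter defaults are assumed values.
    """
    h, w = binary_warped.shape
    # Column sums of the lower half: peaks mark where each line starts.
    histogram = binary_warped[h // 2:, :].sum(axis=0)
    midpoint = w // 2
    x_current = [int(np.argmax(histogram[:midpoint])),
                 int(np.argmax(histogram[midpoint:])) + midpoint]

    nonzero_y, nonzero_x = binary_warped.nonzero()
    window_height = h // n_windows
    lane_indices = [[], []]

    # Step the windows from the bottom of the image to the top.
    for window in range(n_windows):
        y_low = h - (window + 1) * window_height
        y_high = h - window * window_height
        in_rows = (nonzero_y >= y_low) & (nonzero_y < y_high)
        for side in (0, 1):
            x_low = x_current[side] - margin
            x_high = x_current[side] + margin
            good = np.flatnonzero(
                in_rows & (nonzero_x >= x_low) & (nonzero_x < x_high))
            lane_indices[side].append(good)
            # Re-center the next window if enough pixels were found.
            if len(good) > min_pixels:
                x_current[side] = int(nonzero_x[good].mean())

    left = np.concatenate(lane_indices[0])
    right = np.concatenate(lane_indices[1])
    return ((nonzero_x[left], nonzero_y[left]),
            (nonzero_x[right], nonzero_y[right]))
```

When a fit from the previous frame is available, the histogram step can be skipped and the windows placed around the previous line centers instead.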

Now that we have located the lane line pixels, we can use their x and y pixel positions to fit a second-order polynomial curve: f(y) = Ay^2 + By + C

I am fitting for f(y) rather than f(x) because the lane lines in the warped image are nearly vertical and may have the same x value for more than one y value.
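The fit itself is short with NumPy. In this sketch, `fit_lane_line` and `evaluate_fit` are hypothetical helper names; the key point is that y is passed as the independent variable.

```python
import numpy as np


def fit_lane_line(x_pixels, y_pixels):
    """Fit x = A*y**2 + B*y + C and return the coefficients [A, B, C]."""
    # y is the independent variable because the lines are nearly vertical.
    return np.polyfit(y_pixels, x_pixels, 2)


def evaluate_fit(coeffs, y):
    """x position of the fitted line at the given y value(s)."""
    return np.polyval(coeffs, y)
```

Evaluating the fit at every row, for example `evaluate_fit(coeffs, np.arange(height))`, gives the x positions needed to draw the line.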

Parameter Tuning is tricky, especially for the challenge video.

In my pipeline, parameters can be tuned in process.py.

To Update parameters, modify this code: Parameter Tuning

I implemented a GUI tool - similar to the one I had implemented in Basic Lane Line Detection.

This was very helpful to determine better parameters for the Challenge Video. The code for this tool is contained in guiutils.py and find_parameters.py

- Below is a demo of the GUI tool:

Here I am trying to determine the correct threshold values for Gradient in X direction and Gradient in Y direction.

Once we have detected the lane lines, we can illustrate the lane on the current frame/image, by overlaying color pixels on top of the image.

I am illustrating Lane Lines, Measurement Info and the Birds-Eye View on each frame, using the following functions:

With everything combined, when we run the pipeline on an image, we get the following image as the final result.

YouTube Link: https://youtu.be/Boe5HvpGnMQ

Getting good results on the Harder Challenge Video was very difficult. I did not try the convolution method, but I am leaving it for future experiments. I love Computer Vision, but having tried both the Deep Learning approach to driving the car autonomously and the Computer Vision approach to detecting lane lines, the experience has made me really appreciate the potential of Deep Learning in the Self-Driving Car domain.