Here is a short recap of the project steps and the respective results:

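The first task involved tracking a single vehicle in 3D by implementing a Kalman filter. A constant velocity model was used, with state $(x, y, z, v_x, v_y, v_z)^T$, characterized by the following system matrix:

$$
\mathbf{F}(\Delta t)=\begin{pmatrix} 1 & 0 & 0 & \Delta t & 0 & 0 \\ 0 & 1 & 0 & 0 & \Delta t & 0 \\ 0 & 0 & 1 & 0 & 0 & \Delta t \\ 0 & 0 & 0 & 1 & 0 & 0 \\ 0 & 0 & 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 0 & 0 & 1 \end{pmatrix}
$$

and by the following process noise covariance: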

$$
\mathbf{Q}(\Delta t, q)=\begin{pmatrix}
\frac{1}{3} (\Delta t)^3 q & 0 & 0 & \frac{1}{2} (\Delta t)^2 q & 0 & 0 \\
0 & \frac{1}{3} (\Delta t)^3 q & 0 & 0 & \frac{1}{2} (\Delta t)^2 q & 0 \\
0 & 0 & \frac{1}{3} (\Delta t)^3 q & 0 & 0 & \frac{1}{2} (\Delta t)^2 q \\
\frac{1}{2} (\Delta t)^2 q & 0 & 0 & \Delta t\, q & 0 & 0 \\
0 & \frac{1}{2} (\Delta t)^2 q & 0 & 0 & \Delta t\, q & 0 \\
0 & 0 & \frac{1}{2} (\Delta t)^2 q & 0 & 0 & \Delta t\, q
\end{pmatrix}
$$

where $q$ is the acceleration uncertainty, a design parameter set to

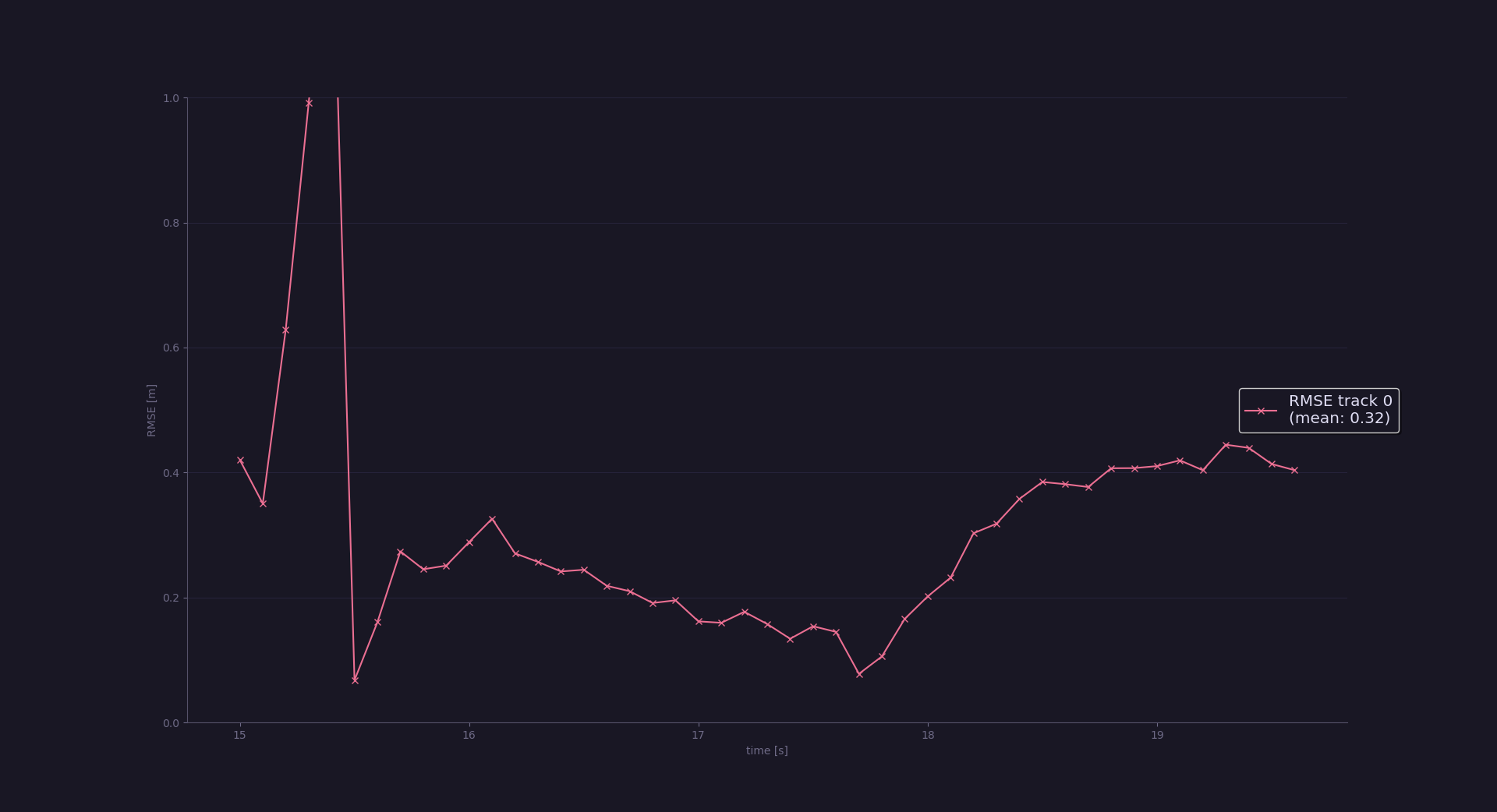

In this step only a single vehicle was tracked, using only the measurements from the lidar sensor.
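The following minimal NumPy sketch makes the predict/update cycle of this step concrete. It uses the constant velocity model and the process noise $\mathbf{Q}$ described above. It assumes the state ordering $(x, y, z, v_x, v_y, v_z)$ and a lidar that measures the 3D position directly in vehicle coordinates, so the measurement matrix is $H = [I_3 \; 0]$. The function names and the example value `q = 3.0` are illustrative and are not taken from the project's `filter.py`.

```python
import numpy as np


def system_matrix(dt: float) -> np.ndarray:
    """Constant velocity system matrix F for the state (x, y, z, vx, vy, vz)."""
    F = np.eye(6)
    F[0:3, 3:6] = dt * np.eye(3)  # position advances by velocity * dt
    return F


def process_noise(dt: float, q: float) -> np.ndarray:
    """Process noise covariance Q(dt, q) in the block form of the matrix above."""
    I3 = np.eye(3)
    return np.block([
        [dt**3 / 3 * q * I3, dt**2 / 2 * q * I3],
        [dt**2 / 2 * q * I3, dt * q * I3],
    ])


def predict(x, P, dt, q):
    """Propagate state and covariance forward by dt seconds."""
    F = system_matrix(dt)
    return F @ x, F @ P @ F.T + process_noise(dt, q)


def update_lidar(x, P, z, R):
    """Linear update with a lidar position measurement z = (px, py, pz).

    Assumption: the lidar measures position directly in vehicle coordinates.
    """
    H = np.hstack([np.eye(3), np.zeros((3, 3))])  # selects the position part of x
    gamma = z - H @ x                  # residual
    S = H @ P @ H.T + R                # residual covariance
    K = P @ H.T @ np.linalg.inv(S)     # Kalman gain
    x_new = x + K @ gamma
    P_new = (np.eye(6) - K @ H) @ P
    return x_new, P_new


# Usage with illustrative values (q = 3.0 is an arbitrary example, not a tuned value)
x0 = np.array([0.0, 0.0, 0.0, 10.0, 0.0, 0.0])
P0 = np.eye(6)
x1, P1 = predict(x0, P0, dt=0.1, q=3.0)
x2, P2 = update_lidar(x1, P1, z=np.array([1.05, 0.0, 0.0]), R=0.01 * np.eye(3))
```

The update pulls the predicted x position (1.0 m) toward the measurement (1.05 m) and shrinks the position variance.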

Here are the results for this step: the RMSE result is below the expected

The second task involved implementing most of the track management algorithm. This required initializing new tracks as well as managing and deleting ghost tracks. For deletion, the following heuristic was implemented, which deletes tracks whose position uncertainty grows beyond a threshold:

$$ \mathbf{P}_{11} > 3^2 \;\lor\; \mathbf{P}_{22} > 3^2 $$
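The deletion condition above can be written as a small check on the covariance diagonal. This is a minimal sketch. It assumes positions in meters and the state ordering $(x, y, z, v_x, v_y, v_z)$, so that $\mathbf{P}_{11}$ and $\mathbf{P}_{22}$ correspond to the zero-based entries `P[0, 0]` and `P[1, 1]`. The `Track` class and the function names are hypothetical stand-ins for the classes in `trackmanagement.py`.

```python
import numpy as np

MAX_POS_VARIANCE = 3.0**2  # (3 m)^2, threshold from the deletion condition


class Track:
    """Hypothetical minimal track: id, state x and covariance P."""

    def __init__(self, track_id: int, x: np.ndarray, P: np.ndarray):
        self.id = track_id
        self.x = x
        self.P = P


def is_too_uncertain(track: Track) -> bool:
    # P[0, 0] and P[1, 1] are the x and y position variances (P_11, P_22 in 1-based notation)
    return track.P[0, 0] > MAX_POS_VARIANCE or track.P[1, 1] > MAX_POS_VARIANCE


def delete_uncertain_tracks(tracks):
    """Keep only the tracks whose position uncertainty is still acceptable."""
    return [t for t in tracks if not is_too_uncertain(t)]


confident = Track(1, np.zeros(6), np.eye(6))
drifting = Track(2, np.zeros(6), np.diag([10.0, 1.0, 1.0, 1.0, 1.0, 1.0]))
print([t.id for t in delete_uncertain_tracks([confident, drifting])])  # [1]
```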

Here are the results for this step: the RMSE result is below the expected

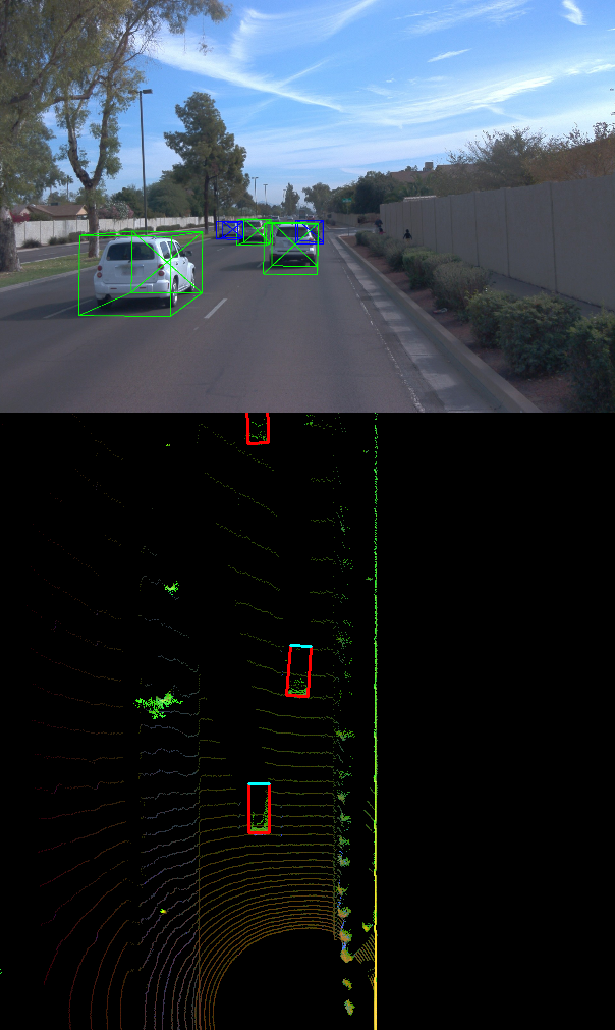

The third task involved implementing the Simple Nearest Neighbour (SNN) data association algorithm. This is where the project became more complex: multiple vehicles are tracked simultaneously, which makes debugging more difficult. SNN is implemented via an association matrix A. Filling it requires computing the Mahalanobis distance for each track/measurement pair; each track is then associated with its nearest measurement.
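The SNN procedure can be sketched as follows. First, fill the association matrix with squared Mahalanobis distances. Then repeatedly pick the smallest entry and strike out its row and column, so that each track and each measurement is used at most once. This sketch assumes a linear measurement model (for example lidar with $H = [I_3 \; 0]$). The optional `gate` threshold and all function names are illustrative assumptions, not the API of the project's `association.py`.

```python
import numpy as np


def mahalanobis_sq(x, P, z, H, R):
    """Squared Mahalanobis distance between a track (x, P) and a measurement z."""
    gamma = z - H @ x             # residual
    S = H @ P @ H.T + R           # residual covariance
    return float(gamma @ np.linalg.solve(S, gamma))


def associate_snn(tracks, measurements, H, R, gate=np.inf):
    """Greedy simple nearest neighbour association.

    tracks: list of (x, P) tuples; measurements: list of z vectors.
    Returns a list of (track_index, measurement_index) pairs.
    """
    A = np.full((len(tracks), len(measurements)), np.inf)  # association matrix
    for i, (x, P) in enumerate(tracks):
        for j, z in enumerate(measurements):
            d2 = mahalanobis_sq(x, P, z, H, R)
            if d2 < gate:                # pairs outside the gate stay at inf
                A[i, j] = d2

    pairs = []
    while np.isfinite(A).any():
        i, j = np.unravel_index(np.argmin(A), A.shape)
        pairs.append((int(i), int(j)))
        A[i, :] = np.inf                 # track i is now taken
        A[:, j] = np.inf                 # measurement j is now taken
    return pairs


H = np.hstack([np.eye(3), np.zeros((3, 3))])   # lidar-like position measurement
R = 0.1 * np.eye(3)
tracks = [(np.array([0.0, 0, 0, 0, 0, 0]), np.eye(6)),
          (np.array([10.0, 0, 0, 0, 0, 0]), np.eye(6))]
measurements = [np.array([10.2, 0.0, 0.0]), np.array([0.1, 0.0, 0.0])]
print(associate_snn(tracks, measurements, H, R))  # [(0, 1), (1, 0)]
```

Because the assignment is greedy, it is cheap but not globally optimal. When two tracks compete for the same measurement, the pair with the smallest distance wins.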

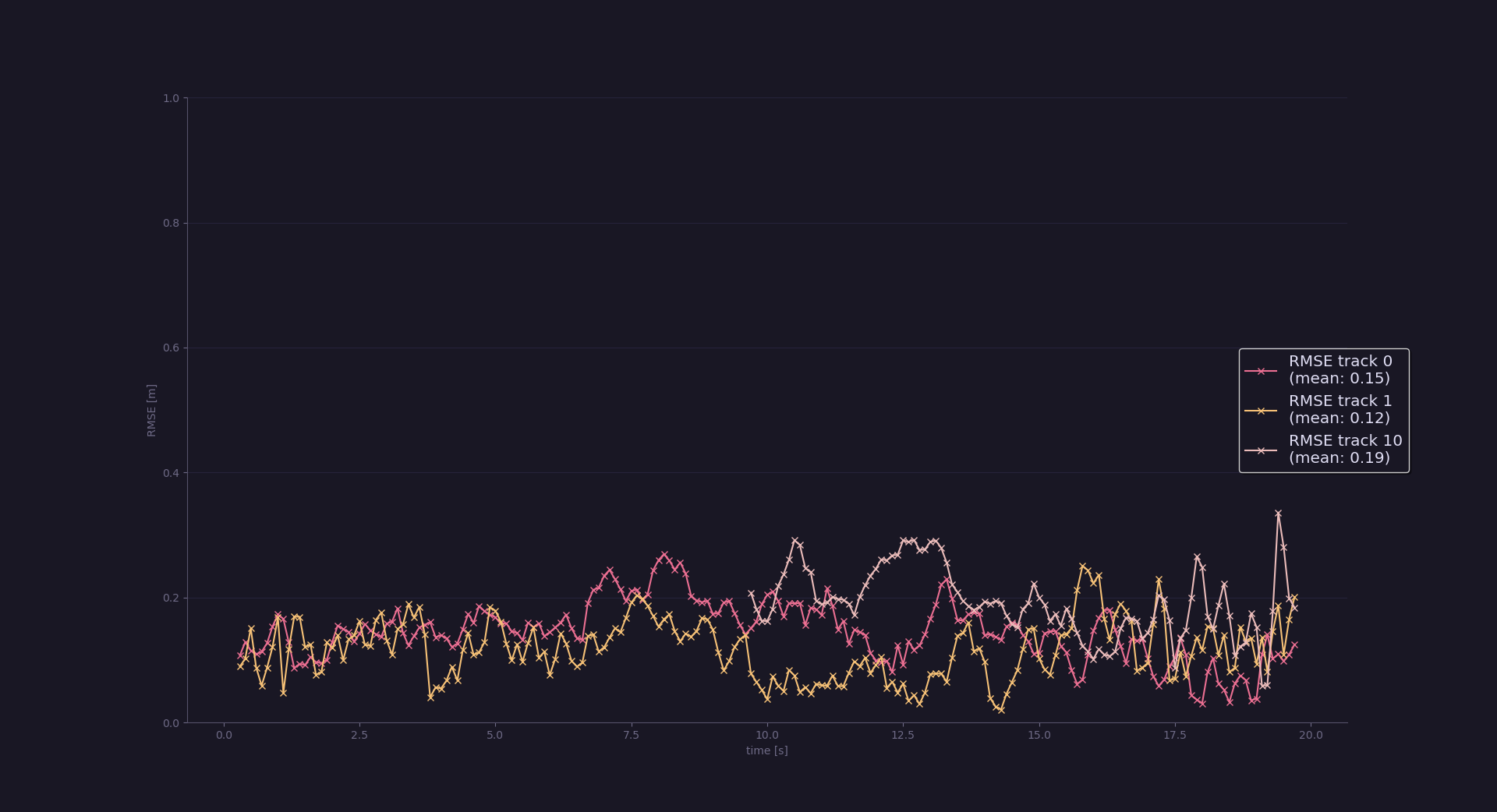

Here are the results for this step: the RMSE plot shows only 3 tracks and no ghost tracks, as required by the project rubric.

The final task extended step 3 by including camera measurements in the filter. This required including camera measurements when initializing tracks, as well as implementing the non-linear measurement function h:

where the camera calibration parameters $f$ and $c$ were provided.
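The sketch below shows one possible form of the camera measurement function, together with the Jacobian needed for the extended Kalman filter update. It rests on several assumptions:

- A 4×4 homogeneous transform `veh_to_sens` maps vehicle to camera coordinates.
- The camera frame's x-axis points along the optical axis, with y to the left and z up.
- The projection is $u = c_i - f_i\, p_y / p_x$, $v = c_j - f_j\, p_z / p_x$.

This sign convention is itself an assumption; check it against the calibration conventions used in `measurements.py`.

```python
import numpy as np


def camera_h(x, veh_to_sens, f_i, f_j, c_i, c_j):
    """Project the track position into image coordinates (u, v)."""
    p_cam = (veh_to_sens @ np.append(x[0:3], 1.0))[0:3]  # vehicle -> camera frame
    px, py, pz = p_cam
    if px <= 0:
        raise ValueError("object is not in front of the camera")
    return np.array([c_i - f_i * py / px,
                     c_j - f_j * pz / px])


def camera_jacobian(x, veh_to_sens, f_i, f_j):
    """Jacobian of camera_h with respect to the 6D state; velocity columns are zero."""
    px, py, pz = (veh_to_sens @ np.append(x[0:3], 1.0))[0:3]
    # partial derivatives of (u, v) with respect to the camera-frame position
    J_cam = np.array([[f_i * py / px**2, -f_i / px, 0.0],
                      [f_j * pz / px**2, 0.0, -f_j / px]])
    H = np.zeros((2, 6))
    H[:, 0:3] = J_cam @ veh_to_sens[0:3, 0:3]  # chain rule through the rotation
    return H


# Illustrative calibration values only
T = np.eye(4)
x = np.array([10.0, 2.0, 1.0, 0.0, 0.0, 0.0])
print(camera_h(x, T, f_i=1000.0, f_j=1000.0, c_i=500.0, c_j=300.0))  # [300. 200.]
```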

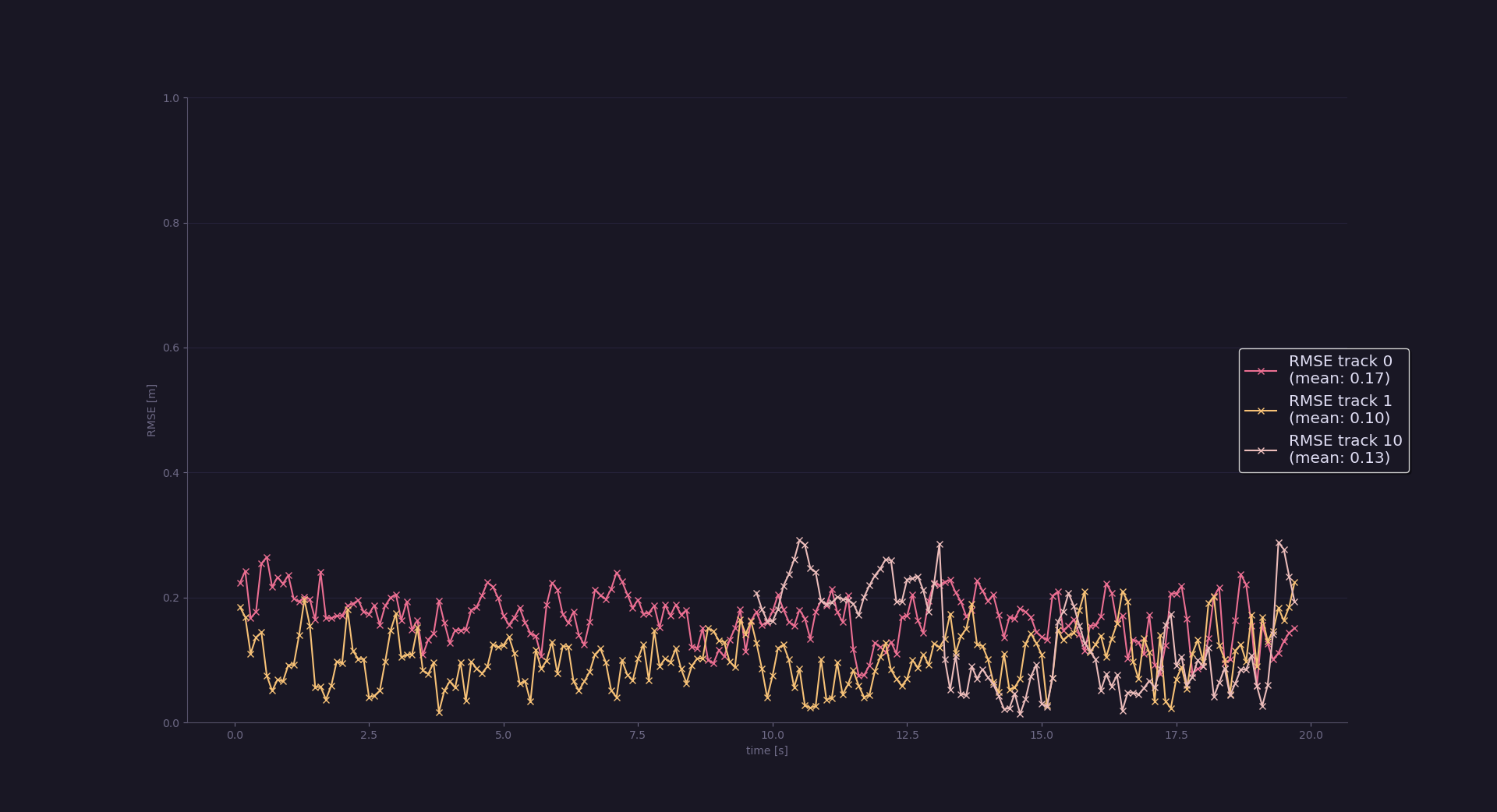

Here are the results for the final step: the RMSE is below

The final step clearly shows the benefits of camera-lidar fusion: overall tracking accuracy improves, as shown by the last two plots. Moreover, in real scenarios, using multiple (and even redundant) sensors can improve the safety of a self-driving vehicle.

In my view, one major challenge a sensor fusion system will face in real-life scenarios has to do with sensor timing. This did not show up in this project because curated data from Waymo was provided. In real life, however, we may encounter poorly synchronized sensors or delayed timestamps, which can degrade the filter's performance.

We saw improvements when integrating camera measurements, but the camera has a limited field of view compared to the lidar. In my view, it could therefore be a good idea to add cameras covering the blind spots of the front camera. This should allow ghost tracks on the sides to be discarded more quickly.

Another idea could be to use an IMU to estimate the current velocity of our vehicle. The lectures explained that the filter parameters have to be tuned for the current driving scenario. With an IMU, they could be adjusted dynamically depending on the vehicle's speed, potentially improving the reliability of the tracking system.

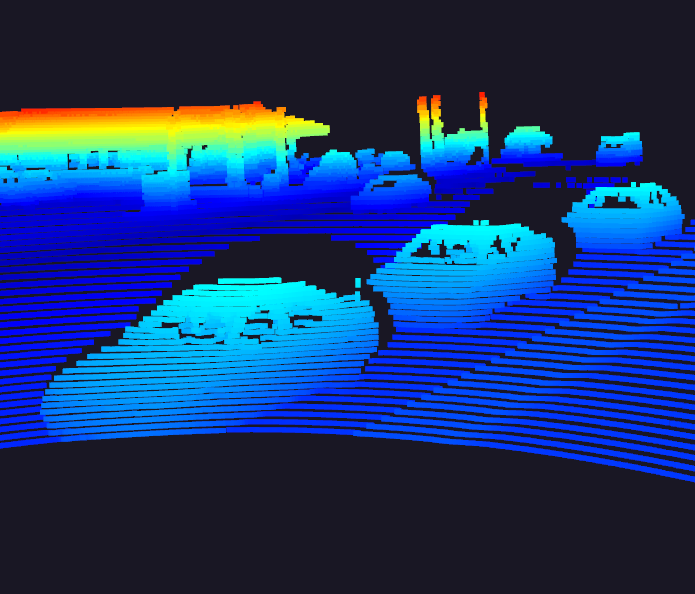

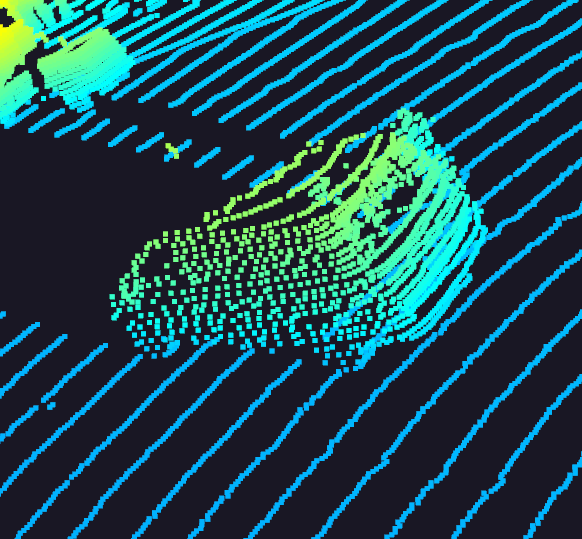

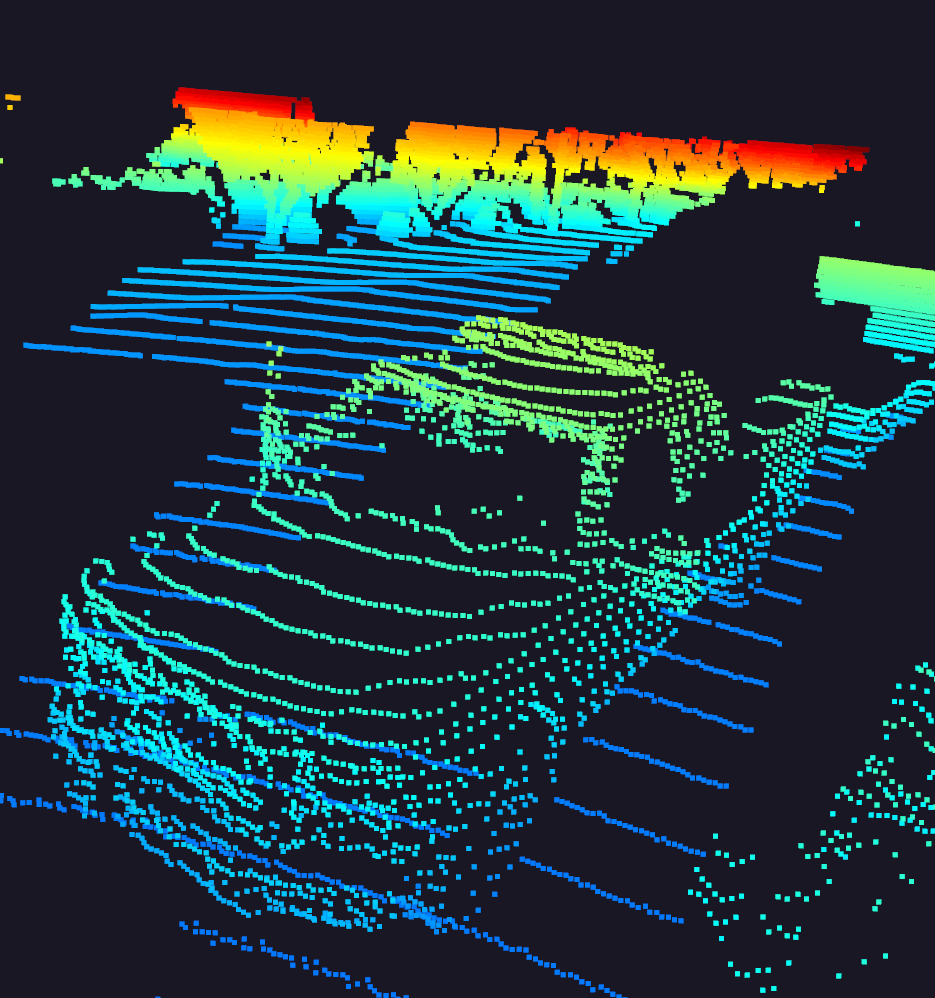

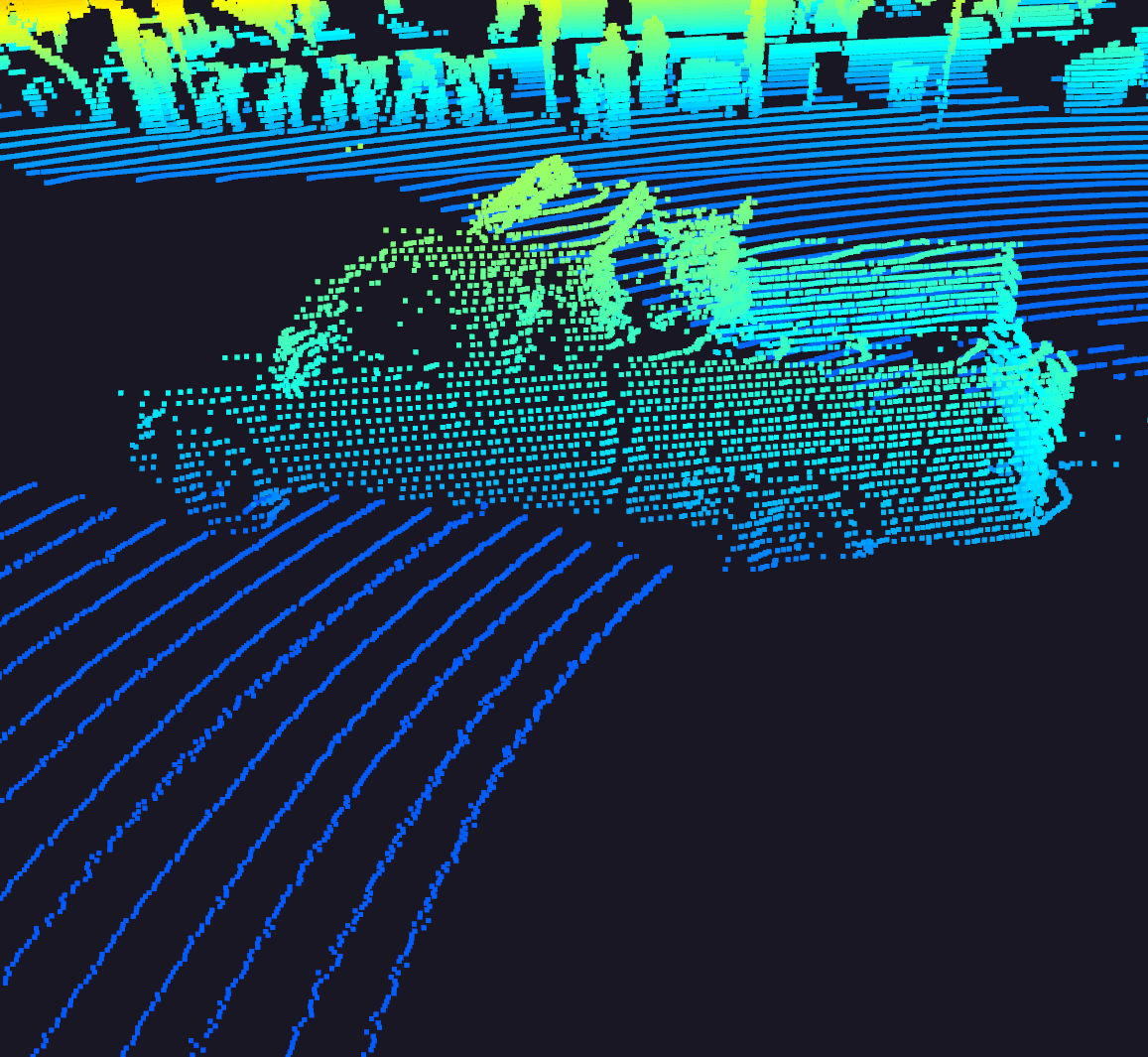

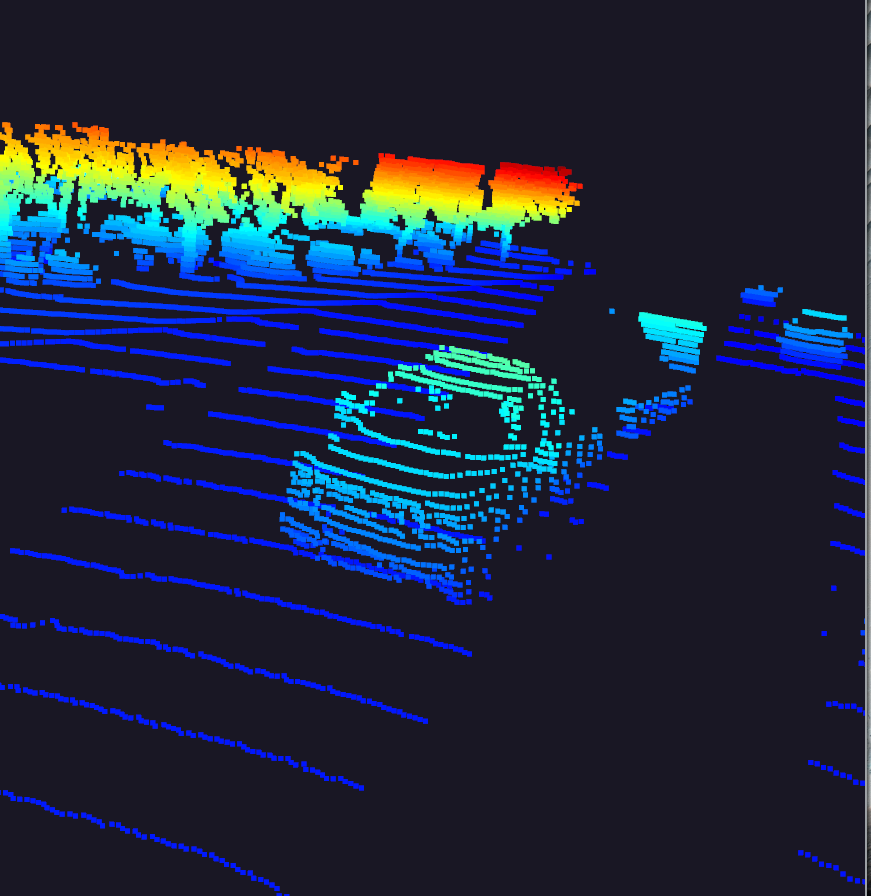

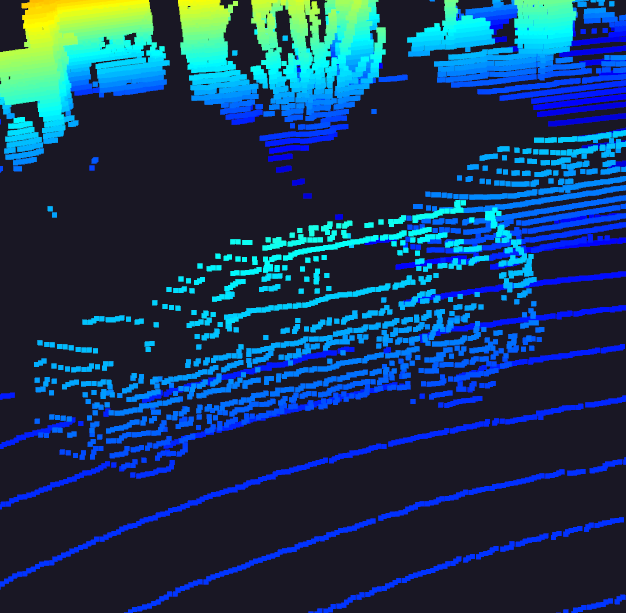

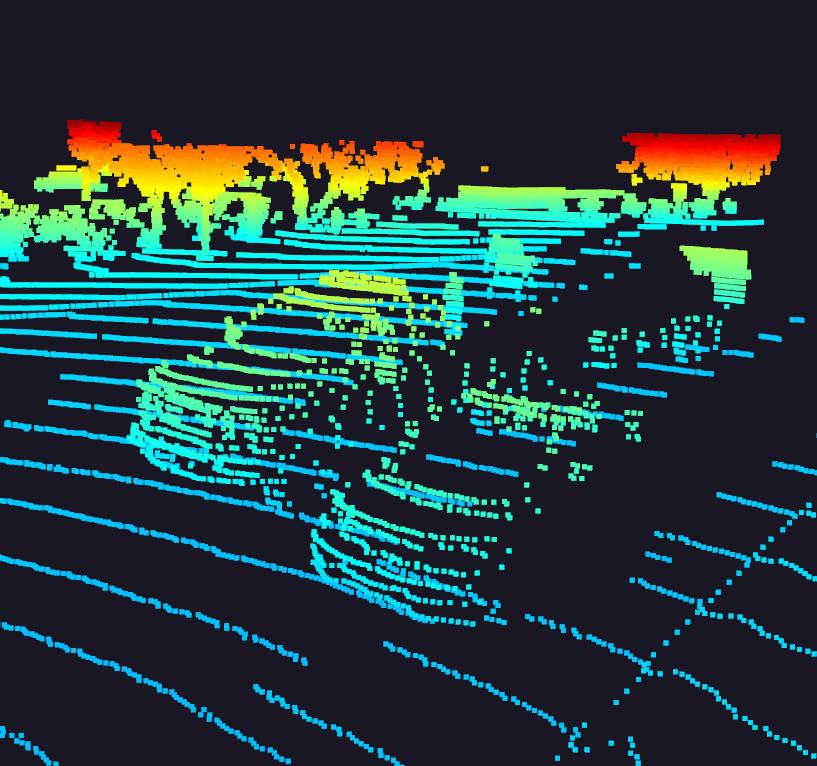

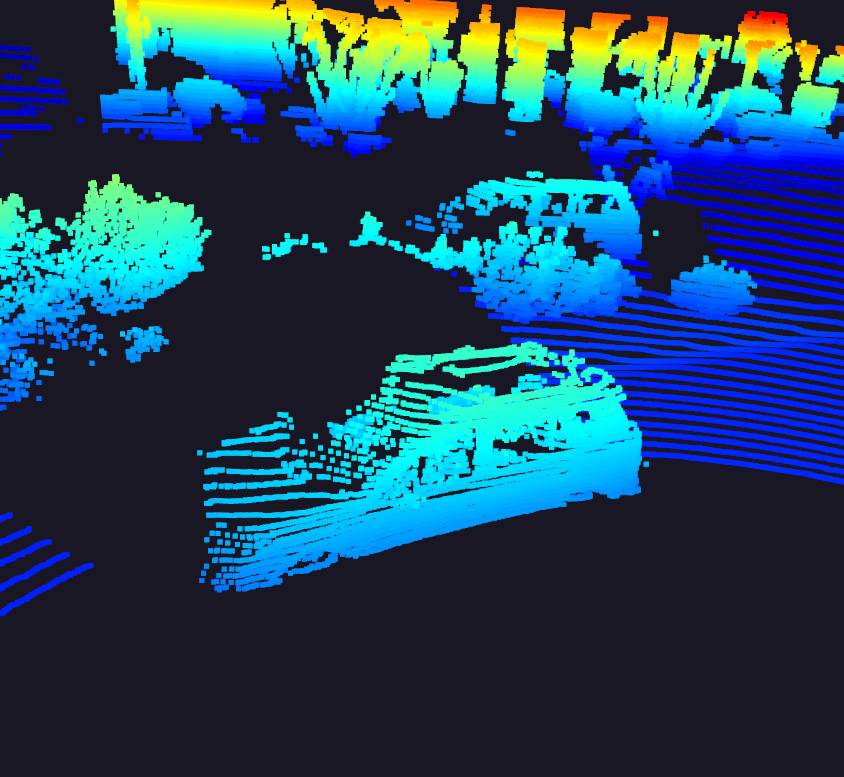

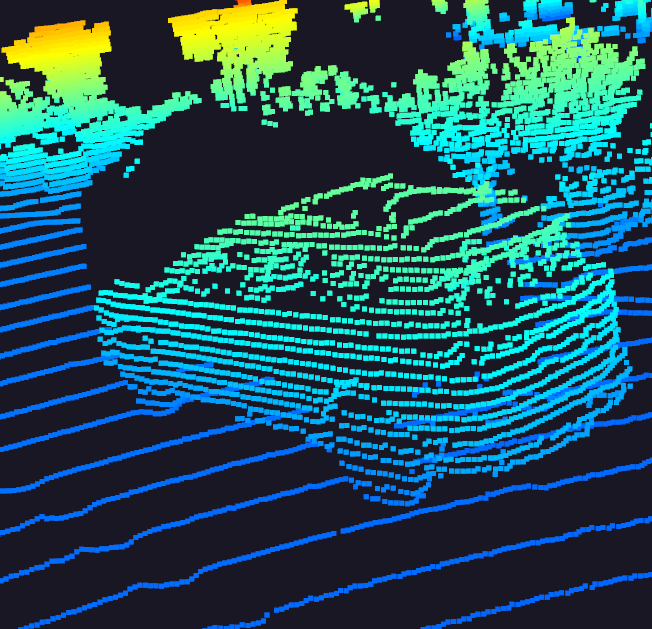

The following are some car examples gathered from the point clouds in the dataset by setting `exec_visualization = ["show_pcl"]`:

*Car Samples from PCL*

Some features that stand out and could be useful for object detection:

- Aspect ratio: all vehicles appear to have a similar aspect ratio, especially in a BEV representation, including would-be outliers such as pickup trucks.

- Windshields: glass does not reflect the laser beam and produces no energy return, so in all vehicles the windshield appears as a very characteristic "hole" in the point cloud.

- Side mirrors: despite being small, they stand out quite a lot. I also suspect that, being reflective, they produce a stronger intensity return.

- Tires: tires are easily cut off in the top lidar due to its limited field of view, but they still manage to stand out.

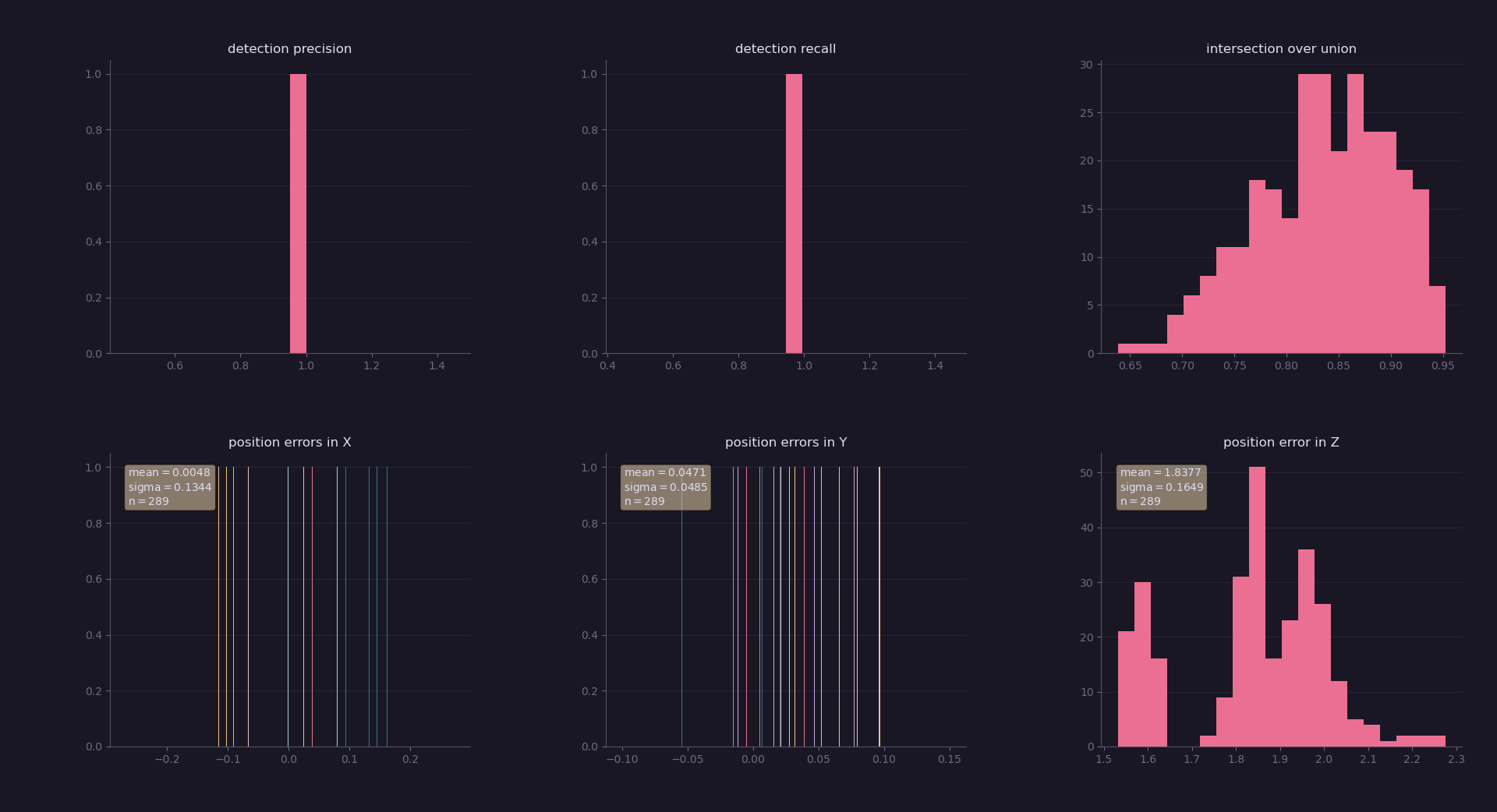

The final results are precision = 0.9506578947368421 and recall = 0.9444444444444444, with an IoU threshold of 0.5.
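Precision and recall follow from three counts:

- true positives: detections matched to a label with IoU at or above the threshold;
- false positives: unmatched detections;
- false negatives: unmatched labels.

The sketch below is a simplification. It uses axis-aligned BEV boxes `(x_min, y_min, x_max, y_max)` and greedy matching, whereas the project evaluates rotated boxes. The function names and the box format are therefore assumptions.

```python
def iou_axis_aligned(a, b):
    """IoU of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def count_matches(detections, labels, iou_threshold=0.5):
    """Greedily match each detection to its best unmatched label; return (tp, fp, fn)."""
    matched = set()
    tp = 0
    for det in detections:
        best_j, best_iou = None, 0.0
        for j, lab in enumerate(labels):
            if j in matched:
                continue
            iou = iou_axis_aligned(det, lab)
            if iou > best_iou:
                best_j, best_iou = j, iou
        if best_j is not None and best_iou >= iou_threshold:
            matched.add(best_j)
            tp += 1
    return tp, len(detections) - tp, len(labels) - tp


def precision_recall(tp, fp, fn):
    """precision = TP / (TP + FP), recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


detections = [(0, 0, 2, 2), (10, 10, 11, 11)]
labels = [(0, 0, 2, 2.2), (5, 5, 7, 7)]
tp, fp, fn = count_matches(detections, labels)
print(tp, fp, fn)                    # 1 1 1
print(precision_recall(tp, fp, fn))  # (0.5, 0.5)
```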

📦project

┣ 📂dataset --> contains the Waymo Open Dataset sequences

┃

┣ 📂misc

┃ ┣ evaluation.py --> plot functions for tracking visualization and RMSE calculation

┃ ┣ helpers.py --> misc. helper functions, e.g. for loading / saving binary files

┃ ┣ objdet_tools.py --> object detection functions without student tasks

┃ ┗ params.py --> parameter file for the tracking part

┃

┣ 📂results --> binary files with pre-computed intermediate results

┃

┣ 📂student

┃ ┣ association.py --> data association logic for assigning measurements to tracks incl. student tasks

┃ ┣ filter.py --> extended Kalman filter implementation incl. student tasks

┃ ┣ measurements.py --> sensor and measurement classes for camera and lidar incl. student tasks

┃ ┣ objdet_detect.py --> model-based object detection incl. student tasks

┃ ┣ objdet_eval.py --> performance assessment for object detection incl. student tasks

┃ ┣ objdet_pcl.py --> point-cloud functions, e.g. for birds-eye view incl. student tasks

┃ ┗ trackmanagement.py --> track and track management classes incl. student tasks

┃

┣ 📂tools --> external tools

┃ ┣ 📂objdet_models --> models for object detection

┃ ┃ ┃

┃ ┃ ┣ 📂darknet

┃ ┃ ┃ ┣ 📂config

┃ ┃ ┃ ┣ 📂models --> darknet / yolo model class and tools

┃ ┃ ┃ ┣ 📂pretrained --> copy pre-trained model file here

┃ ┃ ┃ ┃ ┗ complex_yolov4_mse_loss.pth

┃ ┃ ┃ ┣ 📂utils --> various helper functions

┃ ┃ ┃

┃ ┃ ┗ 📂resnet

┃ ┃ ┃ ┣ 📂models --> fpn_resnet model class and tools

┃ ┃ ┃ ┣ 📂pretrained --> copy pre-trained model file here

┃ ┃ ┃ ┃ ┗ fpn_resnet_18_epoch_300.pth

┃ ┃ ┃ ┣ 📂utils --> various helper functions

┃ ┃ ┃

┃ ┗ 📂waymo_reader --> functions for light-weight loading of Waymo sequences

┃

┣ basic_loop.py

┣ loop_over_dataset.py

To create a local copy of the project, click on "Code" and then "Download ZIP". Alternatively, you can of course use GitHub Desktop or Git Bash.

I recommend using Anaconda to create an isolated Python environment for the project. All dependencies required to recreate the project environment `project-dev` are listed in the file `environment.yml`.

Create the env:

`conda env create --file environment.yml`

Activate the env:

`conda activate project-dev`

The Waymo Open Dataset Reader is a very convenient toolbox that allows you to access sequences from the Waymo Open Dataset without the need of installing all of the heavy-weight dependencies that come along with the official toolbox. The installation instructions can be found in tools/waymo_reader/README.md.

This project makes use of three different sequences to illustrate the concepts of object detection and tracking. These are:

- Sequence 1: `training_segment-1005081002024129653_5313_150_5333_150_with_camera_labels.tfrecord`
- Sequence 2: `training_segment-10072231702153043603_5725_000_5745_000_with_camera_labels.tfrecord`
- Sequence 3: `training_segment-10963653239323173269_1924_000_1944_000_with_camera_labels.tfrecord`

To download these files, you first have to register with the Waymo Open Dataset (Open Dataset – Waymo) if you have not already done so, making sure to list "Udacity" as your institution.

Once you have done so, please click here to access the Google Cloud Container that holds all the sequences. Once you have been cleared for access by Waymo (which might take up to 48 hours), you can download the individual sequences.

The sequences listed above can be found in the folder "training". Please download them and put the tfrecord-files into the dataset folder of this project.

The object detection methods used in this project use pre-trained models which have been provided by the original authors. They can be downloaded here (darknet) and here (fpn_resnet). Once downloaded, please copy the model files into the paths /tools/objdet_models/darknet/pretrained and /tools/objdet_models/fpn_resnet/pretrained respectively.
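Before running `loop_over_dataset.py`, it can save some debugging to check that the sequences and model weights ended up in the expected places. The hypothetical helper below (`find_missing_files` is not part of the project) uses the file names listed above. Note that the directory tree shows the ResNet folder as `resnet`, while the text uses `fpn_resnet`, so adjust `MODELS` to match your checkout.

```python
from pathlib import Path

# File names from the lists above; the fpn_resnet path follows the text and may
# need to be changed to "resnet" to match the directory tree.
SEQUENCES = [
    "training_segment-1005081002024129653_5313_150_5333_150_with_camera_labels.tfrecord",
    "training_segment-10072231702153043603_5725_000_5745_000_with_camera_labels.tfrecord",
    "training_segment-10963653239323173269_1924_000_1944_000_with_camera_labels.tfrecord",
]
MODELS = [
    "tools/objdet_models/darknet/pretrained/complex_yolov4_mse_loss.pth",
    "tools/objdet_models/fpn_resnet/pretrained/fpn_resnet_18_epoch_300.pth",
]


def find_missing_files(project_root="."):
    """Return the expected input files that are not present under project_root."""
    root = Path(project_root)
    expected = [root / "dataset" / name for name in SEQUENCES]
    expected += [root / rel for rel in MODELS]
    return [path for path in expected if not path.is_file()]


if __name__ == "__main__":
    missing = find_missing_files()
    for path in missing:
        print(f"missing: {path}")
    if not missing:
        print("all sequences and model files found")
```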

In the main file loop_over_dataset.py, you can choose which steps of the algorithm should be executed. If you want to call a specific function, you simply need to add the corresponding string literal to one of the following lists:

- `exec_data`: controls the execution of steps related to sensor data.
  - `pcl_from_rangeimage` transforms the Waymo Open Data range image into a 3D point cloud
  - `load_image` returns the image of the front camera

- `exec_detection`: controls which steps of model-based 3D object detection are performed.
  - `bev_from_pcl` transforms the point cloud into a fixed-size birds-eye view perspective
  - `detect_objects` executes the actual detection and returns a set of objects (only vehicles)
  - `validate_object_labels` decides which ground-truth labels should be considered (e.g. based on difficulty or visibility)
  - `measure_detection_performance` contains methods to evaluate detection performance for a single frame

In case you do not include a specific step into the list, pre-computed binary files will be loaded instead. This enables you to run the algorithm and look at the results even without having implemented anything yet. The pre-computed results for the mid-term project need to be loaded using this link. Please use the folder darknet first. Unzip the file within and put its content into the folder results.

- `exec_tracking`: controls the execution of the object tracking algorithm.

- `exec_visualization`: controls the visualization of results.
  - `show_range_image` displays two LiDAR range image channels (range and intensity)
  - `show_labels_in_image` projects ground-truth boxes into the front camera image
  - `show_objects_and_labels_in_bev` projects detected objects and label boxes into the birds-eye view
  - `show_objects_in_bev_labels_in_camera` displays a stacked view with labels inside the camera image on top and the birds-eye view with detected objects on the bottom
  - `show_tracks` displays the tracking results
  - `show_detection_performance` displays the performance evaluation based on all detected objects
  - `make_tracking_movie` renders an output movie of the object tracking results
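As an illustration, a configuration for a detection-only run might look like this in `loop_over_dataset.py`. The list names and string literals come from the descriptions above; the particular selection is only an example.

```python
# Example selection for a detection-only run (illustrative, not a required setup)
exec_data = ["pcl_from_rangeimage", "load_image"]
exec_detection = [
    "bev_from_pcl",
    "detect_objects",
    "validate_object_labels",
    "measure_detection_performance",
]
exec_tracking = []  # tracking step not executed in this example
exec_visualization = ["show_objects_in_bev_labels_in_camera"]  # or ["show_pcl"] to inspect point clouds

# Steps left out of a list fall back to the pre-computed binary files in results/
```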

Even without solving any of the tasks, the project code can be executed.

The final project uses pre-computed lidar detections in order for all students to have the same input data. If you use the workspace, the data is prepared there already. Otherwise, download the pre-computed lidar detections (~1 GB), unzip them and put them in the folder results.

Parts of this project are based on the following repositories: