Neural-network-based Extended Kalman Filter (EKF) localization. For the reason behind the name, google "Duke cone" :)

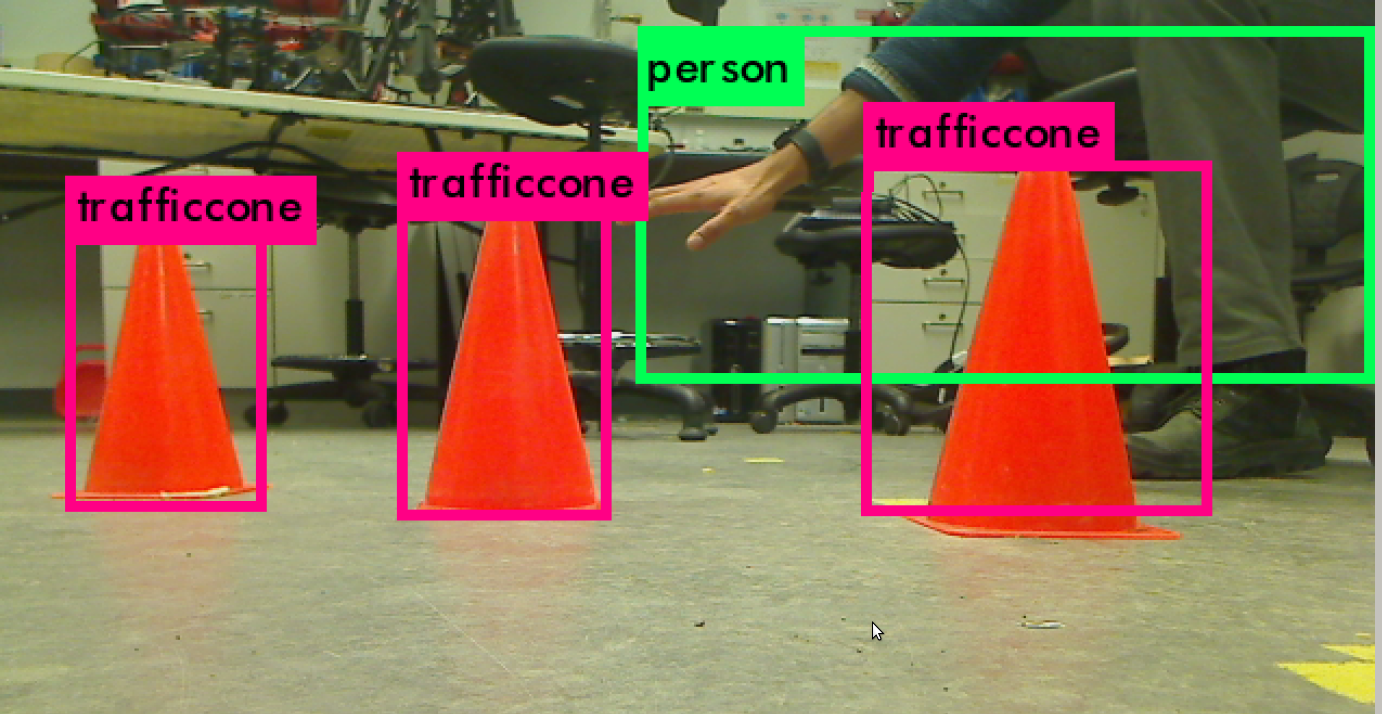

YOLO was trained on traffic cone images.

- Traffic cone dataset: collected from Google

- YOLO model: trained on Pascal VOC plus one additional class (traffic cone)
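This README does not describe how a cone detection is turned into a measurement for the filter. One common approach, shown here only as a hypothetical sketch, combines the horizontal pixel position of the YOLO bounding-box centre with the Kinect depth at that pixel through a pinhole camera model. The function name is hypothetical, and the intrinsics `fx = 525.0`, `cx = 319.5` are commonly quoted nominal values for the Kinect's 640x480 RGB camera, not values taken from this project.

```python
import math


def detection_to_range_bearing(u_center, depth_m, fx, cx):
    """Hypothetical helper: convert one cone detection into (range, bearing).

    u_center: horizontal pixel coordinate of the bounding-box centre
    depth_m:  depth (distance along the optical axis) at that pixel, in metres
    fx, cx:   horizontal focal length and principal point, in pixels (assumed)
    """
    # Pinhole model: lateral offset in the camera frame (x right, z forward).
    x_cam = (u_center - cx) * depth_m / fx
    z_cam = depth_m
    # Bearing is positive to the left (counterclockwise), so a cone to the
    # right of the image centre yields a negative bearing.
    bearing = math.atan2(-x_cam, z_cam)
    rng = math.hypot(x_cam, z_cam)
    return rng, bearing


# Example: a cone centred 525 px right of the principal point, 2 m away.
rng, bearing = detection_to_range_bearing(319.5 + 525.0, 2.0, fx=525.0, cx=319.5)
```

Because the Kinect reports depth along the optical axis rather than the straight-line distance, the range is recomputed from both camera-frame coordinates instead of using the depth directly.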

All code was developed in Python (except the external motion capture code) with Robot Operating System (ROS) wrappers, and was tested on Ubuntu 14.04.

Demo video: https://youtu.be/OcQGvapoJCg

This project relies on the following hardware and open-source projects:

- TurtleBot robot

- NVIDIA Titan X GPU

- Mocap_optitrack

This project implements an object-based localization method using convolutional neural networks. The platform used is the TurtleBot, a two-wheeled robot equipped with a Microsoft Kinect camera and depth sensor, as well as an on-board gyroscope and wheel encoders.

Localization is a key problem in building truly autonomous robots: a robot that cannot determine where it is will struggle to decide what to do next. To localize itself, a robot relies on sensory information that provides feedback about its current location in the world. In this project we experimented with object-based localization, as opposed to traditional feature-based approaches.
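The filter equations and measurement model are not spelled out in this README. As a minimal sketch of how the sensors listed above could feed an EKF, the block below assumes a unicycle motion model driven by wheel-encoder speed and gyroscope yaw rate, and a range-bearing measurement to a traffic cone at a known map position. All function names, noise covariances, and the cone position are hypothetical; this is a textbook EKF, not the project's actual code.

```python
import numpy as np


def wrap_angle(a):
    """Wrap an angle (radians) to the interval [-pi, pi)."""
    return (a + np.pi) % (2.0 * np.pi) - np.pi


def ekf_predict(mu, sigma, v, omega, dt, Q):
    """Prediction step with a unicycle motion model.

    mu = [x, y, theta] is the pose estimate, sigma its 3x3 covariance.
    v is the forward speed (e.g. from the wheel encoders), omega the yaw
    rate (e.g. from the gyroscope), Q an assumed 3x3 process noise covariance.
    """
    x, y, th = mu
    mu_bar = np.array([x + v * dt * np.cos(th),
                       y + v * dt * np.sin(th),
                       wrap_angle(th + omega * dt)])
    # Jacobian of the motion model with respect to the state.
    G = np.array([[1.0, 0.0, -v * dt * np.sin(th)],
                  [0.0, 1.0, v * dt * np.cos(th)],
                  [0.0, 0.0, 1.0]])
    sigma_bar = G @ sigma @ G.T + Q
    return mu_bar, sigma_bar


def ekf_update(mu, sigma, z, landmark, R):
    """Correction step with a range-bearing measurement z = [r, phi]
    to a cone at a known (hypothetical) map position landmark = [lx, ly].
    R is an assumed 2x2 measurement noise covariance.
    """
    dx = landmark[0] - mu[0]
    dy = landmark[1] - mu[1]
    q = dx ** 2 + dy ** 2
    r = np.sqrt(q)
    # Expected measurement from the current pose estimate.
    z_hat = np.array([r, wrap_angle(np.arctan2(dy, dx) - mu[2])])
    # Jacobian of the measurement model with respect to the pose.
    H = np.array([[-dx / r, -dy / r, 0.0],
                  [dy / q, -dx / q, -1.0]])
    S = H @ sigma @ H.T + R
    # Kalman gain K = sigma H^T S^-1, computed with a linear solve
    # (valid because S and sigma are symmetric).
    K = np.linalg.solve(S, H @ sigma).T
    innov = z - z_hat
    innov[1] = wrap_angle(innov[1])
    mu_new = mu + K @ innov
    mu_new[2] = wrap_angle(mu_new[2])
    sigma_new = (np.eye(3) - K @ H) @ sigma
    return mu_new, sigma_new


# Example: drive 0.2 m forward, then observe a cone assumed to sit at (2, 0).
mu = np.array([0.0, 0.0, 0.0])
sigma = np.diag([0.1, 0.1, 0.05])
Q = np.diag([0.01, 0.01, 0.005])
R = np.diag([0.05, 0.02])
mu, sigma = ekf_predict(mu, sigma, v=0.2, omega=0.0, dt=1.0, Q=Q)
cone = np.array([2.0, 0.0])
z = np.array([1.7, 0.0])  # cone seen 1.7 m straight ahead
mu, sigma = ekf_update(mu, sigma, z, cone, R)
```

In this example the prediction places the robot at x = 0.2 m while the cone measurement implies x = 0.3 m; the update blends the two according to their covariances, moving the estimate to about x = 0.27 m and shrinking its variance.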