This repository is a fork of RAFT-Stereo (see the section below) for the STIR Challenge.

The main addition is the `raft_stereo_to_onnx.py` file, which converts the RAFT-Stereo model into both a TorchScript model (`raft_stereoSTIR.pt`) and an ONNX model (`raft_stereoSTIR.onnx`), which can be used as the RAFT model for STIRMetrics.

To export the models:

- Create a folder `./demo-frames` and add a stereo pair named `left.{png, jpg}` and `right.{png, jpg}`.
- Download the models from the link below and extract them into `./models`.
- Install the RAFT-Stereo dependencies described in the following section.

Then run

```shell
python rafttoonnx.py
```

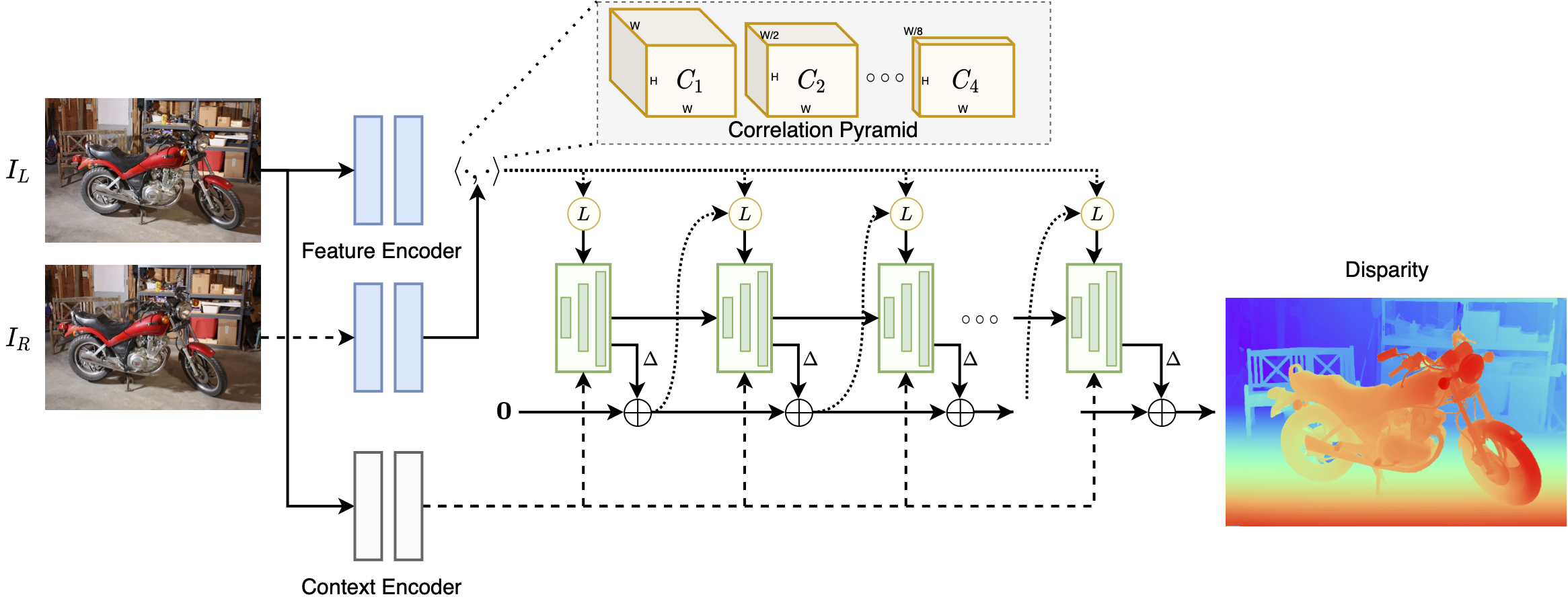

This repository contains the source code for our paper:

RAFT-Stereo: Multilevel Recurrent Field Transforms for Stereo Matching

3DV 2021, Best Student Paper Award

Lahav Lipson, Zachary Teed and Jia Deng

```bibtex
@inproceedings{lipson2021raft,
  title={RAFT-Stereo: Multilevel Recurrent Field Transforms for Stereo Matching},
  author={Lipson, Lahav and Teed, Zachary and Deng, Jia},
  booktitle={International Conference on 3D Vision (3DV)},
  year={2021}
}
```

The code has been tested with PyTorch 1.7 and CUDA 10.2:

```shell
conda env create -f environment.yaml
conda activate raftstereo
```

and with PyTorch 1.11 and CUDA 11.3:

```shell
conda env create -f environment_cuda11.yaml
conda activate raftstereo
```

To evaluate or train RAFT-Stereo, you will need to download the required datasets.

- Sceneflow (Includes FlyingThings3D, Driving & Monkaa)

- Middlebury

- ETH3D

- KITTI

To download the ETH3D and Middlebury test datasets for the demos, run

```shell
bash download_datasets.sh
```

By default, `stereo_datasets.py` will search for the datasets in these locations. You can create symbolic links in the `datasets` folder that point to wherever the datasets were downloaded:

```
├── datasets
    ├── FlyingThings3D
        ├── frames_cleanpass
        ├── frames_finalpass
        ├── disparity
    ├── Monkaa
        ├── frames_cleanpass
        ├── frames_finalpass
        ├── disparity
    ├── Driving
        ├── frames_cleanpass
        ├── frames_finalpass
        ├── disparity
    ├── KITTI
        ├── testing
        ├── training
        ├── devkit
    ├── Middlebury
        ├── MiddEval3
    ├── ETH3D
        ├── two_view_testing
```

iRaftStereo_RVC ranked 2nd on the stereo leaderboard at the Robust Vision Challenge at ECCV 2022.

To use the model, download and unzip models.zip and run

```shell
python demo.py --restore_ckpt models/iraftstereo_rvc.pth --context_norm instance -l=datasets/ETH3D/two_view_testing/*/im0.png -r=datasets/ETH3D/two_view_testing/*/im1.png
```

Thank you to Insta360 and Jiang et al. for their excellent work.

See their manuscript for training details: An Improved RaftStereo Trained with A Mixed Dataset for the Robust Vision Challenge 2022

Pretrained models can be downloaded by running

```shell
bash download_models.sh
```

or downloaded from Google Drive. We recommend our Middlebury model for in-the-wild images.

You can demo a trained model on pairs of images. To predict stereo for Middlebury, run

```shell
python demo.py --restore_ckpt models/raftstereo-middlebury.pth --corr_implementation alt --mixed_precision -l=datasets/Middlebury/MiddEval3/testF/*/im0.png -r=datasets/Middlebury/MiddEval3/testF/*/im1.png
```

Or for ETH3D:

```shell
python demo.py --restore_ckpt models/raftstereo-eth3d.pth -l=datasets/ETH3D/two_view_testing/*/im0.png -r=datasets/ETH3D/two_view_testing/*/im1.png
```

Our fastest model (uses the faster `reg_cuda` correlation implementation):

```shell
python demo.py --restore_ckpt models/raftstereo-realtime.pth --shared_backbone --n_downsample 3 --n_gru_layers 2 --slow_fast_gru --valid_iters 7 --corr_implementation reg_cuda --mixed_precision
```

To save the disparity values as `.npy` files, run any of the demos with the `--save_numpy` flag.
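The demos accept images of arbitrary size because, as far as we can tell, `demo.py` pads each pair so that height and width are divisible by 32 (using its `InputPadder` helper) and crops the prediction afterwards. If you call a model directly, for instance the exported TorchScript or ONNX model, you may need to do the same. The numpy sketch below assumes a divisor of 32 and edge (replicate) padding split evenly on both sides; `pad_to_multiple` and `unpad` are hypothetical names, and the code is not taken from the repository.

```python
import numpy as np


def pad_to_multiple(image, divis_by=32):
    """Edge-pad an (H, W) or (H, W, C) array so H and W are divisible by `divis_by`.

    Padding is split as evenly as possible between top/bottom and left/right.
    Returns the padded array and (top, bottom, left, right) for cropping later.
    """
    h, w = image.shape[:2]
    pad_h = (-h) % divis_by  # rows needed to reach the next multiple
    pad_w = (-w) % divis_by  # columns needed to reach the next multiple
    top, bottom = pad_h // 2, pad_h - pad_h // 2
    left, right = pad_w // 2, pad_w - pad_w // 2
    widths = [(top, bottom), (left, right)] + [(0, 0)] * (image.ndim - 2)
    return np.pad(image, widths, mode="edge"), (top, bottom, left, right)


def unpad(pred, pads):
    """Crop a padded prediction back to the original image size."""
    top, bottom, left, right = pads
    h, w = pred.shape[:2]
    # Use explicit end indices so zero padding does not produce an empty slice.
    return pred[top:h - bottom, left:w - right]
```

For a 375 × 1242 image, `pad_to_multiple` produces a 384 × 1248 array, and `unpad` maps a prediction of that size back to 375 × 1242.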

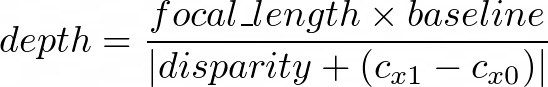

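If the camera intrinsics and camera baseline are known, disparity predictions can be converted to depth values using

$$\text{depth} = \frac{f \cdot \text{baseline}}{\text{disparity} + (c_{x1} - c_{x0})}$$

Note that the units of the focal length $f$ are pixels, not millimeters, so depth comes out in the units of the baseline. $(c_{x1} - c_{x0})$ is the x-difference of the principal points.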
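A minimal numpy sketch of the conversion depth = f · baseline / (disparity + (cx1 - cx0)), with the focal length in pixels. It assumes the disparity map holds positive values in pixels, for example an array loaded with `np.load` from the `.npy` files written by `--save_numpy` (check the sign convention of your own outputs). `disparity_to_depth` is a hypothetical helper, and marking pixels with a non-positive denominator as infinitely far is a choice made here, not something the repository prescribes.

```python
import numpy as np


def disparity_to_depth(disparity, focal_px, baseline, cx0=0.0, cx1=0.0, min_disp=1e-6):
    """Convert a disparity map (pixels) to depth in the units of `baseline`.

    depth = focal_px * baseline / (disparity + (cx1 - cx0))
    focal_px is in pixels; cx0/cx1 are the principal-point x coordinates.
    Pixels whose corrected disparity is <= min_disp are set to inf.
    """
    disparity = np.asarray(disparity, dtype=np.float64)
    denom = disparity + (cx1 - cx0)
    depth = np.full_like(denom, np.inf)
    valid = denom > min_disp
    depth[valid] = focal_px * baseline / denom[valid]
    return depth
```

For example, with `focal_px=1000`, `baseline=0.1` (meters) and a disparity of 50 pixels, the result is 2.0 meters.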

To evaluate a trained model on a validation set (e.g. Middlebury), run

```shell
python evaluate_stereo.py --restore_ckpt models/raftstereo-middlebury.pth --dataset middlebury_H
```

Our model is trained on two RTX-6000 GPUs using the following command. Training logs will be written to `runs/`, which can be visualized using TensorBoard.

```shell
python train_stereo.py --batch_size 8 --train_iters 22 --valid_iters 32 --spatial_scale -0.2 0.4 --saturation_range 0 1.4 --n_downsample 2 --num_steps 200000 --mixed_precision
```

To train using significantly less memory, change `--n_downsample 2` to `--n_downsample 3`. This will slightly reduce accuracy.
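A back-of-the-envelope estimate of one reason `--n_downsample 3` saves memory: RAFT-Stereo correlates each feature pixel with every pixel in the same row of the other image, so the finest correlation volume has roughly H' × W' × W' entries at a feature resolution of H' × W' = H/2^k × W/2^k. The hypothetical helper below counts entries per image pair only, ignoring batch size, coarser pyramid levels and network activations; under those assumptions, going from k = 2 to k = 3 shrinks this volume by a factor of about 8.

```python
def corr_volume_entries(height, width, n_downsample):
    """Rough entry count of a full row-wise (1D) correlation volume.

    Assumes features at 1/2**n_downsample of the input resolution, with every
    feature pixel correlated against all pixels in the same row of the other
    image: H' * W' * W' entries per image pair.
    """
    scale = 2 ** n_downsample
    h, w = height // scale, width // scale
    return h * w * w


for k in (2, 3):
    n = corr_volume_entries(384, 1024, k)
    # float32 uses 4 bytes per entry; mixed precision would roughly halve this.
    print(f"n_downsample={k}: {n:,} entries (~{4 * n / 2**20:.0f} MiB in float32)")
```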

To finetune the Sceneflow model on the 23 scenes from the Middlebury 2014 stereo dataset, download the data using

```shell
chmod ug+x download_middlebury_2014.sh && ./download_middlebury_2014.sh
```

and run

```shell
python train_stereo.py --train_datasets middlebury_2014 --num_steps 4000 --image_size 384 1000 --lr 0.00002 --restore_ckpt models/raftstereo-sceneflow.pth --batch_size 2 --train_iters 22 --valid_iters 32 --spatial_scale -0.2 0.4 --saturation_range 0 1.4 --n_downsample 2 --mixed_precision
```

We provide a faster CUDA implementation of the correlation sampler which works with mixed-precision feature maps. To build and install it, run

```shell
cd sampler && python setup.py install && cd ..
```

Running `demo.py`, `train_stereo.py` or `evaluate_stereo.py` with `--corr_implementation reg_cuda` together with `--mixed_precision` will speed up the model without impacting performance.

To significantly decrease memory consumption on high-resolution images, use `--corr_implementation alt`. This implementation is, however, slower than the default.
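The memory/speed trade-off behind `--corr_implementation alt` can be illustrated conceptually: instead of precomputing and storing every row-wise correlation, correlations can be computed only at the positions looked up around the current disparity estimate. The numpy sketch below contrasts the two ideas under simplifying assumptions (a single pyramid level, integer lookups, a 1/sqrt(C) scaling). It is not the repository's implementation, which uses correlation pyramids and bilinear sampling, and the function names are hypothetical.

```python
import numpy as np


def full_corr_volume(f1, f2):
    """Precompute every correlation along each row.

    f1, f2: (C, H, W) feature maps. Returns (H, W, W) with
    out[i, j, k] = <f1[:, i, j], f2[:, i, k]> / sqrt(C).
    Memory grows as H * W * W.
    """
    c = f1.shape[0]
    return np.einsum("cij,cik->ijk", f1, f2) / np.sqrt(c)


def corr_on_demand(f1, f2, disparity, radius=2):
    """Compute only the correlations that are actually looked up.

    For pixel (i, j), sample right-image columns k = j - d + r for
    r in [-radius, radius], where d is the rounded disparity estimate.
    Out-of-range columns get 0. Returns (H, W, 2 * radius + 1), so memory
    grows as H * W * (2 * radius + 1) at the cost of repeated dot products.
    """
    c, h, w = f1.shape
    offsets = np.arange(-radius, radius + 1)
    out = np.zeros((h, w, offsets.size))
    for i in range(h):
        for j in range(w):
            base = j - int(round(disparity[i, j]))
            for n, r in enumerate(offsets):
                k = base + r
                if 0 <= k < w:
                    out[i, j, n] = f1[:, i, j] @ f2[:, i, k] / np.sqrt(c)
    return out
```

Both functions agree at the sampled positions; `full_corr_volume` stores H × W × W numbers up front, while `corr_on_demand` stores only H × W × (2 · radius + 1) and recomputes dot products instead, which mirrors why a lookup-time approach saves memory but runs slower.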