Table of Contents

This repository is a tutorial for the implementation of Biased Random Key Genetic Algorithmn for 3D Bin Packing Problem based on heuristic and design propsed from the paper "A biased random key genetic algorithm for 2D and 3D bin packing problems" by Gonçalves & Resende (2013). I wrote this tutorial as a showcase of application for the course "Operations Research Applications and Implementation" intructed by professor Chia-Yen Lee. It is not for commercial use. If there is any sign of copyright infringement, please inform me as I will remove the repository.

I will first define the problem statement of 3D-bpp. Next, I will introduce the idea of Biased Random-Key Genetic Algorithmn (BRKGA) and Placement Strategy proposed in the paper with code and example. Finally, the visualization for instance solution is presented.

My implementation is entirely based on Python code, with used of default module that can be found in most Python distribution, which means no external library is required. However, the reader should have basic understanding of genetic algorithmn, namely the idea of chorosome representation, encoding & decoding process, and evolutionary process. For more details, Tutorialspoint had a great article as the introduction of GA.

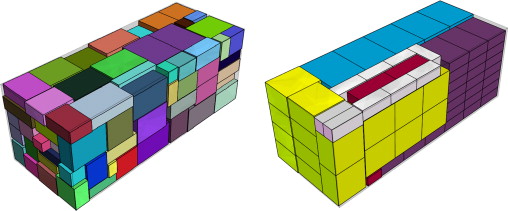

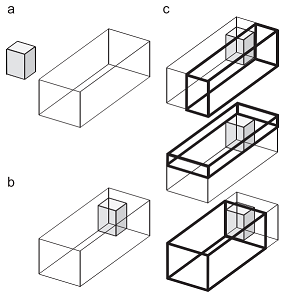

"example of bin packing problem" from [Gonçalves & Resende (2013)]In a three dimensional bin-packing problem, items (or boxes) with different volumes must be orthogonally packed into a set of bins (or containers) with fixed volume, in a way that minimizes the number of total used bins. The "three dimensional" means the volumes of each item consist of 3 dimension, namely depth, width and height.

This problen is strongly NP-hard (as it generalize from 2D-bpp and 1D-bpp, which are also NP-hard), meaning a faster heuristic is prefered even with the existence of a mixed integer programming formulation that can garuantee an optimal solution. Given this idea, the genetic algorithmn, one of the most common heuristic for combinatorial problem, can be applied to slove the problem in acceptable amount of time, though it does not ensures an optimal solution.

In 3D-bpp, there are n rectangular boxes with length in three dimensions (d, w, h), and a fixed-size container where boxes are placed. Without loss of general�ity, we assume that all boxes to be packed are smaller than the container. For the implementation, simply use an object with two array of 3D vector to denote shape of boxes and containers respectively.

inputs: {

# boxes with different shape

'v': [(188, 28, 58), (61, 9, 79), (188, 28, 58), ..., (145, 80, 96)],

# containers with fixed shape

'V': [(610, 244, 259), .., (610, 244, 259)]

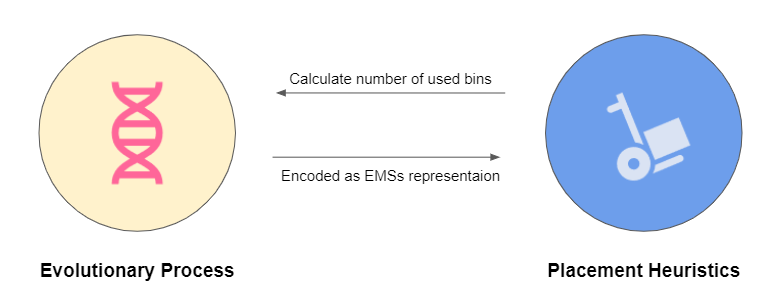

}The algorithmn for this article is composed of two parts, a Biased Random-Key Genetic Algorithmn (BRKGA) to search the solution space, and a heuristic called placement procedure to evalute each solution representation in GA. Briefly speacking, the algorithm encode each solution (packing scenario) by a sequence that can be evaluated by a heuristic, which enables genetic algorithm to find good solution through selection. In this section, the setting of BRKGA and the idea of placement procedure will be discussed.

algorithmn architectureThe BRKGA, proposed by Gonçalves & Resende (2011), is essentially a derivative of genetic algorithm (GA) with extensions of random-key representation and biased selection from population pool. It is worth to notice that in most of the meta-heuristics for 3d-bpp, instead of representing every coordinates of items placed, they are designed to find the best packing sequence (the order in which the items are packed) that follows a rule-based packing procedure like the Deepest Bottom-Left-Fill packing method. In this way, the representation of a solution can be greatly simplified, while empirically obtaining solution with good quality. In addition, the packing sequence can always be mapped to a feasible packing scenario without worrying about coordinates overlapping, meaning there is no need for chromosome-repairing in this GA implementation.

Next, I will explain the idea of Random Key, Biased Selection for BRKGA in the following pragragh.

The random key is a way to encode a chromosome (solution), where the solution are represented as a vector of real numbers within [0, 1]. Let assume the number of items to be packed is n. In this implementation, the length of a random key is always 2n. With Numpy’s random number routines, we can easily generate random key representation in 1 line.

# random key representation

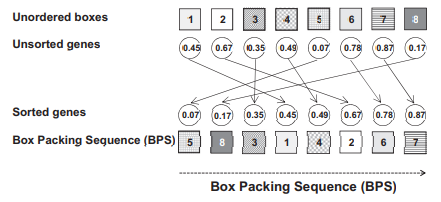

solution = np.random.uniform(low=0.0, high=1.0, size= 2*n)In each solution, the first n genes, named Box Packing Sequence (BPS) by the author, represents the order of the n items to be packed, and can be decoded by sorting in ascending order of the corresponding gene values (Fig. 3). We can use the argsort method to obtain the indices of the sorted array.

# sort by ascending order

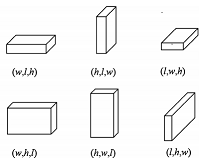

box_packing_sequence = np.argsort(solution[:n])The last n genes, named Vector of Box Orientations (VBO), represent the orientation of the boxes. In the setting of three dimension, there are total six orientations (Fig. 4) to place a box. In some scenario, some boxes cannot be placed upside down, or limited by the vertical orientation, so to speak. To consider all possible orientations, the decoding of each gene (called BO) in VBO is defined as

selected orientation = BOs⌈BO×nBOs⌉

, where BO is the given value of VBO, BOs denotes all possible orientations allowed for that box, and nBOs is the number of BOs. Or in code:

# value of BO

BO = 0.82

# posiible orientations

BOs = [1,2,3,4,5,6]

# selected orientation

orientation = BOs[math.ceil(BO*len(BOs))] # orientation = 5In summary, BRKGA use a vector of real numbers between [0,1] to represent a solution, which consist of box packing sequence (BPS) with length of n, and Vector of Box Orientations (VBO) also with length n. The former represents the order to pack the boxes, while representing the oreientation of corresponding box. With a packing procedure that will be explained later, a solution can converted to a real packing scenario whose quality can be further evaluated.

The most noticeable difference between BRKGA and other genetic algorithmn implementations are the fact that the population in each generation is partitioned into two group, Elite and Non-Elite, based on the fitness value. This biased selection will greatly influence operations in GA such as crossover and survivor selection that will be explained latter. For now, let's define a function to partition the population into elite and non-elite group based on the fitness value and the number of elite individual denoted as num_elites.

def partition(population, fitness_list, num_elites):

# sorted indices based on fitness value

sorted_indexs = np.argsort(fitness_list)

# return elite & non-elite group

return population[sorted_indexs[:num_elites]], population[sorted_indexs[num_elites:]]In BRKGA, the parameterized uniform crossover is used to implement the crossover operation. For each mating, there will always be one parent chosen from the Elite and the other from the Non-Elite. Then, it will create an offspring whose i-th gene is inherited the i-th gene from either elite parent or non-elite one based on a prespecified probality denoted as eliteCProb. Generally, this probability will favor the inheritance from the elite parent. For the implementation, I define a function to select parents, and a function to perform crossver given the selected parents.

def crossover(elite, non_elite):

# initialize chromosome

offspring = [0]*(2*n)

# choose each gene from elite and non_elite

for i in range(2*n):

# inherit from elite with probability of eliteCProb

if np.random.uniform(low=0.0, high=1.0) < eliteCProb:

offspring][i] = elite[i]

else:

offspring][i] = non_elite[i]

return offspring

def mating(self, elites, non_elites):

offspring_list = []

num_offspring = num_individuals - num_elites - num_mutants

for i in range(num_offspring):

# biased selection for parents: 1 elite & 1 non_elite

offspring = crossover(random.choice(elites), random.choice(non_elites))

offspring_list.append(offspring)

return offspring_listThe number of offsprings (from crossover) depends on the number of individuals in each population (num_individuals) and the number of mutants (num_mutants) that will be explained in the next section.

Instead of performing mutation operation (e.g. swap mutation), the authors create new individuals that are randomly generated in each generation as a mean to add randomness into the population. Given the number of mutants, denoted as num_mutants, we can define a function to create new individuals like how we initialize the population.

def mutation(num_mutants):

# return mutants

return np.random.uniform(low=0.0, high=1.0, size=(num_mutants, 2*n))For each generation, all elite individuals are kept for the next population without any modification. In addition, mutants and offsprings created from crossover are directly added to the next population. Since the problem is about minimizing the number of used bins, we will update the minimum fitness value in each generation. Together, we can define the function for evolutionary process as:

def evolutionary_process(n, num_generations, num_individuals, num_elites, num_mutants):

# initialization

## initial poulation

population = np.random.uniform(low=0.0, high=1.0, size=(num_individuals, 2*n))

## calculate fitness function

fitness_list = cal_fitness(population)

## minimum fitness value

best_fitness = min(fitness_list)

for g in range(num_generations):

# seperate elite group & non-elite group

elites, non_elites = partition(population, fitness_list, num_elites)

# biased mating & crossover

offsprings = mating(elites, non_elites)

# generate mutants

mutants = mutation(num_mutants)

# next population

population = np.concatenate((elites, mutants, offsprings), axis=0)

# calculate fitness

fitness_list = cal_fitness(population)

# update minimum fitness value

for fitness in fitness_list:

if fitness < best_fitness:

best_fitness = fitnessThe only element has not yet been mentioned is how to evaluate the fitness value of a solution (with function cal_fitness). Remember a solution tells us the order (BPS) and the orientations (VBO) of the boxes to be packed. In order to evaluate the fitness function for each solution, we simply try to pack those boxes by following exactly the info provided in the solution, and then count how many containers are used. Therefore, we require a system to pack boxes in three dimension into fixed-size containers by following the instruction of a solution. This system is called Placement Procedure and will be discussed in the following section.

In terms of implementation and intricacy, perhaps the placement procedure is more complex than GA. First, it requires a model to reflect the rectangle boxes and containers in three dimentions with while identifying condition of overlapping and out-of-bound. Second, a heuristic rule to place the boxes must be define. Last, two compoment mentioned above and other states must all be put into integration. Let's start with the model that is specifically designed for 3D dimension placement in this problem.

The maximal-space is a concept to represent a rectangular space by its minimum and maximum coordinats, which works only if the object is placed orthogonally to three dimensions as in this problem. For example, a box with shape of (10, 20, 30) placed in the origin can be encoded as:

MS = [(0,0,0), (10,20,30)] # [minimum coordinats, maximum coordinates]The heuristic — difference process (DP) — developed by Lai and Chan (1997) keeps track of boxes placement by recording available spaces left in the container called Empty Maximal-Spaces (EMSs). With the use of EMSs, we can see box placement in a container as the collection of following processes:

- Select a EMS from existing EMSs.

- Generate new EMSs from the intersection of the box with existing EMSs and remove the intersected EMSs.

- Remove EMSs which have infinite thinness (no length in any dimension), or are totally inscribed by other EMSs

- Remove EMSs which are smaller than existing boxes to be placed.

For each pair of box (demote its space as ems) and intersected EMS in step 2., we can compute new EMSs as follow. Notice that there will be six empty space generated by the intersection in three dimentional space.

# minimem & maximum coordinates for intersected EMS

x1, y1, z1 = EMS[0]

x2, y2, z2 = EMS[1]

# minimem & maximum coordinates for space of box

x3, y3, z3 = ems[0]

x4, y4, z4 = ems[1]

# six new EMSs for 3D space

new_EMSs = [

[(x1, y1, z1), (x3, y2, z2)],

[(x4, y1, z1), (x2, y2, z2)],

[(x1, y1, z1), (x2, y3, z2)],

[(x1, y4, z1), (x2, y2, z2)],

[(x1, y1, z1), (x2, y2, z3)],

[(x1, y1, z4), (x2, y2, z2)]

]In practice, however, we will place the minimum coordinate of box against that of the selected EMS (Fig. 5), because interspace between two boxes will usually result in unfit gap for others boxes. As the result, we will rewrite the prpcess as:

# minimem & maximum coordinates for intersected EMS

x1, y1, z1 = EMS[0]

x2, y2, z2 = EMS[1]

# minimem & maximum coordinates for space of box

x3, y3, z3 = ems[0]

x4, y4, z4 = ems[1]

# three new EMSs for 3D space if ems[0] = EMS[0]

new_EMSs = [

[(x4, y1, z1), (x2, y2, z2)],

[(x1, y4, z1), (x2, y2, z2)],

[(x1, y1, z4), (x2, y2, z2)]

]To check if a EMS overlaps or is totally inscribed by another EMS, we can define the following functions to compute two conditions. (In the implmentation, every EMS is converted into numpy array to receive the property of element-wise boolean operations)

def overlapped(EMS_1, EMS_2):

if np.all(EMS_1[1] > EMS_2[0]) and np.all(EMS_1[0] < EMS_2[1]):

return True

return False

def inscribed(EMS_1, EMS_2):

if np.all(EMS_2[0] <= EMS_1[0]) and np.all(EMS_1[1] <= EMS_2[1]):

return True

return FalseIn sum, the psuedo function for dfference process of a box placement with selected EMS can be written as:

def difference_process(box, selected_EMS, existing_EMSs):

# 1. compute maximal-space for box with selected EMS

ems = [selected_EMS[0], selected_EMS[0] + boxToPlace]

# 2. Generate new EMSs resulting from the intersection of the box

for EMS in existing_EMSs:

if overlapped(ems, EMS):

# eliminate overlapped EMS

existing_EMSs.remove(EMS)

# three new EMSs in 3D

x1, y1, z1 = EMS[0]; x2, y2, z2 = EMS[1]

x3, y3, z3 = ems[0]; x4, y4, z4 = ems[1]

new_EMSs = [

[(x4, y1, z1), (x2, y2, z2)],

[(x1, y4, z1), (x2, y2, z2)],

[(x1, y1, z4), (x2, y2, z2)]

]

for new_EMS in new_EMSs:

isValid = True

# 3. Eliminate new EMSs which are totally inscribed by other EMSs

for other_EMS in self.EMSs:

if self.inscribed(new_EMS, other_EMS):

isValid = False

# 4. Remove _EMSs_ which are smaller than existing boxes to be placed

## (1) new EMS smaller than the volume of remaining boxes

new_box = new_EMS[1] - new_EMS[0]

if np.min(new_box) < min_dim:

isValid = False

## (2) new EMS having smaller dimension of the smallest dimension of remaining boxes

if np.product(new_box) < min_vol:

isValid = False

# add new EMS if valid

if isValid:

existing_EMSs.append(new_EMS)Now we have a way to update the state of container and boxes in 3D space after each box placement. Next, I will introduce a placement heuristic to decide which EMS to select for each box placement in a sequence of boxes.

The Back-Bottom-Left-Fill Heuristic is a rule to pack a sequence of boxes, in which it will always select the empty space with smallest minimum coordinates to fit the box. The heuristic aims to place box in the deepest space for each iteration in hope that all boxes will be placed tight together in the end.

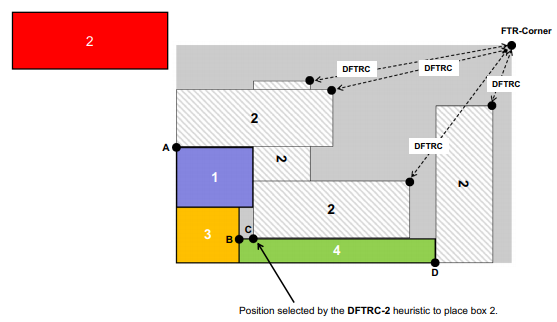

As observed by Liu and Teng (1999), some optimal solutions could not be constructed by this heuritic. To deal with this problem, Gonçalves & Resende (2013) developed an improved version of the placement heuristic rule named Distance to the Front-Top-Right Corner (DFTRC). As the title suggests, the heuristc will always place the box in the empty space such that it maximizes the distance of the box to the maximal coordinates of the container (Fig. 6).

Fig. 6. Example of heuristic DFTRC placement ruleThe psuedo function for DFTRC placement rule is:

# D, W, H are the depth, width and height of a container

def DFTRC(box, existing_EMSs):

maxDist = -1

selectedEMS = None

for EMS in existing_EMSs:

# for different orientation

for direction in [1,2,3,4,5,6]:

d, w, h = orient(box, direction)

# if box fit in the current EMS

if fitin((d, w, h), EMS):

# minimum coordinate of ENS

x, y, z = EMS[0]

# distance between maximum coordinate of box and container

distance = pow(D-x-d, 2) + pow(W-y-w, 2) + pow(H-z-h, 2)

# find maximal distance

if distance > maxDist:

maxDist = distance

selected_EMS = EMS

return selected_EMSwhere orient is a helper function to orient box given the orientation and fitin is another to check whether the space can fit in a EMS:

def orient(box, BO):

d, w, h = box

# rotate box based on selected orientation BO

if BO == 1: return (d, w, h)

elif BO == 2: return (d, h, w)

elif BO == 3: return (w, d, h)

elif BO == 4: return (w, h, d)

elif BO == 5: return (h, d, w)

elif BO == 6: return (h, w, d)

def fitin(self, box, EMS):

# ensure box is totally inscribed by EMS

for d in range(3):

if box[d] > EMS[1][d] - EMS[0][d]:

return False

return TrueThe DFTRC placement rule is used to select a EMS from existing EMSs for box placement. In a solution, this placement rule will be used n times to place n boxes following the order of BPS. If the box cannot fit in the existing EMSs, we will open a new empty container and resume the ongoing placement process. With consideration of VBO, we can finally write down the placement procedure as following code. Let boxes be the set of boxes , Bins be the set of containers, and num_opend_bins be the number of currently opened containers.

def placement_procedure(BPS, VBO):

# pack box in the order of BPS

items_sorted = [boxes[i] for i in BPS]

for i, box in enumerate(items_sorted):

# selection Bin and EMS to place the box

selected_bin = None

selected_EMS = None

for k in range(num_opend_bins):

# select EMS using DFTRC heuristic rule

EMS = DFTRC(box, Bins[k].existing_EMSs)

# select successfully

if EMS != None:

selected_bin = k

selected_EMS = EMS

break

# Open new empty bin if failed

if selected_bin == None:

num_opend_bins += 1

selected_bin = num_opend_bins - 1

# select the first and only EMS from the new Bin

selected_EMS = Bins[selected_bin].EMSs[0]

# Box orientation selection

BO = selecte_box_orientaion(VBO[i], box, selected_EMS)

# pack the box to the bin & update state information

# remember it is perform on

difference_process(orient(box, BO), selected_EMS, Bins[selected_bin].existing_EMSs)where selecte_box_orientaion is the function to compute and select the orientations for the box:

def selecte_box_orientaion(BO, box, selected_EMS):

# compute possible direction

BOs = []

for direction in [1,2,3,4,5,6]:

if fitin(orient(box, direction), selected_EMS):

BOs.append(direction)

# select orientation (decoding of BO)

return BOs[math.ceil(VBO*len(BOs))-1]Now, do you remember why I introduce placement procedure in the first place? We need a system to pack a sequence of boxes into containers in 3D space, so we can count the number of used containers as the fitness value for the given solution. In the paper, the fitness value is modified with a small adjustment — an additional term of the load of the least laoded container (Eq. 1). The rationale for this measure is that if two solutions use the same number of containers, they will result in the same fitness value. Nevertheless, the one having the least load in the least loaded bin will more likely have more compact placement in other containers, thus, more potential for improvement.

Eq. 1. Adjusted number of binsdef fitness(num_opend_bins, Bins):

# find least load

leastLoad = 1

for k in range(num_opend_bins):

load = Bins[k].load()

if load < leastLoad:

leastLoad = load

# remainder of 1 in case 100% load

return self.num_opend_bins + leastLoad % 1Finally, we can define the cal_fitness to calculate fitness value for each solution:

def cal_fitness(population)

fitness_list = list()

for solution in population:

decoder = placement_procedure(BPS, VBO)

fitness_list.append(fitness(decoder))

return fitness_listwhere decoder is the instance of placement_procedure. Feel free to customerize your class for placement procedure, or compare it with mine.