This is a minimal version of the dense (per-pixel) CLIP feature extractor proposed in "Language-driven Semantic Segmentation" (LSeg), published at ICLR 2022.

Note: I am not an author of the paper. I took the original implementation and extracted its core components.

First, install a recent PyTorch release by following the instructions at https://pytorch.org/.

Now, install the OpenAI CLIP package by running

    pip install git+https://github.com/openai/CLIP.git

Build the lseg package by running

    python setup.py build develop

Download the weights of the pretrained model from this OneDrive link.

Copy the downloaded checkpoint into the examples/checkpoints directory.
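Before running the examples, it can help to confirm that the setup steps above took effect. The following is a minimal sketch, not part of this repository: `check_setup` is a hypothetical helper, and it assumes the packages import as `torch`, `clip`, and `lseg` and that the script is run from the repository root. It makes no assumption about the checkpoint's filename and only lists whatever files are present.

```python
import importlib.util
from pathlib import Path


def check_setup(checkpoint_dir="examples/checkpoints",
                modules=("torch", "clip", "lseg")):
    """Report which modules are importable and which files sit in checkpoint_dir.

    The module names and the directory are assumptions based on the
    install steps in this README, not values read from the package.
    """
    # find_spec returns None when a top-level module cannot be located.
    found = {name: importlib.util.find_spec(name) is not None for name in modules}
    ckpt_dir = Path(checkpoint_dir)
    # A missing directory is reported as an empty list rather than an error.
    if ckpt_dir.is_dir():
        files = sorted(p.name for p in ckpt_dir.iterdir() if p.is_file())
    else:
        files = []
    return found, files


if __name__ == "__main__":
    found, files = check_setup()
    for name, ok in found.items():
        print(f"{name}: {'ok' if ok else 'missing'}")
    print("checkpoint files:", files or "none found")
```

If any module is reported as missing, repeat the corresponding install step before running the example scripts.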

Note: Source images used in the examples are from Wikimedia and the original LSeg repo.

    cd examples
    python extract_lseg_features.py

Command-line options supported:

    usage: extract_lseg_features.py [-h] [--checkpoint-path STR|PATH]
                                    [--backbone STR] [--num-features INT]
                                    [--arch-option INT] [--block-depth INT]
                                    [--activation STR] [--crop-size INT]
                                    [--query-image STR|PATH] [--prompt STR]

    ╭─ arguments ────────────────────────────────────────────────────────────────╮
    │ -h, --help              show this help message and exit                    │
    │ --checkpoint-path STR|PATH                                                 │
    │                         (default:                                          │
    │                         lseg-minimal/examples/checkpoi…                    │
    │ --backbone STR          (default: clip_vitl16_384)                         │
    │ --num-features INT      (default: 256)                                     │
    │ --arch-option INT       (default: 0)                                       │
    │ --block-depth INT       (default: 0)                                       │
    │ --activation STR        (default: lrelu)                                   │
    │ --crop-size INT         (default: 480)                                     │
    │ --query-image STR|PATH  (default:                                          │
    │                         lseg-minimal/images/teddybear.…                    │
    │ --prompt STR            (default: teddy)                                   │
    ╰────────────────────────────────────────────────────────────────────────────╯

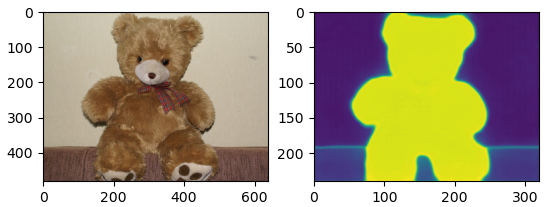

This script should produce an output that looks like:

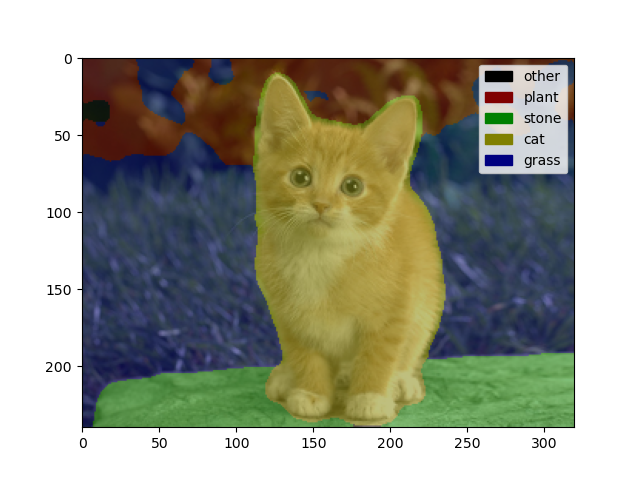
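The script's internals are not reproduced here. As a rough illustration of how dense CLIP-style features are commonly compared against a text prompt, the sketch below computes a per-pixel cosine similarity map. It assumes the per-pixel features and the prompt embedding share one embedding space; `similarity_map` is a hypothetical helper, the 512-dimensional size is arbitrary, and random arrays stand in for real model outputs.

```python
import numpy as np


def similarity_map(pixel_features, text_embedding, eps=1e-8):
    """Cosine similarity between each pixel feature and one text embedding.

    pixel_features: array of shape (H, W, D)
    text_embedding: array of shape (D,)
    returns: array of shape (H, W) with values in [-1, 1]
    """
    # Normalize along the feature axis so the dot product is a cosine.
    norms = np.linalg.norm(pixel_features, axis=-1, keepdims=True)
    feats = pixel_features / (norms + eps)
    text = text_embedding / (np.linalg.norm(text_embedding) + eps)
    # (H, W, D) @ (D,) -> (H, W)
    return feats @ text


rng = np.random.default_rng(0)
features = rng.standard_normal((4, 6, 512))  # stand-in for real per-pixel features
prompt = rng.standard_normal(512)            # stand-in for the encoded prompt
heatmap = similarity_map(features, prompt)
print(heatmap.shape)
```

With real features, a map like `heatmap` could be rendered as an overlay on the query image, with high values marking pixels that match the prompt.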

This example loads an image, takes an arbitrary set of class labels specified as comma-separated text WITHOUT ANY WHITESPACE (for example, `plant,grass,cat,stone`), and performs open-set segmentation.
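To make the label format and the segmentation step concrete, here is a minimal sketch under stated assumptions. `parse_segclasses` and `assign_labels` are hypothetical helpers, not functions from this package. Rejecting whitespace is an illustrative choice that mirrors the requirement above, and the per-label score maps are random stand-ins for real similarity scores.

```python
import numpy as np


def parse_segclasses(segclasses):
    """Split a comma-separated label string; reject whitespace and empty labels."""
    if any(ch.isspace() for ch in segclasses):
        raise ValueError("labels must be comma-separated with no whitespace")
    labels = segclasses.split(",")
    if any(label == "" for label in labels):
        raise ValueError("empty label found")
    return labels


def assign_labels(scores):
    """Pick, for every pixel, the index of the highest-scoring label.

    scores: array of shape (K, H, W), one score map per label
    returns: integer array of shape (H, W) with values in [0, K)
    """
    return np.argmax(scores, axis=0)


labels = parse_segclasses("plant,grass,cat,stone")
rng = np.random.default_rng(0)
scores = rng.standard_normal((len(labels), 3, 5))  # stand-in score maps
segmentation = assign_labels(scores)
print(labels, segmentation.shape)
```

Each entry of `segmentation` indexes into `labels`, so `labels[segmentation[y, x]]` gives the predicted class name for pixel `(y, x)`.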

    cd examples
    python lseg_openset_seg.py

Command-line options supported:

    usage: lseg_openset_seg.py [-h] [--checkpoint-path STR|PATH] [--backbone STR]
                               [--num-features INT] [--arch-option INT]
                               [--block-depth INT] [--activation STR]
                               [--crop-size INT] [--query-image STR|PATH]
                               [--segclasses STR]

    ╭─ arguments ────────────────────────────────────────────────────────────────╮
    │ -h, --help              show this help message and exit                    │
    │ --checkpoint-path STR|PATH                                                 │
    │                         (default:                                          │
    │                         lseg-minimal/examples/checkpoi…                    │
    │ --backbone STR          (default: clip_vitl16_384)                         │
    │ --num-features INT      (default: 256)                                     │
    │ --arch-option INT       (default: 0)                                       │
    │ --block-depth INT       (default: 0)                                       │
    │ --activation STR        (default: lrelu)                                   │
    │ --crop-size INT         (default: 480)                                     │
    │ --query-image STR|PATH  (default:                                          │
    │                         lseg-minimal/images/cat.jpg)                       │
    │ --segclasses STR        (default: plant,grass,cat,stone)                   │
    ╰────────────────────────────────────────────────────────────────────────────╯

When run with the default options, this should produce the following image