Changes

- fix bugs

- refactor code

- accelerate detection by adding NMS on GPU

Changes

- bug fix (Thanks @JieChen91 and @yingsen1 for bug reporting).

- use batched feature extraction for each frame, which leads to a small speed-up.

- code improvement.
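With batching, all detections in a frame go through the feature extractor in one call instead of one call per box. The NumPy sketch below assumes boxes in (x1, y1, x2, y2) pixel coordinates and a 64x128 crop size. `crop_detections` and `extract_features` are hypothetical names, and the random linear projection only stands in for the PyTorch ReID network.

```python
import numpy as np

def crop_detections(frame, boxes_xyxy, size=(64, 128)):
    """Crop every detection box from one frame and stack the crops into a single batch.

    frame: (H, W, C) image array; boxes_xyxy: (N, 4) array of x1, y1, x2, y2.
    size: (width, height) of each crop, an assumed value for a pedestrian ReID input.
    """
    img_h, img_w = frame.shape[:2]
    out_w, out_h = size
    crops = []
    for x1, y1, x2, y2 in np.asarray(boxes_xyxy).astype(int):
        # Clip the box to the image bounds.
        x1, y1 = max(x1, 0), max(y1, 0)
        x2, y2 = min(x2, img_w), min(y2, img_h)
        if x2 <= x1 or y2 <= y1:
            raise ValueError("box lies outside the image or is empty")
        patch = frame[y1:y2, x1:x2]
        # Nearest-neighbour resize via index sampling (a stand-in for cv2.resize).
        rows = np.linspace(0, patch.shape[0] - 1, out_h).round().astype(int)
        cols = np.linspace(0, patch.shape[1] - 1, out_w).round().astype(int)
        crops.append(patch[rows][:, cols])
    return np.stack(crops)  # (N, out_h, out_w, C)


def extract_features(crops, weights):
    """Hypothetical stand-in for the CNN: one batched matrix product for all crops,
    followed by L2 normalisation so that cosine distance is 1 - dot product."""
    flat = crops.reshape(len(crops), -1).astype(np.float32) / 255.0
    feats = flat @ weights
    return feats / np.linalg.norm(feats, axis=1, keepdims=True)


rng = np.random.default_rng(0)
frame = rng.integers(0, 256, size=(480, 640, 3), dtype=np.uint8)
boxes = np.array([[10, 20, 60, 140], [300, 100, 380, 300]])
batch = crop_detections(frame, boxes)
weights = rng.standard_normal((128 * 64 * 3, 16)).astype(np.float32)
feats = extract_features(batch, weights)
print(batch.shape, feats.shape)  # (2, 128, 64, 3) (2, 16)
```

The speed-up comes from issuing one large forward pass rather than many small ones. The per-box cropping loop itself is cheap by comparison.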

Further improvement directions

- Train the detector on a specific dataset rather than the official one.

- Retrain the ReID model on a pedestrian dataset for better performance.

- Replace the YOLOv3 detector with more advanced ones.

Any contributions to this repository are welcome!

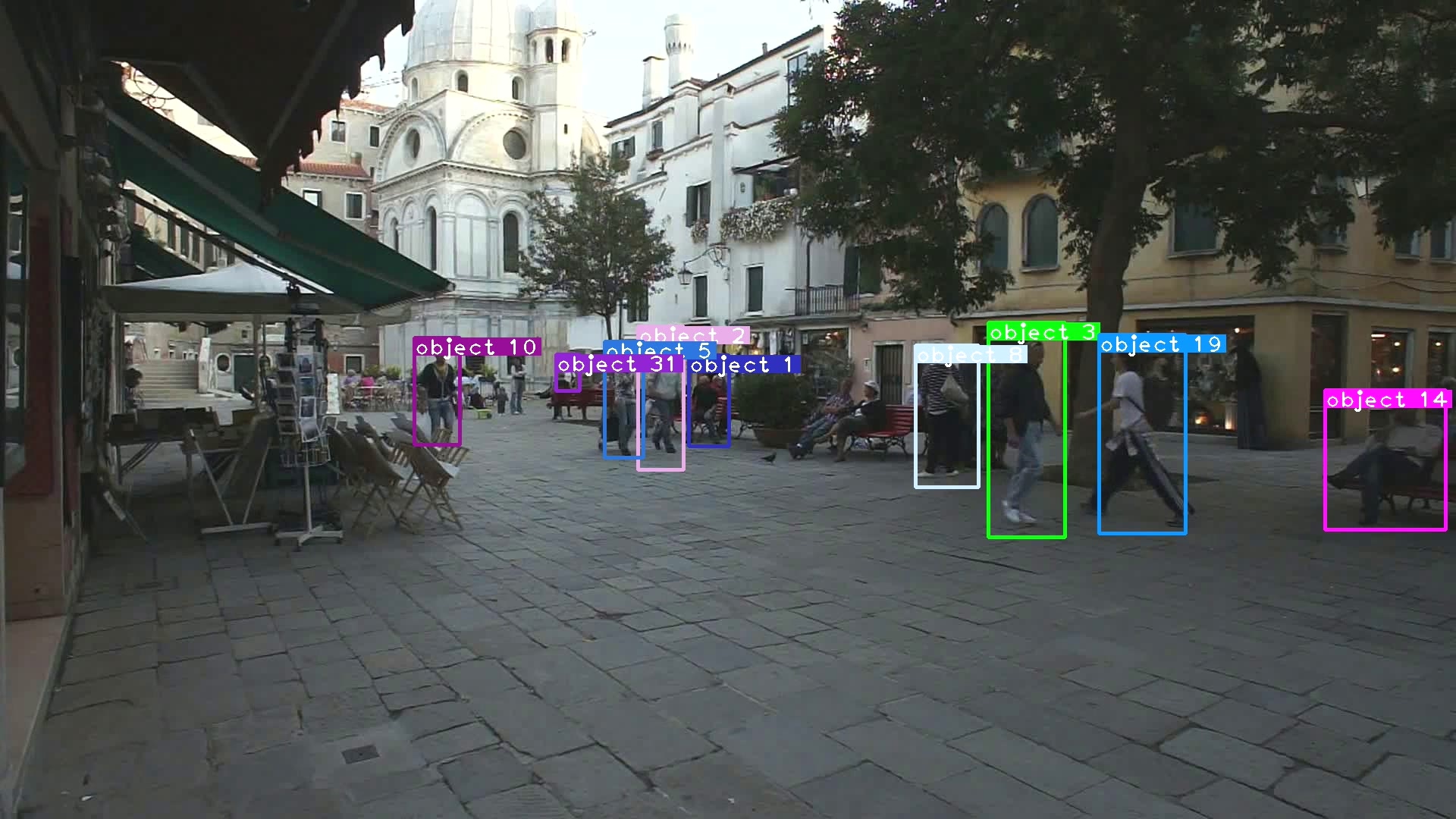

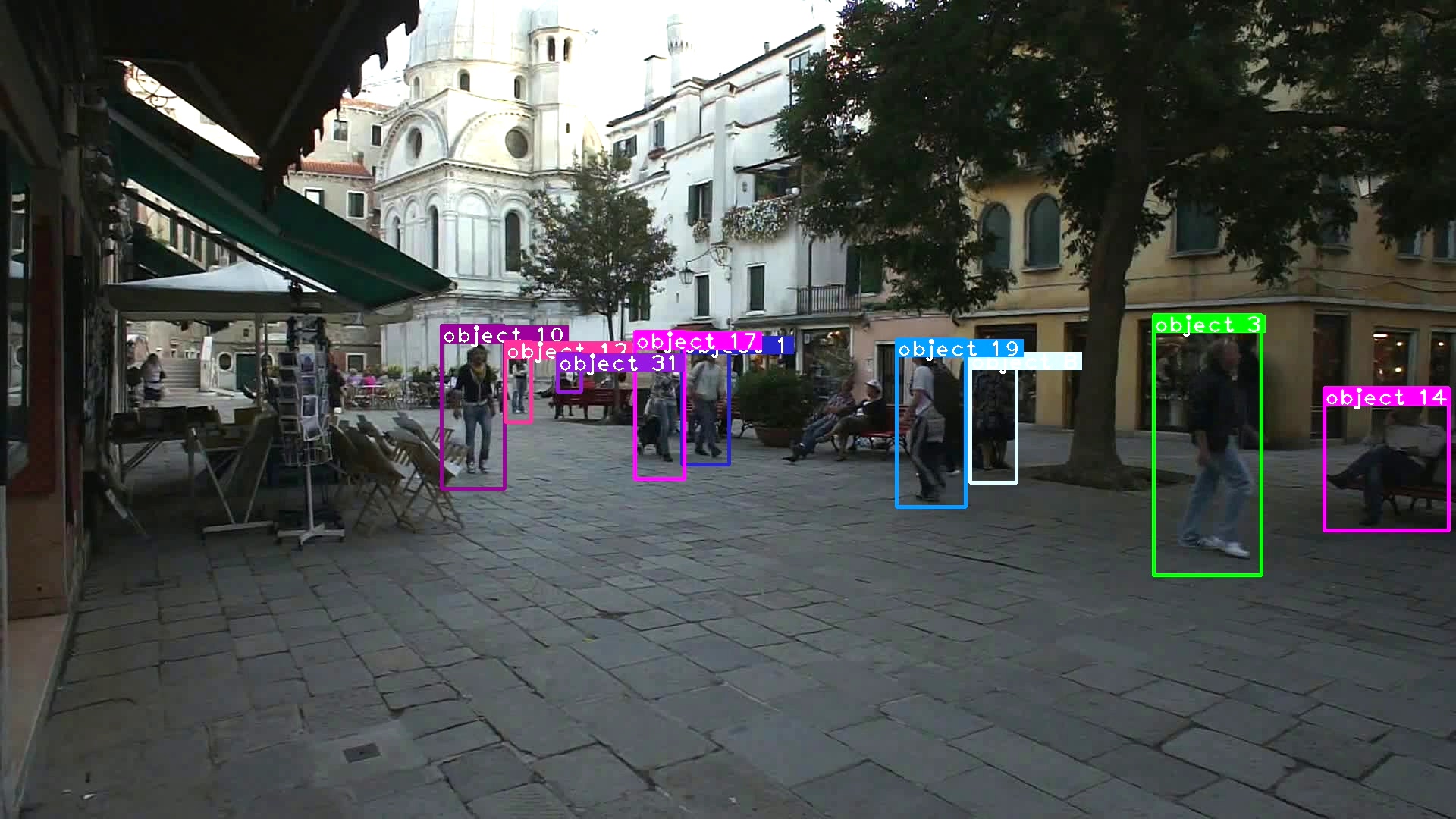

This is an implementation of the MOT tracking algorithm Deep SORT. Deep SORT is basically the same as SORT, but it adds a CNN model that extracts features from the image regions of humans bounded by a detector. This CNN model is in fact a ReID model. The detector used in the paper is Faster R-CNN, and the original source code is nwojke/deep_sort.

However, in the original code the CNN model is implemented with TensorFlow, which I'm not familiar with, so I re-implemented the CNN feature extraction model with PyTorch and changed it a little. I also use YOLOv3 instead of Faster R-CNN to generate bboxes.
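Deep SORT adds appearance-based association to SORT. Each track keeps ReID features from its recent detections, and new detections are matched to tracks by the cosine distance between features. The sketch below shows only that core step, using SciPy's Hungarian solver (`linear_sum_assignment`). The full algorithm also gates candidates by Mahalanobis distance to the Kalman-filter prediction, takes the minimum distance over each track's stored features, runs a matching cascade that favours recently updated tracks, and falls back to IoU matching. The function names and the `max_dist=0.2` threshold are assumptions for illustration.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment

def cosine_distance(track_feats, det_feats):
    """Pairwise cosine distance between track features (T, D) and detection features (N, D)."""
    a = track_feats / np.linalg.norm(track_feats, axis=1, keepdims=True)
    b = det_feats / np.linalg.norm(det_feats, axis=1, keepdims=True)
    return 1.0 - a @ b.T

def match_by_appearance(track_feats, det_feats, max_dist=0.2):
    """Hungarian matching on cosine distance; pairs costlier than max_dist are rejected."""
    cost = cosine_distance(track_feats, det_feats)
    rows, cols = linear_sum_assignment(cost)
    matches = [(int(r), int(c)) for r, c in zip(rows, cols) if cost[r, c] <= max_dist]
    matched_tracks = {t for t, _ in matches}
    matched_dets = {d for _, d in matches}
    unmatched_tracks = [t for t in range(cost.shape[0]) if t not in matched_tracks]
    unmatched_dets = [d for d in range(cost.shape[1]) if d not in matched_dets]
    return matches, unmatched_tracks, unmatched_dets


tracks = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
dets = np.array([[0.0, 0.9, 0.1], [0.95, 0.05, 0.0], [0.0, 0.0, 1.0]])
matches, unmatched_tracks, unmatched_dets = match_by_appearance(tracks, dets)
print(matches, unmatched_tracks, unmatched_dets)  # [(0, 1), (1, 0)] [] [2]
```

Unmatched detections would typically start new tentative tracks, and unmatched tracks age until they are deleted.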

- Python 3 (Python 2 untested)

- numpy

- scipy

- opencv-python

- sklearn

- torch >= 0.4

- torchvision >= 0.1

- pillow

- vizer

- edict
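To see which of the packages above can be imported in the current environment, you can run a check like the one below. The import names (`cv2` for opencv-python, `PIL` for pillow, `easydict` for edict) are assumptions about the packages the list refers to.

```python
import importlib.util

# pip package name -> module name used in `import` (assumed mapping)
REQUIRED = {
    "numpy": "numpy",
    "scipy": "scipy",
    "opencv-python": "cv2",
    "sklearn": "sklearn",
    "torch": "torch",
    "torchvision": "torchvision",
    "pillow": "PIL",
    "vizer": "vizer",
    "edict": "easydict",  # assumption: "edict" refers to the easydict package
}

def missing_packages(required=REQUIRED):
    """Return the packages whose top-level module cannot be found (nothing is imported)."""
    return [pkg for pkg, module in required.items() if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages()
    print("missing:", ", ".join(missing) if missing else "none")
```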

- Check that all dependencies are installed

pip install -r requirements.txt

For users in China, you can specify a PyPI mirror to speed up installation, for example:

pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

- Clone this repository

git clone git@github.com:ZQPei/deep_sort_pytorch.git

- Download YOLOv3 parameters

cd detector/YOLOv3/weight/

wget https://pjreddie.com/media/files/yolov3.weights

wget https://pjreddie.com/media/files/yolov3-tiny.weights

cd ../../../
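If the demo fails while loading the detector, rule out a truncated download or an HTML error page saved under the .weights name. The sketch below reads the header of a Darknet weights file. It assumes the standard Darknet layout: three int32 version numbers, then a counter of training images seen (64-bit when the version is 0.2 or later), then raw float32 weights. `read_darknet_header` is a hypothetical helper, not part of this repo.

```python
import os
import numpy as np

def read_darknet_header(path):
    """Read the header of a Darknet .weights file (assumes little-endian, standard Darknet layout)."""
    with open(path, "rb") as f:
        head = f.read(20)
    major, minor, revision = (int(v) for v in np.frombuffer(head, dtype="<i4", count=3))
    if major * 10 + minor >= 2 and major < 1000 and minor < 1000:
        # Newer files store the "images seen" counter as a 64-bit integer.
        seen, offset = int(np.frombuffer(head, dtype="<u8", count=1, offset=12)[0]), 20
    else:
        seen, offset = int(np.frombuffer(head, dtype="<i4", count=1, offset=12)[0]), 16
    # Everything after the header is float32 weights; a non-zero remainder suggests a damaged file.
    num_weights, trailing_bytes = divmod(os.path.getsize(path) - offset, 4)
    return {"version": (major, minor, revision), "seen": seen,
            "num_weights": num_weights, "trailing_bytes": trailing_bytes}


weights_path = "detector/YOLOv3/weight/yolov3.weights"
if os.path.exists(weights_path):
    print(read_darknet_header(weights_path))
```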

- Download the Deep SORT parameters ckpt.t7

cd deep_sort/deep/checkpoint

# download ckpt.t7 from https://drive.google.com/drive/folders/1xhG0kRH1EX5B9_Iz8gQJb7UNnn_riXi6 to this folder

cd ../../../

- Compile the NMS module

cd detector/YOLOv3/nms

sh build.sh

cd ../../..

Notice:

If compilation fails, the simplest solution is to **upgrade to PyTorch >= 1.1 and torchvision >= 0.3**. This avoids the troublesome compilation problems, which are most likely caused by an outdated gcc version or missing libraries.
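The compiled module implements non-maximum suppression (NMS), which removes lower-scoring boxes that overlap a higher-scoring one. torchvision >= 0.3 ships `torchvision.ops.nms(boxes, scores, iou_threshold)`, which is presumably why upgrading sidesteps the build. For reference, here is a plain NumPy version of greedy NMS. It runs on CPU only, and the default threshold is an assumption.

```python
import numpy as np

def nms(boxes, scores, iou_threshold=0.5):
    """Greedy non-maximum suppression.

    boxes: (N, 4) float array of x1, y1, x2, y2; scores: (N,) float array.
    Returns indices of kept boxes, highest score first.
    """
    x1, y1, x2, y2 = boxes.T
    areas = (x2 - x1) * (y2 - y1)
    order = scores.argsort()[::-1]  # indices sorted by descending score
    keep = []
    while order.size > 0:
        best, rest = order[0], order[1:]
        keep.append(int(best))
        # Intersection of the best box with every remaining box.
        w = np.clip(np.minimum(x2[best], x2[rest]) - np.maximum(x1[best], x1[rest]), 0, None)
        h = np.clip(np.minimum(y2[best], y2[rest]) - np.maximum(y1[best], y1[rest]), 0, None)
        inter = w * h
        iou = inter / (areas[best] + areas[rest] - inter)
        # Drop boxes that overlap the kept box too much; keep the rest for later rounds.
        order = rest[iou <= iou_threshold]
    return keep


boxes = np.array([[0, 0, 10, 10], [1, 1, 11, 11], [20, 20, 30, 30]], dtype=float)
scores = np.array([0.9, 0.8, 0.7])
print(nms(boxes, scores))  # [0, 2]
```

The first two boxes overlap with IoU 81/119 (about 0.68), so the lower-scoring one is suppressed at a 0.5 threshold.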

- (Optional) Prepare third party submodules

Run

git submodule update --init --recursive

to prepare the bundled fast-reid and MMDetection submodules, then follow the instructions in each submodule's README to install it.

fast-reid: this library supports bagtricks, AGW and other mainstream ReID methods by providing a fast-reid adapter. Please refer to configs/fastreid.yaml for a sample of using fast-reid. See the fast-reid Model Zoo for available methods and trained models.

MMDetection: this library supports Faster R-CNN and other mainstream detection methods by providing an MMDetection adapter. Please refer to configs/mmdet.yaml for a sample of using MMDetection. See the MMDetection Model Zoo for available methods and trained models.

- Run demo

usage: deepsort.py [-h]
                   [--fastreid]
                   [--config_fastreid CONFIG_FASTREID]
                   [--mmdet]
                   [--config_mmdetection CONFIG_MMDETECTION]
                   [--config_detection CONFIG_DETECTION]
                   [--config_deepsort CONFIG_DEEPSORT] [--display]
                   [--frame_interval FRAME_INTERVAL]
                   [--display_width DISPLAY_WIDTH]
                   [--display_height DISPLAY_HEIGHT] [--save_path SAVE_PATH]
                   [--cpu] [--camera CAM]
                   VIDEO_PATH
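If you are wiring the demo into your own scripts, the usage string above corresponds to an argparse interface along these lines. This sketch reproduces only the flag names shown. The argument types are assumptions, and the real deepsort.py may define defaults (for example for the config paths) that the usage string does not reveal.

```python
import argparse

def build_parser():
    """Hypothetical parser mirroring the usage string; types are assumptions and no defaults are set."""
    parser = argparse.ArgumentParser(prog="deepsort.py")
    parser.add_argument("VIDEO_PATH", type=str)
    parser.add_argument("--fastreid", action="store_true")
    parser.add_argument("--config_fastreid", type=str)
    parser.add_argument("--mmdet", action="store_true")
    parser.add_argument("--config_mmdetection", type=str)
    parser.add_argument("--config_detection", type=str)
    parser.add_argument("--config_deepsort", type=str)
    parser.add_argument("--display", action="store_true")
    parser.add_argument("--frame_interval", type=int)
    parser.add_argument("--display_width", type=int)
    parser.add_argument("--display_height", type=int)
    parser.add_argument("--save_path", type=str)
    parser.add_argument("--cpu", action="store_true")
    # dest="cam" is what makes argparse print the metavar as CAM in "--camera CAM"
    parser.add_argument("--camera", dest="cam", type=int)
    return parser


args = build_parser().parse_args(
    ["/dev/video0", "--config_detection", "./configs/yolov3_tiny.yaml", "--camera", "0"]
)
print(args.VIDEO_PATH, args.cam, args.display)  # /dev/video0 0 False
```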

# yolov3 + deepsort

python deepsort.py [VIDEO_PATH]

# yolov3_tiny + deepsort

python deepsort.py [VIDEO_PATH] --config_detection ./configs/yolov3_tiny.yaml

# yolov3 + deepsort on webcam

python3 deepsort.py /dev/video0 --camera 0

# yolov3_tiny + deepsort on webcam

python3 deepsort.py /dev/video0 --config_detection ./configs/yolov3_tiny.yaml --camera 0

# fast-reid + deepsort

python deepsort.py [VIDEO_PATH] --fastreid [--config_fastreid ./configs/fastreid.yaml]

# MMDetection + deepsort

python deepsort.py [VIDEO_PATH] --mmdet [--config_mmdetection ./configs/mmdet.yaml]

Use --display to enable display.

Results will be saved to ./output/results.avi and ./output/results.txt.
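To post-process results.txt, you can use a reader like the one below, which groups boxes by frame. It assumes MOTChallenge-style comma-separated rows (`frame,id,x1,y1,w,h,...`), so check a line of your own output before relying on that layout. `load_tracking_results` is a hypothetical helper.

```python
import csv
from collections import defaultdict

def load_tracking_results(lines):
    """Group tracked boxes by frame number.

    Assumes MOTChallenge-style rows: frame,id,x1,y1,w,h[,extra columns ignored].
    Returns {frame: [(track_id, x1, y1, w, h), ...]}.
    """
    per_frame = defaultdict(list)
    for row in csv.reader(lines):
        if not row:
            continue  # skip blank lines
        frame, track_id = int(row[0]), int(row[1])
        x1, y1, w, h = (float(v) for v in row[2:6])
        per_frame[frame].append((track_id, x1, y1, w, h))
    return dict(per_frame)


sample = [
    "1,3,10,20,30,40,-1,-1,-1,-1",
    "1,4,50,60,10,10,-1,-1,-1,-1",
    "2,3,12,21,30,40,-1,-1,-1,-1",
]
results = load_tracking_results(sample)
print(sorted({tid for boxes in results.values() for tid, *_ in boxes}))  # [3, 4]
```

On a real run, pass the open file instead: `with open("./output/results.txt") as f: results = load_tracking_results(f)`.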

All files above can also be accessed from BaiduDisk!

link: BaiduDisk

password: fbuw

The original model used in the paper is in original_model.py, and its parameters are available here: original_ckpt.t7.

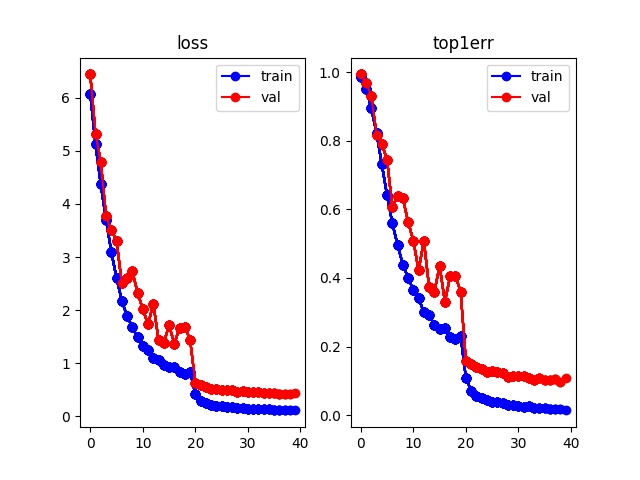

To train the model, first download the Market1501 or MARS dataset.

Then run train.py to train your own parameters, and evaluate them with test.py and evaluate.py.
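ReID evaluation usually reports rank-1 (top-1) accuracy: for each query image, the gallery image with the most similar feature should share its identity. Below is a simplified NumPy sketch, assuming features are (N, D) arrays with integer identity labels. It is not a copy of evaluate.py, and it skips a detail of the standard Market1501 protocol: excluding same-camera gallery images of the query's identity.

```python
import numpy as np

def top1_accuracy(query_feats, query_labels, gallery_feats, gallery_labels):
    """Fraction of queries whose most similar gallery feature (cosine similarity) has the same ID."""
    q = query_feats / np.linalg.norm(query_feats, axis=1, keepdims=True)
    g = gallery_feats / np.linalg.norm(gallery_feats, axis=1, keepdims=True)
    nearest = (q @ g.T).argmax(axis=1)  # index of best gallery match per query
    return float(np.mean(np.asarray(gallery_labels)[nearest] == np.asarray(query_labels)))


query = np.array([[1.0, 0.0], [0.0, 1.0]])
gallery = np.array([[0.9, 0.1], [0.1, 0.9], [-1.0, 0.0]])
print(top1_accuracy(query, [1, 2], gallery, [1, 3, 2]))  # 0.5
```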

- paper: Simple Online and Realtime Tracking with a Deep Association Metric

- code: nwojke/deep_sort

- paper: YOLOv3

- code: Joseph Redmon/yolov3