Some tips / things of note for myself while I'm learning Nextflow

- Write notes on the newest Nextflow release: https://www.nextflow.io/blog/2024/nextflow-2404-highlights.html

Nextflow is a framework for writing parallel and distributed computational pipelines. It is widely used in bioinformatics, genomics, and other fields that rely on complex data analysis pipelines, and it simplifies the process of creating workflows that process large volumes of data.

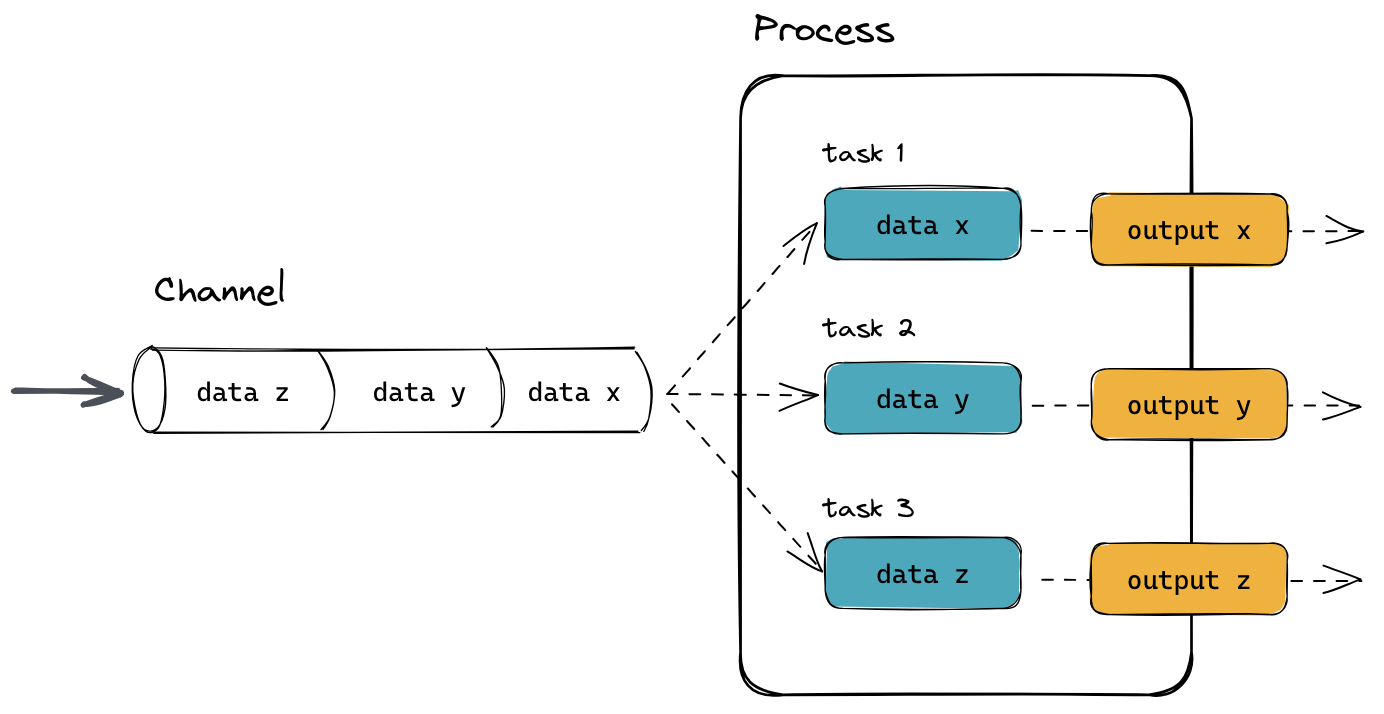

Nextflow pipelines are built from three main components: channels, processes, and workflows. Channels represent data streams, processes perform computations on that data, and workflows orchestrate the execution of processes and manage the flow of data through the pipeline.

The central tenet of Nextflow is generating pipelines with 100% reproducibility. Most bioinformatic studies use multiple software tools and languages, all with different versions. This makes reproducibility very difficult, as you have to go back and figure out which software, versions and dependencies were used. Worse still, some software might not work on certain machines, or can even behave differently depending on the machine you run the analysis on, resulting in different statistical calculations and outputs from the same input data (see https://doi.org/10.1038/nbt.3820 for more information). With Nextflow, if you use it correctly and follow all the best practices, your data analysis pipelines should be fully reproducible regardless of when or where they are run.

Nextflow pipelines are written in files with the `.nf` extension.

For example, create a file called `main.nf` containing the following code:

```groovy
#!/usr/bin/env nextflow

process FASTQC {
    input:
    path input

    output:
    // no space after the comma: "{zip, html}" would look for files ending in " html"
    path "*_fastqc.{zip,html}"

    script:
    """
    fastqc -q $input
    """
}

workflow {
    Channel.fromPath("*.fastq.gz") | FASTQC
}
```

Note

The shebang (#!/usr/bin/env nextflow) is a line that helps the operating system decide what program should be used to interpret this script code. If you always use Nextflow to call this script, this line is optional.

You can then run the pipeline with the following command:

```bash
nextflow run main.nf
```

Only run it like this the first time. Running the same command again re-executes the entire workflow from the beginning, and it's better not to rerun processes that don't need to be rerun, since that can save a lot of time.

When you run a Nextflow pipeline, you will get output like this in your terminal (this particular example comes from a script with SPLITLETTERS and CONVERTTOUPPER processes, not the FASTQC script above):

```
N E X T F L O W ~ version 23.10.0
Launching `main.nf` [focused_noether] DSL2 - revision: 197a0e289a
executor > local (3)
[18/f6351b] process > SPLITLETTERS (1) [100%] 1 of 1 ✔
[2f/007bc5] process > CONVERTTOUPPER (1) [100%] 2 of 2 ✔
WORLD!
HELLO
```

This is what each part means:

```
N E X T F L O W ~
# THE VERSION OF NEXTFLOW THAT WAS USED FOR THIS RUN SPECIFICALLY
version 23.10.0
# THE NAME OF THE SCRIPT FILE THAT WAS RUN WITH NEXTFLOW
Launching `main.nf`
# A GENERATED RUN NAME MADE OF A RANDOM ADJECTIVE AND THE SURNAME OF A FAMOUS SCIENTIST
[focused_noether]
# THE VERSION OF THE NEXTFLOW LANGUAGE
DSL2
# THE REVISION HASH, WHICH IS LIKE AN ID OF YOUR PIPELINE.
# IF YOU CHANGE THE NEXTFLOW SCRIPT CODE, THIS REVISION ID WILL CHANGE
- revision: 197a0e289a
# THE EXECUTOR FOR THE PIPELINE
executor > local
# THE NUMBER OF TASKS SUBMITTED TO THIS EXECUTOR SO FAR
(3)
# A LIST OF PROCESSES AND TASKS
[18/f6351b] process > SPLITLETTERS (1) [100%] 1 of 1 ✔
[2f/007bc5] process > CONVERTTOUPPER (1) [100%] 2 of 2 ✔
## EVERY TASK WILL HAVE A UNIQUE HASH
## EVERY TASK IS ISOLATED FROM THE OTHERS
## THESE HASHES CORRESPOND TO DIRECTORY NAMES WHERE YOU CAN GO AND VIEW INFORMATION ABOUT THAT SPECIFIC TASK
[18/f6351b]
[2f/007bc5] # BY DEFAULT NEXTFLOW SHOWS THE HASH OF ONLY ONE TASK PER PROCESS.
# THE WORKFLOW OUTPUTS
WORLD!
HELLO
```

When using the `-resume` flag, successfully completed tasks are skipped and the previously cached results are used in downstream tasks.

```bash
nextflow run main.nf -resume
```

In practice, every execution still starts from the beginning. However, when using `-resume`, before launching a task Nextflow uses the task's unique ID to check whether:

- the working directory exists

- it contains a valid command exit status

- it contains the expected output files.

The mechanism works by assigning a unique ID to each task. This unique ID is used to create a separate execution directory, called the working directory, where the tasks are executed and the results stored. A task’s unique ID is generated as a 128-bit hash number obtained from a composition of the task’s:

- Input values

- Input files

- Command line string

- Container ID

- Conda environment

- Environment modules

- Any executed scripts in the bin directory
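A practical consequence of the list above: by default, input files enter the hash through their path, size and last-modified timestamp rather than their contents, so touching or re-copying a file can invalidate the cache. If that causes unwanted re-runs (for example on shared file systems with unreliable timestamps), the `cache` directive changes how inputs are hashed. A minimal `nextflow.config` sketch; the process name `FASTQC` is only borrowed from the example above for illustration:

```groovy
// nextflow.config (sketch)
process {
    withName: 'FASTQC' {
        // 'lenient': hash input files by path and size only (timestamps ignored)
        // 'deep'   : hash the actual file contents (slower for large files)
        cache = 'lenient'
    }
}
```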

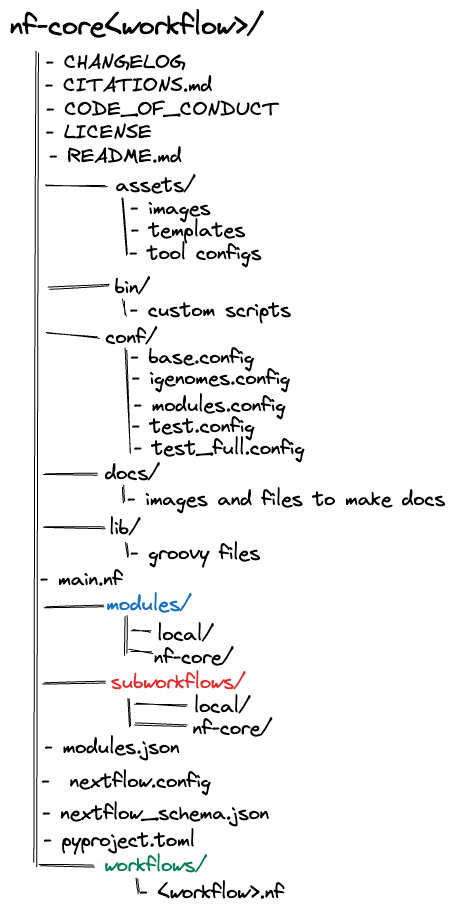

A basic, professional Nextflow pipeline structure looks something like this:

```
.
├── main.nf
├── modules
│   ├── local
│   └── nf-core
├── subworkflows
│   ├── local
│   └── nf-core
└── workflows
    ├── illumina.nf
    ├── nanopore.nf
    └── sra_download.nf
```

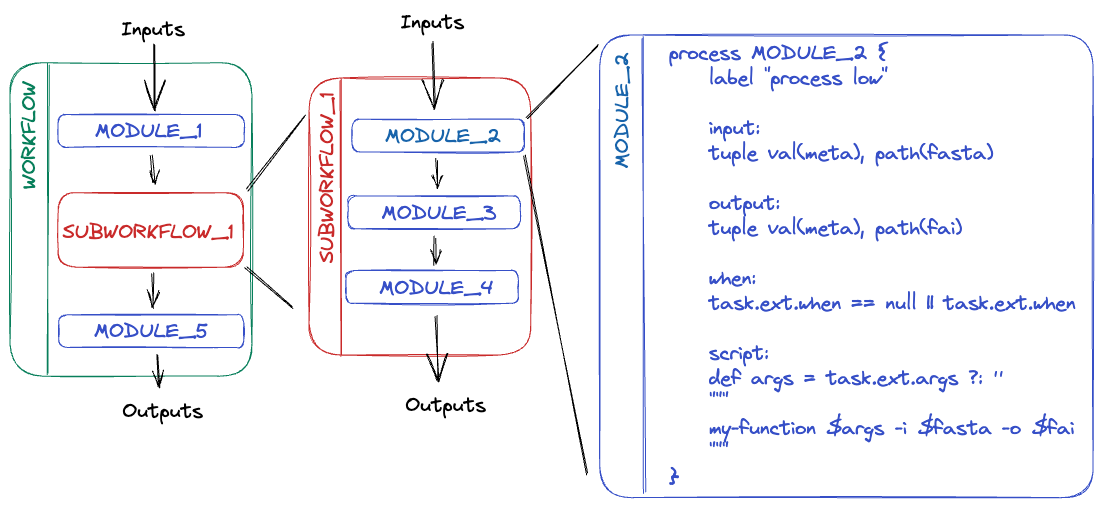

Terminology:

- Module:
  - An atomic process
  - e.g. a module containing a single tool such as FastQC
- Subworkflow:
  - A chain of multiple modules with a higher level of functionality
  - e.g. a subworkflow to run multiple QC tools on FastQ files
- Workflows:
  - An end-to-end pipeline
  - DSL1: A large monolithic script
  - DSL2: A combination of individual modules and subworkflows
  - e.g. a pipeline to produce a series of final outputs from one or more inputs
- main.nf:
  - The entry point for Nextflow
  - Where all the workflows will be called from
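A minimal sketch of main.nf acting as the entry point for the workflows in the tree above. It assumes each file under workflows/ defines a named workflow that takes a single channel of reads; the names `ILLUMINA` and `NANOPORE` and the `params.platform` and `params.input` parameters are hypothetical:

```groovy
#!/usr/bin/env nextflow

// Hypothetical named workflows, one per file under workflows/
include { ILLUMINA } from './workflows/illumina'
include { NANOPORE } from './workflows/nanopore'

params.platform = 'illumina'          // hypothetical: which sequencing platform the reads come from
params.input    = 'data/*.fastq.gz'   // hypothetical input location

workflow {
    reads_ch = Channel.fromPath(params.input)

    // main.nf only decides which workflow to call; the real work lives in workflows/
    if (params.platform == 'illumina') {
        ILLUMINA(reads_ch)
    } else if (params.platform == 'nanopore') {
        NANOPORE(reads_ch)
    } else {
        error "Unknown platform: ${params.platform}"
    }
}
```

With this layout, switching pipelines is just a parameter, e.g. `nextflow run main.nf --platform nanopore`.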

Note

DSL1 and DSL2 refer to two versions of the Nextflow Domain Specific Language (DSL).

When a workflow is run, the first thing Nextflow looks for is configuration files in multiple locations. Since each configuration file can contain conflicting settings, the sources are ranked to determine which settings are applied. Possible configuration sources, in order of priority (highest first):

- Parameters specified on the command line (`--something value`)
- Parameters provided using the `-params-file` option
- Config file specified using the `-c my_config` option
- The config file named `nextflow.config` in the current directory
- The config file named `nextflow.config` in the workflow project directory
- The config file `$HOME/.nextflow/config`
- Values defined within the pipeline script itself (e.g. `main.nf`)

Note

Precedence is in order of 'distance from the command-line invocation'. Parameters specified directly on the CLI (`--example foo`) are "closer" to the run than configuration specified in the remote repository, so they take priority.

When more than one of these configuration sources is used, they are merged, so that the settings in the first override the same settings appearing in the second, and so on.

This is an example of a Nextflow configuration in a file called nextflow.config:

```groovy
propertyOne = 'world'
propertyTwo = "Hello $propertyOne"
customPath = "$PATH:/my/app/folder"
```

Note

The quotes act like they do in Bash. Variables inside single quotes remain literal. Variables inside double quotes get expanded (including environment variables).

Configuration settings can be organized in different scopes by dot-prefixing the property names with a scope identifier, or by grouping the properties in the same scope using curly brackets. For example:

```groovy
alpha.x = 1
alpha.y = 'string value..'

beta {
    p = 2
    q = 'another string ..'
}
```

The `params` scope allows the definition of workflow parameters that override the values defined in the main workflow script.

This is useful to consolidate one or more execution parameters in a separate file.

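nextflow.config

```groovy
params.foo = 'Bonjour'
params.bar = 'le monde!'
```

snippet.nf

```groovy
params.foo = 'Hello'
params.bar = 'world!'

// print both params
println "$params.foo $params.bar"
```

Running `nextflow run snippet.nf` next to this nextflow.config prints `Bonjour le monde!`, because the config values override the ones set in the script.

Any variable set using `params.` can be modified from the command line when executing Nextflow, using two dashes (`--`). E.g.

```bash
nextflow run snippet.nf -resume --foo "Bye"
```

which prints `Bye le monde!`.

Note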

In Nextflow, single dashes (-) in command line arguments refer to Nextflow commands (e.g. -resume), while double dashes (--) refer to workflow parameters

Note

Values assigned to `params.` are plain values; when a param is passed directly as a process input, it is treated as a value channel (see more information below on value channels).

The env scope allows the definition of one or more variables that will be exported into the environment where the workflow tasks will be executed.

```groovy
env.NCBI_SETTINGS = params.settings_file
```

Process directives allow the specification of settings for the task execution such as cpus, memory, container, and other resources in the workflow script.

This is useful when prototyping a small workflow script.

However, it’s always a good practice to decouple the workflow execution logic from the process configuration settings, i.e. it’s strongly suggested to define the process settings in the workflow configuration file instead of the workflow script.

The process configuration scope allows the setting of any process directives in the Nextflow configuration file:

nextflow.config

```groovy
process {
    cpus = 10
    memory = 8.GB
    container = 'biocontainers/bamtools:v2.4.0_cv3'
}
```

The above config snippet defines the cpus, memory and container directives for all processes in your workflow script. Depending on your executor, these settings may behave differently.

The withLabel selectors allow the configuration of all processes annotated with a label directive as shown below:

```groovy
process {
    withLabel: big_mem {
        cpus = 16
        memory = 64.GB
        queue = 'long'
    }
}
```

The above configuration example assigns 16 cpus, 64 GB of memory and the long queue to all processes annotated with the big_mem label.

Labels can be added using the `label` directive, e.g.

```groovy
process bigTask {
    label 'big_mem'

    """
    <task script>
    """
}
```

In the same manner, the `withName` selector allows the configuration of a specific process in your pipeline by its name. For example:

```groovy
process {
    withName: hello {
        cpus = 4
        memory = 8.GB
        queue = 'short'
    }
}
```

A process selector can also be negated by prefixing it with the special character `!`. For example:

```groovy
process {
    withLabel: 'foo' { cpus = 2 }
    withLabel: '!foo' { cpus = 4 }
    withName: '!align.*' { queue = 'long' }
}
```

The above configuration snippet sets 2 cpus for the processes annotated with the foo label and 4 cpus for all processes not annotated with that label. Finally, it sets the long queue for all processes whose names do not start with align.

Both label and process name selectors allow the use of a regular expression in order to apply the same configuration to all processes matching the specified pattern.

For example:

```groovy
process {
    withLabel: 'foo|bar' {
        cpus = 2
        memory = 4.GB
    }
}
```

The above configuration snippet sets 2 cpus and 4 GB of memory for the processes annotated with either the foo or the bar label.


If you already have a Conda environment on your machine that you want to use for your processes, you can use:

nextflow.config

```groovy
process.conda = "/home/ubuntu/miniconda2/envs/nf-tutorial"
```

You can specify either the path of an existing Conda environment directory or the path of a Conda environment YAML file.
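In recent Nextflow versions Conda support is disabled by default, so a process.conda setting on its own may be ignored until Conda is switched on, either with the `-with-conda` option of `nextflow run` or in the config. A sketch, where envs/environment.yml is a hypothetical file in the project directory:

```groovy
// nextflow.config (sketch)
conda.enabled = true

// Option 1: reuse an existing environment directory
// process.conda = '/home/ubuntu/miniconda2/envs/nf-tutorial'

// Option 2: let Nextflow build (and cache) an environment from a YAML file
process.conda = "$projectDir/envs/environment.yml"   // hypothetical file
```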

When a workflow script is launched, Nextflow looks for a file named nextflow.config in the current directory and in the script base directory (if it is not the same as the current directory). Finally, it checks for the file: $HOME/.nextflow/config.

When more than one of the above files exists, they are merged, so that the settings in the first override the same settings that may appear in the second, and so on.

The default config file search mechanism can be extended by providing an extra configuration file by using the command line option: -c <config file>.

Information on writing these config files can be found here https://training.nextflow.io/basic_training/config/.

If you add the following code to the nextflow.config file:

```groovy
process.executor = 'slurm'
```

then Nextflow will write the SLURM job script for every task for you. Nextflow manages each task as a separate job that is submitted to the cluster using the `sbatch` command.

More information on how to configure this further can be found here https://www.nextflow.io/docs/latest/executor.html#slurm
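As a sketch of what that further configuration can look like (the partition name, account and resource values are placeholders, not recommendations), a nextflow.config for SLURM might contain:

```groovy
// nextflow.config (sketch): submit every task as a SLURM job
process {
    executor       = 'slurm'
    queue          = 'WORK'                  // placeholder partition name
    clusterOptions = '--account=my_account'  // placeholder: extra options added to each job script
    cpus           = 2
    memory         = 4.GB
    time           = 2.h
}

executor {
    queueSize = 50   // maximum number of tasks Nextflow keeps queued or running at once
}
```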

When running Nextflow on an HPC, it's recommended to run Nextflow itself as a job on a compute node, because many computing clusters have strict rules about running processes on login nodes. Therefore, it's advisable to create a job script like this for your Nextflow runs:

launch_nf.sh

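```bash
#!/bin/bash
#SBATCH --partition WORK
#SBATCH --mem 5G
#SBATCH -c 1
#SBATCH -t 12:00:00

WORKFLOW=$1
CONFIG=$2

# Use a conda environment where you have installed Nextflow
# (may not be needed if you have installed it in a different way).
# In a non-interactive batch job, conda may need to be initialised first:
eval "$(conda shell.bash hook)"
conda activate Nextflow

# Note: -C uses ONLY this config file (nextflow.config and ~/.nextflow/config are ignored);
# use "nextflow run ${WORKFLOW} -c ${CONFIG}" instead to layer it on top of them
nextflow -C ${CONFIG} run ${WORKFLOW}
```

and launch the workflow using:

```bash
sbatch launch_nf.sh /home/my_user/path/my_workflow.nf /home/my_user/path/my_config_file.conf
```

The following information about configuration is taken from the Nextflow advanced training.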

There may be some configuration values that you will want applied on all runs for a given system. These configuration values should be written to ~/.nextflow/config.

For example, you may have an account on an HPC system and know that you always want to submit jobs using the SLURM scheduler on that machine, and always use the Singularity container engine. In this case, your ~/.nextflow/config file may include:

```groovy
process.executor = 'slurm'
singularity.enabled = true
```

These configuration values would be inherited by every run on that system without you needing to remember to specify them each time.

Process directives (see the process directives section of the Nextflow documentation) can be overridden using the process block.

For example, to specify that all tasks for a given run should use 2 cpus, add the following to the nextflow.config file in the current working directory:

```groovy
process {
    cpus = 2
}
```

... and then run:

```bash
nextflow run rnaseq-nf
```

We can make the configuration more specific by using process selectors.

We can use process names and/or labels to apply process-level directives to specific tasks:

```groovy
process {
    withName: 'RNASEQ:INDEX' {
        cpus = 2
    }
}
```

Regular expression pattern matching can also be used:

```groovy
process {
    withName: '.*:INDEX' {
        cpus = 2
    }
}
```

This will make any task whose process name ends with :INDEX run with 2 cpus.

To make sure the pattern matches the processes you expect, you can also configure them to have a `tag` directive. The process tag is the value that's printed in parentheses after the process name.

For example, running the Nextflow pipeline with the above configuration outputs this:

```
N E X T F L O W ~ version 23.04.3
Launching `https://github.com/nextflow-io/rnaseq-nf` [fabulous_bartik] DSL2 - revision: d910312506 [master]
R N A S E Q - N F P I P E L I N E
===================================
transcriptome: /home/gitpod/.nextflow/assets/nextflow-io/rnaseq-nf/data/ggal/ggal_1_48850000_49020000.Ggal71.500bpflank.fa
reads : /home/gitpod/.nextflow/assets/nextflow-io/rnaseq-nf/data/ggal/ggal_gut_{1,2}.fq
outdir : results

executor > local (4)
[1d/3c5cfc] process > RNASEQ:INDEX (ggal_1_48850000_49020000) [100%] 1 of 1 ✔
[38/a6b717] process > RNASEQ:FASTQC (FASTQC on ggal_gut) [100%] 1 of 1 ✔
[39/5f1cc4] process > RNASEQ:QUANT (ggal_gut) [100%] 1 of 1 ✔
[f4/351d02] process > MULTIQC [100%] 1 of 1 ✔

Done! Open the following report in your browser --> results/multiqc_report.html
```

If you modify the nextflow.config to look like this:

```groovy
process {
    withName: '.*:INDEX' {
        cpus = 2
        tag = "Example debug"
    }
}
```

The output will then change to this, showing that only the processes ending with :INDEX are affected.

```
N E X T F L O W ~ version 23.04.3
Launching `https://github.com/nextflow-io/rnaseq-nf` [fabulous_bartik] DSL2 - revision: d910312506 [master]
R N A S E Q - N F P I P E L I N E
===================================
transcriptome: /home/gitpod/.nextflow/assets/nextflow-io/rnaseq-nf/data/ggal/ggal_1_48850000_49020000.Ggal71.500bpflank.fa
reads : /home/gitpod/.nextflow/assets/nextflow-io/rnaseq-nf/data/ggal/ggal_gut_{1,2}.fq
outdir : results

executor > local (4)
[1d/3c5cfc] process > RNASEQ:INDEX (Example debug) [100%] 1 of 1 ✔
[38/a6b717] process > RNASEQ:FASTQC (FASTQC on ggal_gut) [100%] 1 of 1 ✔
[39/5f1cc4] process > RNASEQ:QUANT (ggal_gut) [100%] 1 of 1 ✔
[f4/351d02] process > MULTIQC [100%] 1 of 1 ✔

Done! Open the following report in your browser --> results/multiqc_report.html
```

We can specify dynamic directives using closures that are evaluated when each task is submitted. This allows us to (for example) scale the number of CPUs used by a task by the number of input files. This is one of the benefits of Nextflow's dynamic paradigm: some settings of a task can be calculated from that task's inputs at the moment it is launched, rather than being fixed before the run begins.

Given the FASTQC process in the rnaseq-nf workflow:

```groovy
process FASTQC {
    tag "FASTQC on $sample_id"
    conda 'fastqc=0.12.1'
    publishDir params.outdir, mode:'copy'

    input:
    tuple val(sample_id), path(reads)

    output:
    path "fastqc_${sample_id}_logs"

    script:
    """
    fastqc.sh "$sample_id" "$reads"
    """
}
```

We might choose to scale the number of CPUs for the process by the number of files in reads:

```groovy
process {
    withName: 'FASTQC' {
        cpus = { reads.size() }
    }
}
```

Remember, dynamic directives are supplied a closure, in this case { reads.size() }. This tells Nextflow "I want to defer evaluation of this; I'm just supplying a closure that will be evaluated later" (think of callback functions in JavaScript).
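To see what deferred evaluation means outside of Nextflow, here is a plain Groovy analogy. It is not Nextflow's actual mechanism (in a directive, reads comes from the task's inputs rather than from a surrounding variable), and the file names are made up; it only shows that defining a closure computes nothing until the closure is called:

```groovy
// Plain Groovy: defining a closure does not run it
def reads = ['ggal_gut_1.fq', 'ggal_gut_2.fq']
def cpus = { reads.size() }    // nothing is computed yet

reads << 'ggal_gut_3.fq'        // change the data after the closure was defined

// The closure is evaluated only now, so it sees all three files
println "cpus = ${cpus()}"
```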

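We can even use the size of the input files. Here we simply sum together the file sizes (in bytes) and use the total in the tag directive:

```groovy
process {
    withName: 'FASTQC' {
        // reads is a list of paths here: .size() counts the files,
        // while *.size() gives each file's size in bytes
        cpus = { reads.size() }
        tag = {
            def total_bytes = reads*.size().sum()
            "Total size: ${total_bytes as MemoryUnit}"
        }
    }
}
```

Note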

MemoryUnit is a Nextflow built-in class for turning strings and integers into human-readable memory units.

When we run this:

```
N E X T F L O W ~ version 23.04.3
Launching `https://github.com/nextflow-io/rnaseq-nf` [fabulous_bartik] DSL2 - revision: d910312506 [master]
R N A S E Q - N F P I P E L I N E
===================================
transcriptome: /home/gitpod/.nextflow/assets/nextflow-io/rnaseq-nf/data/ggal/ggal_1_48850000_49020000.Ggal71.500bpflank.fa
reads : /home/gitpod/.nextflow/assets/nextflow-io/rnaseq-nf/data/ggal/ggal_gut_{1,2}.fq
outdir : results

executor > local (4)
[1d/3c5cfc] process > RNASEQ:INDEX (ggal_1_48850000_49020000) [100%] 1 of 1 ✔
[38/a6b717] process > RNASEQ:FASTQC (Total size: 1.3 MB) [100%] 1 of 1 ✔
[39/5f1cc4] process > RNASEQ:QUANT (ggal_gut) [100%] 1 of 1 ✔
[f4/351d02] process > MULTIQC [100%] 1 of 1 ✔

Done! Open the following report in your browser --> results/multiqc_report.html
```

Note

Dynamic directives need to be supplied as closures enclosed in curly braces.

Nextflow gives you the option of resubmitting a task if it fails. This is really useful if you're working with a flaky program or dealing with network I/O.

The most common use for dynamic process directives is to enable tasks that fail due to insufficient memory to be resubmitted for a second attempt with more memory.

To enable this, two directives are needed:

- maxRetries
- errorStrategy

The errorStrategy directive determines what action Nextflow should take in the event of a task failure (a non-zero exit code). The available options are:

- terminate: (default) Nextflow terminates the execution as soon as an error condition is reported. Pending jobs are killed.
- finish: Initiates an orderly pipeline shutdown when an error condition is raised, waiting for the completion of any submitted jobs.
- ignore: Ignores process execution errors. (dangerous)
- retry: Re-submits for execution a process returning an error condition.

If the errorStrategy is retry, then it will retry up to the value of maxRetries times.
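errorStrategy can itself be given as a closure, so that only some failures are retried. A common pattern, sketched here, checks task.exitStatus (the exit code of the failed attempt) and retries only codes that usually mean the job was killed for exceeding its resources. The range 137..140 is only an example; which codes your scheduler actually reports may differ:

```groovy
// nextflow.config (sketch)
process {
    withName: 'RNASEQ:QUANT' {
        // retry likely out-of-memory/time-limit kills, stop on any other error
        errorStrategy = { task.exitStatus in 137..140 ? 'retry' : 'terminate' }
        maxRetries    = 3
        memory        = { 2.GB * task.attempt }
    }
}
```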

If using a closure to specify a directive in configuration, you have access to the task variable, which includes the task.attempt value - an integer specifying how many times the task has been retried. We can use this to dynamically set values such as memory and cpus

```groovy
process {
    withName: 'RNASEQ:QUANT' {
        errorStrategy = 'retry'
        maxRetries = 3
        memory = { 2.GB * task.attempt }
        time = { 1.hour * task.attempt }
    }
}
```

Note

Be aware of the syntax differences between configuration files and process definitions:

- When defining values inside configuration, an equals sign `=` is required, as shown above.
- When specifying process directives inside the process (in a `.nf` file), no `=` is required:

```groovy
process MULTIQC {
    cpus 2
    // ...
}
```

In Nextflow, a process is the basic computing primitive to execute foreign functions (i.e., custom scripts or tools).

The process definition starts with the keyword process, followed by the process name and finally the process body delimited by curly brackets.

It is a best practice to always name processes in UPPERCASE. This way you can easily see what are process blocks and what are regular functions.

A basic process, only using the script definition block, looks like the following:

```groovy
process SAYHELLO {
    script:
    """
    echo 'Hello world!'
    """
}
```

However, the process body can contain up to five definition blocks:

- Directives are initial declarations that define optional settings
- Input defines the expected input channel(s)
  - Requires a qualifier
- Output defines the expected output channel(s)
  - Requires a qualifier
- When is an optional clause statement to allow conditional processes
- Script is a string statement that defines the command to be executed by the process' task
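For instance, a small process that uses all five blocks might look like the sketch below. The process name COUNT_LINES, the params.skip_count parameter and the data/ location are hypothetical:

```groovy
params.skip_count = false   // hypothetical parameter checked by the when: block

process COUNT_LINES {
    // directives
    tag "$sample_id"
    cpus 1

    input:
    tuple val(sample_id), path(fastq)

    output:
    tuple val(sample_id), path("${sample_id}.count")

    when:
    !params.skip_count      // the task only runs when this evaluates to true

    script:
    """
    zcat $fastq | wc -l > ${sample_id}.count
    """
}

workflow {
    Channel.fromPath('data/*.fastq.gz')          // hypothetical input location
        | map { f -> [f.simpleName, f] }         // build [sample_id, file] pairs
        | COUNT_LINES
        | view
}
```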

The full process syntax is defined as follows:

```groovy
process < name > {
    [ directives ]

    input:
    < process inputs >

    output:
    < process outputs >

    when:
    < condition >

    [script|shell|exec]:
    """
    < user script to be executed >
    """
}
```

Take a look at the last part of the output when you run a Nextflow pipeline:

```
# YOUR PROCESSES AND OUTPUTS
[18/f6351b] process > SPLITLETTERS (1) [100%] 1 of 1 ✔
[2f/007bc5] process > CONVERTTOUPPER (1) [100%] 2 of 2 ✔
WORLD!
HELLO
```

Breaking down the processes section further, this is what each part means:

```
# THE HEXADECIMAL HASH FOR THE TASK (ALSO THE TASK DIRECTORY NAME)
[18/f6351b]
# THE NAME OF THE PROCESS USED FOR THE TASK
process > SPLITLETTERS
# THE TASK TAG (WITHOUT A tag DIRECTIVE, NEXTFLOW SHOWS THE TASK'S INDEX NUMBER)
(1)
# THE PERCENTAGE OF THIS PROCESS'S SUBMITTED TASKS THAT HAVE COMPLETED
[100%]
# COMPLETED TASKS OUT OF THE TASKS SUBMITTED FOR THIS PROCESS
1 of 1
# SIGN SHOWING THE TASKS RAN SUCCESSFULLY
✔
# THE HEXADECIMAL HASH FOR THE TASK (ONLY ONE IS SHOWN IF THE PROCESS RAN MORE THAN ONE TASK)
[2f/007bc5]
# THE NAME OF THE PROCESS USED FOR THE TASK
process > CONVERTTOUPPER
# THE TASK TAG (WITHOUT A tag DIRECTIVE, NEXTFLOW SHOWS THE TASK'S INDEX NUMBER)
(1)
# THE PERCENTAGE OF THIS PROCESS'S SUBMITTED TASKS THAT HAVE COMPLETED
[100%]
# COMPLETED TASKS OUT OF THE TASKS SUBMITTED FOR THIS PROCESS
2 of 2
# SIGN SHOWING THE TASKS RAN SUCCESSFULLY
✔
```

The hexadecimal numbers, like 18/f6351b, identify a unique process execution, which is called a task. These numbers are also the prefix of the directories where each task is executed. You can inspect the files produced by changing to the directory $PWD/work and using these numbers to find the task-specific execution path (e.g. go to $PWD/work/18/f6351b46bb9f65521ea61baaaa9eff to find all the information on the task performed by the SPLITLETTERS process).

Note

Inside the work directory for a specific task, the input files are (by default) symbolic links to the original files, not copies of the files themselves.

If you look at the second process in the above examples, you'll notice that it runs twice (once for each input), executing in two different work directories. The ANSI log output from Nextflow dynamically refreshes as the workflow runs; in the previous example the work directory 2f/007bc5 is the second of the two directories that were processed (overwriting the log line for the first). To print all the relevant paths to the screen, disable the ANSI log output using `-ansi-log false`.

Example:

```bash
nextflow run hello.nf -ansi-log false
```

will output:

```
N E X T F L O W ~ version 23.10.0
Launching `hello.nf` [boring_bhabha] DSL2 - revision: 197a0e289a
[18/f6351b] Submitted process > SPLITLETTERS (1)
[dc/e177f3] Submitted process > CONVERTTOUPPER (1) # NOTICE THE HASH FOR TASK 1 IS VISIBLE NOW
[2f/007bc5] Submitted process > CONVERTTOUPPER (2)
HELLO
WORLD!
```

Now you can find out in which directory everything related to every task performed is stored straight from the console.

Note

Even if you don't use the -ansi-log false flag, you can still see the hashes/directories all the tasks are stored in using the .nextflow.log file. The task directories can be found in the [Task monitor] logs.

Every task executed by Nextflow produces a set of hidden files in the task's work directory whose names begin with .command. Below is a list of them and what they contain.

.command.begin: This file is created as soon as the task actually starts.

Whenever you are debugging a pipeline and don't know whether a task really started, you can check for the existence of this file.

.command.err: This file contains everything the task wrote to standard error, including any error messages.

.command.log: This file contains the log output captured for the task by the executor.

.command.out: This file contains everything the task printed to the screen (the standard output).

.command.run: This file is the job script that Nextflow created to run the task (e.g. if you are running your tasks with SLURM, it is the SLURM job script that Nextflow created and subsequently submitted with sbatch).

It contains all the functions Nextflow needs to make sure your script runs on whatever executor you configured (e.g. locally, in the cloud, on an HPC, with or without a container, etc.).

You're not really supposed to meddle with this file, but sometimes you may want to see what's in it (e.g. to see what Docker command was used to start the container).

.command.sh: This file contains the final script that was run for that task.

Example: if this is in the workflow

```groovy
params.greeting = 'Hello world!'

process SPLITLETTERS {
    input:
    val x

    output:
    path 'chunk_*'

    script:
    """
    printf '$x' | split -b 6 - chunk_
    """
}

workflow {
    greeting_ch = Channel.of(params.greeting)
    letters_ch = SPLITLETTERS(greeting_ch)
}
```

the .command.sh file for a task run by this process will look like this:

```bash
printf 'Hello world!' | split -b 6 - chunk_
```

This is very useful for troubleshooting when things don't work as you'd expect.

The log.info command can be used to print multiline information using Groovy's logger functionality. Instead of writing a series of println commands, it can be used to include a multiline message.

```groovy
log.info """\
    R N A S E Q - N F   P I P E L I N E
    ===================================
    transcriptome: ${params.transcriptome_file}
    reads        : ${params.reads}
    outdir       : ${params.outdir}
    """
    .stripIndent(true)
```

log.info not only prints the output of the command to the screen (stdout), but also writes it to the log file (.nextflow.log).

Usually when you write Nextflow scripts you will indent your code so it is easier to read. However, when you print text to the screen you often don't want that indentation. The .stripIndent(true) method removes the common indentation from the output.

.view is a channel operator that consumes every element of a channel and prints it to the screen.

Example

```groovy
// main.nf
params.reads = "${projectDir}/data/reads/ENCSR000COQ1_{1,2}.fastq.gz"

workflow {
    reads_ch = Channel.fromFilePairs(params.reads)
    reads_ch.view()
}
```

```bash
nextflow run main.nf

# Outputs
# [ENCSR000COQ1, [path/to/ENCSR000COQ1_1.fastq.gz, path/to/ENCSR000COQ1_2.fastq.gz]]
```

You can see that in this case it outputs a single channel element created by the .fromFilePairs channel factory.

- The first item is an ID (in this case the replicate ID)
- The second item is a list of the matching files (in this case the paths to the two read files)

In addition to the argument-less usage of view shown above, this operator can also take a closure to customize the stdout message. For example, we can create a closure to print the value of each element in a channel as well as its type:

```groovy
// timesN takes a multiplier and a value; curry(10) fixes the multiplier to 10
def timesN = { multiplier, it -> it * multiplier }
def timesTen = timesN.curry(10)

workflow {
    Channel.of( 1, 2, 3, 4, 5 )
        | map( timesTen )
        | view { "Found '$it' (${it.getClass()})" }
    // prints e.g. Found '10' (class java.lang.Integer)
}
```

The input block allows you to define the input channels of a process, similar to function arguments. A process may have at most one input block, and it must contain at least one input.

The input block follows the syntax shown below:

```groovy
input:
<input qualifier> <input name>
```

An input definition consists of a qualifier and a name. The input qualifier defines the type of data to be received. This information is used by Nextflow to apply the semantic rules associated with each qualifier, and handle it properly depending on the target execution platform (grid, cloud, etc).

The following input qualifiers are available:

val: Access the input value by name in the process script.

file: (DEPRECATED) Handle the input value as a file, staging it properly in the execution context.

path: Handle the input value as a path, staging the file properly in the execution context.

env: Use the input value to set an environment variable in the process script.

stdin: Forward the input value to the process stdin special file.

tuple: Handle a group of input values having any of the above qualifiers.

each: Execute the process for each element in the input collection.

More information on how each of these input qualifiers work can be found here https://www.nextflow.io/docs/latest/process.html#inputs
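A sketch combining a few of these qualifiers in one process. The process name QUALIFIER_DEMO, the GREETING variable and the data path are hypothetical; note that the environment variable is escaped (\$GREETING) so that Bash, not Nextflow, expands it:

```groovy
process QUALIFIER_DEMO {
    debug true

    input:
    tuple val(sample_id), path(reads)   // one [id, [file1, file2]] element from fromFilePairs
    env 'GREETING'                      // exported as an environment variable inside the task

    script:
    """
    echo "\$GREETING from ${sample_id}: ${reads}"
    """
}

workflow {
    reads_ch = Channel.fromFilePairs('data/ggal/*_{1,2}.fq')   // hypothetical path
    QUALIFIER_DEMO(reads_ch, 'Hello')   // a plain value is treated as a value channel
}
```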

A key feature of processes is the ability to handle inputs from multiple channels. However, it’s important to understand how channel contents and their semantics affect the execution of a process.

Consider the following example:

```groovy
ch1 = Channel.of(1, 2, 3)
ch2 = Channel.of('a', 'b', 'c')

process FOO {
    debug true

    input:
    val x
    val y

    script:
    """
    echo $x and $y
    """
}

workflow {
    FOO(ch1, ch2)
}
```

Outputs (the order can vary between runs because tasks execute in parallel):

```
1 and a
3 and c
2 and b
```

Both channels emit three values, therefore the process is executed three times, each time with a different pair.

The process waits until there’s a complete input configuration, i.e., it receives an input value from all the channels declared as input.

When this condition is verified, it consumes the input values coming from the respective channels, spawns a task execution, then repeats the same logic until one or more channels have no more content.

This means channel values are consumed serially one after another and the first empty channel causes the process execution to stop, even if there are other values in other channels.

What happens when channels do not have the same cardinality (i.e., they emit a different number of elements)?

```groovy
ch1 = Channel.of(1, 2, 3)
ch2 = Channel.of('a')

process FOO {
    debug true

    input:
    val x
    val y

    script:
    """
    echo $x and $y
    """
}

workflow {
    FOO(ch1, ch2)
}
```

Outputs:

```
1 and a
```

In the above example, the process is only executed once because the process stops when a channel has no more data to be processed.

However, replacing ch2 with a value channel will cause the process to be executed three times, each time with the same value of a.
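A sketch of that change: Channel.value('a') creates a value channel, which can be read any number of times, so FOO now runs once for each element of ch1:

```groovy
ch1 = Channel.of(1, 2, 3)
ch2 = Channel.value('a')   // value channel: never runs out, re-used for every task

process FOO {
    debug true

    input:
    val x
    val y

    script:
    """
    echo $x and $y
    """
}

workflow {
    // prints "1 and a", "2 and a" and "3 and a" (in any order)
    FOO(ch1, ch2)
}
```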

For more information see here.

The each qualifier allows you to repeat the execution of a process for each item in a collection every time new data is received.

This is a very good way to try multiple parameters for a certain process.

Example:

```groovy
sequences = Channel.fromPath("$projectDir/data/ggal/*_1.fq")
methods = ['regular', 'espresso']

process ALIGNSEQUENCES {
    debug true

    input:
    path seq
    each mode

    script:
    """
    echo t_coffee -in $seq -mode $mode
    """
}

workflow {
    ALIGNSEQUENCES(sequences, methods)
}
```

Outputs:

```
t_coffee -in gut_1.fq -mode regular
t_coffee -in lung_1.fq -mode espresso
t_coffee -in liver_1.fq -mode regular
t_coffee -in gut_1.fq -mode espresso
t_coffee -in lung_1.fq -mode regular
t_coffee -in liver_1.fq -mode espresso
```

In the above example, every time a file of sequences is received as an input by the process, it executes two tasks, one for each alignment method in methods, passed to the script as the mode variable. This is useful when you need to repeat the same task for a given set of parameters.
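Under the hood, each behaves like a cartesian product of the incoming files and the collection. A plain-Groovy sketch of that pairing (the file names are copied from the output above; this models which combinations get run, not how the tasks are scheduled):

```groovy
// Every sequence file is combined with every mode, like the `each` qualifier
def sequences = ['gut_1.fq', 'lung_1.fq', 'liver_1.fq']
def methods = ['regular', 'espresso']

def tasks = [sequences, methods].combinations()   // 3 files x 2 modes = 6 pairs
tasks.each { pair -> println "t_coffee -in ${pair[0]} -mode ${pair[1]}" }
```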

A double asterisk (**) in a glob pattern works like * but also searches through subdirectories. For example, imagine this is your file structure:

```
data
├── 2023-11-08_upn22_rep-1_mhmw--100mg
│   └── no_sample
│       └── 20231108_1310_MC-115499_FAX00407_d135d0ec
│           ├── fastq_fail
│           │   ├── FAX00407_fail_d135d0ec_b0bb43ca_0.fastq.gz
│           │   └── FAX00407_fail_d135d0ec_b0bb43ca_1.fastq.gz
│           ├── fastq_pass
│           │   ├── FAX00407_pass_d135d0ec_b0bb43ca_0.fastq.gz
│           │   └── FAX00407_pass_d135d0ec_b0bb43ca_1.fastq.gz
│           ├── other_reports
│           │   ├── pore_scan_data_FAX00407_d135d0ec_b0bb43ca.csv
│           │   └── temperature_adjust_data_FAX00407_d135d0ec_b0bb43ca.csv
│           ├── pod5_fail
│           │   ├── FAX00407_fail_d135d0ec_b0bb43ca_0.pod5
│           │   └── FAX00407_fail_d135d0ec_b0bb43ca_1.pod5
│           └── pod5_pass
│               ├── FAX00407_pass_d135d0ec_b0bb43ca_0.pod5
│               └── FAX00407_pass_d135d0ec_b0bb43ca_1.pod5
├── 2023-11-12_upn22_rep-2_mhmw--recovery-elude-1
│   └── no_sample
│       └── 20231112_1338_MC-115499_FAX00228_b67d08a5
│           ├── fastq_fail
│           │   ├── FAX00228_fail_b67d08a5_dc19481f_0.fastq.gz
│           │   └── FAX00228_fail_b67d08a5_dc19481f_1.fastq.gz
│           ├── fastq_pass
│           │   ├── FAX00228_pass_b67d08a5_dc19481f_0.fastq.gz
│           │   └── FAX00228_pass_b67d08a5_dc19481f_1.fastq.gz
│           ├── final_summary_FAX00228_b67d08a5_dc19481f.txt
│           ├── other_reports
│           │   ├── pore_scan_data_FAX00228_b67d08a5_dc19481f.csv
│           │   └── temperature_adjust_data_FAX00228_b67d08a5_dc19481f.csv
│           ├── pod5_fail
│           │   ├── FAX00228_fail_b67d08a5_dc19481f_0.pod5
│           │   └── FAX00228_fail_b67d08a5_dc19481f_1.pod5
│           ├── pod5_pass
│           │   ├── FAX00228_pass_b67d08a5_dc19481f_0.pod5
│           │   └── FAX00228_pass_b67d08a5_dc19481f_1.pod5
│           └── sequencing_summary_FAX00228_b67d08a5_dc19481f.txt
├── 2023-11-16_upn22_rep-3_mhmw
│   └── no_sample
│       └── 20231116_0945_MC-115499_FAX00393_849b7392
│           ├── barcode_alignment_FAX00393_849b7392_a554d814.tsv
│           ├── fastq_fail
│           │   ├── FAX00393_fail_849b7392_a554d814_0.fastq.gz
│           │   └── FAX00393_fail_849b7392_a554d814_1.fastq.gz
│           ├── fastq_pass
│           │   ├── FAX00393_pass_849b7392_a554d814_0.fastq.gz
│           │   └── FAX00393_pass_849b7392_a554d814_1.fastq.gz
│           ├── final_summary_FAX00393_849b7392_a554d814.txt
│           ├── other_reports
│           │   ├── pore_scan_data_FAX00393_849b7392_a554d814.csv
│           │   └── temperature_adjust_data_FAX00393_849b7392_a554d814.csv
│           ├── pod5_fail
│           │   ├── FAX00393_fail_849b7392_a554d814_0.pod5
│           │   └── FAX00393_fail_849b7392_a554d814_1.pod5
│           ├── pod5_pass
│           │   ├── FAX00393_pass_849b7392_a554d814_0.pod5
│           │   └── FAX00393_pass_849b7392_a554d814_1.pod5
│           ├── pore_activity_FAX00393_849b7392_a554d814.csv
│           ├── report_FAX00393_20231116_0945_849b7392.html
│           ├── report_FAX00393_20231116_0945_849b7392.json
│           ├── report_FAX00393_20231116_0945_849b7392.md
│           ├── sample_sheet_FAX00393_20231116_0945_849b7392.csv
│           ├── sequencing_summary_FAX00393_849b7392_a554d814.txt
│           └── throughput_FAX00393_849b7392_a554d814.csv
└── 2023-10-26_upn22_rep-3_pci
    └── no_sample
        └── 20231026_1515_MC-115499_FAW96674_9d505d15
            ├── barcode_alignment__9d505d15_1f674c3a.tsv
            ├── fastq_fail
            │   ├── FAW96674_fail_9d505d15_1f674c3a_0.fastq.gz
            │   └── FAW96674_fail_9d505d15_1f674c3a_1.fastq.gz
            ├── fastq_pass
            │   ├── FAW96674_pass_9d505d15_1f674c3a_0.fastq.gz
            │   └── FAW96674_pass_9d505d15_1f674c3a_1.fastq.gz
            ├── final_summary_FAW96674_9d505d15_1f674c3a.txt
            ├── other_reports
            │   ├── pore_scan_data_FAW96674_9d505d15_1f674c3a.csv
            │   └── temperature_adjust_data_FAW96674_9d505d15_1f674c3a.csv
            ├── pod5_fail
            │   ├── FAW96674_fail_9d505d15_1f674c3a_0.pod5
            │   └── FAW96674_fail_9d505d15_1f674c3a_1.pod5
            ├── pod5_pass
            │   ├── FAW96674_pass_9d505d15_1f674c3a_0.pod5
            │   └── FAW96674_pass_9d505d15_1f674c3a_1.pod5
            ├── pore_activity__9d505d15_1f674c3a.csv
            ├── report__20231026_1515_9d505d15.html
            ├── report__20231026_1515_9d505d15.json
            ├── report__20231026_1515_9d505d15.md
            ├── sample_sheet__20231026_1515_9d505d15.csv
            ├── sequencing_summary_FAW96674_9d505d15_1f674c3a.txt
            └── throughput__9d505d15_1f674c3a.csv

33 directories, 60 files
```

All the .fastq.gz files can be grabbed using the ** wildcard, which searches through all the subdirectories for files with the .fastq.gz extension. Here .collect() then gathers all the matching files into a single list emission:

```groovy
fastq_ch = Channel.fromPath("data/**/*.fastq.gz").collect()
```
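Java's NIO glob matcher follows the same rules (* stays within one directory level, ** crosses directory boundaries), so the difference can be checked in plain Groovy without any files on disk. A minimal sketch using one path from the tree above:

```groovy
import java.nio.file.FileSystems
import java.nio.file.Paths

// One of the FASTQ files from the tree above, four directory levels below data/
def fastq = Paths.get('data/2023-11-08_upn22_rep-1_mhmw--100mg/no_sample/20231108_1310_MC-115499_FAX00407_d135d0ec/fastq_pass/FAX00407_pass_d135d0ec_b0bb43ca_0.fastq.gz')

def fs = FileSystems.getDefault()
def singleStar = fs.getPathMatcher('glob:data/*/*.fastq.gz')    // exactly one level below data/
def doubleStar = fs.getPathMatcher('glob:data/**/*.fastq.gz')   // any depth below data/

println singleStar.matches(fastq)   // false: `*` cannot cross a `/`
println doubleStar.matches(fastq)   // true: `**` crosses directory boundaries
```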

When you run a program, there's a very high likelihood that many output or intermediate files will be created. The output: block specifies only the file or files (or stdout) that you want to include in your output channel for the next process or processes.

The output declaration block defines the channels used by the process to send out the results produced.

Only one output block can be defined, but it can contain one or more output declarations. The output block follows the syntax shown below:

```groovy
output:
<output qualifier> <output name>, emit: <output channel>
```

This is very similar to the input block, except that it can also have an emit: option.

The path qualifier specifies one or more files produced by the process into the specified channel as an output.

If the output name is a plain literal in single quotes (such as 'result.txt'), no variables are interpolated and the file created by the script must have exactly that name; otherwise it won't be collected in the process output and the task fails with a missing output file error.

Example

```groovy
process RANDOMNUM {
    output:
    path 'result.txt'

    script:
    """
    echo \$RANDOM > result.txt
    """
}

workflow {
    receiver_ch = RANDOMNUM()
    receiver_ch.view()
}
```

When an output file name contains a wildcard character (* or ?), it is interpreted as a glob path matcher. This allows us to capture multiple files into a list object and output them as a single emission.

For example:

```groovy
process SPLITLETTERS {
    output:
    path 'chunk_*'

    script:
    """
    printf 'Hola' | split -b 1 - chunk_
    """
}

workflow {
    letters = SPLITLETTERS()
    letters.view()
}
```

Outputs:

```
[/workspace/gitpod/nf-training/work/ca/baf931d379aa7fa37c570617cb06d1/chunk_aa, /workspace/gitpod/nf-training/work/ca/baf931d379aa7fa37c570617cb06d1/chunk_ab, /workspace/gitpod/nf-training/work/ca/baf931d379aa7fa37c570617cb06d1/chunk_ac, /workspace/gitpod/nf-training/work/ca/baf931d379aa7fa37c570617cb06d1/chunk_ad]
```

Some caveats on glob pattern behavior:

- Input files are not included in the list of possible matches

- Glob pattern matches both files and directory paths

- When a two-star pattern (**) is used to recurse across directories, only file paths are matched, i.e., directories are not included in the result list.

When an output file name needs to be expressed dynamically, it is possible to define it using a dynamic string that references values defined in the input declaration block or in the script global context.

For example:

```groovy
species = ['cat', 'dog', 'sloth']
sequences = ['AGATAG', 'ATGCTCT', 'ATCCCAA']

Channel
    .fromList(species)
    .set { species_ch }

process ALIGN {
    input:
    val x
    val seq

    output:
    path "${x}.aln"

    script:
    """
    echo align -in $seq > ${x}.aln
    """
}

workflow {
    genomes = ALIGN(species_ch, sequences)
    genomes.view()
}
```

In the above example, each time the process is executed an alignment file is produced whose name depends on the actual value of the x input.

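The ${..} syntax inserts the value of a variable, such as a process input, into a string. This is how input values can be used in other parts of the process, like output file names or the script.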
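The ${..} syntax is Groovy string interpolation, and it only works in double-quoted strings. A minimal plain-Groovy sketch, where x mirrors the input of the ALIGN example:

```groovy
def x = 'cat'
def dynamicName = "${x}.aln"   // double quotes: ${x} is replaced by its value
def literalName = '${x}.aln'   // single quotes: the text is kept as-is

println dynamicName   // cat.aln
println literalName   // ${x}.aln
```

This is also why single-quoted output names are always taken literally.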

Many of the examples so far have used multiple input and output channels that each handle one value at a time. However, Nextflow can also handle a tuple of values.

The input and output declarations for tuples must be declared with a tuple qualifier followed by the definition of each element in the tuple.

This is really useful when you want to carry the ID of a sample through all the steps of your pipeline so you can track what the files are. File names can change after many input and output steps, but keeping the files in a tuple with their sample ID makes it easy to figure out what they are. You might keep other pieces of metadata in the tuple as well.

Example:

```groovy
reads_ch = Channel.fromFilePairs('data/ggal/*_{1,2}.fq')

process FOO {
    input:
    tuple val(sample_id), path(sample_id_paths)

    output:
    tuple val(sample_id), path('sample.bam')

    script:
    """
    echo your_command_here --sample $sample_id_paths > sample.bam
    """
}

workflow {
    sample_ch = FOO(reads_ch)
    sample_ch.view()
}
```

Outputs:

```
[lung, /workspace/gitpod/nf-training/work/23/fe268295bab990a40b95b7091530b6/sample.bam]
[liver, /workspace/gitpod/nf-training/work/32/656b96a01a460f27fa207e85995ead/sample.bam]
[gut, /workspace/gitpod/nf-training/work/ae/3cfc7cf0748a598c5e2da750b6bac6/sample.bam]
```

Nextflow allows the use of alternative output definitions within workflows to simplify your code.

These are done with the .out attribute and the emit: option.

Naming outputs with emit: is more common than indexing into .out by position.

By using .out, you get the output channel of one process, and you can pass it in as the input channel of another process.

Example

```groovy
workflow {
    reads_ch = Channel.fromFilePairs(params.reads)
    prepare_star_genome_index(params.genome)
    rnaseq_mapping_star(params.genome,
                        prepare_star_genome_index.out,
                        reads_ch)
}
```

When a process defines multiple output channels, each output can be accessed using the array element operator (out[0], out[1], etc.).

Outputs can also be accessed by name if the emit option is specified:

```groovy
process example_process {
    output:
    path '*.bam', emit: samples_bam

    '''
    your_command --here
    '''
}

workflow {
    example_process()
    example_process.out.samples_bam.view()
}
```

If you have a process that only has a static output file name, for example:

```groovy
process rnaseq_call_variants {
    container 'quay.io/broadinstitute/gotc-prod-gatk:1.0.0-4.1.8.0-1626439571'
    tag "${sampleId}"

    input:
    path genome
    path index
    path dict
    tuple val(sampleId), path(bam), path(bai)

    output:
    tuple val(sampleId), path('final.vcf')

    script:
    """
    ...
    """
}
```

This won't create a name conflict for every sample that gets processed.

This is because Nextflow creates a new directory for every task a process performs. So if you're trying to process 10 samples (run 10 tasks from a single process), you're going to have 10 isolated folders.

Keeping the sampleId in the output tuple is what lets you tell the final.vcf files apart downstream. Without it the files still wouldn't collide in the work directories, since every sample gets its own folder, but you would lose track of which sample each final.vcf came from, and identically named files can clash later, e.g. when several of them are staged into the same downstream task.


While channels emit items in the order in which they are received (FIFO), processes do not necessarily emit their outputs in the order in which their inputs were received: tasks run in parallel, so a later task can finish before an earlier one. While this isn't an issue in most cases, it is important to know.

For example

```groovy
process basicExample {
    debug true   // print the task's stdout to the console

    input:
    val x

    "echo process job $x"
}

workflow {
    def num = Channel.of(1, 2, 3)
    basicExample(num)
}
```

This can output:

```
process job 3
process job 1
process job 2
```

Notice in the above example that the value 3 was processed before the others.

The fair directive (new in version 22.12.0-edge), when enabled, guarantees that process outputs are emitted in the same order in which the inputs were received, regardless of the order in which the tasks finish.

Note

The Nextflow team suggests using a tuple with the ID attached to the sample instead of using the fair directive. You may experience some performance hits and less parallelism using the fair directive.

Example:

```groovy
process EXAMPLE {
    fair true

    input:
    val x

    output:
    tuple val(task.index), val(x)

    script:
    """
    sleep \$((RANDOM % 3))
    """
}

workflow {
    channel.of('A', 'B', 'C', 'D') | EXAMPLE | view
}
```

The above example produces:

```
[1, A]
[2, B]
[3, C]
[4, D]
```
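A toy model of that ordering guarantee in plain Groovy (an illustration only, not how Nextflow implements fair): outputs of tasks that finish early are held back until every earlier task has emitted. The finish order below is made up.

```groovy
// Task indexes in the (made-up) order in which the tasks finish
def finishOrder = [3, 1, 4, 2]

def held = [] as Set   // outputs that finished early and are waiting
def emitted = []       // outputs in the order they are released downstream
def nextIndex = 1      // index of the next output allowed through

finishOrder.each { idx ->
    held << idx
    // release every buffered output that is now next in line
    while (held.contains(nextIndex)) {
        held.remove(nextIndex)
        emitted << nextIndex
        nextIndex++
    }
}

println emitted   // [1, 2, 3, 4]
```

The tasks can still finish in any order; only the emission order is fixed, which is why fair can cost some parallelism downstream.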

The script block within a process is a string statement that defines the command to be executed by the process' task.

```groovy
process CONVERTTOUPPER {
    input:
    path y

    output:
    stdout

    script:
    """
    cat $y | tr '[a-z]' '[A-Z]'
    """
}
```

By default, Nextflow expects a shell script in the script block.

Note

Since Nextflow uses the same syntax as Bash for variable substitution in strings, Bash environment variables need to be escaped with the \ character. The escaped \$PWD is left for Bash to resolve when the task runs, so it returns the task directory (e.g. work/7f/f285b80022d9f61e82cd7f90436aa4/), while an unescaped $PWD is resolved by Nextflow beforehand and shows the directory where you're running Nextflow.

Example:

```groovy
process FOO {
    debug true

    script:
    """
    echo "The current directory is \$PWD"
    """
}

workflow {
    FOO()
}

// Outputs: The current directory is /workspace/gitpod/nf-training/work/7a/4b050a6cdef4b6c1333ce29f7059a0
```

And without \:

```groovy
process FOO {
    debug true

    script:
    """
    echo "The current directory is $PWD"
    """
}

workflow {
    FOO()
}

// Outputs: The current directory is /workspace/gitpod/nf-training
```

It can be tricky to write a script that uses many Bash variables. One possible alternative is to use a script string delimited by single-quote characters (').

```groovy
process BAR {
    debug true

    script:
    '''
    echo "The current directory is $PWD"
    '''
}

workflow {
    BAR()
}

// Outputs: The current directory is /workspace/gitpod/nf-training/work/7a/4b050a6cdef4b6c1333ce29f7059a0
```

However, using single quotes (') blocks the use of Nextflow variables in the command script.
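Both behaviours come from Groovy strings. In this plain-Groovy sketch (sample is a made-up variable standing in for a Nextflow input), the escaped \$PWD survives interpolation as literal text, which Bash then expands inside the task directory:

```groovy
def sample = 'gut'   // hypothetical value standing in for a process input

// What the script string looks like after Nextflow has interpolated it
def escaped = "echo ${sample} \$PWD"   // \$ leaves a literal $PWD for Bash

println escaped   // echo gut $PWD
```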

Another alternative is to use a shell statement instead of script and use a different syntax for Nextflow variables, e.g., !{..}. This allows the use of both Nextflow and Bash variables in the same script.

Example:

```groovy
params.data = 'le monde'

process BAZ {
    shell:
    '''
    X='Bonjour'
    echo $X !{params.data}
    '''
}

workflow {
    BAZ()
}
```

If you are using another language, like R or Python, you need the shebang so that Nextflow knows which interpreter to use to run this code.

Example:

```groovy
process CONVERTTOUPPER {
    input:
    path y

    output:
    stdout

    script:
    """
    #!/usr/bin/env python
    with open("$y") as f:
        print(f.read().upper(), end="")
    """
}
```

The process script can also be defined in a completely dynamic manner using an if statement or any other expression that evaluates to a string.

Example:

```groovy
params.compress = 'gzip'
params.file2compress = "$projectDir/data/ggal/transcriptome.fa"

process FOO {
    debug true

    input:
    path file

    script:
    if (params.compress == 'gzip')
        """
        echo "gzip -c $file > ${file}.gz"
        """
    else if (params.compress == 'bzip2')
        """
        echo "bzip2 -c $file > ${file}.bz2"
        """
    else
        throw new IllegalArgumentException("Unknown compressor $params.compress")
}

workflow {
    FOO(params.file2compress)
}
```

Real-world workflows use a lot of custom user scripts (Bash, R, Python, etc.). Nextflow allows you to consistently use and manage these scripts. Simply put them in a directory named bin in the workflow project root and make them executable; they will be automatically added to the workflow execution PATH.

For example, imagine this is a process block inside of main.nf:

```groovy
process FASTQC {
    tag "FASTQC on $sample_id"

    input:
    tuple val(sample_id), path(reads)

    output:
    path "fastqc_${sample_id}_logs"

    script:
    """
    mkdir fastqc_${sample_id}_logs
    fastqc -o fastqc_${sample_id}_logs -f fastq -q ${reads}
    """
}
```

The script of the FASTQC process in main.nf could be moved into an executable script named fastqc.sh in the bin directory, as shown below:

Create a new file named fastqc.sh with the following content:

fastqc.sh

```bash
#!/bin/bash
set -e
set -u

sample_id=${1}
reads=${2}

mkdir fastqc_${sample_id}_logs
fastqc -o fastqc_${sample_id}_logs -f fastq -q ${reads}
```

Give it execute permission and move it into the bin directory:

```bash
chmod +x fastqc.sh
mkdir -p bin
mv fastqc.sh bin
```

Open the main.nf file and replace the FASTQC process script block with the following code:

main.nf

```groovy
script:
"""
fastqc.sh "$sample_id" "$reads"
"""
```

Channel factories are methods that can be used to create channels explicitly.

For example, the of method allows you to create a channel that emits the arguments provided to it, for example:

```groovy
ch = Channel.of( 1, 3, 5, 7 )
ch.view { "value: $it" }
```

The first line in this example creates a variable ch which holds a channel object. This channel emits the arguments supplied to the of method. Thus the second line prints the following:

```
value: 1
value: 3
value: 5
value: 7
```

Channel factories also have options that can be used to modify their behaviour. For example, the checkIfExists option, when set to true, throws an error if the specified path does not exist or the glob pattern matches no files. A full list of options can be found in the channel factory documentation.

The fromFilePairs method creates a channel emitting the file pairs matching a glob pattern provided by the user. The matching files are emitted as tuples in which the first element is the grouping key of the matching pair and the second element is the list of files (sorted in lexicographical order). For example:

```groovy
Channel
    .fromFilePairs('/my/data/SRR*_{1,2}.fastq')
    .view()
```

It will produce an output similar to the following:

```
[SRR493366, [/my/data/SRR493366_1.fastq, /my/data/SRR493366_2.fastq]]
[SRR493367, [/my/data/SRR493367_1.fastq, /my/data/SRR493367_2.fastq]]
[SRR493368, [/my/data/SRR493368_1.fastq, /my/data/SRR493368_2.fastq]]
[SRR493369, [/my/data/SRR493369_1.fastq, /my/data/SRR493369_2.fastq]]
[SRR493370, [/my/data/SRR493370_1.fastq, /my/data/SRR493370_2.fastq]]
[SRR493371, [/my/data/SRR493371_1.fastq, /my/data/SRR493371_2.fastq]]
```

The Channel.fromSRA channel factory makes it possible to query the NCBI SRA archive and returns a channel emitting the FASTQ files matching the specified selection criteria.

To learn more about how to use the fromSRA channel factory, see here.

Nextflow operators are methods that allow you to manipulate channels. Every operator, with the exception of set and subscribe, produces one or more new channels, allowing you to chain operators to fit your needs.

Operators fall into seven main groups, which are described in greater detail in the Nextflow Reference Documentation:

- Filtering operators

- Transforming operators

- Splitting operators

- Combining operators

- Forking operators

- Maths operators

- Other operators

Sometimes the output channel of one process doesn't quite match the input channel of the next process and so it has to be modified slightly. This can be performed using channel operators. A full list of channel operators can be found here https://www.nextflow.io/docs/latest/operator.html.

For example, in this code:

```groovy
rnaseq_gatk_analysis
    .out
    .groupTuple()
    .join(prepare_vcf_for_ase.out.vcf_for_ASE)
    .map { meta, bams, bais, vcf -> [meta, vcf, bams, bais] }
    .set { grouped_vcf_bam_bai_ch }
```

- .groupTuple groups tuples that contain a common first element.
- .join joins two channels, taking the key into consideration.
- .map applies a function to every element of a channel.
- .set saves this channel with a new name.

Step by step this looks like:

```groovy
rnaseq_gatk_analysis
    .out
    /* Outputs
    [ENCSR000COQ, /workspace/gitpod/hands-on/work/c9/dfd66e253754b61195a166ac7726ff/ENCSR000COQ1.final.uniq.bam, /workspace/gitpod/hands-on/work/c9/dfd66e253754b61195a166ac7726ff/ENCSR000COQ1.final.uniq.bam.bai]
    [ENCSR000COQ, /workspace/gitpod/hands-on/work/92/b1ea340ce922d13bdce2985b2930f2/ENCSR000COQ2.final.uniq.bam, /workspace/gitpod/hands-on/work/92/b1ea340ce922d13bdce2985b2930f2/ENCSR000COQ2.final.uniq.bam.bai]
    */
    .groupTuple()
    /* Outputs
    [ENCSR000COQ, [/workspace/gitpod/hands-on/work/c9/dfd66e253754b61195a166ac7726ff/ENCSR000COQ1.final.uniq.bam, /workspace/gitpod/hands-on/work/92/b1ea340ce922d13bdce2985b2930f2/ENCSR000COQ2.final.uniq.bam], [/workspace/gitpod/hands-on/work/c9/dfd66e253754b61195a166ac7726ff/ENCSR000COQ1.final.uniq.bam.bai, /workspace/gitpod/hands-on/work/92/b1ea340ce922d13bdce2985b2930f2/ENCSR000COQ2.final.uniq.bam.bai]]
    */
    .join(prepare_vcf_for_ase.out.vcf_for_ASE)
    /* Outputs
    [ENCSR000COQ, [/workspace/gitpod/hands-on/work/c9/dfd66e253754b61195a166ac7726ff/ENCSR000COQ1.final.uniq.bam, /workspace/gitpod/hands-on/work/92/b1ea340ce922d13bdce2985b2930f2/ENCSR000COQ2.final.uniq.bam], [/workspace/gitpod/hands-on/work/c9/dfd66e253754b61195a166ac7726ff/ENCSR000COQ1.final.uniq.bam.bai, /workspace/gitpod/hands-on/work/92/b1ea340ce922d13bdce2985b2930f2/ENCSR000COQ2.final.uniq.bam.bai], /workspace/gitpod/hands-on/work/ea/4a41fbeb591ffe48cfb471890b8f5c/known_snps.vcf]
    */
    .map { meta, bams, bais, vcf -> [meta, vcf, bams, bais] }
    /* Outputs
    [ENCSR000COQ, /workspace/gitpod/hands-on/work/ea/4a41fbeb591ffe48cfb471890b8f5c/known_snps.vcf, [/workspace/gitpod/hands-on/work/c9/dfd66e253754b61195a166ac7726ff/ENCSR000COQ1.final.uniq.bam, /workspace/gitpod/hands-on/work/92/b1ea340ce922d13bdce2985b2930f2/ENCSR000COQ2.final.uniq.bam], [/workspace/gitpod/hands-on/work/c9/dfd66e253754b61195a166ac7726ff/ENCSR000COQ1.final.uniq.bam.bai, /workspace/gitpod/hands-on/work/92/b1ea340ce922d13bdce2985b2930f2/ENCSR000COQ2.final.uniq.bam.bai]]
    */
    .set { grouped_vcf_bam_bai_ch }
```

Just keep in mind that processes and channel operators are not guaranteed to emit items in the order that they were received, as they are executed concurrently. This can lead to unintended effects if you use an operator that takes multiple inputs. For example, using the merge channel operator can lead to different results on different runs, depending on the order in which the processes finish. You should always use a matching key (e.g. sample ID) to combine multiple channels, so that they are combined in a deterministic way (using an operator like join instead).
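To see why matching by key matters, here is a plain-Groovy analogy with made-up sample names (lists stand in for channels; this is not how Nextflow implements merge or join). Pairing items by position, as merge does, mixes up samples when they arrive in different orders, while looking items up by key keeps them together:

```groovy
// Lists stand in for two channels whose items arrived in different orders
def bams = [['sampleB', 'sampleB.bam'], ['sampleA', 'sampleA.bam']]
def vcfs = [['sampleA', 'sampleA.vcf'], ['sampleB', 'sampleB.vcf']]

// Positional pairing (like merge): the first bam is paired with the first vcf
def positional = [bams, vcfs].transpose().collect { pair -> [pair[0][0], pair[0][1], pair[1][1]] }

// Key-based pairing (like join): look up the vcf with the same sample ID
def vcfById = vcfs.collectEntries { item -> [(item[0]): item[1]] }
def joined = bams.collect { item -> [item[0], item[1], vcfById[item[0]]] }

println positional   // sampleB.bam ends up next to sampleA.vcf
println joined       // every bam sits next to the vcf of the same sample
```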

The view operator prints the items emitted by a channel to the console standard output, appending a new line character to each item.

For example:

```groovy
Channel
    .of('foo', 'bar', 'baz')
    .view()
```

Outputs:

```
foo
bar
baz
```

An optional closure parameter can be specified to customize how items are printed.

For example:

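```groovy
Channel
    .of('foo', 'bar', 'baz')
    .view { "- $it" }
```

Outputs:

```
- foo
- bar
- baz
```

Note

When the closure is the only argument, the () brackets of the view operator can be dropped and the closure written directly in {} brackets: .view { ... } is shorthand for .view({ ... }).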

The map operator applies a function of your choosing to every item emitted by a channel and returns the items obtained as a new channel. The function applied is called the mapping function and is expressed with a closure.

In the example below the Groovy reverse method has been used to reverse the order of the characters in each string emitted by the channel.

```groovy
Channel
    .of('hello', 'world')
    .map { it -> it.reverse() }
    .view()
```

Outputs:

```
olleh
dlrow
```

A map can associate a generic tuple to each element and can contain any data.

In the example below the Groovy size method is used to return the length of each string emitted by the channel.

```groovy
Channel
    .of('hello', 'world')
    .map { word -> [word, word.size()] }
    .view()
```

Outputs:

```
[hello, 5]
[world, 5]
```
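The closure passed to .map is ordinary Groovy, so a handy trick is to try it on a plain list with Groovy's collect before wiring it into a channel. A minimal sketch with the two closures from above:

```groovy
def words = ['hello', 'world']

// Same closures as in the .map examples, applied to a plain list
def reversed = words.collect { it -> it.reverse() }
def withLengths = words.collect { word -> [word, word.size()] }

println reversed      // [olleh, dlrow]
println withLengths   // [[hello, 5], [world, 5]]
```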

The first operator emits the first item in a source channel, or the first item that matches a condition, and outputs a value channel. The condition can be a regular expression, a type qualifier (i.e. Java class), or a boolean predicate.

For example:

```groovy
// no condition is specified, emits the very first item: 1
Channel.of( 1, 2, 3 )
    .first()
    .view()

// emits the first item matching the regular expression: 'aa'
Channel.of( 'a', 'aa', 'aaa' )
    .first( ~/aa.*/ )
    .view()

// emits the first String value: 'a'
Channel.of( 1, 2, 'a', 'b', 3 )
    .first( String )
    .view()

// emits the first item for which the predicate evaluates to true: 4
Channel.of( 1, 2, 3, 4, 5 )
    .first { it > 3 }
    .view()
```

Since this returns a value channel, and a value channel can be read any number of times without being exhausted, this is really good for channels that contain files which are reused often, like reference genomes.

Example:

```groovy
reference = Channel.fromPath("data/genome.fasta").first()
```

The .flatten operator transforms a channel in such a way that every item of type Collection or Array is flattened so that each single entry is emitted separately by the resulting channel. For example:

```groovy
Channel
    .of( [1,[2,3]], 4, [5,[6]] )
    .flatten()
    .view()
```

Outputs:

```
1
2
3
4
5
6
```

Note

This is different to .mix because .mix operates on items emitted from channels, not Collection or Array objects.
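Groovy's own List.flatten() unpacks nested lists the same way, which is a quick way to predict what .flatten will emit for a given item (plain Groovy, no channels involved):

```groovy
def nested = [[1, [2, 3]], 4, [5, [6]]]
def flat = nested.flatten()   // nested lists are unpacked recursively

println flat   // [1, 2, 3, 4, 5, 6]
```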

The .collect channel operator is basically the opposite of the .flatten channel operator: it collects all the items emitted by a channel into a List and returns the resulting object as a sole emission. For example:

```groovy
Channel
    .of( 1, 2, 3, 4 )
    .collect()
    .view()
```

Outputs:

```
[1, 2, 3, 4]
```

By default, .collect will flatten nested list objects and collect their items individually. To change this behaviour, set the flat option to false.

Example:

```groovy
Channel
    .of( [1, 2], [3, 4] )
    .collect()
    .view()

// Outputs
// [1, 2, 3, 4]

Channel
    .of( [1, 2], [3, 4] )
    .collect(flat: false)
    .view()

// Outputs
// [[1, 2], [3, 4]]
```

The .collect operator can also take a sort option (false by default). When true, the collected items are sorted by their natural ordering. It can also be a closure or a Comparator which defines how items are compared during sorting.

The .buffer channel operator gathers the items emitted by the source channel into subsets and emits these subsets separately.

There are a number of ways you can regulate how buffer gathers the items from the source channel into subsets; however, one of the most convenient is buffer( size: n ), which transforms the source channel so that it emits tuples made up of n elements. For example:

```groovy
Channel
    .of( 1, 2, 3, 1, 2, 3, 1 )
    .buffer( size: 2 )
    .view()
```

Outputs:

```
[1, 2]
[3, 1]
[2, 3]
```

Be aware that if there is an incomplete tuple it is discarded. To emit the last items in a tuple containing less than n elements, use buffer( size: n, remainder: true ). For example:

```groovy
Channel
    .of( 1, 2, 3, 1, 2, 3, 1 )
    .buffer( size: 2, remainder: true )
    .view()
```

Outputs:

```
[1, 2]
[3, 1]
[2, 3]
[1]
```
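For predicting what buffer will emit, Groovy's collate method on a plain list behaves analogously: collate(n, false) drops an incomplete last chunk like buffer( size: n ), and collate(n) keeps it like remainder: true. This is an analogy on lists, not the operator itself:

```groovy
def items = [1, 2, 3, 1, 2, 3, 1]

def dropRemainder = items.collate(2, false)   // like buffer( size: 2 )
def keepRemainder = items.collate(2)          // like buffer( size: 2, remainder: true )

println dropRemainder   // [[1, 2], [3, 1], [2, 3]]
println keepRemainder   // [[1, 2], [3, 1], [2, 3], [1]]
```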

The mix operator combines the items emitted by two (or more) channels into a single queue channel.

For example:

```groovy
c1 = Channel.of( 1, 2, 3 )
c2 = Channel.of( 'a', 'b' )
c3 = Channel.of( 'z' )

c1.mix(c2, c3)
    .subscribe onNext: { println it }, onComplete: { println 'Done' }
```

Outputs:

```
1
2
3
a
b
z
Done
```

Note

The items emitted by the resulting mixed channel may appear in any order, regardless of which source channel they came from. Thus, the following example could also be a possible result of the above example:

```
z
1
a
2
b
3
Done
```

The mix operator and the collect operator are often chained together to gather the outputs of the multiple processes as a single input. Operators can be used in combinations to combine, split, and transform channels.

Example:

```groovy
MULTIQC(quant_ch.mix(fastqc_ch).collect())
```

You will only want one task of MultiQC to be executed to produce one report. Therefore, you can use the mix channel operator to combine the quant_ch and the fastqc_ch channels, followed by the collect operator, to return the complete channel contents as a single element.

Note

mix is different to .flatten because flatten operates on Collection or Array objects, not individual items.

Things can get quite complicated when you have lots of different tuples, especially when you're trying to use something like matching keys.

For example, say you had a sample with many chromosomes and you wanted to split it up by chromosome and process each chromosome individually. How would you merge the results back together based on the sample ID?

The groupTuple operator is useful for this.

The groupTuple operator collects tuples (or lists) of values emitted by the source channel, grouping the elements that share the same key into a list afterwards. Finally, it emits a new tuple object for each distinct key collected.

By default, the first element of each tuple is used as the grouping key. The by option can be used to specify a different index, or list of indices. See here for more details.

```groovy
Channel
    .of([1, 'A'], [1, 'B'], [2, 'C'], [3, 'B'], [1, 'C'], [2, 'A'], [3, 'D'])
    .groupTuple()
    .view()
```

Outputs:

```
[1, [A, B, C]]
[2, [C, A]]
[3, [B, D]]
```
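The grouping that groupTuple performs can be sketched with Groovy's groupBy on a plain list (an analogy only; the real operator works on a stream and, without a size, waits for the whole channel before emitting):

```groovy
def items = [[1, 'A'], [1, 'B'], [2, 'C'], [3, 'B'], [1, 'C'], [2, 'A'], [3, 'D']]

def grouped = items
    .groupBy { item -> item[0] }                                      // key -> tuples with that key
    .collect { key, tuples -> [key, tuples.collect { t -> t[1] }] }   // keep only the values

println grouped   // [[1, [A, B, C]], [2, [C, A]], [3, [B, D]]]
```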

groupTuple is very useful alongside 'meta maps' (see here for example)

Note

By default, groupTuple is a blocking operator, meaning it won't emit anything until all the inputs have been received (see below for further explanation).

By default, if you don’t specify a size, the groupTuple operator will not emit any groups until all inputs have been received. If possible, you should always try to specify the number of expected elements in each group using the size option, so that each group can be emitted as soon as it’s ready. In cases where the size of each group varies based on the grouping key, you can use the built-in groupKey() function, which allows you to define a different expected size for each group:

```groovy
chr_frequency = ["chr1": 2, "chr2": 3]

Channel.of(
        ['region1', 'chr1', '/path/to/region1_chr1.vcf'],
        ['region2', 'chr1', '/path/to/region2_chr1.vcf'],
        ['region1', 'chr2', '/path/to/region1_chr2.vcf'],
        ['region2', 'chr2', '/path/to/region2_chr2.vcf'],
        ['region3', 'chr2', '/path/to/region3_chr2.vcf']
    )
    .map { region, chr, vcf -> tuple( groupKey(chr, chr_frequency[chr]), vcf ) }
    .groupTuple()
    .view()
```

Outputs:

```
[chr1, [/path/to/region1_chr1.vcf, /path/to/region2_chr1.vcf]]
[chr2, [/path/to/region1_chr2.vcf, /path/to/region2_chr2.vcf, /path/to/region3_chr2.vcf]]
```
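To see why giving groupTuple an expected size helps, here is a toy simulation in plain Groovy (not Nextflow's implementation): items arrive one at a time, and a group is released as soon as it holds the expected number of items, using the chr_frequency counts from the example above.

```groovy
def expected = ['chr1': 2, 'chr2': 3]   // same counts as chr_frequency
def arrivals = [
    ['chr1', 'region1_chr1.vcf'],
    ['chr1', 'region2_chr1.vcf'],
    ['chr2', 'region1_chr2.vcf'],
    ['chr2', 'region2_chr2.vcf'],
    ['chr2', 'region3_chr2.vcf']
]

def pending = [:]   // key -> items collected so far
def emitted = []    // groups in the order they are released

arrivals.eachWithIndex { item, i ->
    def (chr, vcf) = item
    pending.get(chr, []) << vcf   // buffer the item under its key
    if (pending[chr].size() == expected[chr]) {
        // the group is complete, so release it without waiting for other keys
        emitted << [afterItem: i + 1, group: [chr, pending.remove(chr)]]
    }
}

emitted.each { println it }
```

The chr1 group is released after the second arrival, before any chr2 item has been seen; without the sizes, both groups would have to wait for the last item.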

As a further explanation, take a look at the following example, which shows a dummy read mapping process:

```groovy
process MapReads {
    input:
    tuple val(meta), path(reads)
    path(genome)

    output:
    tuple val(meta), path("*.bam")

    "touch out.bam"
}

workflow {
    reference = Channel.fromPath("data/genome.fasta").first()

    Channel.fromPath("data/samplesheet.csv")
    | splitCsv( header:true )
    | map { row ->
        def meta = row.subMap('id', 'repeat', 'type')
        [meta, [
            file(row.fastq1, checkIfExists: true),
            file(row.fastq2, checkIfExists: true)]]
    }
    | set { samples }

    MapReads( samples, reference )
    | view
}
```

Let's consider that you might now want to merge the repeats. You'll need to group BAMs that share the id and type attributes.

```groovy
MapReads( samples, reference )
| map { meta, bam -> [meta.subMap('id', 'type'), bam] }
| groupTuple
| view
```

This is easy enough, but the groupTuple operator has to wait until all items are emitted from the incoming queue before it is able to reassemble the output queue. If even one read mapping job takes a long time, the processing of all other samples is held up. You need a way of signalling to Nextflow how many items are in a given group so that items can be emitted as early as possible.

By default, the groupTuple operator groups on the first item in the element, which at the moment is a Map. You can turn this map into a special class using the groupKey method, which takes your grouping object as its first parameter and the number of expected elements as its second parameter.
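The meta map manipulations used in these examples are plain Groovy map methods, so they can be checked outside a pipeline. A small sketch with a made-up meta map: subMap keeps only the grouping fields, and + returns a new map with extra keys, leaving the original untouched.

```groovy
def meta = [id: 'sampleA', repeat: '1', type: 'tumor']   // hypothetical sample sheet row

def groupingKey = meta.subMap('id', 'type')      // keep only the grouping fields
def withCount = groupingKey + [repeatcount: 2]   // `+` builds a new map

println groupingKey   // [id:sampleA, type:tumor]
println withCount     // [id:sampleA, type:tumor, repeatcount:2]
```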

MapReads( samples, reference )

| map { meta, bam ->

key = groupKey(meta.subMap('id', 'type'), NUMBER_OF_ITEMS_IN_GROUP)

[key, bam]

}

| groupTuple

| viewSo modifying the upstream channel would look like this:

workflow {

reference = Channel.fromPath("data/genome.fasta").first()

Channel.fromPath("data/samplesheet.csv")

| splitCsv( header:true )

| map { row ->

def meta = row.subMap('id', 'repeat', 'type')

[meta, [file(row.fastq1, checkIfExists: true), file(row.fastq2, checkIfExists: true)]]

}

| map { meta, reads -> [meta.subMap('id', 'type'), meta.repeat, reads] }

| groupTuple

| map { meta, repeats, reads -> [meta + [repeatcount:repeats.size()], repeats, reads] }

| transpose

| map { meta, repeat, reads -> [meta + [repeat:repeat], reads]}

| set { samples }

MapReads( samples, reference )

| map { meta, bam ->

def key = groupKey(meta.subMap('id', 'type'), meta.repeatcount)

[key, bam]

}

| groupTuple

| view

}

Now that the repeats are together in one output channel, you can combine them using some "advanced bioinformatics":

process CombineBams {

input:

tuple val(meta), path("input/in_*_.bam")

output:

tuple val(meta), path("combined.bam")

"cat input/*.bam > combined.bam"

}

// ...

// In the workflow

MapReads( samples, reference )

| map { meta, bam ->

def key = groupKey(meta.subMap('id', 'type'), meta.repeatcount)

[key, bam]

}

| groupTuple

| CombineBams

Because groupKey is used here, groupTuple emits each group as soon as it is complete instead of waiting for all of the mapping jobs to finish. groupTuple knows how many items to expect for each key (the second argument to groupKey, here the value of meta.repeatcount), so the moment that many BAMs have arrived for a given id and type, the tuple is emitted and CombineBams can start working on it. On large runs with tens to hundreds of samples, this can save a lot of time because downstream processes begin on finished groups immediately.
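To try the counting, transpose and groupKey pattern without the samplesheet or the MapReads process, here is a minimal self-contained sketch. The inline tuples and BAM names are hypothetical stand-ins (plain strings instead of real files), and the comment marks where a per-repeat process would normally run:

```nextflow
#!/usr/bin/env nextflow

workflow {
    // Hypothetical inline tuples standing in for samplesheet rows / MapReads output
    Channel.of(
        [[id: 'sampleA', type: 'tumor', repeat: '1'], 'A_rep1.bam'],
        [[id: 'sampleA', type: 'tumor', repeat: '2'], 'A_rep2.bam'],
        [[id: 'sampleB', type: 'tumor', repeat: '1'], 'B_rep1.bam']
    )
    | map { meta, bam -> [meta.subMap('id', 'type'), meta.repeat, bam] }   // group on id/type only
    | groupTuple                                                           // waits for the whole channel
    | map { meta, repeats, bams -> [meta + [repeatcount: repeats.size()], repeats, bams] }
    | transpose                                                            // one element per repeat again
    | map { meta, repeat, bam -> [meta + [repeat: repeat], bam] }          // a process like MapReads would go here
    | map { meta, bam -> [groupKey(meta.subMap('id', 'type'), meta.repeatcount), bam] }
    | groupTuple                                                           // emits a group once repeatcount items arrive
    | view
}
```

Note that the first groupTuple still has to wait for the entire input channel; only the second one, which receives the groupKey, can emit each group early.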

Note

The class of a groupKey object is nextflow.extension.GroupKey. If you need the raw contents of the groupKey object (e.g. the metadata map), you can use the .getGroupTarget() method to extract those. See here for more information.
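A minimal sketch of unwrapping the key, again with hypothetical inline values: the map after groupTuple replaces each GroupKey with the original meta map via getGroupTarget(), so downstream code works with a plain map again.

```nextflow
workflow {
    Channel.of(
        [[id: 'sampleA', type: 'tumor'], 'A_rep1.bam'],   // hypothetical inline data
        [[id: 'sampleA', type: 'tumor'], 'A_rep2.bam']
    )
    | map { meta, bam -> [groupKey(meta, 2), bam] }       // expect 2 items per group
    | groupTuple
    | map { key, bams -> [key.getGroupTarget(), bams] }   // GroupKey -> original meta map
    | view { meta, bams -> "${meta.id} (${meta.type}): ${bams}" }
}
```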

The transpose operator is often misunderstood. It can be thought of as the inverse of the groupTuple operator (see here for an example).

When groupTuple is directly followed by transpose, as in the second workflow below, the two operators cancel each other out: removing the groupTuple and transpose lines from it returns the same elements.

Start with a workflow that groups the samplesheet rows on a meta map containing only id and type, so that it returns one element per sample and type:

workflow {

Channel.fromPath("data/samplesheet.csv")

| splitCsv(header: true)

| map { row ->

meta = [id: row.id, type: row.type]

[meta, row.repeat, [row.fastq1, row.fastq2]]

}

| groupTuple

| view

}

Outputs:

N E X T F L O W ~ version 23.04.1

Launching `./main.nf` [spontaneous_rutherford] DSL2 - revision: 7dc1cc0039

[[id:sampleA, type:normal], [1, 2], [[data/reads/sampleA_rep1_normal_R1.fastq.gz, data/reads/sampleA_rep1_normal_R2.fastq.gz], [data/reads/sampleA_rep2_normal_R1.fastq.gz, data/reads/sampleA_rep2_normal_R2.fastq.gz]]]

[[id:sampleA, type:tumor], [1, 2], [[data/reads/sampleA_rep1_tumor_R1.fastq.gz, data/reads/sampleA_rep1_tumor_R2.fastq.gz], [data/reads/sampleA_rep2_tumor_R1.fastq.gz, data/reads/sampleA_rep2_tumor_R2.fastq.gz]]]

[[id:sampleB, type:normal], [1], [[data/reads/sampleB_rep1_normal_R1.fastq.gz, data/reads/sampleB_rep1_normal_R2.fastq.gz]]]

[[id:sampleB, type:tumor], [1], [[data/reads/sampleB_rep1_tumor_R1.fastq.gz, data/reads/sampleB_rep1_tumor_R2.fastq.gz]]]

[[id:sampleC, type:normal], [1], [[data/reads/sampleC_rep1_normal_R1.fastq.gz, data/reads/sampleC_rep1_normal_R2.fastq.gz]]]

[[id:sampleC, type:tumor], [1], [[data/reads/sampleC_rep1_tumor_R1.fastq.gz, data/reads/sampleC_rep1_tumor_R2.fastq.gz]]]

If we add in a transpose, each repeat number is matched back to the appropriate list of reads:

workflow {

Channel.fromPath("data/samplesheet.csv")

| splitCsv(header: true)

| map { row ->

meta = [id: row.id, type: row.type]

[meta, row.repeat, [row.fastq1, row.fastq2]]

}

| groupTuple

| transpose

| view

}

Outputs:

N E X T F L O W ~ version 23.04.1

Launching `./main.nf` [elegant_rutherford] DSL2 - revision: 2c5476b133

[[id:sampleA, type:normal], 1, [data/reads/sampleA_rep1_normal_R1.fastq.gz, data/reads/sampleA_rep1_normal_R2.fastq.gz]]

[[id:sampleA, type:normal], 2, [data/reads/sampleA_rep2_normal_R1.fastq.gz, data/reads/sampleA_rep2_normal_R2.fastq.gz]]

[[id:sampleA, type:tumor], 1, [data/reads/sampleA_rep1_tumor_R1.fastq.gz, data/reads/sampleA_rep1_tumor_R2.fastq.gz]]

[[id:sampleA, type:tumor], 2, [data/reads/sampleA_rep2_tumor_R1.fastq.gz, data/reads/sampleA_rep2_tumor_R2.fastq.gz]]

[[id:sampleB, type:normal], 1, [data/reads/sampleB_rep1_normal_R1.fastq.gz, data/reads/sampleB_rep1_normal_R2.fastq.gz]]

[[id:sampleB, type:tumor], 1, [data/reads/sampleB_rep1_tumor_R1.fastq.gz, data/reads/sampleB_rep1_tumor_R2.fastq.gz]]

[[id:sampleC, type:normal], 1, [data/reads/sampleC_rep1_normal_R1.fastq.gz, data/reads/sampleC_rep1_normal_R2.fastq.gz]]

[[id:sampleC, type:tumor], 1, [data/reads/sampleC_rep1_tumor_R1.fastq.gz, data/reads/sampleC_rep1_tumor_R2.fastq.gz]]
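The inverse relationship is easier to see with toy data. This sketch uses a hypothetical inline channel of [key, list] pairs: transpose splits each list into one element per value, and a following groupTuple collects them back into the original shape.

```nextflow
workflow {
    // Hypothetical inline data: each element is [key, list of values]
    grouped = Channel.of(['a', [1, 2]], ['b', [3]])

    // transpose emits one [key, value] element per value in the list
    grouped | transpose | view { "transposed: $it" }

    // groupTuple reassembles them into [key, list] form
    grouped | transpose | groupTuple | view { "regrouped: $it" }
}
```

The two view lines may interleave in any order, since both branches consume the same channel concurrently.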

The join operator is similar to the groupTuple operator in that it matches elements by key, except that it joins elements from multiple channels.

The join operator creates a channel that joins together the items emitted by two channels with a matching key. The key is defined, by default, as the first element in each item emitted.

left = Channel.of(['X', 1], ['Y', 2], ['Z', 3], ['P', 7])

right = Channel.of(['Z', 6], ['Y', 5], ['X', 4])

left.join(right).view()

Output

[Z, 3, 6]

[Y, 2, 5]

[X, 1, 4]

Note

By default, join drops elements that don't have a match (notice that the P key and its value are missing from the output above). This behaviour can be changed with the remainder option. See here for more details.
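A short sketch of the remainder option, reusing the same two channels: with remainder: true, the unmatched P item is kept and the missing value from the right channel is filled with null.

```nextflow
workflow {
    left  = Channel.of(['X', 1], ['Y', 2], ['Z', 3], ['P', 7])
    right = Channel.of(['Z', 6], ['Y', 5], ['X', 4])

    // remainder: true keeps items without a match instead of dropping them
    left.join(right, remainder: true).view()
}
```

Besides the three matched tuples, the output should now also contain [P, 7, null].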

The branch operator allows you to forward the items emitted by a source channel to one or more output channels.

The selection criteria are defined by a closure containing one or more boolean expressions, each identified by a unique label. Each item is sent to the output channel named after the label of the first expression that evaluates to true.

Example:

Channel

.of(1, 2, 3, 10, 40, 50)

.branch {

small: it <= 10

large: it > 10

}

.set { result }

result.small.view { "$it is small" }

result.large.view { "$it is large" }

Output

1 is small

40 is large

2 is small

10 is small

3 is small

50 is large

An element is only emitted to a channel where the test condition is met. If an element does not meet any of the tests, it is not emitted to any of the output channels. You can 'catch' any such samples by specifying true as a condition.

Example:

workflow {

Channel.fromPath("data/samplesheet.csv")

| splitCsv( header: true )

| map { row -> [[id: row.id, repeat: row.repeat, type: row.type], [file(row.fastq1), file(row.fastq2)]] }

| branch { meta, reads ->

tumor: meta.type == "tumor"

normal: meta.type == "normal"

other: true

}

| set { samples }

samples.tumor | view { "Tumor: $it"}

samples.normal | view { "Normal: $it"}

samples.other.subscribe { log.warn "Non-tumor/normal sample found in samples: $it" } // view would also print the null returned by log.warn

}

Here, other catches any rows that don't fall into either of the other branches, which is useful for spotting typos or errors in the data.

You can then combine this with log.warn to warn the user that there may be an error or, more strictly, with the error function to halt the pipeline. Note that log.error only logs an error message; it does not stop the run.
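A minimal sketch of both options, assuming hypothetical inline rows in place of the samplesheet (one with a deliberate "tumour" typo so that it lands in other). The lenient version logs a warning and carries on; the commented-out strict version calls error, which aborts the run.

```nextflow
workflow {
    Channel.of(
        [[id: 'sampleA', type: 'tumor'], 'sampleA.fastq.gz'],   // hypothetical rows
        [[id: 'sampleB', type: 'tumour'], 'sampleB.fastq.gz']   // typo in type
    )
    | branch { meta, reads ->
        tumor: meta.type == 'tumor'
        normal: meta.type == 'normal'
        other: true                                              // catch-all
    }
    | set { samples }

    samples.tumor.view { "Tumor: $it" }

    // Lenient: log a warning and keep running
    samples.other.subscribe { log.warn "Unexpected sample type: $it" }

    // Strict alternative: error() stops the whole pipeline
    // samples.other.subscribe { error "Unexpected sample type: $it" }
}
```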

You may also want to emit a slightly different element than the one passed as input. The branch operator can optionally return a new element to a channel. For example, to add an extra key to the meta map of the tumor samples, add a new line under the condition that returns the new element. In this example, the first element of the list is replaced with the result of merging the existing meta map with a new map containing a single key:

branch { meta, reads ->

tumor: meta.type == "tumor"

return [meta + [newKey: 'myValue'], reads]

normal: true

}

The multiMap channel operator is similar to the branch channel operator.

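The multiMap operator (see its documentation) takes a single input channel and, for each input element, emits a value into each of several output channels. Unlike branch, which sends each element to at most one output channel, multiMap emits into every declared channel.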

Let's assume we've been given a samplesheet that has tumor/normal pairs bundled together on the same row. View the example samplesheet with:

cd operators

cat data/samplesheet.ugly.csv

Using the splitCsv operator would give us one entry per row containing all four fastq files. Let's say we want to split these fastqs into separate channels for tumor and normal. In other words, for every row in the samplesheet, we would like to emit an entry into two new channels. To do this, we can use the multiMap operator:

workflow {

Channel.fromPath("data/samplesheet.ugly.csv")

| splitCsv( header: true )

| multiMap { row ->

tumor:

metamap = [id: row.id, type:'tumor', repeat:row.repeat]