Part 2: Front & Backend

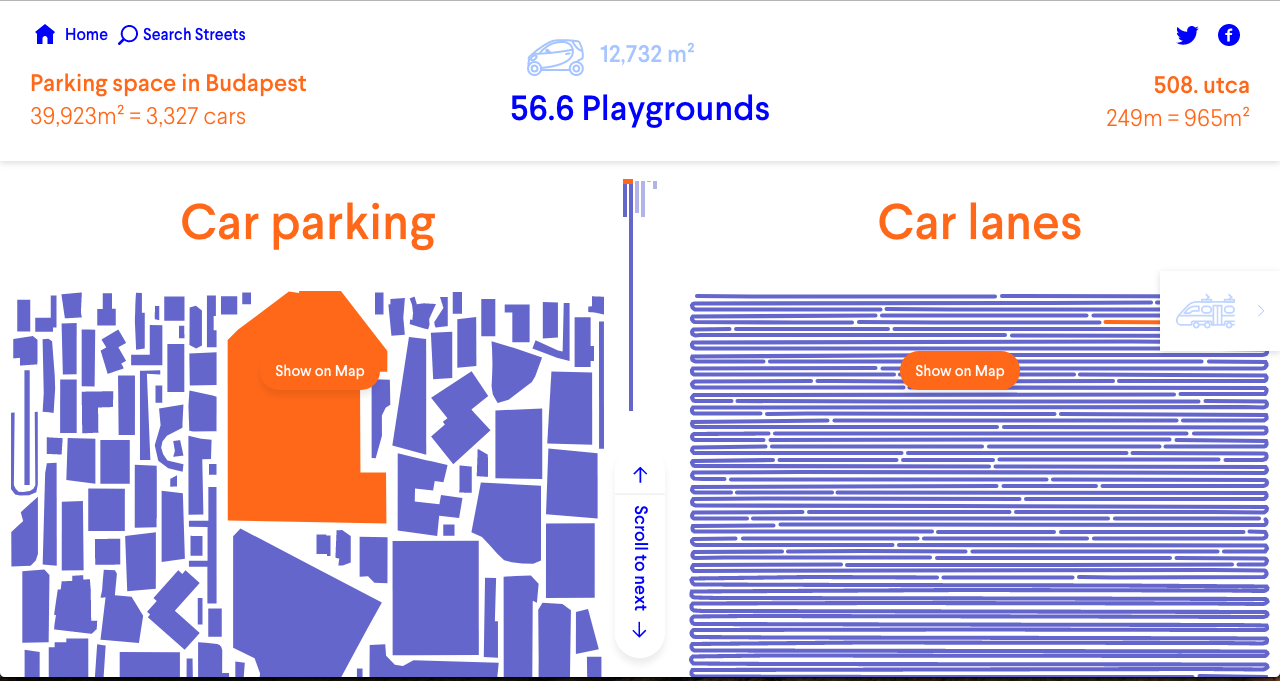

What the Street!? grew out of the question “How do new and old mobility concepts change our cities?”, raised by Michael Szell and Stephan Bogner during their residency at moovel lab. With the support of the lab team, they set out to wrangle data from cities around the world to develop and design this unique mobility space report.

What the Street!? was built with open-source software and resources. Thanks to the OpenStreetMap contributors and many other open-source pieces, we were able to put together the puzzle of urban mobility space seen above.

Live project: https://whatthestreet.com/

Read more about the technical details behind What the Street!? on our blog https://move-lab.space/blog/about-what-the-street and in the scientific paper https://www.cogitatiopress.com/urbanplanning/article/view/1209/.

The complete codebase consists of two independent parts:

- Data Wrangling

- Front & Backend

This is part 2. You can find part 1 here: https://github.com/mszell/whatthestreet-datawrangling

What the Street!? runs with:

- A Node.js app (using Express.js) that serves the front end (this repo); the front end is based on React.js and uses Next.js

- A MongoDB server that contains the data needed by the Node.js app

- An external script that generates the video "gifs" of the streets uncoiling (not needed to run the app; the videos are generated beforehand)

You need Node.js and MongoDB installed on the machine to run the project.

During development, the code was forked from another codebase, and because we iterated quickly to meet the deadline, there is little style consistency between the code ported from the previous codebase and the new code. For example, Redux state management is sometimes kept in a single file containing all the logic and sometimes split across several files, and variable naming differs. However, this shouldn't hinder understanding of the codebase.

That said, the project would not run smoothly without careful optimization. We followed front-end best practices to deliver a smooth interface, such as using PureComponent, requestAnimationFrame, prefetching, immutable data structures, and server-side rendering, and avoiding costly DOM operations during animations.

The MongoDB server is not configured with authentication (no username or password).

To import the data into MongoDB:

- Download an example city here: https://gif.whatthestreet.com.s3.amazonaws.com/data/stuttgart_coiled.gz

- Import the data with the following command (each city must be imported into a database named after the schema ${cityname}_coiled_2):

mongorestore --nsInclude 'stuttgart_coiled.*' --nsFrom 'stuttgart_coiled.$collection$' --nsTo 'stuttgart_coiled_2.$collection$' --gzip --archive=stuttgart_coiled.gz

- Double-check that the data was imported by opening the mongo shell and listing the databases:

mongo

show dbs

You should see something like:

stuttgart_coiled_2 0.202GB

- Copy this repo (you can exclude the documents folder)

- Add your Mapbox token and analytics settings to the config.json file: https://github.com/mszell/whatthestreet/blob/master/config.json

Then install the dependencies, build, and start the app:

npm install

npm run build (takes a while)

npm run start (listens on the port set in the PORT environment variable, or on port 80 if PORT is not set)
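If you import several cities, the mongorestore call and the show dbs check above can be generated and verified programmatically. The following TypeScript is a minimal, hypothetical sketch: targetDbName, mongorestoreArgs, and importedCities are not part of this repo, and the code assumes that each archive is named ${cityname}_coiled.gz and contains a ${cityname}_coiled database (as in the Stuttgart example), and that show dbs prints one database name per line, followed by its size.

```typescript
// Hypothetical helpers, not part of the What the Street!? codebase.
// Assumption: each city archive is named ${city}_coiled.gz and contains a
// database named ${city}_coiled, as in the Stuttgart example.

/** Database name the app expects for a city (${cityname}_coiled_2 schema). */
function targetDbName(city: string): string {
  return `${city}_coiled_2`;
}

/** Arguments for mongorestore (without the program name) for one city. */
function mongorestoreArgs(city: string): string[] {
  // Assumption: city names are lowercase identifiers such as "stuttgart".
  if (!/^[a-z0-9_]+$/.test(city)) {
    throw new Error(`unexpected city name: ${city}`);
  }
  const sourceDb = `${city}_coiled`;
  return [
    "--nsInclude", `${sourceDb}.*`,
    // Rename every collection from ${city}_coiled into ${city}_coiled_2.
    "--nsFrom", `${sourceDb}.$collection$`,
    "--nsTo", `${targetDbName(city)}.$collection$`,
    "--gzip",
    `--archive=${sourceDb}.gz`,
  ];
}

/** Cities whose ${cityname}_coiled_2 database appears in show dbs output. */
function importedCities(showDbsOutput: string): string[] {
  const cities: string[] = [];
  for (const line of showDbsOutput.split("\n")) {
    // The first whitespace-separated token is the database name.
    const dbName = line.trim().split(/\s+/)[0] || "";
    const match = /^(.+)_coiled_2$/.exec(dbName);
    const city = match ? match[1] : undefined;
    if (city) {
      cities.push(city);
    }
  }
  return cities;
}
```

For example, ["mongorestore", ...mongorestoreArgs("stuttgart")] reproduces the command above. When the arguments are passed as an array to Node's child_process.spawn (without a shell), the * and $ characters need no shell quoting. Running importedCities on the show dbs output ignores a database that was restored without the _2 rename.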

You can set the following environment variables to override the defaults:

- MONGODB_URL (default: mongodb://localhost:27017)

- MONGODB_USER (optional; used only if MONGODB_PASSWORD is also set)

- MONGODB_PASSWORD (optional; used only if MONGODB_USER is also set)

- MONGODB_SSL (optional; enables SSL for the DocumentDB connection)

- PORT (default: 80; the port the HTTP server listens on)
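To make the interplay of these variables concrete, here is a minimal TypeScript sketch of how they could be resolved. It is hypothetical (resolveConfig is not the app's actual connection code) and assumes that the credentials are inserted into MONGODB_URL, and that MONGODB_SSL counts as enabled for any value other than empty, false, or 0.

```typescript
// Hypothetical sketch of resolving the documented environment variables;
// the app's real connection code may differ.

type Env = { [name: string]: string | undefined };

interface AppConfig {
  mongoUrl: string; // MONGODB_URL, with credentials only if both are set
  ssl: boolean;     // MONGODB_SSL
  port: number;     // PORT for the HTTP server
}

function resolveConfig(env: Env): AppConfig {
  const baseUrl = env["MONGODB_URL"] || "mongodb://localhost:27017";
  const user = env["MONGODB_USER"];
  const password = env["MONGODB_PASSWORD"];

  let mongoUrl = baseUrl;
  const schemeEnd = baseUrl.indexOf("://");
  // User and password are ignored unless BOTH are set.
  if (user && password && schemeEnd !== -1) {
    const prefix = baseUrl.slice(0, schemeEnd + 3);
    const rest = baseUrl.slice(schemeEnd + 3);
    // Percent-encode credentials so characters like "@" or ":" stay valid.
    mongoUrl = `${prefix}${encodeURIComponent(user)}:${encodeURIComponent(password)}@${rest}`;
  }

  // Assumption: empty, "false" and "0" disable SSL; anything else enables it.
  const sslRaw = (env["MONGODB_SSL"] || "").trim().toLowerCase();
  const ssl = sslRaw !== "" && sslRaw !== "false" && sslRaw !== "0";

  // Fall back to port 80 when PORT is missing or not a positive number.
  const parsedPort = parseInt(env["PORT"] || "", 10);
  const port = !isNaN(parsedPort) && parsedPort > 0 ? parsedPort : 80;

  return { mongoUrl, ssl, port };
}
```

In Node.js you would call resolveConfig(process.env). Percent-encoding matters because the MongoDB connection string format requires special characters in the username and password to be encoded.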

We implemented a script that uses PhantomJS to record MP4 files of the uncoiling animations. You can find it here: https://github.com/mszell/whatthestreet/blob/master/recorder/main.sh (we used it on Mac OS X; it has not been tested on Linux).

Dependencies:

- phantomjs

- ffmpeg

To run it, use:

./main.sh $CITY $MOBILITY_TYPE $STREET_ID

For example: ./main.sh berlin car 3

The video file is written to the recorder/data/${city} folder.
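When recording many streets, it can help to build the invocations programmatically. The TypeScript below is a hypothetical sketch (recordingJob is not part of the recorder): it only assembles the documented positional arguments and output folder, and it assumes that the city and mobility type are lowercase identifiers like berlin and car.

```typescript
// Hypothetical helper: assembles a recorder/main.sh invocation and the
// folder the script is documented to write to. It does not run anything.

interface RecordingJob {
  command: string;   // script to invoke, relative to the recorder folder
  args: string[];    // positional arguments: city, mobility type, street id
  outputDir: string; // recorder/data/${city}, as documented above
}

function recordingJob(city: string, mobilityType: string, streetId: number): RecordingJob {
  // Assumption: both names are simple lowercase identifiers.
  if (!/^[a-z0-9_]+$/.test(city) || !/^[a-z0-9_]+$/.test(mobilityType)) {
    throw new Error("city and mobility type must be lowercase identifiers");
  }
  if (!(streetId >= 0 && Math.floor(streetId) === streetId)) {
    throw new Error(`street id must be a non-negative integer, got ${streetId}`);
  }
  return {
    command: "./main.sh",
    args: [city, mobilityType, String(streetId)],
    outputDir: `recorder/data/${city}`,
  };
}
```

Assuming the repo root as the working directory, a job could then be started with Node's child_process.spawn(job.command, job.args, { cwd: "recorder" }).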

The core of the script is https://github.com/mszell/whatthestreet/blob/master/recorder/record.js. The principles for recording an animation with PhantomJS are:

- Implement the animation with a slowdown factor, because PhantomJS can't capture frames at 30 FPS (the separate implementation can be found here: https://github.com/mszell/whatthestreet/blob/master/app/mapmobile/MapMobile.js, and here you can see it)

- Notify PhantomJS when the page is ready to animate (https://github.com/mszell/whatthestreet/blob/master/app/mapmobile/MapMobile.js#L281)

- PhantomJS takes screenshots at 10 FPS, and ffmpeg then turns the frames into a video (https://github.com/mszell/whatthestreet/blob/master/recorder/main.sh#L14)
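The slowdown factor follows from the two frame rates. Here is a minimal TypeScript sketch of the arithmetic, assuming that the final video should play at 30 FPS (inferred from the text above, not read from record.js) while PhantomJS captures 10 screenshots per second. The function names are hypothetical.

```typescript
// Timing arithmetic for a slowed-down capture (hypothetical helper names).
// Assumption: the final video plays at 30 FPS; PhantomJS captures at 10 FPS.

const TARGET_FPS = 30;  // frame rate of the assembled video (assumed)
const CAPTURE_FPS = 10; // screenshots per second taken by PhantomJS

/** How many times slower the animation must run while it is captured. */
function slowdownFactor(targetFps: number, captureFps: number): number {
  if (targetFps <= 0 || captureFps <= 0) {
    throw new Error("frame rates must be positive");
  }
  return targetFps / captureFps;
}

/** Wall-clock time (s) at which the i-th screenshot is taken. */
function captureTimeOfFrame(frameIndex: number, captureFps: number): number {
  return frameIndex / captureFps;
}

/** Position (s) in the full-speed animation to draw at a wall-clock time. */
function animationTimeAt(wallClockSeconds: number, slowdown: number): number {
  return wallClockSeconds / slowdown;
}

/** Wall-clock duration (s) needed to capture an animation of given length. */
function captureDuration(animationSeconds: number, slowdown: number): number {
  return animationSeconds * slowdown;
}

const SLOWDOWN = slowdownFactor(TARGET_FPS, CAPTURE_FPS); // 3
```

Frame 30, for instance, is captured after 3 s of wall-clock time and shows t = 1 s of the animation, which is exactly where frame 30 falls in a 30 FPS video. Assembling the screenshots at the target rate (for example with ffmpeg's -framerate input option) therefore restores the original speed, at the cost of a capture that takes three times as long as the animation.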

It turned out that we could generate the videos for 20 cities in parallel on the same machine, so we didn't need to automate the upload to S3, but here is a script that can help with that.

- Tobias Lauer

- Raphael Reimann

- Joey Lee

- Daniel Schmid

- Tilman Häuser

OpenStreetMap, a free alternative to services like Google Maps. Please contribute if you notice poor data quality.