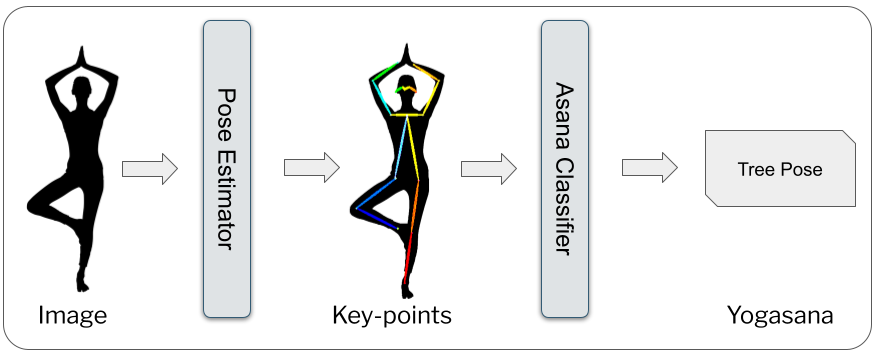

Official codebase for the paper "A View Independent Classification Framework for Yoga Postures" [SNCS][arxiv].

The required packages are listed in requirements. The only non-standard dependency is LightGBM (paper) (docs); the rest of the packages are standard libraries.

The processed datasets of inferred key points can be found here.

Preprocessing code to generate the key point dataset from raw video data and AlphaPose key points can be found here. It also includes code for bounding box normalisation, key point selection, and fold generation.
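As an illustration of bounding box normalisation, here is a minimal sketch that is not taken from the repository. It assumes the box is the tight box around the key points themselves and that each axis is scaled independently to the range 0 to 1. The function name `normalise_by_bbox` is hypothetical, and the actual preprocessing code may use the detector's box or preserve the aspect ratio instead.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def normalise_by_bbox(keypoints: List[Point]) -> List[Point]:
    """Map key points into the unit square spanned by their bounding box.

    Hypothetical helper: the box is assumed to be the tight box around
    the key points, and x and y are scaled independently.
    """
    xs = [x for x, _ in keypoints]
    ys = [y for _, y in keypoints]
    x_min, y_min = min(xs), min(ys)
    # Guard against a degenerate (zero-width or zero-height) box.
    width = (max(xs) - x_min) or 1.0
    height = (max(ys) - y_min) or 1.0
    return [((x - x_min) / width, (y - y_min) / height) for x, y in keypoints]


# Toy pose with three key points in pixel coordinates.
pose = [(100.0, 200.0), (150.0, 300.0), (200.0, 400.0)]
normalised = normalise_by_bbox(pose)
```

Normalising this way removes the subject's position and scale in the frame, so the classifier sees only the relative arrangement of the key points.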

The main classifier class can be found here. It provides a unified API for training and inference with different classification methods: AdaBoost, gradient boosting, LightGBM, random forests, and histogram-based gradient boosting. It also has an option to ensemble three of these methods. Alongside this main class, there is a cascading classifier here, which is relevant for hierarchical classification such as in Yoga-82.
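The two ideas above, ensembling three methods and cascading for hierarchical labels, can be sketched in plain Python. This is only an illustration under stated assumptions: the repository's ensemble may combine predictions differently (for example by averaging probabilities), and the names `majority_vote`, `cascade_predict`, the toy rule-based models, and the pose labels are all hypothetical stand-ins for trained classifiers.

```python
from collections import Counter
from typing import Callable, Dict, Sequence

Features = Sequence[float]
Predictor = Callable[[Features], str]


def majority_vote(models: Sequence[Predictor], x: Features) -> str:
    """Return the label most models agree on; ties go to the earliest model."""
    votes = [model(x) for model in models]
    counts = Counter(votes)
    best = max(counts.values())
    # Scan votes in model order so that tie-breaking is deterministic.
    return next(v for v in votes if counts[v] == best)


def cascade_predict(coarse: Predictor, fine: Dict[str, Predictor], x: Features) -> str:
    """Predict a coarse class first, then refine with that class's own model."""
    group = coarse(x)
    return fine[group](x)


# Toy rule-based stand-ins for three trained classifiers (assumptions).
def model_a(x: Features) -> str:
    return "standing" if x[0] > 0.5 else "sitting"


def model_b(x: Features) -> str:
    return "standing" if x[1] > 0.5 else "sitting"


def model_c(x: Features) -> str:
    return "standing" if x[0] + x[1] > 1.0 else "sitting"


# Toy fine-grained models, one per coarse class (labels are illustrative).
def standing_model(x: Features) -> str:
    return "tree" if x[1] > 0.5 else "mountain"


def sitting_model(x: Features) -> str:
    return "lotus"


sample = (0.9, 0.3)
ensemble_label = majority_vote([model_a, model_b, model_c], sample)
fine_label = cascade_predict(
    model_a, {"standing": standing_model, "sitting": sitting_model}, sample
)
```

In a cascade, each fine-grained model only has to separate the poses within one coarse group, which is the structure a hierarchical label set such as Yoga-82 provides.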

The training and evaluation scripts can be found here. They include k-fold cross-validation, along with a script to visualise inference on an entire video.
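For readers unfamiliar with subject-wise evaluation, the following is a rough sketch of how k folds could be built so that no subject appears in both the training and test split of a fold. It is not the repository's fold generation code: the function `subject_wise_folds` and the round-robin assignment of subjects to folds are assumptions made for illustration.

```python
from typing import List, Sequence, Tuple


def subject_wise_folds(
    subjects: Sequence[str], k: int
) -> List[Tuple[List[int], List[int]]]:
    """Return k (train_indices, test_indices) pairs, split by subject.

    `subjects[i]` is the subject identifier of frame i.
    """
    unique = sorted(set(subjects))
    if k > len(unique):
        raise ValueError("k cannot exceed the number of distinct subjects")
    # Assign each subject to a fold in round-robin order (an assumption).
    fold_of = {subject: i % k for i, subject in enumerate(unique)}
    folds = []
    for fold in range(k):
        test = [i for i, s in enumerate(subjects) if fold_of[s] == fold]
        train = [i for i, s in enumerate(subjects) if fold_of[s] != fold]
        folds.append((train, test))
    return folds


# Six frames recorded from four subjects.
frame_subjects = ["s1", "s1", "s2", "s3", "s3", "s4"]
folds = subject_wise_folds(frame_subjects, k=2)
```

Splitting by subject rather than by frame avoids near-duplicate frames of the same person leaking between training and test data.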

To reproduce the results, make the necessary modifications (dataset paths, method, etc.) in the corresponding config files, and run main.py with the path to the config as an argument. An example command to run frame-wise evaluation on the in-house dataset is shown below.

python3 main.py --cfg configs/in_house/frame_wise.yaml
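The `--cfg` argument in the command above could be handled along the following lines. This is a hedged sketch using only the standard library, not the actual contents of main.py; the function name `parse_args` is hypothetical, and loading and validating the YAML file itself is left out.

```python
import argparse
from pathlib import Path
from typing import List


def parse_args(argv: List[str]) -> argparse.Namespace:
    """Parse the command line; only the --cfg flag from the README is assumed."""
    parser = argparse.ArgumentParser(description="Run training and evaluation.")
    parser.add_argument(
        "--cfg",
        type=Path,
        required=True,
        help="path to the YAML config file",
    )
    return parser.parse_args(argv)


# Equivalent to: python3 main.py --cfg configs/in_house/frame_wise.yaml
args = parse_args(["--cfg", "configs/in_house/frame_wise.yaml"])
config_name = args.cfg.name
```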

Some relevant config files are:

- Frame-wise evaluation on the in-house dataset (yaml)

- Subject-wise evaluation on the in-house dataset (yaml)

- Camera-wise evaluation, training on 3 camera angles of the in-house dataset (yaml)

This is being developed as part of the Yogasana classification project under Prof. Rahul Garg, CSE, IITD.

If you find this work useful in your research, please consider citing it.

    @article{chasmai2022view,
      title={A View Independent Classification Framework for Yoga Postures},
      author={Chasmai, Mustafa and Das, Nirjhar and Bhardwaj, Aman and Garg, Rahul},
      journal={Springer Nature Computer Science},
      url={https://doi.org/10.1007/s42979-022-01376-7},
      year={2022}
    }