Benchpress [1] is a Snakemake workflow where structure learning algorithms, implemented in possibly different languages, can be executed and compared. The computations scale seamlessly on multiple cores or "... to server, cluster, grid and cloud environments, without the need to modify the workflow definition" - Snakemake. The documentation is found at https://benchpressdocs.readthedocs.io.

The following main functionalities are provided by Benchpress

- Benchmarks - Benchmark publically available structure learning algorithms.

- Algorithm development - Benchmark your own algorithm along with the existing ones while developing.

- Data analysis - Estimate the underlying graph structure for your own dataset(s).

Some systems require explicit installation of squash-tools. Using conda it can be installed as

$ conda install -c conda-forge squash-tools

Benchpress cannot run directly on macOS/Windows as it requires Singularity which is only supported by Linux systems. However, Linux can be installed (and the requirements above) on a virtual machine via e.g. VirtualBox.

As Benchpress is a Snakemake workflow, once the requirements are installed it requires no further installation but cloning the repository as

$ git clone https://github.com/felixleopoldo/benchpress.git

$ cd benchpress

If you are using a virtualiser such as VirtualBox, this folder should still be located on macOS/Windows and shared to the virtual machine. In this way, all the files used by Benchpress are reachable from macOS/Windows.

Benchpress supports five different data scenarios, built from combining different sources of graph parameters and data.

| Graph | Parameters | Data | |

|---|---|---|---|

| I | - | - | Fixed |

| II | Fixed | - | Fixed |

| III | Fixed | Fixed | Generated |

| IV | Fixed | Generated | Generated |

| V | Generated | Generated | Generated |

The directory resources/ contains the fixed graphs, parameters, and datasets that are already available. It containts, e.g., all the graphs (and corresponding parameters) from the Bayesian networks repository, downloaded from bnlearns homepage. You can also place your own files in the corresponding directories and use them in the same way as the existing ones. The methods to generate graphs, parameters and data are listed further down.

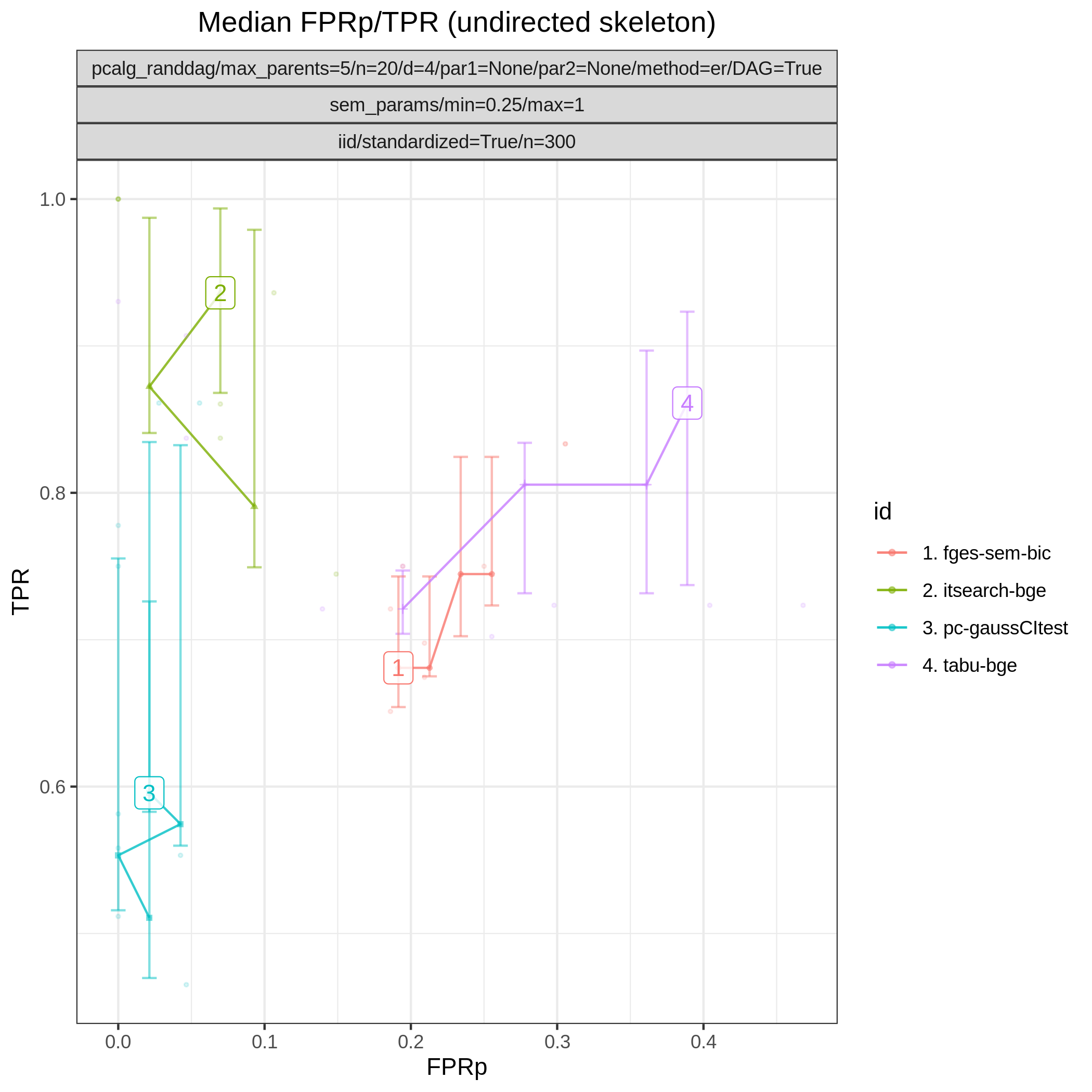

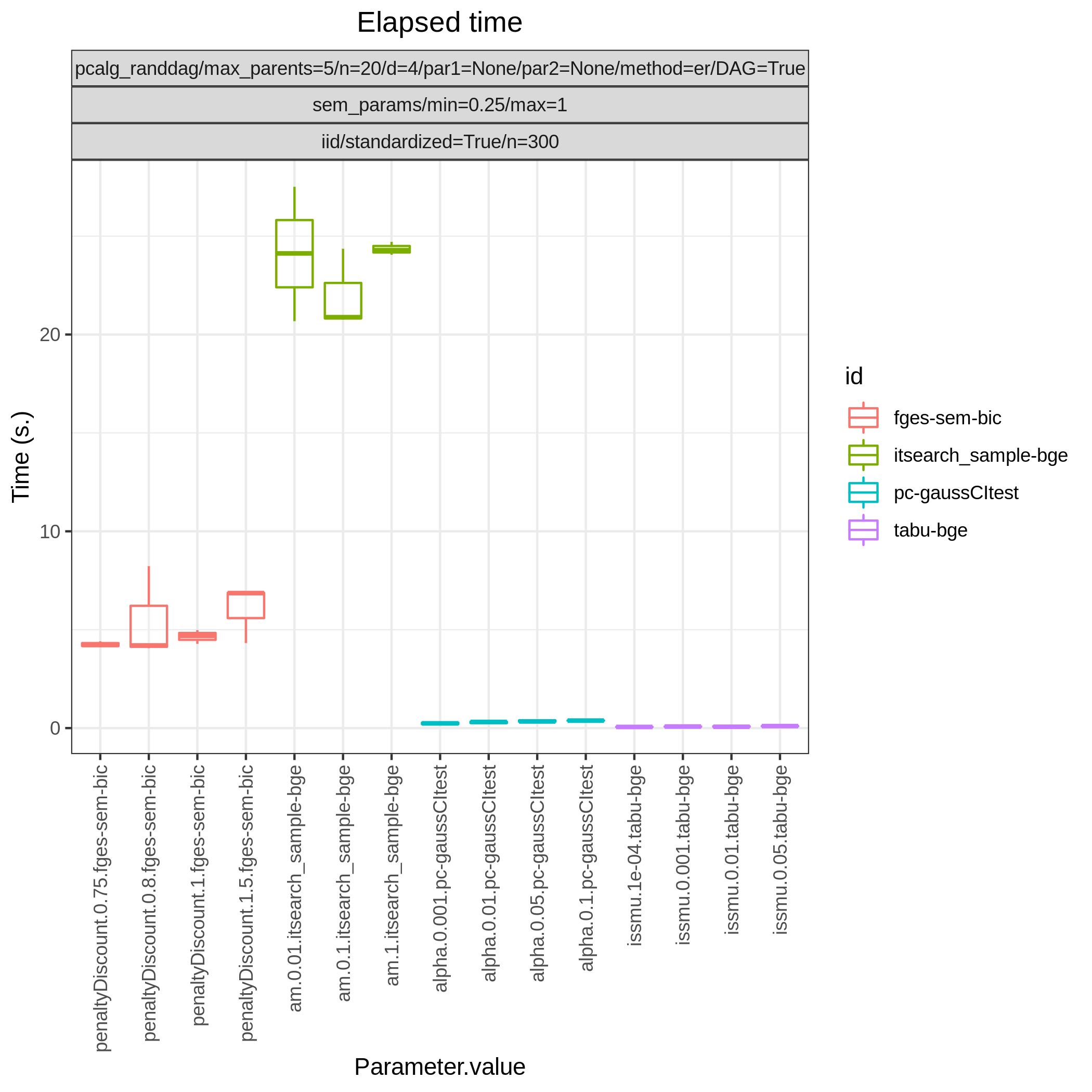

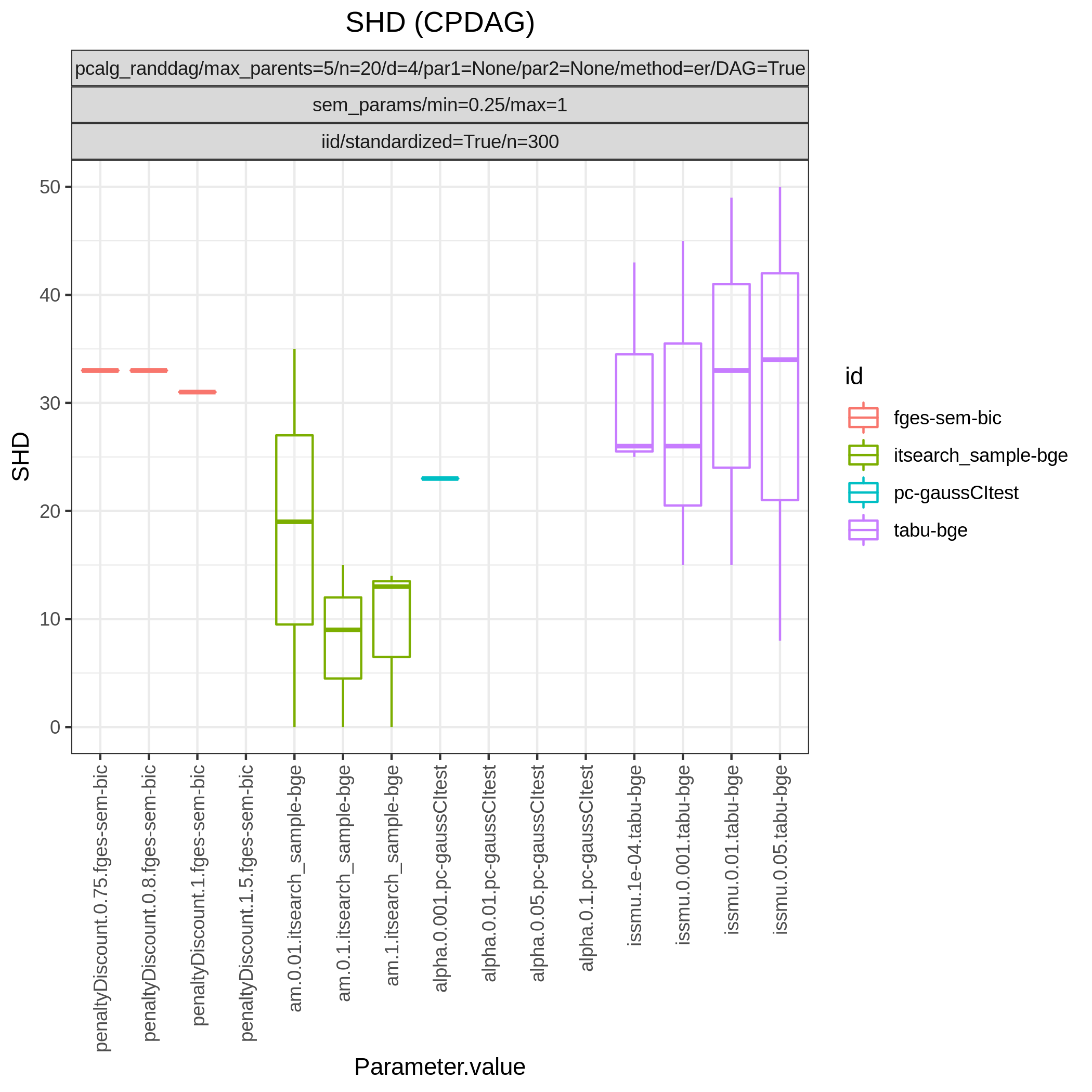

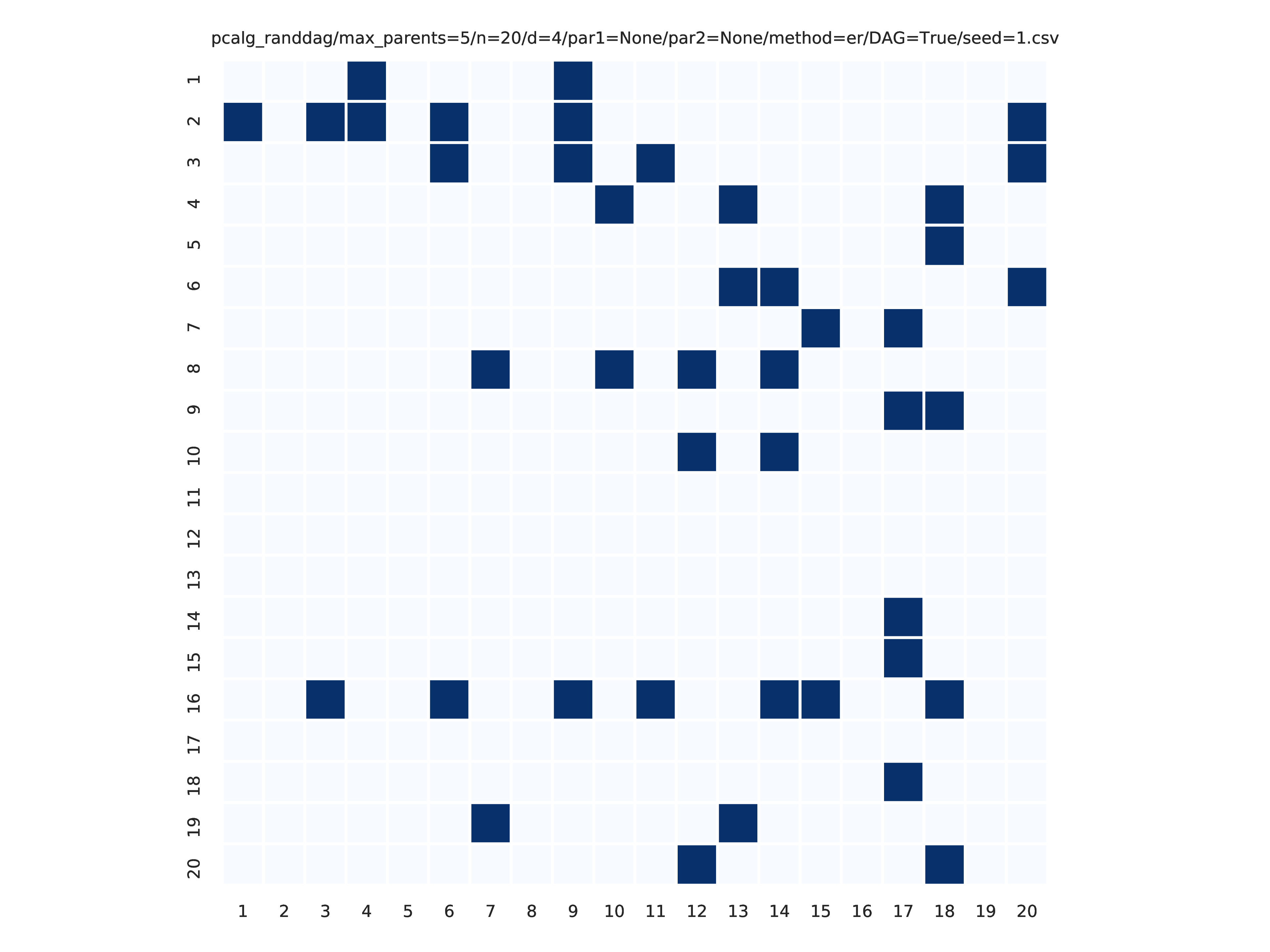

This study is an example of data scenario V based on three continuous datasets corresponing to three realisations of a random linear Gaussian structural equation model (SEM) with random DAG. The DAGs are sampled from a restricted Erdős–Rényi distribution using the pcalg_randdag module and the weight parameters are sampled uniformly using the sem_params module. For simplicity we use only a few structure learning modules here (bidag_itsearch, tetrad_fges, bnlearn_tabu, pcalg_pc) with different parameter settings. The full setup is found here config/config.json.

To run this study (333 jobs ~ 10 minutes on a 2-cores laptop) type

$ snakemake --cores all --use-singularity --configfile config/config.json

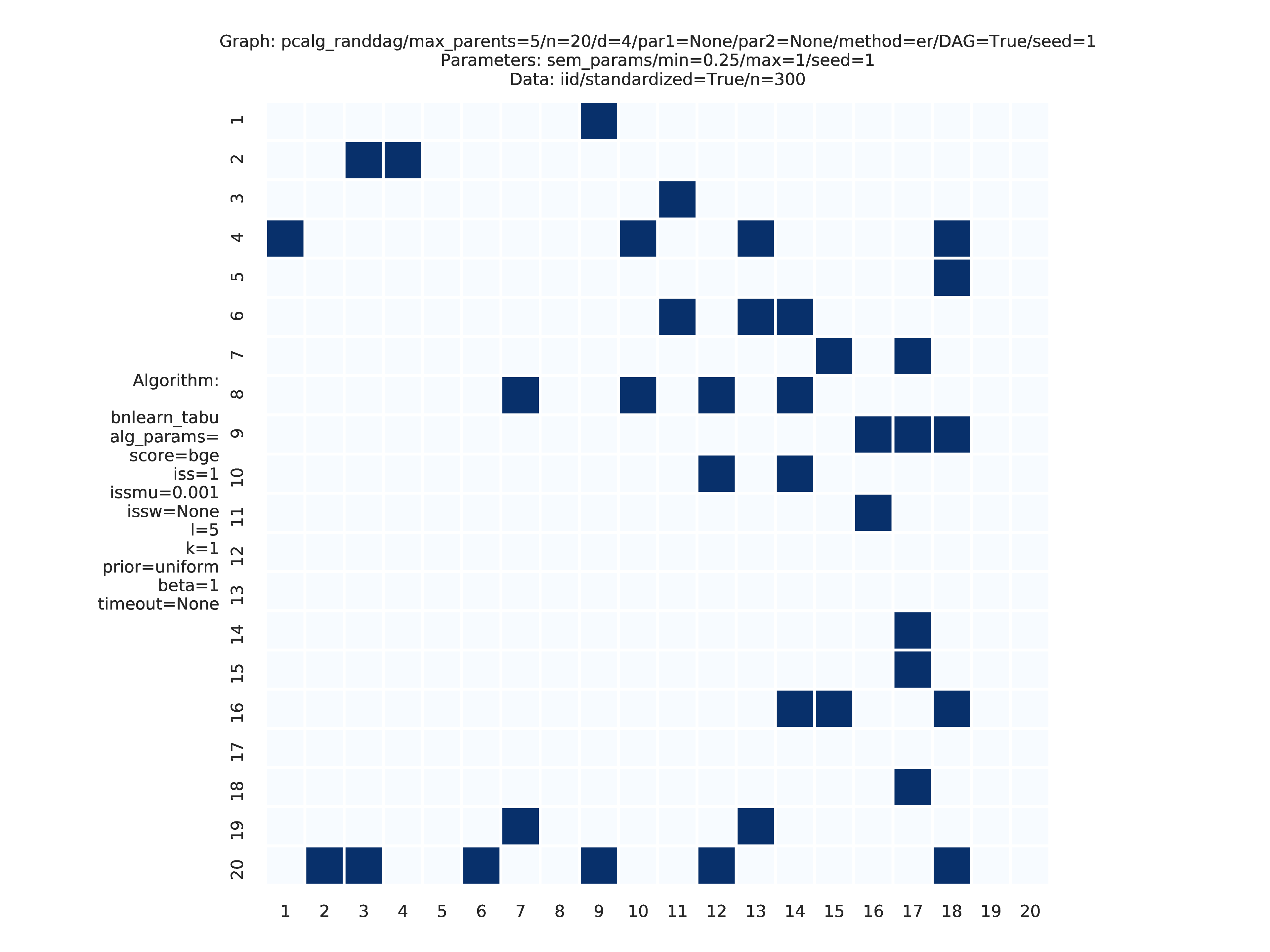

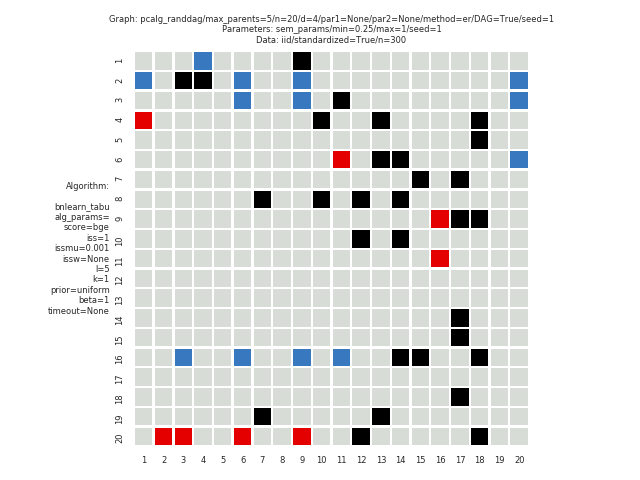

The following plots are generated by the benchmarks module

These plots are generated by the graph_plots module

You can easily use your own algorithm in Benchpress regardless of the programming language. To get the idea, perhaps the best way to start is to first use and alter the template R-script mylib_myalg.R as you like. It looks like this at the moment:

myparam1 <- snakemake@wildcards[["myparam1"]]

myparam2 <- snakemake@wildcards[["myparam2"]]

data <- read.csv(snakemake@input[["data"]], check.names = FALSE)

# This is a very fast way to estimate an undirected graph.

p <- ncol(data)

set.seed(as.integer(snakemake@wildcards[["replicate"]]))

start <- proc.time()[1]

adjmat <- matrix(runif(p * p), nrow = p, ncol = p) > 0.8

adjmat <- 1 * (adjmat | t(adjmat)) # Make it symmetric (undirected)

diag(adjmat) <- 0 # No self loops

colnames(adjmat) <- names(data) # Get labels from the data

totaltime <- proc.time()[1] - start

write.csv(adjmat, file = snakemake@output[["adjmat"]], row.names = FALSE, quote = FALSE)

write(totaltime, file = snakemake@output[["time"]])

write("None", file = snakemake@output[["ntests"]]) # Number of c.i. testsThe parameters used in the first two lines above are automatically generated from the JSON object in the mylib_myalg section of config/config.json. Feel free to add or change these keys or values. To test it you will have to add testing_myalg e.g. to the list of ids in the benchmarks section.

{

"id": "testing_myalg",

"myparam1": "somevalue",

"myparam2": [

1,

2

]

}If you want to use another programming language or link to some of your own scripts, you can edit mylib_myalg.smk to suite your algorithm.

if "mylib_myalg" in pattern_strings:

rule mylib_myalg:

input:

justatrigger="workflow/scripts/structure_learning_algorithms/mylib_myalg.R",

data = alg_input_data()

output:

adjmat = alg_output_adjmat_path("mylib_myalg"),

time = alg_output_time_path("mylib_myalg"),

ntests = alg_output_ntests_path("mylib_myalg")

container:

None

script:

"../scripts/structure_learning_algorithms/mylib_myalg.R"If R is not installed on your system, you may change the container from None to "docker://r-base" in order to run the script in a Singularity container based on the r-base Docker image.

To upload your algorithm to Benchpress, you should install it in a Docker image, push it to Docker Hub, and align the algorithm with the existing ones following CONTRIBUTING.md.

| Method | Graph | Language | Library | Version | Module id |

|---|---|---|---|---|---|

| randDAG | DAG,UG | R | pcalg | 2.7-3 | pcalg_randdag |

| graph.sim | DG,UG | R | BDgraph | 2.64 | bdgraph_graphsim |

| CTA [24] | DG | Python | trilearn | 1.2.3 | trilearn_cta |

| AR | DG | Python | trilearn | 1.2.3 | bandmat |

| AR random lag | DG | Python | trilearn | 1.2.3 | rand_bandmat |

| Fixed adjacency matrix | * | .csv | - | - | - |

| Distribution | Method | Graph | Language | Library | Version | Module id |

|---|---|---|---|---|---|---|

| Graph Wishart | rgwish | DG, UG | R | BDgraph | 2.64 | bdgraph_rgwish |

| Hyper Dirichlet [2] | - | DG | Python | trilearn | 1.2.3 | trilearn_hyper-dir |

| Graph intra-class | - | DG | Python | trilearn | 1.2.3 | trilearn_intra-class |

| Random SEM parameters | - | DAG | R | - | - | sem_params |

| Random probability tables | - | DAG | R | - | - | bin_bn |

| Fixed bn.fit object | - | DAG | .rds | bnlearn | - | - |

| Fixed SEM parameter matrix | - | DAG | .csv | - | - | - |

| Method | Language | Module id |

|---|---|---|

| I.I.D. data samples | - | iid |

| SEM I.I.D. data samples | Python | gcastle_iidsimulation |

| Fixed data file | .csv | - |

| Algorithm | Graph | Language | Library | Version | Module id |

|---|---|---|---|---|---|

| GOBNILP [3][33][34] | DAG | C | GOBNILP | #4347c64 | gobnilp |

| ASOBS [15] | DAG | R/Java | r.blip | 1.1 | rblip_asobs |

| FGES [9] | CPDAG | Java | TETRAD | 1.1.3 | tetrad_fges |

| FCI [5] | DAG | Java | TETRAD | 1.1.3 | tetrad_fci |

| RFCI [22] | CPDAG | Java | TETRAD | 1.1.3 | tetrad_rfci |

| GFCI [21] | DAG | Java | TETRAD | 1.1.3 | tetrad_gfci |

| PC [4][5] | CPDAG | R | pcalg | 2.7-3 | pcalg_pc |

| Dual PC [31] | CPDAG | R | dualPC | 4a5175d | dualpc |

| No tears [17] | DAG | Python | jmoss20/notears | #0c032a0 | notears |

| No tears | DAG | Python | gCastle | 1.0.3rc3 | gcastle_notears |

| PC | CPDAG | Python | gCastle | 1.0.3rc3 | gcastle_pc |

| ANM | DAG | Python | gCastle | 1.0.3rc3 | gcastle_anm |

| Direct LiNGAM | DAG | Python | gCastle | 1.0.3rc3 | gcastle_direct_lingam |

| ICALiNGAM | DAG | Python | gCastle | 1.0.3rc3 | gcastle_ica_lingam |

| NOTEARS-MLP | DAG | Python | gCastle | 1.0.3rc3 | gcastle_notears_nonlinear |

| NOTEARS-SOM | DAG | Python | gCastle | 1.0.3rc3 | gcastle_notears_nonlinear |

| NOTEARS-LOW-RANK | DAG | Python | gCastle | 1.0.3rc3 | gcastle_notears_low_rank |

| GOLEM | DAG | Python | gCastle | 1.0.3rc3 | gcastle_golem |

| GraNDAG | DAG | Python | gCastle | 1.0.3rc3 | gcastle_grandag |

| MCSL | DAG | Python | gCastle | 1.0.3rc3 | gcastle_mcsl |

| RL | DAG | Python | gCastle | 1.0.3rc3 | gcastle_rl |

| CORL | DAG | Python | gCastle | 1.0.3rc3 | gcastle_corl |

| HC [6] | DAG | R | bnlearn | 4.7 | bnlearn_hc |

| MMHC [23] | DAG | R | bnlearn | 4.7 | bnlearn_mmhc |

| Inter-IAMB [27] | CPDAG | R | bnlearn | 4.7 | bnlearn_interiamb |

| GS [26] | DAG | R | bnlearn | 4.7 | bnlearn_gs |

| Tabu [25] | DAG | R | bnlearn | 4.7 | bnlearn_tabu |

| PC stable [4][5] | CPDAG | R | bnlearn | 4.7 | bnlearn_pcstable |

| IAMB [27] | DAG | R | bnlearn | 4.7 | bnlearn_iamb |

| Fast IAMB | DAG | R | bnlearn | 4.7 | bnlearn_fastiamb |

| IAMB FDR | DAG | R | bnlearn | 4.7 | bnlearn_iambfdr |

| MMPC | DAG | R | bnlearn | 4.7 | bnlearn_mmpc |

| SI HITON-PC | DAG | R | bnlearn | 4.7 | bnlearn_sihitonpc |

| Hybrid PC | DAG | R | bnlearn | 4.7 | bnlearn_hpc |

| H2PC | DAG | R | bnlearn | 4.7 | bnlearn_h2pc |

| RSMAX2 | DAG | R | bnlearn | 4.7 | bnlearn_rsmax2 |

| Iterative MCMC [28] | DAG | R | BiDAG | 2.0.3 | bidag_itsearch |

| Order MCMC [28][29] | DAG | R | BiDAG | 2.0.3 | bidag_order_mcmc |

| Partition MCMC [30] | DAG | R | BiDAG | 2.0.3 | bidag_partition_mcmc |

| PGibbs [20] | DG | Python | trilearn | 1.2.3 | trilearn_pgibbs |

| GG99 single pair [18] | DG | Java | A. Thomas | - | gg99_singlepair |

| GT13 multi pair [19] | DG | Java | A. Thomas | - | gt13_multipair |

| Parallel DG | DG | Python | parallelDG | 0.3 | parallelDG |

| GLasso [31] | UG | Python | scikit-learn | 0.22.1 | sklearn_glasso |

| Function | Language | Library | Module id |

|---|---|---|---|

| Plot data with ggpairs | R | GGally | ggally_ggpairs |

| Plot true graphs | - | graphviz | graph_true_plots |

| Plot true graphs properties | R | ggplot2 | graph_true_stats |

| Plot estimated graphs | - | graphviz | graph_plots |

| Timing and ROC curves for TPR,FPR,FNR,... | R | ggplot2 | benchmarks |

| MCMC mean graph | Python | seaborn | mcmc_heatmaps |

| MCMC auto-correlation | Python | pandas | mcmc_autocorr_plots |

| MCMC trajectory | Python | pandas | mcmc_traj_plots |

Acronyms are used for Directed Acyclic Graphs (DAGs), Undirected Graphs (UGs), Decomposable Graphs (DGs), and Completed Partially DAGs (CPDAGs).

@misc{rios2021benchpress,

title={Benchpress: a scalable and platform-independent workflow for benchmarking structure learning algorithms for graphical models},

author={Felix L. Rios and Giusi Moffa and Jack Kuipers},

year={2021},

eprint={2107.03863},

archivePrefix={arXiv},

primaryClass={stat.ML}

}

Send an email to felix leopoldo rios at gmail com for questions.

Contrubutions are very welcomed. See CONTRIBUTING.md for instructions.

- Fork it!

- Create your feature branch:

git checkout -b my-new-feature - Commit your changes:

git commit -am 'Add some feature' - Push to the branch:

git push origin my-new-feature - Open a pull request

This project is licensed under the GPL-2.0 License - see the LICENSE file for details

- [1] Felix L. Rios and Giusi Moffa and Jack Kuipers Benchpress: a scalable and versatile workflow for benchmarking structure learning algorithms for graphical models. ArXiv eprints., 2107.03863, 2021.

- [2] Dawid AP, Lauritzen SL (1993). “Hyper Markov laws in the statistical analysis of decomposable graphical models.” The Annals of Statistics, 21(3), 1272–1317.

- [3] Cussens J (2012). “Bayesian network learning with cutting planes.” arXiv preprint arXiv:1202.3713.

- [4] Spirtes P, Glymour CN (1991). “An algorithm for fast recovery of sparse causal graphs.” Social science computer review, 9(1), 62–72.

- [5] Spirtes P, Glymour CN, Scheines R, Heckerman D (2000). Causation, prediction, and search. MIT press.

- [6] Russell S, Norvig P (2002). “Artificial intelligence: a modern approach.”

- [7] Scutari M, Vitolo C, Tucker A (2019b). “Learning Bayesian networks from big data with greedy search: computational complexity and efficient implementation.” Statistics and Computing, 29(5), 1095–1108.

- [8] Colombo D, Maathuis MH, Kalisch M, Richardson TS (2012). “Learning high-dimensional directed acyclic graphs with latent and selection variables.” The Annals of Statistics, pp. 294–321.

- [9] [Meek C (1997). Graphical Models: Selecting causal and statistical models. Ph.D. thesis, PhD thesis, Carnegie Mellon University.]

- [10] Chickering DM (2002). “Optimal structure identification with greedy search.” Journal of machine learning research, 3(Nov), 507–554.

- [11] Ogarrio JM, Spirtes P, Ramsey J (2016). “A hybrid causal search algorithm for latent variable models.” In Conference on Probabilistic Graphical Models, pp. 368–379.

- [12] J. Kuipers, P. Suter, and G. Moffa. Efficient sampling and structure learning of Bayesian networks. Journal of Computational and Graphical Statistics, 0(ja):1–32, 2021.

- [13] Margaritis D (2003). “Learning Bayesian network model structure from data.” PHD thesis, Carnegie-Mellon Univ Pittsburgh Pa School of Computer Science.

- [14] Tsamardinos I, Aliferis CF, Statnikov AR, Statnikov E (2003). “Algorithms for large scale Markov blanket discovery.” In FLAIRS conference, volume 2, pp. 376–380.

- [15] Tsamardinos I, Brown LE, Aliferis CF (2006). “The max-min hill-climbing Bayesian network structure learning algorithm.” Machine learning, 65(1), 31–78.

- [16] Scanagatta M, de Campos CP, Corani G, Zaffalon M (2015). “Learning Bayesian networks with thousands of variables.” In Advances in neural information processing systems, pp. 1864–1872.

- [17] Zheng X, Aragam B, Ravikumar PK, Xing EP (2018). “DAGs with NO TEARS: Continuous optimization for structure learning.” In Advances in Neural Information Processing Systems, pp. 9472–9483.

- [18] P. Giudici and P. J. Green. Decomposable graphical Gaussian model determination. Biometrika, 86(4):785–801, 1999.

- [19] P. J. Green and A. Thomas. Sampling decomposable graphs using a Markov chain on junction trees. Biometrika, 100(1):91–110, 2013.

- [20] J. Olsson, T. Pavlenko, and F. L. Rios. Bayesian learning of weakly structural Markov graph laws using sequential Monte Carlo methods. Electron. J. Statist., 13(2):2865–2897, 2019.

- [21] J. M. Ogarrio, P. Spirtes, and J. Ramsey. A hybrid causal search algorithm for latent variable models. In Conference on Probabilistic Graphical Models, pages 368–379, 2016.

- [22] D. Colombo, M. H. Maathuis, M. Kalisch, and T. S. Richardson. Learning high-dimensional directed acyclic graphs with latent and selection variables. The Annals of Statistics, pages 294–321, 2012.

- [23] I. Tsamardinos, L. E. Brown, and C. F. Aliferis. The max-min hill-climbing Bayesian network structure learning algorithm. Machine learning, 65(1):31–78, 2006.

- [24] J. Olsson, T. Pavlenko, and F. L. Rios. Sequential sampling of junction trees for decomposable graphs. Statistics and Computing (to appear).

- [25] S. Russell and P. Norvig. Artificial intelligence: a modern approach. 2002.

- [26] D. Margaritis. Learning Bayesian network model structure from data. Technical report, Carnegie-Mellon Univ Pittsburgh Pa School of Computer Science, 2003.

- [27] I. Tsamardinos, C. F. Aliferis, A. R. Statnikov, and E. Statnikov. Algorithms for large scale Markov blanket discovery. In FLAIRS conference, volume 2, pages 376–380, 2003.

- [29] Friedman and D. Koller. Being Bayesian about network structure. A Bayesian approach to structure discovery in Bayesian networks. Machine Learning, 50(1):95–125, Jan 2003.

- [30] J. Kuipers and G. Moffa. Partition MCMC for inference on acyclic digraphs. Journal of the American Statistical Association, 112(517):282–299, 2017.

- [31] Jerome Friedman, Trevor Hastie, Robert Tibshirani, Sparse inverse covariance estimation with the graphical lasso, Biostatistics, Volume 9, Issue 3, July 2008, Pages 432–441.

- [32] E. Giudice, J. Kuipers, and G. Moffa. The dual pc algorithm for structure learning. arXiv preprint arXiv:2112.09036, 2021.

- [33] M. Bartlett and J. Cussens. Integer linear programming for the bayesian network structure learning problem. Artificial Intelligence, 244:258–271, 2017. Combining Constraint Solving with Mining and Learning

- [34] J. Cussens, M. Järvisalo, J. H. Korhonen, and M. Bartlett. Bayesian network structure learning with integer programming: Polytopes, facets and complexity. Journal of Artificial Intelligence Research, 58:185–229, 2017