| CVF Paper | Webpage | 5-min Video | Poster | Blog

Xuan Xiong, Yicheng Liu, Tianyuan Yuan, Yue Wang, Yilun Wang, Hang Zhao*

- Introduction

- Model Zoo

- Installation

- Getting Started

- Architecture

- Contact

- Acknowledgements

- Citation

- License

A neural representation of HD maps to improve local map inference performance for autonomous driving.

This repo is the official implementation of "Neural Map Prior for Autonomous Driving" (CVPR 2023). Our main contributions are:

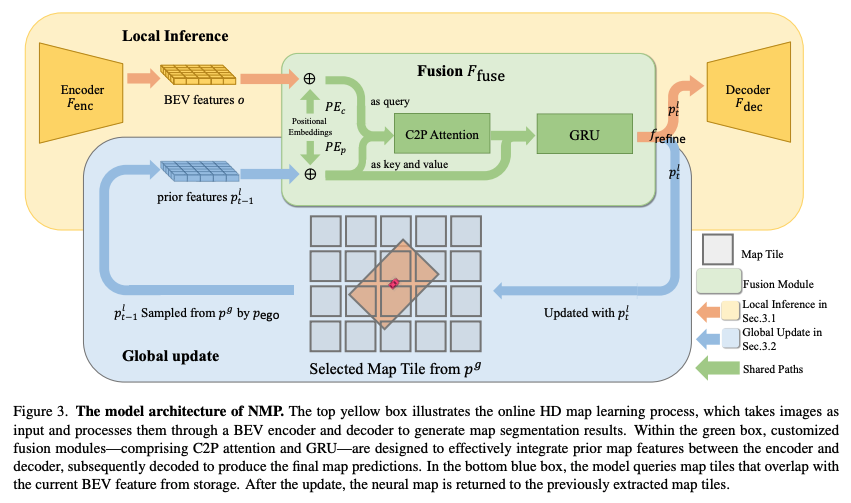

- A novel mapping paradigm: integrates the maintenance of global maps and the inference of online local maps.

- Efficient fusion modules: current-to-prior attention and gated recurrent unit modules facilitate the efficient fusion of global and local map features.

- Easy integration with HD map learning methods: Neural Map Prior can be applied to various map segmentation and detection methods, and can serve as a drop-in replacement for the online map inference model in your own algorithms. Our approach also yields significant improvements in challenging scenarios, such as bad weather conditions and longer perception ranges.

- Sparse map tiles: a memory-efficient approach for storing neural representations of city-scale HD maps.
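To make the sparse map tile idea concrete, here is a minimal sketch of one way to store tile features only for places that have actually been visited. It is an illustration under stated assumptions, not the repository's implementation: the class name `SparseTileStore`, the parameters `tile_size_m` and `channels`, and the use of a single flat feature vector per tile are all hypothetical.

```python
from typing import Dict, List, Tuple

TileKey = Tuple[int, int]


class SparseTileStore:
    """Hypothetical store that allocates a tile's features on first access."""

    def __init__(self, tile_size_m: float, channels: int) -> None:
        self.tile_size_m = tile_size_m
        self.channels = channels
        # Only visited tiles get an entry, so memory grows with coverage,
        # not with the bounding box of the whole city.
        self.tiles: Dict[TileKey, List[float]] = {}

    def key_for(self, x: float, y: float) -> TileKey:
        """Map a global (x, y) position in meters to an integer tile index."""
        return (int(x // self.tile_size_m), int(y // self.tile_size_m))

    def get(self, x: float, y: float) -> List[float]:
        """Return the tile's feature vector, creating a zero prior if absent."""
        key = self.key_for(x, y)
        if key not in self.tiles:
            self.tiles[key] = [0.0] * self.channels
        return self.tiles[key]


store = SparseTileStore(tile_size_m=100.0, channels=4)
features = store.get(250.0, -30.0)  # first visit allocates tile (2, -1)
features[0] = 1.0                   # stand-in for a fused feature update
```

A dense array covering the same area would reserve memory for every tile, including those no vehicle ever traverses; the dictionary keeps only the visited ones.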

Notes

- The most challenging part of the training and testing process is distributing the appropriate map tile to each GPU and keeping samples in the same map tiles updated on the same GPU. This process includes two steps:

  - Determine the map tile for each GPU. This can be done with some functions in project/neural_map_prior/map_tiles/lane_render.py.

  - Rewrite the sampler so that each GPU can only sample the samples in the map tile assigned to it. This can be done in tools/data_sampler.py.
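The two steps can be sketched in plain Python as follows. This is a simplified illustration, not the code in lane_render.py or tools/data_sampler.py: the names `assign_tiles_to_ranks` and `TileAwareSampler` are hypothetical, and the greedy load balancing is an assumption chosen for brevity.

```python
from collections import defaultdict
from typing import Dict, Hashable, Iterator, List, Sequence


def assign_tiles_to_ranks(
    sample_tiles: Sequence[Hashable], world_size: int
) -> Dict[Hashable, int]:
    """Step 1: give every map tile to exactly one rank (GPU).

    Tiles are placed largest first on the least-loaded rank so that the
    number of samples per rank stays roughly balanced.
    """
    counts: Dict[Hashable, int] = defaultdict(int)
    for tile in sample_tiles:
        counts[tile] += 1

    load = [0] * world_size
    tile_to_rank: Dict[Hashable, int] = {}
    for tile, n in sorted(counts.items(), key=lambda kv: (-kv[1], str(kv[0]))):
        rank = min(range(world_size), key=lambda r: load[r])
        tile_to_rank[tile] = rank
        load[rank] += n
    return tile_to_rank


class TileAwareSampler:
    """Step 2: yield only the dataset indices whose tile belongs to `rank`."""

    def __init__(
        self,
        sample_tiles: Sequence[Hashable],
        tile_to_rank: Dict[Hashable, int],
        rank: int,
    ) -> None:
        self.indices: List[int] = [
            i for i, tile in enumerate(sample_tiles) if tile_to_rank[tile] == rank
        ]

    def __iter__(self) -> Iterator[int]:
        return iter(self.indices)

    def __len__(self) -> int:
        return len(self.indices)


# sample_tiles[i] is the (hypothetical) tile id of dataset sample i.
sample_tiles = ["a", "a", "a", "b", "b", "c"]
tile_to_rank = assign_tiles_to_ranks(sample_tiles, world_size=2)
rank0 = list(TileAwareSampler(sample_tiles, tile_to_rank, rank=0))
rank1 = list(TileAwareSampler(sample_tiles, tile_to_rank, rank=1))
```

Because a tile never appears on two ranks, all updates to that tile's prior happen on a single GPU. In a real distributed run the per-rank index lists would typically also need padding or truncation to equal length so that every rank performs the same number of iterations.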

We experiment with the BEVFormer, Lift-Splat-Shoot, HDMapNet, and VectorMapNet architectures on nuScenes.

| Model Config | Modality | Divider | Crossing | Boundary | All (mIoU) | Checkpoint Link |
|---|---|---|---|---|---|---|
| BEVFormer | Camera | 49.20 | 28.67 | 50.43 | 42.76 | model |
| BEVFormer + NMP | Camera | 54.20 | 34.52 | 56.94 | 48.55 | model |

Please check installation for installation and data_preparation for preparing the nuScenes dataset.

Please check getting_started for training, evaluation, and visualization of neural_map_prior.

Any questions or suggestions are welcome!

- Xuan Xiong abby.xxn@outlook.com

We would like to thank all who contributed to the open-source projects listed below. Our project would not have been possible without the inspiration of these outstanding researchers and developers.

- BEV Perception: BEVFormer, Lift, Splat, Shoot

- HD Map Learning: HDMapNet, VectorMapNet

- Vision Transformer: Swin Transformer

- open-mmlab

The design of treating project/neural_map_prior as a module is inspired by the implementation of DETR3D.

If you find neural_map_prior useful in your research or applications, please consider citing:

@inproceedings{xiong2023neuralmapprior,
  author = {Xiong, Xuan and Liu, Yicheng and Yuan, Tianyuan and Wang, Yue and Wang, Yilun and Zhao, Hang},
  title = {Neural Map Prior for Autonomous Driving},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2023}
}

This project is licensed under the Apache 2.0 license.