For this setup, catkin_ws is the name of the active ROS workspace. If your workspace name is different, change the commands accordingly. If you do not have an active ROS workspace, you can create one by:

$ mkdir -p ~/catkin_ws/src

$ cd ~/catkin_ws/

$ catkin_make

Now that you have a workspace, clone or download this repo into the src directory of your workspace:

$ cd ~/catkin_ws/src

$ git clone https://github.com/nick-zanobini/RoboND-Perception-Project.git

Note: If you have the Kinematics Pick and Place project in the same ROS workspace as this project, remove the 'gazebo_grasp_plugin' directory from the RoboND-Perception-Project/ directory. Otherwise, ignore this note.

Now install missing dependencies using rosdep install:

$ cd ~/catkin_ws

$ rosdep install --from-paths src --ignore-src --rosdistro=kinetic -y

Build the project:

$ cd ~/catkin_ws

$ catkin_make

Add the following to your .bashrc file:

export GAZEBO_MODEL_PATH=~/catkin_ws/src/RoboND-Perception-Project/pr2_robot/models:$GAZEBO_MODEL_PATH

If you haven’t already, add the following line to your .bashrc so that every new terminal sources the workspace automatically:

source ~/catkin_ws/devel/setup.bash

You can launch the project scenario like this:

$ roslaunch pr2_robot pick_place_project.launch

- Extract features and train an SVM model on new objects (see `pick_list_*.yaml` in `/pr2_robot/config/` for the list of models you'll be trying to identify).

  - After converting each image to the HSV color space, I binned it into 32 histogram bins and extracted features from 5000 images per item in `pick_list_3.yaml`. Every item appears in this largest pick list, so I expected the resulting model to work on the two smaller lists as well.

- Write a ROS node and subscribe to the `/pr2/world/points` topic. This topic contains noisy point cloud data that you must work with.

- Use filtering and RANSAC plane fitting to isolate the objects of interest from the rest of the scene.

- Apply Euclidean clustering to create separate clusters for individual items.

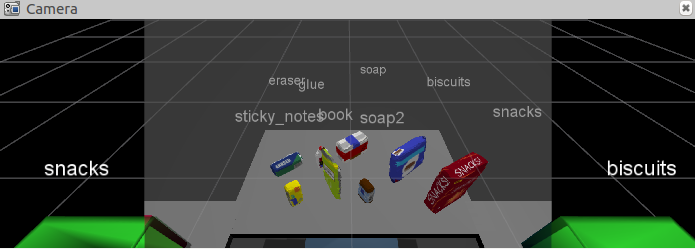

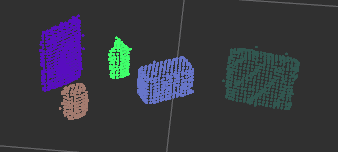
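The RANSAC plane-fitting step listed above can be sketched in plain Python. This is only an illustration of the technique, not the project's code: the function names, the iteration count, the fixed seed, and the synthetic points are assumptions made for the example. A real node would work on the point cloud received from the topic through a point cloud library.

```python
import random


def fit_plane(p1, p2, p3):
    """Return (a, b, c, d) of the plane ax + by + cz + d = 0 through three
    points, with a unit normal, or None if the points are collinear."""
    ux, uy, uz = (p2[i] - p1[i] for i in range(3))
    vx, vy, vz = (p3[i] - p1[i] for i in range(3))
    # Normal vector = cross product of the two edge vectors.
    a = uy * vz - uz * vy
    b = uz * vx - ux * vz
    c = ux * vy - uy * vx
    norm = (a * a + b * b + c * c) ** 0.5
    if norm < 1e-12:
        return None
    a, b, c = a / norm, b / norm, c / norm
    d = -(a * p1[0] + b * p1[1] + c * p1[2])
    return a, b, c, d


def ransac_plane(points, max_distance=0.01, iterations=200, seed=0):
    """Split points into (plane inliers, outliers) with a basic RANSAC loop.

    iterations and seed are hypothetical values chosen for this sketch.
    """
    rng = random.Random(seed)
    best = []
    for _ in range(iterations):
        plane = fit_plane(*rng.sample(points, 3))
        if plane is None:
            continue  # degenerate sample, try again
        a, b, c, d = plane
        inliers = [i for i, (x, y, z) in enumerate(points)
                   if abs(a * x + b * y + c * z + d) <= max_distance]
        if len(inliers) > len(best):
            best = inliers
    best_set = set(best)
    plane_points = [points[i] for i in best]
    other_points = [p for i, p in enumerate(points) if i not in best_set]
    return plane_points, other_points


# Synthetic scene: a flat 10 x 10 "table" at z = 0.6 and five points above it.
table_pts = [(x * 0.05, y * 0.05, 0.6) for x in range(10) for y in range(10)]
object_pts = [(0.2, 0.2, 0.7 + 0.02 * i) for i in range(5)]
table, objects = ransac_plane(table_pts + object_pts)
```

In this sketch the points that fit the plane model (the inliers) are the table, and everything else is what remains for clustering.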

- Perform object recognition on these objects and assign them labels (markers in RViz).

- Calculate the centroid (average in x, y and z) of the set of points belonging to each object.

- Create ROS messages containing the details of each object (name, pick_pose, etc.) and write these messages out to `.yaml` files, one for each of the 3 scenarios (`test1-3.world` in `/pr2_robot/worlds/`). See the example `output.yaml` for details on what the output should look like.

- Submit a link to your GitHub repo for the project or the Python code for your perception pipeline and your output `.yaml` files (3 `.yaml` files, one for each test world). You must have correctly identified 100% of objects from `pick_list_1.yaml` for `test1.world`, 80% of items from `pick_list_2.yaml` for `test2.world` and 75% of items from `pick_list_3.yaml` in `test3.world`.

- Congratulations! You're done!
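The centroid step and the per-object record can be illustrated with a short sketch. The dictionary layout here is an assumption for illustration only (the `position` nesting and the helper names are hypothetical); the actual field layout is whatever the example `output.yaml` shows.

```python
def centroid(points):
    """Average the x, y and z coordinates of a list of (x, y, z) points."""
    n = len(points)
    if n == 0:
        raise ValueError("cannot compute the centroid of an empty cluster")
    return tuple(sum(p[i] for p in points) / float(n) for i in range(3))


def make_object_record(name, points):
    """Build a plain dict describing one detected object.

    The key layout is hypothetical; adapt it to the example output file.
    """
    cx, cy, cz = centroid(points)
    return {"name": name,
            "pick_pose": {"position": {"x": cx, "y": cy, "z": cz}}}


# Two made-up points standing in for a labeled cluster.
record = make_object_record("soap", [(0.0, 0.0, 0.0), (2.0, 4.0, 6.0)])
```

Plain Python floats and dicts like these can be serialized with a YAML library such as PyYAML (`yaml.dump`).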

- To create a collision map, publish a point cloud to the `/pr2/3d_map/points` topic and make sure you change the `point_cloud_topic` to `/pr2/3d_map/points` in `sensors.yaml` in the `/pr2_robot/config/` directory. This topic is read by MoveIt!, which uses this point cloud input to generate a collision map, allowing the robot to plan its trajectory. Keep in mind that later, when you go to pick up an object, you must first remove it from this point cloud so that it is removed from the collision map!

- Rotate the robot to generate a collision map of the table sides. This can be accomplished by publishing a joint angle value (in radians) to `/pr2/world_joint_controller/command`.

- Rotate the robot back to its original state.

- Create a ROS client for the “pick_place_routine” rosservice. In the required steps above, you already created the messages you need to use this service. Check out the `PickPlace.srv` file to find out what arguments you must pass to this service.

- If everything was done correctly, when you pass the appropriate messages to the `pick_place_routine` service, the selected arm will perform the pick and place operation and display the trajectory in the RViz window.

- Place all the objects from your pick list in their respective dropoff boxes and you have completed the challenge!

- Looking for a bigger challenge? Load up the `challenge.world` scenario and see if you can get your perception pipeline working there!
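The note about removing the target object from the collision cloud before picking it up can be sketched as follows. This is a minimal illustration under assumptions: the function name and the dict-of-clusters layout are hypothetical, and publishing the result to the collision map topic is left out.

```python
def build_collision_cloud(table_points, clusters, target_name):
    """Combine the table with every object except the one about to be picked.

    clusters is assumed to map an object label to its list of (x, y, z) points.
    """
    cloud = list(table_points)
    for name, points in clusters.items():
        if name != target_name:
            cloud.extend(points)
    return cloud


# Made-up data: a two-point table and two labeled objects.
table_points = [(0.0, 0.0, 0.6), (0.1, 0.0, 0.6)]
clusters = {
    "soap": [(0.0, 0.0, 0.7)],
    "book": [(0.1, 0.0, 0.7), (0.1, 0.0, 0.72)],
}
collision_cloud = build_collision_cloud(table_points, clusters, "soap")
```

Rebuilding the cloud for each pick keeps the remaining objects as obstacles while letting the planner reach the target.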

Rubric Points

Here I will consider the rubric points individually and describe how I addressed each point in my implementation.

-

Start with World 2:

Registered Points

-

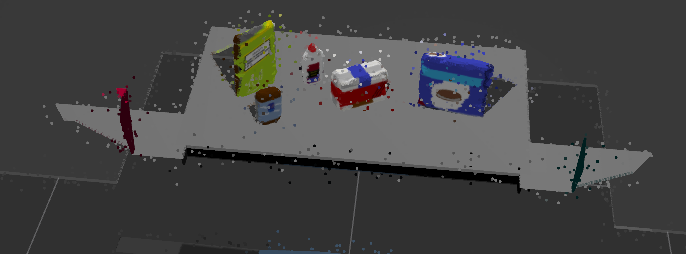

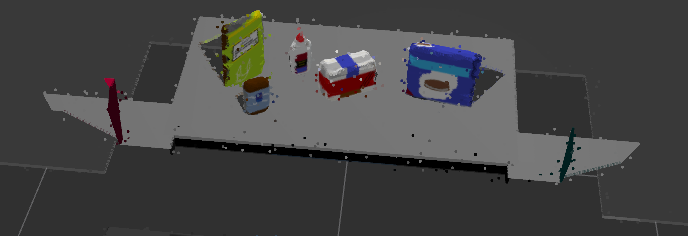

Filter noise with

statistical_outlier_filterUnfiltered Point Cloud Filtered Point Cloud
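As a rough illustration of what a statistical outlier filter does, the sketch below computes each point's mean distance to its nearest neighbors and drops points whose value is far above the global average. It is a brute-force stand-in, not the library filter used in the project, and the values of `k` and `std_mul` are assumptions because the source does not list them.

```python
def _dist(p, q):
    """Euclidean distance between two (x, y, z) points."""
    return sum((a - b) ** 2 for a, b in zip(p, q)) ** 0.5


def statistical_outlier_filter(points, k=5, std_mul=1.0):
    """Keep points whose mean distance to their k nearest neighbors is at
    most (global mean + std_mul * global standard deviation)."""
    mean_dists = []
    for i, p in enumerate(points):
        dists = sorted(_dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(sum(dists[:k]) / float(k))
    n = float(len(mean_dists))
    mu = sum(mean_dists) / n
    sigma = (sum((d - mu) ** 2 for d in mean_dists) / n) ** 0.5
    threshold = mu + std_mul * sigma
    return [p for p, d in zip(points, mean_dists) if d <= threshold]


# A dense 5 x 5 patch plus one stray point far away from it.
grid = [(x * 0.01, y * 0.01, 0.0) for x in range(5) for y in range(5)]
noisy = grid + [(1.0, 1.0, 1.0)]
filtered = statistical_outlier_filter(noisy, k=5, std_mul=1.0)
```

A smaller `std_mul` removes more points; a larger one is more permissive.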

-

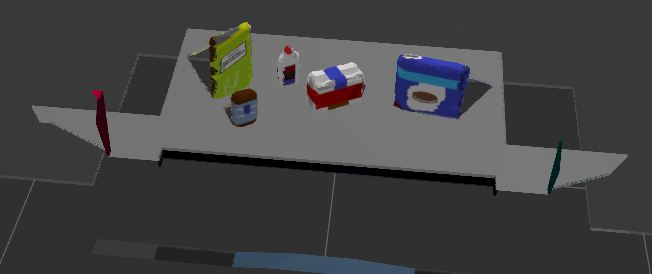

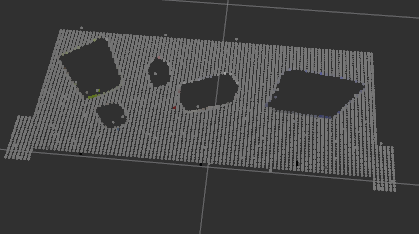

Downsample the filtered point cloud with the

voxel_grid_filterso that each cube with the shapeLEAF_SIZE x LEAF_SIZE x LEAF_SIZEis represented by the centroid of that cube. -

Filter out any data outside the region of interest (The objects on the table and the table) with a

passthrough_filterin both thezandxaxesParameter Value Voxel Filter: LEAF_SIZE 0.01 Passthrough Filter (z): axis min 0.609 Passthrough Filter (z): axis max 1.4 Passthrough Filter (x): axis min -0.50 Passthrough Filter (x): axis max 0.50 RANSAC Segmentation: max distance 0.01 -

Extract inliers (objects on the table) and outliers (table) using the RANSAC Plane Segmentation

Inliers (Objects) Outliers (Table)
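The downsampling and passthrough steps can be sketched in plain Python using the parameter values from the table above. The function names mirror the filter names in the text, but the bodies are an illustrative reimplementation, not the project's code; this sketch represents each occupied voxel by the centroid of the points that fall inside it.

```python
import math
from collections import defaultdict

LEAF_SIZE = 0.01  # voxel edge length from the parameter table


def voxel_grid_filter(points, leaf_size=LEAF_SIZE):
    """Replace all points inside each occupied voxel by their centroid."""
    voxels = defaultdict(list)
    for p in points:
        key = tuple(int(math.floor(c / leaf_size)) for c in p)
        voxels[key].append(p)
    return [tuple(sum(c) / float(len(group)) for c in zip(*group))
            for group in voxels.values()]


def passthrough_filter(points, axis, axis_min, axis_max):
    """Keep only points whose coordinate on the given axis lies in range."""
    idx = "xyz".index(axis)
    return [p for p in points if axis_min <= p[idx] <= axis_max]


# Made-up cloud: two points sharing a voxel, one too low, one too far in x,
# and one point inside the region of interest.
cloud = [
    (0.001, 0.001, 0.705),
    (0.003, 0.003, 0.705),
    (0.2, 0.0, 0.5),
    (0.8, 0.0, 1.0),
    (0.3, 0.1, 0.8),
]
downsampled = voxel_grid_filter(cloud)
roi = passthrough_filter(downsampled, "z", 0.609, 1.4)
roi = passthrough_filter(roi, "x", -0.50, 0.50)
```

Running the voxel filter first reduces the number of points the later steps have to process.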

-

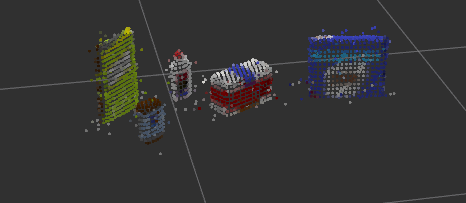

Segment each object using Euclidean Clustering

- My parameters were:

Parameter Value ClusterTolerance 0.014 MinClusterSize 20 MaxClusterSize 2000 Euclidean Clustered Objects
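To show how the three clustering parameters interact, here is a brute-force sketch of Euclidean clustering. It is an assumption-laden illustration: a real pipeline would use a k-d tree search from a point cloud library instead of comparing every pair of points, and the function name and test data are made up.

```python
from collections import deque


def _dist2(p, q):
    """Squared Euclidean distance between two (x, y, z) points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def euclidean_cluster(points, tolerance=0.014, min_size=20, max_size=2000):
    """Group point indices so that each point is within `tolerance` of at
    least one other point in its cluster; drop clusters outside the size
    limits."""
    tol2 = tolerance ** 2
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        queue = deque([seed])
        cluster = [seed]
        while queue:
            i = queue.popleft()
            neighbors = [j for j in unvisited
                         if _dist2(points[i], points[j]) <= tol2]
            for j in neighbors:
                unvisited.remove(j)
            queue.extend(neighbors)
            cluster.extend(neighbors)
        if min_size <= len(cluster) <= max_size:
            clusters.append(sorted(cluster))
    return clusters


# Two rows of 25 points spaced 0.01 apart, plus 3 stray points that form a
# cluster too small to keep.
row_a = [(i * 0.01, 0.0, 0.7) for i in range(25)]
row_b = [(i * 0.01, 0.5, 0.7) for i in range(25)]
stray = [(5.0, 5.0, 5.0), (5.01, 5.0, 5.0), (5.02, 5.0, 5.0)]
found = euclidean_cluster(row_a + row_b + stray)
```

A tolerance that is too large merges neighboring objects, while a minimum size that is too small lets leftover noise through as its own cluster.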

-

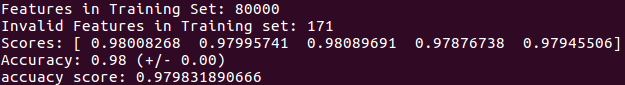

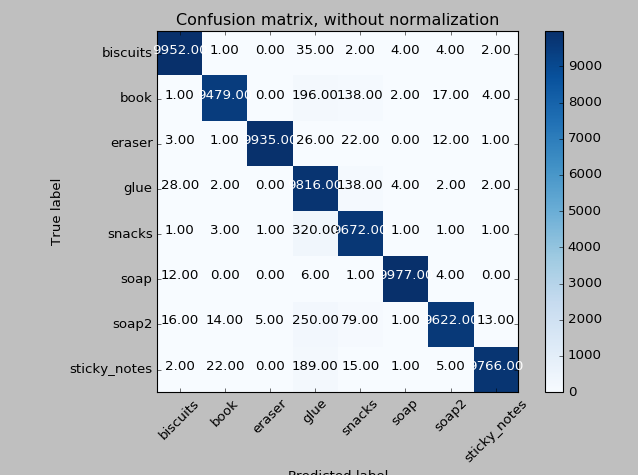

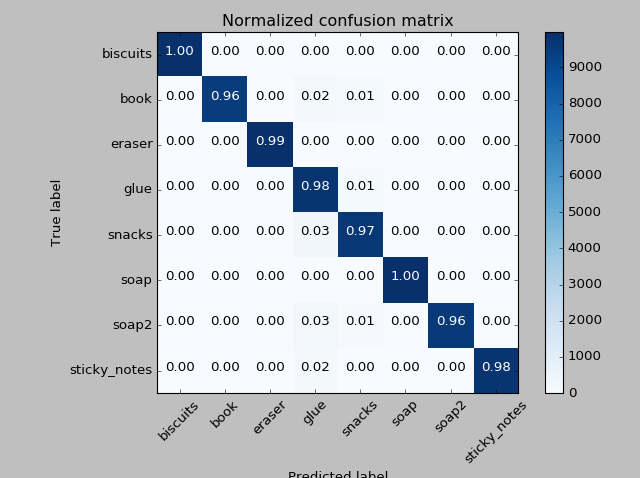

Here are the parameters I used to capture features and train my model:

Parameter Value Pictures 10,000 Bins (Color & Normals) 64 Color Space HSV Total Features 80,000 Model Training Output

Confusion Matrix (Not Normalized) Confusion Matrix (Normalized)

Model Training Output

These can be found in the root directory of the project.
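The HSV color-histogram features mentioned in the training parameters can be sketched with the standard library alone. This is a simplified illustration, not the project's feature code: it covers only the color histograms (not the surface-normal histograms), takes a plain list of 8-bit RGB tuples as input, and the function name is hypothetical.

```python
import colorsys


def hsv_histogram_features(rgb_pixels, nbins=64):
    """Return a normalized feature vector made of the concatenated H, S and
    V histograms (nbins each) of a list of (r, g, b) pixels in 0..255."""
    hists = [[0] * nbins for _ in range(3)]
    for r, g, b in rgb_pixels:
        hsv = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        for channel, value in enumerate(hsv):
            # Each HSV component lies in [0, 1]; clamp 1.0 into the last bin.
            idx = min(int(value * nbins), nbins - 1)
            hists[channel][idx] += 1
    features = [count for hist in hists for count in hist]
    total = float(sum(features))
    if total == 0:
        return [0.0] * len(features)
    return [count / total for count in features]


# A single pure-red pixel: hue 0, full saturation, full value.
red_features = hsv_histogram_features([(255, 0, 0)])
```

Normalizing by the total count makes the feature vector independent of how many points an object's cluster contains.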